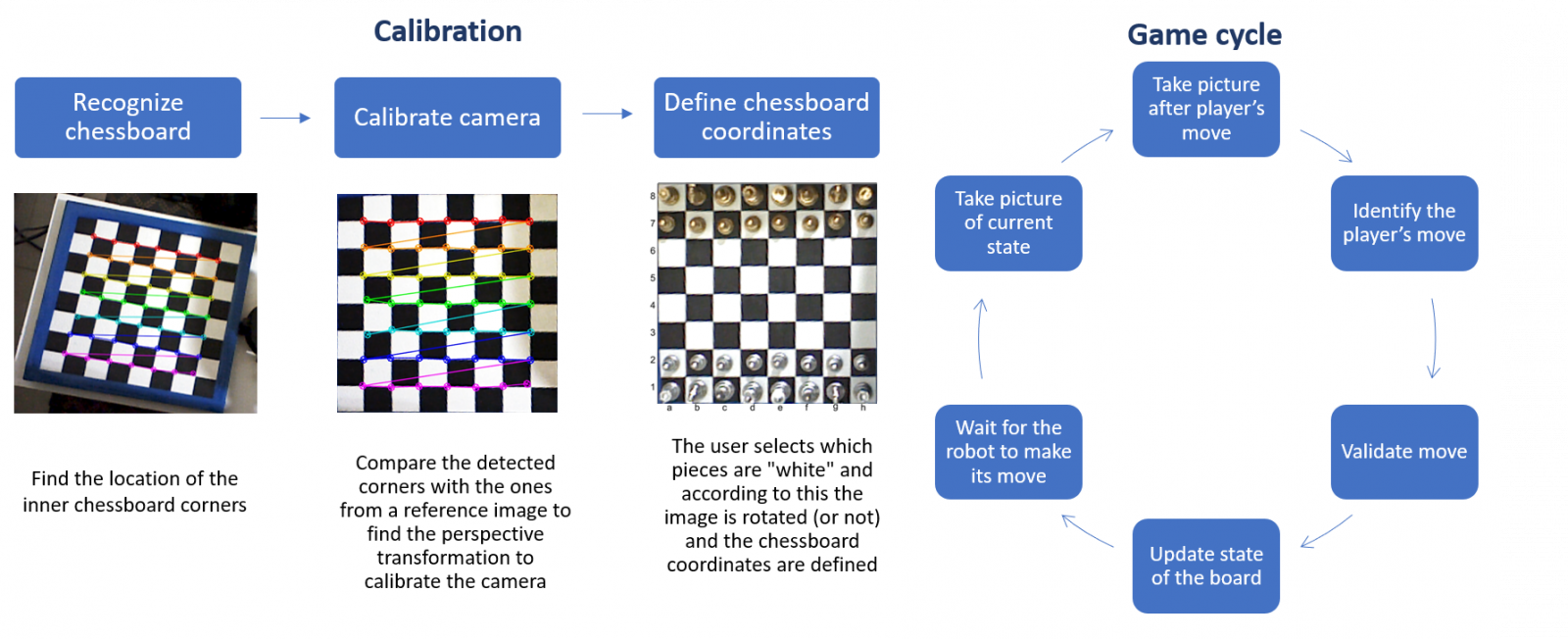

As previously mentioned, the robot uses a Raspberry Pi camera module (or a USB camera) and computer vision algorithms to get all the information it needs to make its moves. However, for the robot to see the board properly, the camera needs to be calibrated first.

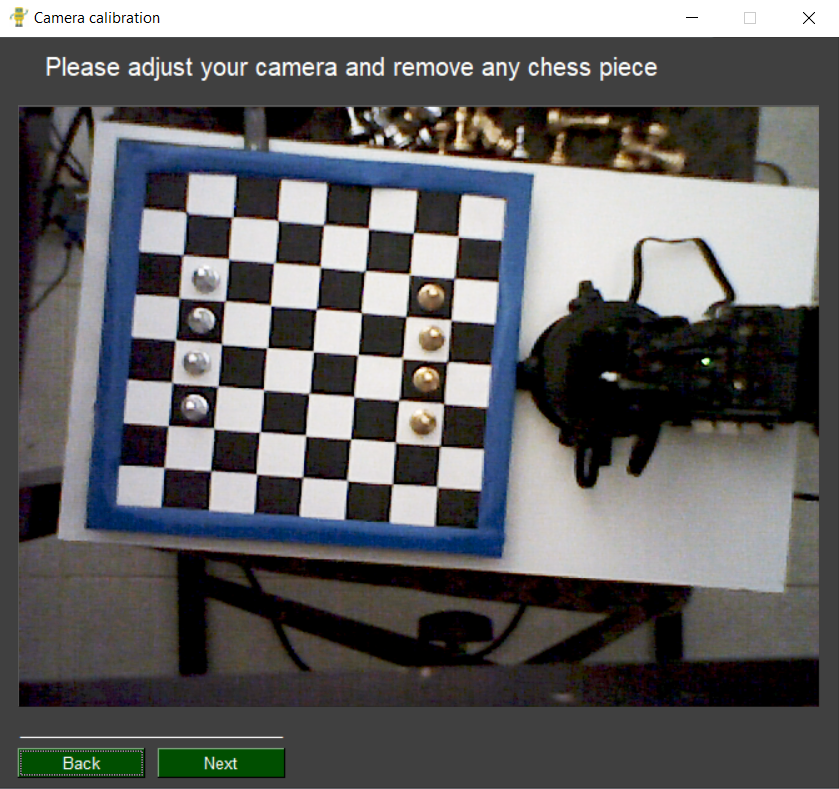

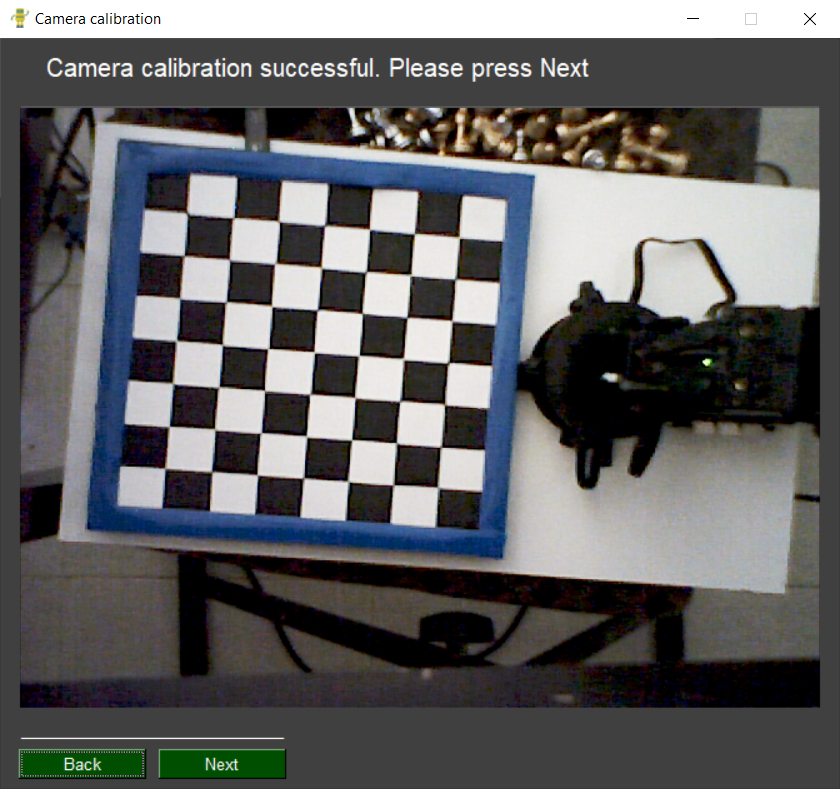

At the start of the game, the GUI prompts the user to clear the board so that the camera can be calibrated. This is done only once, at the beginning of the game, and consists of taking pictures of the empty board and analyzing them to find the corners of the chessboard. If the algorithm cannot find the chessboard corners, it warns the user and repeats the process until it succeeds.

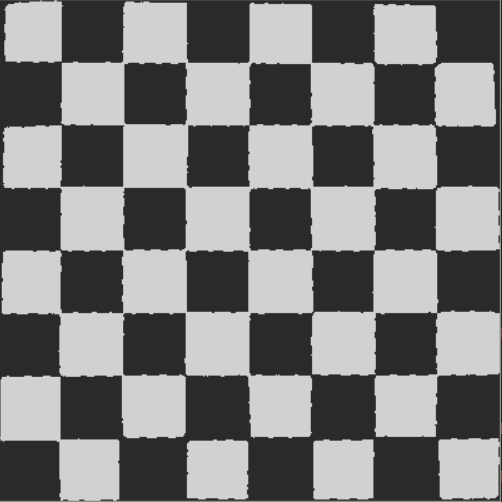

In this process, the image is converted to grayscale and OpenCV's findChessboardCorners function finds the locations of the inner chessboard corners. These locations are then combined with the corner locations of a reference image to find the perspective transformation: the findHomography function returns the homography matrix, which we then use to transform all the raw images with the warpPerspective function.

In case you are not familiar with it, a homography matrix is, in general terms, a matrix that lets you rotate, translate, scale and, unlike an affine transformation, change the perspective of an image. If you want to investigate a bit more about this, I invite you to visit the linked pages. With this process, the raw images are converted to 400x400 px images and the parts of the image that lie outside the chessboard are cropped out.
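For readers who prefer to see it written out, this is the standard form of a homography acting on a pixel. The symbols (x, y) for the raw pixel, (u, v) for the transformed pixel and h_ij for the matrix entries are my own notation, not something taken from the project code.

$$
\begin{pmatrix} x' \\ y' \\ w' \end{pmatrix}
=
\begin{pmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & h_{33}
\end{pmatrix}
\begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
(u, v) = \left( \frac{x'}{w'}, \frac{y'}{w'} \right)
$$

An affine transformation is the special case where the bottom row is (0, 0, 1), so w' is always 1. The division by w' is what allows a homography to change the perspective.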

To give the user more flexibility, pieces of any color can be used as long as they differ from the colors of the board. The GUI lets the user select which pieces they want to play with and which ones are considered "white". To do this, after the calibration the algorithm divides the image into four sections and asks the user to select the quadrants that contain the "white" pieces. Based on this information, the image is rotated if necessary, and the chessboard grid and coordinates are defined so that we can now consider the image in terms of chessboard squares.
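As an illustration of what "thinking in squares" means, the sketch below maps pixels of the 400x400 px image to square names and back. The layout is an assumption on my part: the "white" pieces are on the left, so ranks 1 to 8 run from left to right and files a to h run from top to bottom. The helper names square_at and square_bounds are hypothetical.

```python
BOARD_PX = 400
SQUARE_PX = BOARD_PX // 8   # 50 px per square
FILES = "abcdefgh"


def square_at(x, y):
    """Name of the square that contains pixel (x, y) of the warped image.

    Assumed layout: rank 1 is the leftmost column, file a is the top row.
    """
    rank = x // SQUARE_PX + 1
    file_letter = FILES[y // SQUARE_PX]
    return f"{file_letter}{rank}"


def square_bounds(name):
    """Pixel box (x0, y0, x1, y1) of a square; x1 and y1 are exclusive."""
    col = int(name[1]) - 1
    row = FILES.index(name[0])
    x0, y0 = col * SQUARE_PX, row * SQUARE_PX
    return (x0, y0, x0 + SQUARE_PX, y0 + SQUARE_PX)
```

With this convention, square_bounds("e2") gives the box to crop when you want to look at a single square of the image.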

In case the “white” pieces aren’t on the left side of the image, the algorithm defines a rotation angle according to the user input. This angle is used to obtain a rotation matrix with the getRotationMatrix2D function, and that matrix is then applied as an affine transformation with the warpAffine function. This affine transformation, along with the perspective transformation determined in the calibration step, is applied to all subsequent images.

Last but not least, we need to detect the movement of the pieces. However, instead of detecting what type of piece is on each square, we can take a shortcut. Because the algorithm internally keeps track of the state of the game and knows which pieces the robot moves, all it needs to do is detect which squares changed during the human’s turn.

To do this, the algorithm requires two images: one that shows the previous state of the board and one that shows the current state. So, after making a move, the human player has to click the chess clock in the GUI, which works as a trigger for the camera.

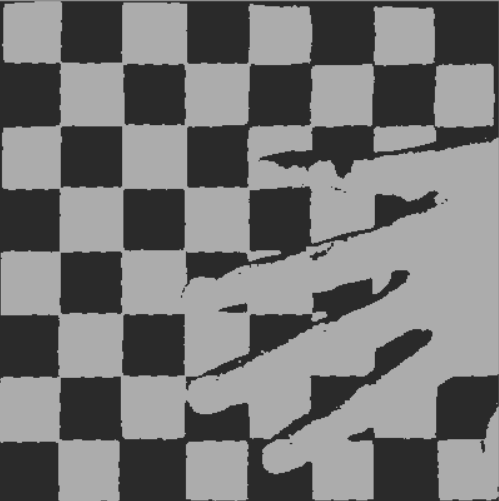

After the camera takes a picture, the algorithm applies the corresponding transformations (perspective and rotation). Next, the program iterates through all of the squares on the board and measures the change in color between the previous and the current image. It does so using the norm of the absolute difference of the images’ RGB components. In a typical move, two squares show the largest change in color: the square the piece moved from and the square it moved to. However, there are a few special cases:

- Castling: In castling, both the king and the rook move. This leads the algorithm to find that four squares changed.

- En passant: This is a special pawn capture that can only occur immediately after a pawn advances two squares from its starting square. The opponent can capture the just-moved pawn "as it passes" through the first square. The result is the same as if the pawn had advanced only one square and the enemy pawn had captured it normally. This results in three squares changing.

- Pawn promotion: When a pawn reaches the other end of the board, the player can promote it to a queen, rook, bishop or knight of their choice. While it is common to simply promote to a queen, this is not always the best option, so in this case a GUI window pops up and asks the user to select the piece they want to promote to.
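The per-square comparison described above can be sketched with NumPy alone. This is an illustration rather than the project's actual code: the square_changes name is hypothetical, the images are assumed to be the 400x400 px warped frames, and the score is taken to be the Euclidean norm of the absolute difference over all pixels and color channels of a square.

```python
import numpy as np

SQUARE_PX = 50  # 400 px board / 8 squares


def square_changes(prev_img, curr_img):
    """Return an 8x8 array with one change score per square.

    Each score is the L2 norm of the absolute color difference inside the
    square. Rows and columns follow the image, not chess notation.
    """
    # Cast first: subtracting uint8 images directly would wrap around.
    diff = np.abs(curr_img.astype(np.float64) - prev_img.astype(np.float64))
    scores = np.zeros((8, 8))
    for row in range(8):
        for col in range(8):
            block = diff[row * SQUARE_PX:(row + 1) * SQUARE_PX,
                         col * SQUARE_PX:(col + 1) * SQUARE_PX]
            scores[row, col] = np.linalg.norm(block.ravel())
    return scores
```

In a normal move two entries of the result stand out, while castling and en passant produce four and three large entries respectively.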

Taking this into account, it is necessary to detect the four squares with the greatest change between frames. However, due to unstable lighting, noise and other factors, the algorithm will always detect some change in more squares than the ones that actually changed, even if only two did in a given turn. For this reason, the algorithm sorts the four squares with the greatest change from highest to lowest and saves their coordinates. This information is then used in another module that finds the most probable move and checks its validity. In general terms, the process can be summarized as follows:
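As one illustration, the ranking step on its own could look like the sketch below. The top_changed_squares name and the idea of passing the scores as a dictionary keyed by square name are assumptions made for the example, and the numbers are made up.

```python
def top_changed_squares(scores, n=4):
    """Return the n (square, score) pairs with the largest change, highest first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:n]


# Made-up scores for a pawn moving from e2 to e4, plus some lighting noise.
example_scores = {"e2": 9100.0, "e4": 8700.0, "d1": 310.0, "g7": 450.0, "a8": 120.0}
candidates = top_changed_squares(example_scores)
# candidates == [("e2", 9100.0), ("e4", 8700.0), ("g7", 450.0), ("d1", 310.0)]
```

Keeping four candidates even for an ordinary move leaves it to the move-finding module to decide which of them matter, since only that module knows which moves are legal in the current position.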

Besides the main parts of the vision module, there is an additional function that checks for obstacles when the robot is about to make its move. This function maps the calibrated chessboard image to only two colors and then tries to detect the inner corners of the board. If there is an obstacle, for example the user’s hand covering the chessboard, the algorithm won’t be able to detect the chessboard corners, and this “warns” the robot, triggering a response that is explained in the Arm Control Module tutorial.