Want to get involved? It’s as easy as jumping into the conversation. Even if you don’t have the hardware, the software chosen and the code created are open source. If you really want to work with the hardware but cannot afford it, send us a private message and we’ll see what’s possible. Have you worked on a RoboCup team, or are you considering starting one and looking for a sponsor? Reach out via private message and we’ll be happy to discuss.

BACKGROUND

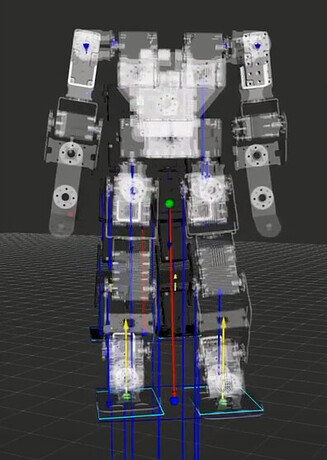

When people think about a “robot”, what often comes to mind is a walking, talking, human-like biped or android. This goes back to the goal of “making robots in our image”. Although progress toward such a robot has so far fallen short of what science fiction has shown us might one day be possible, the dream is still there.

Some may rightly point out that it’s best to create robots with specific goals like vacuuming, lawn mowing, pool cleaning and product assembly. While these robots serve a need, the dream of creating an artificial life form able to understand and navigate the complex environment and society which humans have built is ever present. Humans are more likely to accept a highly intelligent companion (helper, assistant or friend) if it is in the form of a humanoid. In the distant future, if people want to live on beyond their mortal bodies, they will likely want the new body to have the same form factor they have gotten used to.

Companies like Honda (ASIMO), Hyundai (Boston Dynamics’ Atlas), Toyota (T-HR3), and Hanson Robokind are among the many pushing the limits of modern technology to accomplish such a feat. Competitions like RoboCup and FIRA World Cup provide clear goals and guidelines intended to make each robot as self-sufficient as possible while still working in a team.

Normally the development of such robots is reserved for on-site team members, and the hardware alone can command a hefty sum. Lynxmotion has decided to take a more open approach to developing this humanoid so that others around the world can participate, offer opinions, contribute to and build upon the project. To keep the project scoped and let people participate using the same system, some characteristics have been predetermined.

Prototype specifications (subject to change):

COMPETITION COMPLIANCE

ACTUATORS / SERVOS

- 18x HT1 Lynxmotion Smart Servos (LSS)

MECHANICS

- 5 Degrees of Freedom (DoF) Leg

- 3 DoF arm

- 2 DoF neck

- SES V2 Bracket System

- Composite Plates
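
As a quick sanity check, the joint layout above can be tallied against the 18 servos listed under ACTUATORS / SERVOS. This is only a sketch: the post does not state the limb counts, so two legs, two arms and one neck are assumed here, and the names `DOF_PER_LIMB`, `LIMB_COUNT` and `total_dof` are made up for illustration.

```python
# Degrees of freedom per limb, taken from the MECHANICS list.
DOF_PER_LIMB = {"leg": 5, "arm": 3, "neck": 2}

# Assumption (not stated in the post): a biped with two legs, two arms, one neck.
LIMB_COUNT = {"leg": 2, "arm": 2, "neck": 1}


def total_dof(dof_per_limb, limb_count):
    """Sum the degrees of freedom over every limb of the robot."""
    return sum(dof_per_limb[name] * limb_count[name] for name in dof_per_limb)


if __name__ == "__main__":
    # One servo per joint is assumed, so this should match the servo count.
    print(total_dof(DOF_PER_LIMB, LIMB_COUNT))
```

Under those assumptions the total comes to 18, one servo per joint, which matches the servo count in the spec.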

ELECTRONICS

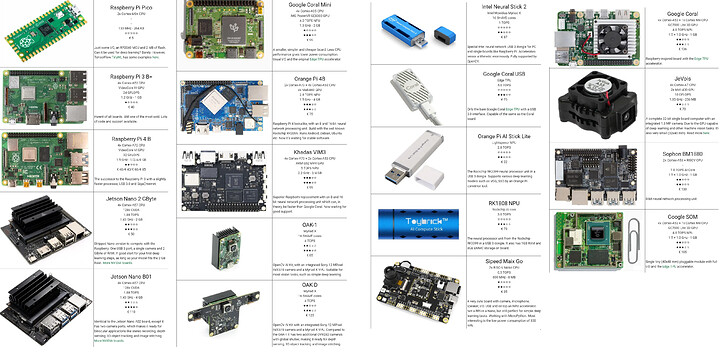

- Raspberry Pi 4 Single-Board Computer

- LSS Adapter

- LSS-2IO

- LSS-5VR

- LSS Power Hub

- Camera (TBD)

POWER

- Tethered: 12VDC Lynxmotion Wall Adapter

- Autonomous battery: TBD 3S LiPo
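
For reference, a 3S LiPo pack sits close to the 12 V of the tethered supply. The sketch below uses the usual per-cell figures for LiPo chemistry (3.7 V nominal, 4.2 V fully charged); the actual battery is still TBD, so these numbers describe a generic 3S pack rather than the one that will be chosen, and `pack_voltage` is a name invented for this example.

```python
CELLS_IN_SERIES = 3        # "3S" means three cells in series
NOMINAL_V_PER_CELL = 3.7   # typical LiPo nominal cell voltage
FULL_V_PER_CELL = 4.2      # typical LiPo fully charged cell voltage


def pack_voltage(cells, volts_per_cell):
    """Voltage of a series pack, rounded to hide floating-point noise."""
    return round(cells * volts_per_cell, 2)


if __name__ == "__main__":
    print("nominal:", pack_voltage(CELLS_IN_SERIES, NOMINAL_V_PER_CELL), "V")
    print("full:   ", pack_voltage(CELLS_IN_SERIES, FULL_V_PER_CELL), "V")
```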

SOFTWARE

- GitHub

- ROS 2

- Python

![[RoboCup][V-RoHOW] Computer Vision is a 3D Problem](https://img.youtube.com/vi/FhJwdtnVusY/maxresdefault.jpg)

![[RoboCup][V-RoHOW] Hands-on with ROS 2](https://img.youtube.com/vi/ZgzsYvne5Gs/maxresdefault.jpg)

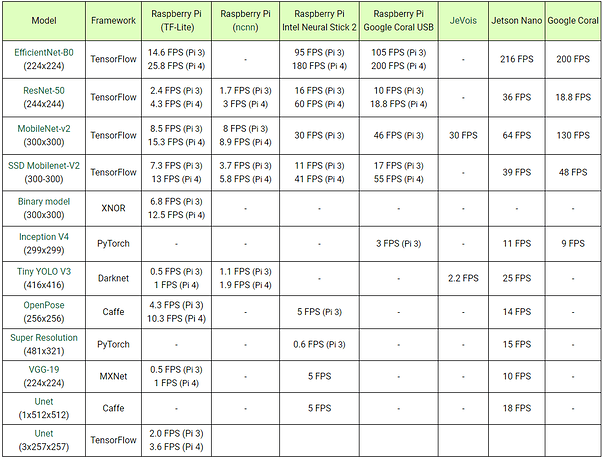

This way they would not have to start from scratch in the future in case they decide to change hardware.


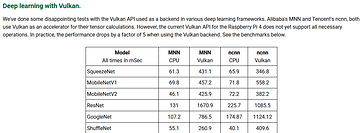

Seems like it is a recent development and may not be “production quality” yet.
