Awesome, that might be the breakthrough you were hoping for.

Can’t wait to see that bad-boy walk.

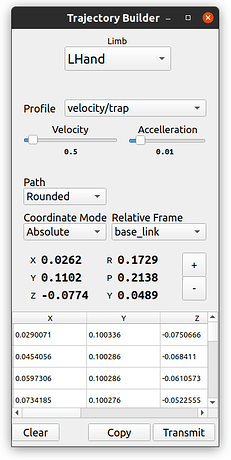

Over the last week I’ve been working in Python to generate trajectories and send them over ROS 2 to the robot. One thing that became apparent is that generating coordinates for limbs by hand is hard. I only need to know the limb endpoint, like the hand or foot position, and the IK will solve the inner joints, but it is still tedious. I was using calipers to measure positions from the robot base. So I coded up my first Qt application with UI elements that populate the Trajectory message, shown below. I can select the limb endpoint (LHand here) and which relative frame I want the coordinates in (base_link, i.e. the torso). I then physically move the robot’s left hand while hitting the + button to add each coordinate to the list. When I’m done with a sequence I hit Transmit and the robot follows the path I just created. I can also copy the trajectory to the clipboard to paste into code. So easy!
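For anyone curious what the record-and-copy idea could look like in code, here is a minimal sketch. It is not the actual app: the `RecordedTrajectory` class, its fields, and the output format are all hypothetical names made up for illustration, and the real Trajectory message layout is not shown in this thread.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# One recorded waypoint: x, y, z, roll, pitch, yaw (hypothetical layout).
Pose = Tuple[float, float, float, float, float, float]


@dataclass
class RecordedTrajectory:
    """Waypoints for one limb endpoint, expressed in one reference frame."""
    limb: str    # e.g. "LHand"
    frame: str   # e.g. "base_link"
    points: List[Pose] = field(default_factory=list)

    def add(self, pose: Pose) -> None:
        """Append the limb's current pose (what a '+' button would do)."""
        if len(pose) != 6:
            raise ValueError("pose must be (x, y, z, roll, pitch, yaw)")
        self.points.append(tuple(float(v) for v in pose))

    def to_python(self) -> str:
        """Render the trajectory as a Python literal for pasting into code."""
        rows = ",\n".join(
            "        (" + ", ".join(f"{v:.4f}" for v in p) + ")"
            for p in self.points
        )
        return (
            "trajectory = {\n"
            f"    'limb': {self.limb!r},\n"
            f"    'frame': {self.frame!r},\n"
            "    'points': [\n"
            f"{rows}\n"
            "    ],\n"
            "}"
        )
```

Calling `add()` once per button press and then `to_python()` gives a text block that can go straight onto the clipboard and into a script.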

Other items:

- There were algorithms fighting each other like the “stand up” slider which is no longer needed since the torso axis manipulator does the job just the same.

- Manipulation of the limbs and body is working much better now. Leg interaction with the floor is much better now that the nailing update is working.

- Balance algo seems to be working much better, judging from the response I get in RViz, but I haven’t been able to test it physically yet.

- Added menu to toggle what axis manipulators to show. Previously all limbs would HOLD in place, which meant (for ex.) the hands tried to stay at their XYZ position in world-space as you moved the legs and base around. Which is cool no doubt, but not always desired. Now you can just enable legs, or just arms, etc, and the non-enabled limbs float with the robot. Better control.

I’ll do a video over the weekend.

Python Qt app for programming trajectories. The XYZ and RPY values shown are the actual coordinates of the robot limb as you move it around. I have many points already added to the list. The planner will still smooth the point segments using spline curves.

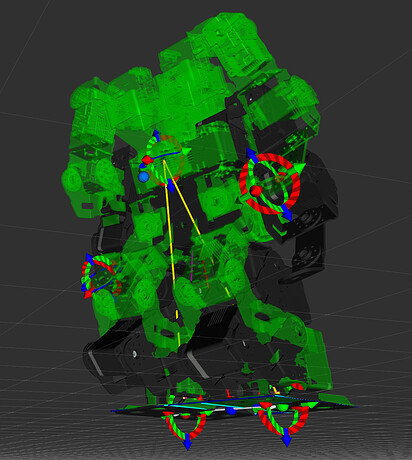
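To illustrate the spline smoothing mentioned in the caption, here is a small sketch that passes a smooth curve through recorded XYZ waypoints. The thread does not say which spline the planner uses, so this swaps in a uniform Catmull-Rom spline purely as an example; `catmull_rom` and `smooth_path` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def catmull_rom(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Point on the uniform Catmull-Rom segment between p1 and p2, t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2.0 * b
               + (c - a) * t
               + (2.0 * a - 5.0 * b + 4.0 * c - d) * t2
               + (3.0 * b - a - 3.0 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def smooth_path(points: Sequence[Point], samples_per_segment: int = 10) -> List[Point]:
    """Densify a waypoint list with a curve that passes through every waypoint."""
    if len(points) < 2:
        return [tuple(float(v) for v in p) for p in points]
    # Duplicate the endpoints so the first and last segments have neighbours.
    padded = [points[0], *points, points[-1]]
    out: List[Point] = []
    for i in range(1, len(padded) - 2):
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            out.append(catmull_rom(padded[i - 1], padded[i],
                                   padded[i + 1], padded[i + 2], t))
    out.append(tuple(float(v) for v in points[-1]))
    return out
```

The recorded waypoints stay on the curve, so a handful of hand-placed points is enough and the in-between motion is filled in.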

Manipulating limbs directly on the robot in RViz2 is now working much better. All limbs are showing control manipulators here, but I can now turn each one on or off.

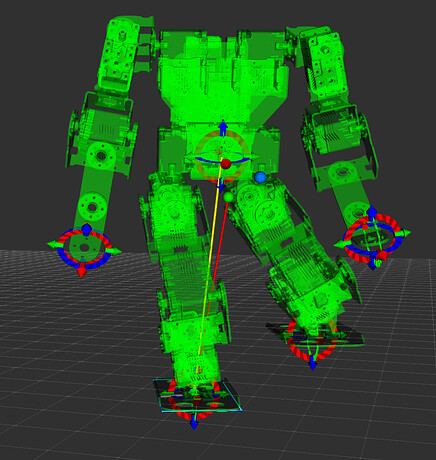

I’ve hidden the main robot viz and am only showing the green “target” pose. I lifted a leg and the balance algo has automatically adjusted the base to keep it inside the stable center-of-pressure box.
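The geometric idea behind keeping a point inside a stable box can be sketched in a few lines. This is only an illustration under simple assumptions (an axis-aligned rectangle on the floor and a 2D point), not the actual balance algorithm; `SupportBox` and `base_shift` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SupportBox:
    """Axis-aligned stable region on the floor, in metres (hypothetical type)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float


def base_shift(cop_xy: Tuple[float, float], box: SupportBox) -> Tuple[float, float]:
    """Return the (dx, dy) that moves cop_xy to the nearest point inside box."""
    x, y = cop_xy
    nearest_x = min(max(x, box.x_min), box.x_max)
    nearest_y = min(max(y, box.y_min), box.y_max)
    return (nearest_x - x, nearest_y - y)
```

When the point is already inside the box the shift is zero, so the base only moves when something like a lifted leg pushes the point outside.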

Excellent! That will make things so much simpler! That’s really great news!

Looking forward to seeing the video.

Your pictures reminded me of:

I can now generate trajectories by moving the physical robot limbs and recording the path, then replaying that back with the click of a button or by copying it into a python program.

[rant]

Also, fixed a huge bug on Friday night!!! I’ve been struggling with this one for around a year. A lot of my trajectory paths were failing because KDL’s IK function returned an error. When this happens the trajectory doesn’t render during that time. I spent countless hours googling for an answer and tuning the arguments to KDL’s IK function to try to fix it. Turns out… the error code it was returning is not an error LOL ROFL - OMG I could cry. This one I can blame on a documentation problem in the KDL project that does the IK. It was returning error code E_INCREMENT_JOINTS_TOO_SMALL… Given that it is classed as an “error code” and has the E_ prefix, you’d think it would be an error… amirite? Well no, it’s not; it just meant the function returned early because the solution was “easy”. So getting that error is actually a good thing, and my effort to create trajectories that didn’t return it was simply me making things less performant. lol. Once I just ignored the error-that’s-not-an-error it all worked. Frustrating that a simple change in the docs could have prevented this, but I’m so glad to be over it.

[/rant]
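For readers hitting the same thing, the fix described in the rant boils down to treating that one return code as a success. Below is a rough sketch of the pattern. The solver is passed in as a plain callable so the example stays self-contained; `solve_path` is a hypothetical helper, and the numeric value given for `E_INCREMENT_JOINTS_TOO_SMALL` is a placeholder assumption, so use the constant from your own KDL build.

```python
from typing import Callable, List, Sequence, Tuple

# Placeholder values for illustration only. Use the constants exposed by the
# KDL version you build against rather than trusting these numbers.
E_NOERROR = 0
E_INCREMENT_JOINTS_TOO_SMALL = -101  # assumed value, not taken from the thread

# Return codes that still come with a usable solution.
ACCEPTABLE = frozenset({E_NOERROR, E_INCREMENT_JOINTS_TOO_SMALL})

Joints = List[float]
# Hypothetical solver signature: (seed joints, target) -> (return code, joints).
Solver = Callable[[Sequence[float], Sequence[float]], Tuple[int, Joints]]


def solve_path(solve_ik: Solver,
               seed: Sequence[float],
               targets: Sequence[Sequence[float]]) -> List[Joints]:
    """Run IK for each target, seeding each solve with the previous result."""
    q = list(seed)
    path: List[Joints] = []
    for target in targets:
        code, q_out = solve_ik(q, target)
        if code not in ACCEPTABLE:
            # Only codes outside the accepted set stop the trajectory.
            raise RuntimeError(f"IK failed with code {code} for target {list(target)}")
        q = list(q_out)
        path.append(q)
    return path
```

Keeping the accepted codes in one set means the rest of the planner never has to know which "errors" are real.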

This has the feel of classic “off by 1 errors” (if you think creatively about it!)… My developer heart feels for your pain on this one. I stopped counting how many times in the last few years I’ve had such issues that make me scratch my head for a long time, only for it to be the most trivial, inane thing I didn’t catch at first… and then it is super obvious afterwards!

I’m really glad you caught that one out though! Nicely done! Did you submit to that project an update for their doc?

I was contemplating if I should, but yeah just did.

My apologies, I am catching up on this thread and looking at your AI/ML board comparison chart. I really appreciate the work of collecting the info; there are many boards I didn’t know about, and I’m an “avid collector” lol. My AI/ML “stamp collection” from that list consists of the Intel Neural stick, Sipeed Maix, Jetson Nano, OAK-D and of course enough raspberry pies to feed a family. I think the Jetson Xavier NX is missing from your list, and it blows them all away at 21 TOPS. It’s also a rucksack if you were to put the dev board onto a humanoid. I would love to design a custom carrier board for the NX that is more compact, lighter, and includes the many LSS bus connections and an IMU. That and the OAK-D would rock. Alas, no time at the moment… the Khadas looks interesting, but if the software suite isn’t there we probably shouldn’t be the first to try it. The Maix suffers the same problem (at least it did 8 months ago).

All of your videos are professional (easy to understand you - great for teaching!).

Well done! A record / playback feature will make things so much easier for everyone.

True, that is the main issue with using Nvidia at the moment. The Nano development board is quite large, and the Nano module alone requires a carrier for connections etc. Same for the TX series. These boards are quite complex and would likely require quite a bit of support even with a successful design, as well as production volume. The Pi 4 + OAK-1, however, may do the trick (like your idea of pushing much of the code to Python so it’s modular and runs faster).

Yeah, agree on all points…and the honkin heat sink required as well adds weight. I wonder if a carrier could be made that supports the NX and a RPi in one (either/or not necessarily both at once). Again, all in the future for now.

Hahaha…

Really interesting to read, thanks for sharing this info!

The best hardware as of Oct 2021 would be an Nvidia Jetson NX or Nvidia AGX Xavier with a 3D depth camera (Intel D435i maybe).

The major difference maker is a custom-tailored DNN or RDNN (maybe the new deep reinforcement learning algos). But the difference lies in how you make your reinforcement heuristic function more AI-based and have this algo teach and build DNNs.

Agree with you cmackenzie. I would take it one step further and try this on the AGX Xavier, as it is a dedicated system (I wouldn’t call it an SBC or anything). In my opinion, this hardware can truly change the face of robotics & AI/DL.

NX and AGX are not complex if one has enough practice with Linux dev and Python driver dev. The problem is that we look for existing tailor-made solutions.

But anyhow, the AGX Xavier is dedicated vision-accelerated hardware that especially supports autonomous vehicle or robot development. It takes a while to get used to, but it will be worth it at the end of the day.

I was wondering if we could develop a voice based virtual assistant for these robots based on TensorFlow. Maybe NLP and sentiment analysis also could be integrated. I would say not in connection to any hardware but just the software part. Would that be possible?

@rvphilip Welcome to the RobotShop Community.

What would the “virtual assistant” actually do in the context of a small robot? Given that there are already multiple AI assistants out there which have teams of developers and are quite advanced (Siri, Cortana, Google, Alexa), why develop one from scratch? I see a follow-up thread here: OpenCV and TensorFlow for Chatbot

I saw a kit on eBay. Looks interesting… I may just get one…

@Rhampton21 This Lynxmotion SES V2 Humanoid kit is still in development, so nobody has a commercial version yet. You must have seen a different humanoid robot.

Oh OK, thanks for the reply cbenson… this is the link I saw… I’m not sure if it’s the same or a clone, but I am certainly interested in this type of build project… 17DOF Biped Robotic Educational Robot Kit Servo Bracket Ball Bearing Black US-x | eBay