Vulcan mind meld…

Aight - gonna start by givin you what I know… It seems you are interested in “coding” so I’ll pontificate ad nauseam

I believe Processing is coded in Java - it has a scripting language which in turn gets compiled into Java classes and executed. It looks pretty damn nifty. I downloaded it once and messed around with some of the demos (damn cool) … however my interests made me look around further for specifically “robot/machine control” software… Processing could do this to some degree, but it was designed for a different purpose.

OpenCV is the powerhouse of vision software! It was started by Intel, released to the public, and contains an amazing collection of functions and utilities. It has had a long life, and software years are like dog years …

http://sourceforge.net/projects/opencvlibrary/ - it is written in C and has interfaces for C++ & Python - I have downloaded and played with it… it was designed to be built on all sorts of platforms - I’ve built it on Windows and Linux

So… initially you might be interested in OpenCV + Processing … well you need some Java Glue to do that so they stick together…

These guys created some of that glue http://ubaa.net/shared/processing/opencv/ …

Really it’s not like glue but more of a “specialty fastener”… limited in some ways… It’s wicked cool, I tried it and got it to work with Processing…

What I Got :

I made a service-based, multi-threaded Java framework… A framework is like a lot of glue… and it is currently gluing OpenCV + Arduino + Servos + Motors + Speech Recognition + EZ1 Sonar + IR Module + MP3 Player + Control GUI + Joystick + RecorderPlayer + Text To Speech

Here is a screenshot of a recent experiment… - the little rectangles are the services - and the little arrows are message routes

So in this case the “camera” service, which uses OpenCV, sends messages to the “tracker” service, which in turn sends messages to the “pan” and “tilt” servo services, which in turn send messages to the “board”, in this case an Arduino Duemilanove…

Make sense ?? a service is like a little piece O your brain ... visual cortex, cerebellum, hypothalamus, etc.. ;)

Services make messages, relay messages, receive and or process messages...

Message messages messages neurons synapses dendrites ..... wheee !
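
If it helps to see the idea in code, here is a toy sketch of that camera -> tracker -> pan/tilt -> board chain. This is NOT the framework's actual code: the names `ServiceSketch`, `Service`, `routeTo`, `send` and `demo` are made up for illustration, the messages are plain strings, and there is no real OpenCV or Arduino in it. It only assumes each service runs on its own thread, has an inbox, and relays whatever it produces along its routes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.UnaryOperator;

class ServiceSketch {

    /** Hypothetical service: one thread, one inbox, a list of outgoing routes. */
    static class Service extends Thread {
        private final BlockingQueue<String> inbox = new LinkedBlockingQueue<>();
        private final List<Service> routes = new CopyOnWriteArrayList<>();
        private final UnaryOperator<String> handler; // processes one message

        Service(String name, UnaryOperator<String> handler) {
            super(name);
            this.handler = handler;
            setDaemon(true); // do not keep the JVM alive for the demo
        }

        void routeTo(Service target) { routes.add(target); } // a "little arrow"

        void send(String message) { inbox.add(message); }

        @Override
        public void run() {
            try {
                while (true) {
                    // Receive, process, then relay along every route.
                    String out = handler.apply(inbox.take());
                    for (Service target : routes) {
                        target.send(out);
                    }
                }
            } catch (InterruptedException e) {
                // Interrupted: let the service thread end.
            }
        }
    }

    /** Wires up the chain, pushes one fake frame through, returns what the board saw. */
    static List<String> demo() {
        List<String> boardLog = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(2); // expect pan + tilt commands

        Service camera = new Service("camera", frame -> frame);
        Service tracker = new Service("tracker", frame -> "target in " + frame);
        Service pan = new Service("pan", target -> "pan toward " + target);
        Service tilt = new Service("tilt", target -> "tilt toward " + target);
        Service board = new Service("board", command -> {
            boardLog.add(command); // a real board service would talk to hardware here
            done.countDown();
            return command;
        });

        camera.routeTo(tracker);
        tracker.routeTo(pan);
        tracker.routeTo(tilt);
        pan.routeTo(board);
        tilt.routeTo(board);

        for (Service s : Arrays.asList(camera, tracker, pan, tilt, board)) {
            s.start();
        }
        camera.send("frame-1");

        try {
            done.await(2, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<String> result = new ArrayList<>(boardLog);
        Collections.sort(result); // pan and tilt race each other, so sort for a stable order
        return result;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The point of the sketch is that no service knows about the whole chain, only about its own routes, so you can rewire the arrows without touching the services themselves.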

So if you really want to try it I need to know some of the details of your system :

1. what is your puter OS - it looks Bill Gates-ish (bad boy)

2. what are your "boards" - picaxe if I recollect - I currently don't have a PicAxe board in my library of services - but I could write one with your help - I'd be interested in adding it

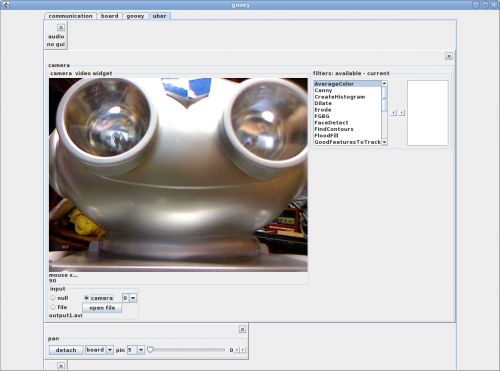

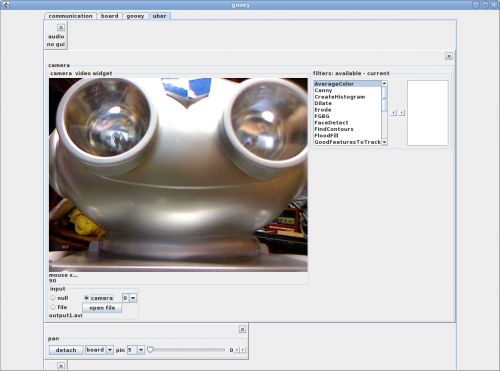

Below is the "uber" pane - which has most of the controls for the "Services", e.g. "camera", "pan", etc... remember?

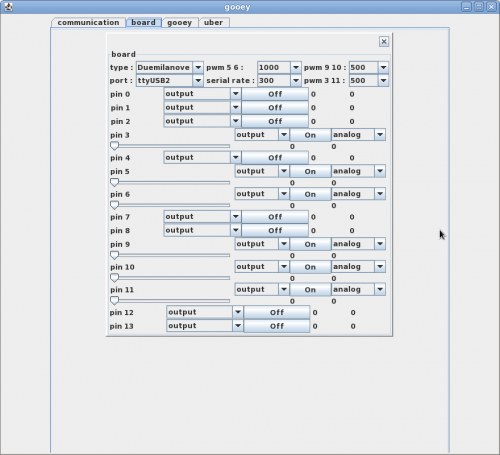

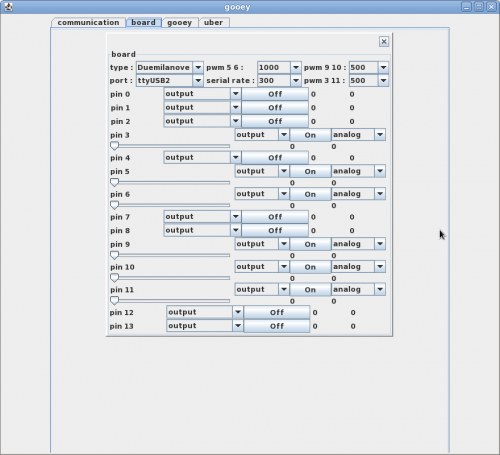

The "board" service is a bit more complicated so it has its own pane...

This is a work in progress - so things will be bumpy ... still interested?

GroG