Hey guys!

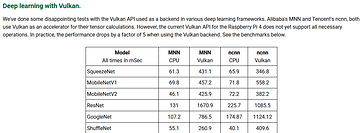

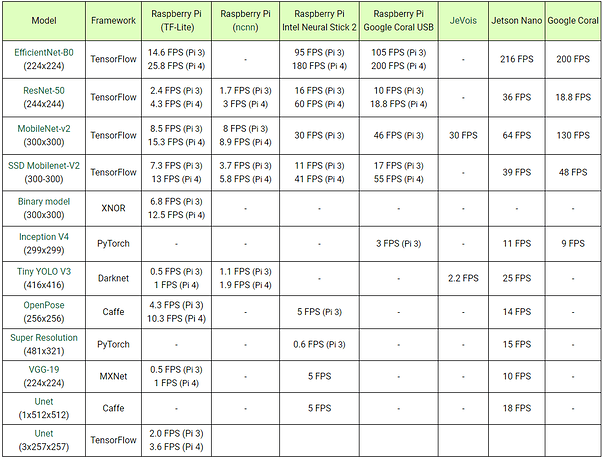

I wanted to share some updates on what I've been working on and researching for the vision system. As you may have seen in the first post of this thread, we are planning to use a Raspberry Pi 4, but we haven't chosen a camera yet. I started by looking at the hardware and software that teams in previous competitions used, and noticed that most of them relied on very powerful processors and, in many cases, GPUs, which are pretty much a requirement for real-time object detection. For this reason, I focused on solutions for real-time object detection on a CPU rather than a GPU: even though the Pi 4 is certainly an improvement over previous versions, from what I've seen online it still can't achieve fast object detection on its own.

Some teams have used the Raspberry Pi (3B+) in their robots and experimented with faster models that give up a little accuracy compared to popular architectures such as R-CNN or SSD, for example YOLO and YOLO Tiny, or even modified versions (xYOLO, Fast YOLO) that sacrifice a bit more accuracy for extra speed. It's worth noting, though, that even with these improvements, most of the teams that used the Raspberry Pi later switched to other solutions such as the Nvidia Jetson Nano or an Intel NUC. Still, I'm planning to test these options on the Pi 4 and share the results here.
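
Since the plan is to test these models on the Pi 4 and post numbers, a small timing harness could help keep the results comparable across options. This is only a sketch under assumptions: `benchmark` and `fake_infer` are hypothetical names, and `infer` stands in for whatever actually runs one forward pass (OpenCV DNN, an NCS2 or Coral call, etc.).

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def benchmark(infer: Callable[[Any], Any], frames: Sequence[Any], warmup: int = 5) -> Dict[str, float]:
    """Time `infer` on each frame and summarise per-frame latency and throughput.

    `infer` is a hypothetical placeholder for a single detector forward pass.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")

    # Discard warm-up runs: the first calls often include model loading,
    # memory allocation or cache effects that inflate latency.
    for frame in frames[:warmup]:
        infer(frame)

    latencies_ms = []
    for frame in frames[warmup:]:
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    mean_ms = statistics.mean(latencies_ms)
    return {
        "frames": len(latencies_ms),
        "mean_ms": mean_ms,
        "median_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
        # Sequential throughput of inference alone (no camera capture included).
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Dummy "model" that sleeps ~20 ms, standing in for a real detector.
    def fake_infer(frame: Any) -> list:
        time.sleep(0.02)
        return []

    print(benchmark(fake_infer, [None] * 25))
```

Note that the `fps` figure only covers inference; camera capture and pre/post-processing would need to be timed separately (or wrapped into `infer`) to get the end-to-end frame rate on the robot.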

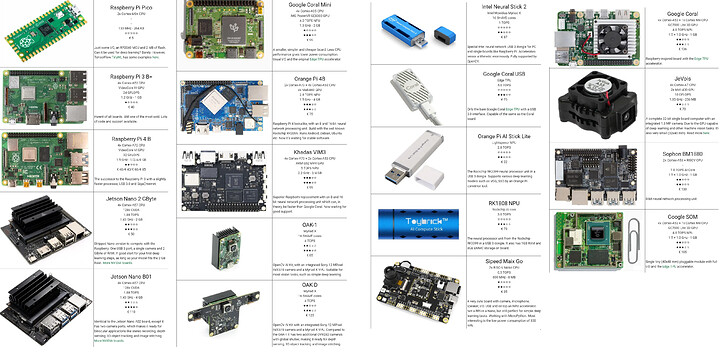

I also looked into other options, which basically consist of adding an extra processing unit, such as the Intel Neural Compute Stick (powered by a Movidius VPU) or the Google Coral USB Accelerator (which has an Edge TPU). I've seen some examples of what can be done with either of these alongside a Raspberry Pi, and they definitely look up to the task.

But now there seem to be even better options: camera modules that include their own processing unit, among which the OpenCV AI Kits (OAK-D/OAK-1) stand out. If you haven't heard of them, you can check them out here:

So at the moment the main hardware options under consideration are:

- Raspberry Pi 4 + OpenCV OAK-D / OAK-1

- Raspberry Pi 4 + Intel’s Neural Compute Stick 2 + Pi Camera / Webcam

- Raspberry Pi 4 + Google Coral USB Accelerator + Pi Camera / Webcam

- Nvidia Jetson Nano / TX2 + Webcam

The next step is to check and test some of the most popular CNN architectures for real-time object detection. From what I've seen so far, YOLO (You Only Look Once) based models seem to be quite popular in the RoboCup community, but there are also some original architectures created by other teams that I'd like to check out as well.
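
One thing worth keeping in mind when comparing YOLO-based models on the Pi is that they output many overlapping candidate boxes, which are usually filtered with non-maximum suppression (NMS) before use, and that step runs on the CPU as well. Below is a minimal greedy NMS sketch in plain Python, assuming boxes given as `(x1, y1, x2, y2)` corners and an IoU threshold of 0.45 (a common default, but an assumption here); in practice it is typically applied per class, and libraries such as OpenCV ship their own optimized version.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.45) -> List[int]:
    """Greedy non-maximum suppression; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it doesn't overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Two near-duplicate detections of one object plus one separate object.
    candidates = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 150, 150)]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidates, confidences))  # → [0, 2]
```

This pure-Python loop is O(n²) in the number of candidates, which is fine for a handful of boxes but is one more reason to keep confidence thresholds reasonably high on a CPU-only setup.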

This way they would not have to start from scratch in the future in case they decide to change hardware.

Seems like it is a recent development and may not be “production quality” yet.

feels for your pain on this one. I stopped counting how many times in the last few years I’ve had such issues that make me scratch my head for a long time only for it to be the most trivial, inane thing I didn’t catch at first… and then is super obvious afterwards!