Reserved post for software advancements and updates.

Reserved post for mechanical advancements and updates.

Reserved post for electrical advancements and updates.

RoboCup Humanoid League has some nice videos on their YouTube channel:

RoboCup team NUbots discussing vision:

RoboCup team Bold Hearts moving to ROS 2.0:

More from team Bold Hearts about ROS 2.0:

Awesome videos, thanks for sharing!

Hey guys!

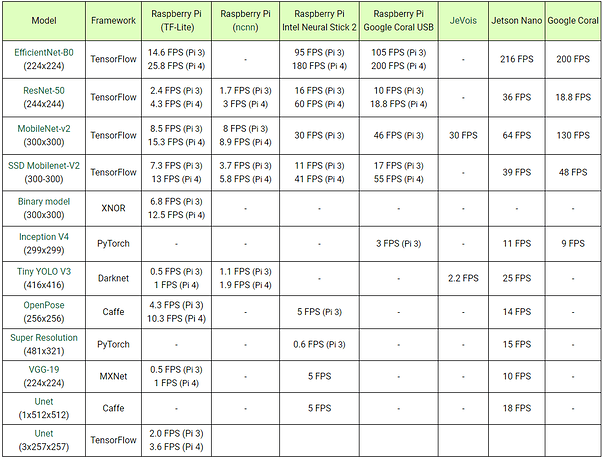

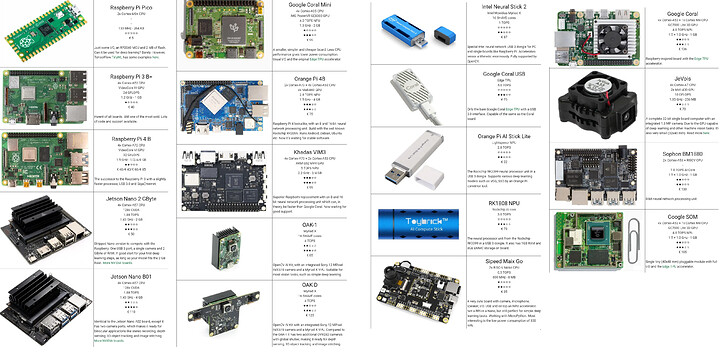

I wanted to provide some updates on what I’ve been working on/researching for the vision system. As you may have seen from the first post on this thread we are planning on using a Raspberry Pi 4 but we haven’t chosen a camera yet. So I started by checking the hardware and software used by teams that have participated in previous competitions for reference and noticed that most of them were using very powerful processors and in many cases GPUs which are pretty much a requirement to run real-time object detection. For this reason, I focused on looking for solutions for real-time object detection on a CPU (not a GPU) because even though the Pi4 is certainly an improvement from the previous versions, according to what I’ve seen online, it is still not capable of achieving fast object detection.

Some teams have used the Raspberry Pi (3B+) for their robots and experimented with possible solutions by trying fast models with slightly lower accuracy (compared to popular architectures such as R-CNN or SSD) such as YOLO or YOLO Tiny or even modifying them to increase the speed by sacrificing the accuracy a little bit more (xYOLO, Fast YOLO). But it is worth noting that even though they had an improvement in performance most of the teams that used the Raspberry Pi opted to change it for other solutions such as the Nvidia Jetson Nano or Intel NUC later on. However, I’m still planning on testing these options on the Pi4, and try to share the results here.

I also did some research on other options which basically consist of adding extra processing units like the Intel Neural Compute Stick which is powered by a Movidius VPU or the Google Coral USB Accelerator which has an Edge TPU. I’ve seen some examples of what can be done using either of these along with a Raspberry Pi and it definitely looks like they are up for the task.

But now there seem to be even better options! Camera modules that also include a processing unit, among which OpenCV AI kits (OAK-D/OAK-1) stand out. If you haven’t heard about them you can check them out here:

So at the moment the main hardware options under consideration are:

- Raspberry Pi 4 + OpenCV OAK-D / OAK-1

- Raspberry Pi 4 + Intel’s Neural Compute Stick 2 + Pi Camera / Webcam

- Raspberry Pi 4 + Google Coral USB Accelerator + Pi Camera / Webcam

- Nvidia Jetson Nano / TX2 + Webcam

The next step is checking and testing some of the most popular CNN architectures for real-time object detection, but from what I’ve seen so far YOLO (You Only Look Once) based models seem to be quite popular amongst the RoboCup community. But there are some other original architectures created by some of the teams I would like to check as well.

@geraldinebc15 - Regarding this option, how locked to a particular piece of hardware would the project be? What I mean is, could we switch to other hardware that runs OpenCV code if need be?

What about using the RPi’s GPU? Found a few interesting links, such as this topic.

This tutorial is also quite interesting. Unsure how GPU related it is, though, but they do mention changing GPU settings… so they may be using it a bit?

Good explanation here about GPU involvement… and overall how it all works on RPi!

But yeah, the performance of a RPi without extra hardware seems to be about a fifth (best case scenario) of what you’d get with those dongles for extra processing power.

As for OpenCL on RPi4… well: https://github.com/doe300/VC4CL/issues/86 (and confirmed here). Kinda hard to implement drivers for a GPU without the doc…

The stuff from QEngineering also seems to turn off OpenCL on the RPi4 tutorials… :’(

That being said, there’s this stuff. Might be interesting to look into.

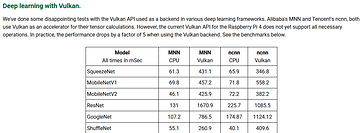

On a different note, since OpenCV 4.0+ also supports Vulkan and the RPi4 now has a proper Vulkan driver, that might change things a bit?

They also mention the drivers, while functional, may not all have the needed bits yet to be useful for optimized tasks:

So, I guess there’s good stuff possibly on the way with those new drivers but they literally only got approved (compliance with Vulkan standard) in November 2020, so it is still pretty recent.

Overall, it seems the RPi4 would need external help for the time being to do any significant computer vision/deep learning to recognize objects in a useful way at a good frame rate (like 15-30 fps). That being said, if the requirements/expectations are changed: for example snapshots are used instead of constant video and a lower framerate is aimed for (1-2 fps) and the images are in lower resolution than maximum it may be sufficient for finding stuff like players, goal and a ball.
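To make the "snapshots at 1-2 fps" idea concrete, here is a minimal sketch of a frame throttle: the camera keeps streaming, but only frames that are far enough apart in time are handed to the detector. The names `FrameThrottle` and `select_frames` are hypothetical, and the timestamps are assumed to be in seconds.

```python
class FrameThrottle:
    """Let through at most `target_fps` frames per second; drop the rest."""

    def __init__(self, target_fps):
        if target_fps <= 0:
            raise ValueError("target_fps must be positive")
        self.min_interval = 1.0 / target_fps
        self._last_accepted = None  # timestamp of the last processed frame

    def should_process(self, timestamp):
        """Return True if the frame captured at `timestamp` should be processed."""
        if (self._last_accepted is None
                or timestamp - self._last_accepted >= self.min_interval):
            self._last_accepted = timestamp
            return True
        return False


def select_frames(timestamps, target_fps):
    """Return the subset of capture timestamps that would reach the detector."""
    throttle = FrameThrottle(target_fps)
    return [t for t in timestamps if throttle.should_process(t)]
```

With a camera delivering a frame every 0.25 s and a 2 fps target, every second frame would be dropped before it reaches the (slow) detector.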

From what I’ve seen of RoboCup, the robots are pretty slow to react and move around (when they do at all / are not flailing on the ground looking confused). Therefore, slower performance might not be all that bad. Especially if later on in 2021 the Vulkan drivers pan out to be more useful (i.e. for proper OpenCV use!).

Regarding this option, the idea would not be to limit the hardware that can be used. What I had in mind to do is to use OpenCV and TensorFlow to train a model (on my laptop to take advantage of the NVIDIA GPU) or maybe even use a pre-trained model, not sure yet. And then deploy the model on any option we choose (Pi 4, Jetson Nano, etc). And in case we decide to use an OAK camera or an Intel NCS I could later use OpenVINO to optimize and deploy the model that was trained with TensorFlow.

I guess with proper constraints on the model itself while training it you could possibly come out with something that can run (slowly, but maybe fast enough to be useful?) on just a bare RPi4 with a CSI camera?

Yes, I’m aware it is possible to use the RPi’s GPU but it seems to be a more complicated route with not many benefits.

But thank you for sharing those QEngineering posts! I found this one in there and I recommend checking it out:

Actually, the whole series of Computer Vision with a Raspberry Pi is great!

I also like the fact that the AI camera kits make it easy for beginners to use deep learning tools which is ideal for the robotics enthusiast community. That way anyone who is interested in using the robot for other tasks can do so without having to spend lots of time to be able to perform a simple CV/DL task.

Also, thanks for sharing your review on other options! But yeah, we pretty much reached the same conclusion, the RPi 4 will likely need some external help.

From what I’ve seen of RoboCup, the robots are pretty slow to react and move around (when they do at all / are not flailing on the ground looking confused). Therefore, slower performance might not be all that bad.

This is exactly what I thought at the beginning of my research. The robots I have seen participating in the RoboCup soccer competition don’t seem to react fast anyway. However, reading that the vast majority, if not all, of the teams that used the Raspberry Pi opted to switch away from it in later competitions led me to look into these other options.

Maybe the problem is not only the low fps rate that can be processed with the RPi alone, but the fact that this has to be done in parallel with many other tasks to get the robot to play soccer. Or maybe it is due to the many excellent options that have emerged in the market (which do not necessarily imply higher costs), because if we compare the option of Jetson Nano + a good webcam or the Raspberry Pi 4 + OAK camera, we see that the difference is not much.

Or perhaps it could be because the teams are preparing for the future, knowing that the goal is for robots to perform tasks more efficiently (maybe not real-time soccer, but at least not look like little drunk people trying to stay on their feet). This way they would not have to start from scratch in the future in case they decide to change hardware.

Yes! This is also an option I’m planning on testing. I mentioned it in my first post, the teams that have used the Raspberry Pi actually already tested this idea and created or modified existing models to increase inference speed by sacrificing some accuracy. Some examples:

xYOLO: A Model For Real-Time Object Detection In Humanoid Soccer On Low-End Hardware

Fast YOLO: A Fast You Only Look Once System for Real-time Embedded Object Detection in Video

And there are even more!

Yeah, those are good papers on it. I guess it is worth mentioning though that the RPi4 (vs RPi3) has a GPU that’s two generations ahead, so it may be able to do more… and also note that there’s no OpenCL support on RPi4, so OpenCV and other stuff that would use a GPU barely get any acceleration from it currently (so nearly pure CPU).

And with those results above for the new Vulkan drivers in deep learning… it is not looking too good yet.

So, overall, yeah, something more beefy with a large number of small programmable cores might be best, like the stuff from NVIDIA. I guess a Cortex-A SoC with an embedded FPGA (and plenty of RAM!) might also be an option to possibly run a model efficiently.

But sticking to the ones that are already mass produced I think the Jetson Nano + a good webcam might be the best solution. If I remember correctly the JN has a quad core ARM A-5x with 4 GB of RAM. It can probably be made to use some of the RPi-compatible CSI cameras, too!

edit: There’s the info! https://developer.nvidia.com/embedded/jetson-modules#tech_specs I’m sure that quad-core Cortex-A57 can more than take care of everything RoboCup requires processing-wise…

Hello everyone!

Here are some updates on what I’ve been working on.

I started testing some YOLO implementations for object detection on my laptop (TensorFlow, TFLite and OpenCV-dnn) (both on CPU and GPU) and also tested them on a Raspberry Pi 4, here are the results in terms of inference speed.

The models were all pre-trained on the COCO dataset, and I used a network size of 416 for the tests, except for the last one, which is 320. As the results show, the best performance is achieved on the GPU (no surprise there) with the YOLO V4 tiny model in TensorFlow, and the slowest results come from the TensorFlow Lite models. On the RPi, the best inference speed was achieved by overclocking the CPU to 1.9 GHz and using the YOLO V3 tiny OpenCV-dnn implementation: it gets up to 5 fps with a network size of 320. The OpenCV-dnn implementation can also be accelerated with cuDNN (NVIDIA CUDA Deep Neural Network library), so if anyone is interested in seeing how it performs, let me know.
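For anyone who wants to repeat this kind of comparison on their own hardware, a rough sketch of how inference speed could be measured is below. This is not the script used for the results above: `measure_fps` is a hypothetical helper, and `infer` stands for whatever callable wraps a model's forward pass (TensorFlow, TFLite or OpenCV-dnn).

```python
import time


def measure_fps(infer, frames, warmup=1):
    """Average frames per second of `infer` over `frames`.

    The first `warmup` frames are run but not timed, since the first
    call to a model is often slower than the steady state.
    """
    frames = list(frames)
    for frame in frames[:warmup]:
        infer(frame)
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up runs")
    start = time.perf_counter()
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed


def latency_ms(fps):
    """Convert a frame rate into the time spent per frame, in milliseconds."""
    return 1000.0 / fps
```

For scale, the 5 fps reached on the overclocked RPi corresponds to 200 ms per frame.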

The next step would be custom training with a dataset more related to soccer so we can detect custom objects (soccer ball, robots, goal posts, field lines). The great thing is that a RoboCup team (Hamburg Bit-Bots) created an amazing tool where teams share and label their datasets.

Some other implementations I’m also interested in checking out are:

On another note, I also assembled a small mobile platform with pan and tilt to test the vision system. So I think I’m going to start by simply tracking a ball and following it with the pan and tilt.
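A ball-following pan and tilt can start out as a simple proportional controller: measure how far the detected ball is from the image centre and nudge each servo by a fraction of that error. The sketch below assumes pixel coordinates with the origin at the top-left corner, angles in degrees, and a sign convention where decreasing pan turns the camera to the right; the function names, gain and limits are all placeholders that depend on how the servos are mounted.

```python
def clamp(value, low, high):
    """Keep `value` inside the closed interval [low, high]."""
    return max(low, min(high, value))


def track_step(pan_deg, tilt_deg, ball_xy, frame_size,
               gain=0.1, limits=(-90.0, 90.0)):
    """One proportional-control step toward centring the ball in the image.

    ball_xy:    (x, y) pixel position of the detected ball centre
    frame_size: (width, height) of the image in pixels
    Returns the new (pan, tilt) command in degrees.
    """
    x, y = ball_xy
    width, height = frame_size
    err_x = x - width / 2    # positive: ball is right of centre
    err_y = y - height / 2   # positive: ball is below centre
    # Signs are an assumption; flip them if the head moves away from the ball.
    new_pan = clamp(pan_deg - gain * err_x, *limits)
    new_tilt = clamp(tilt_deg + gain * err_y, *limits)
    return new_pan, new_tilt
```

Calling `track_step` once per processed frame and sending the result to the servos gives a basic tracker; a small gain keeps the head from oscillating when detections are noisy.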

Wow @geraldinebc15! Amazing work!

And yeah, definitely no surprise on the GPU being the big winner here. I still feel like there’s something to be done with low-power FPGA-based acceleration though… especially for those ARM-based solutions with an FPGA directly on the SoC. I just happened to find this article the other day… kinda gives me a bit of hope!

Definitely, if the RPi4 (or a similar platform) is used, it needs some kind of accelerator card (GPU, FPGA, etc.) to be usable in real time.

Anyway, great work and details! Looking forward to the next steps!

Thank you @scharette

I just happen to find this article the other day… kinda gives me a bit of hope!

Great find! The LeFlow toolkit sounds great.

Definitely, if the RPi4 (or a similar platform) is used, it needs some kind of accelerator card (GPU, FPGA, etc.) to be usable in real time.

Agreed, that’s why these suggestions are under consideration:

- Raspberry Pi 4 + OpenCV OAK-D / OAK-1

- Raspberry Pi 4 + Intel’s Neural Compute Stick 2 + Pi Camera / Webcam

- Raspberry Pi 4 + Google Coral USB Accelerator + Pi Camera / Webcam

But I also agree with what you said here:

Sticking to the ones that are already mass-produced I think the Jetson Nano + a good webcam might be the best solution.

Sounds great as long as it works, I guess? Seems like it is a recent development and may not be “production quality” yet.

Since I have FPGA design experience and a few boards (USB 2.0 only I think, though) available - one is literally between the keyboard I am typing this on and my main screen, with the cable still connected to it! - I think I may have a look into using it to do some acceleration through USB. Not expecting much result-wise, but still kinda curious…

On that front, maybe a different bus/interface (than USB) could be used to connect the RPi4 and the FPGA breakout… a CSI channel, maybe? Hmm… maybe even the SDIO interface!

Sounds great as long as it works, I guess? Seems like it is a recent development and may not be “production quality” yet.

Hahaha yes! that’s why I used the word “sounds”

Since I have FPGA design experience and a few boards (USB 2.0 only I think, though) available - one is literally between the keyboard I am typing this on and my main screen, with the cable still connected to it! - I think I may have a look into using it to do some acceleration through USB. Not expecting much result-wise, but still kinda curious…

Nice! Let us know how it goes.

On that front, maybe a different bus/interface (than USB) could be used to connect the RPi4 and the FPGA breakout… a CSI channel, maybe? Hmm… maybe even the SDIO interface!

Interesting idea

Finally! I have the IMU and Robot Localization (R_L) working properly. The popular ROS 2 package that handles robot localization just doesn’t work well for legged robots. It rotates around the base/torso instead of pivoting on the support feet, which confused the balance algorithm. I got rid of the R_L node and wrote my own code that reads the IMU and adjusts the base while pivoting on the support foot (or feet).

In the video the servos are limp; I am picking up the robot torso while holding the feet to the floor to test. The feet should not move in the RViz visualization. There is sometimes some jitter when ground contact is lost for a split second, but it should work better under power.
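For readers wondering what "pivoting on the support foot" means in practice, here is a deliberately simplified planar sketch; it is not the code from the post. It assumes a side view (x forward, z up), a pitch angle from the IMU in radians that is positive when the torso leans forward, and a known offset from the base to the support foot taken from the leg kinematics. The function name `base_from_support_foot` is hypothetical.

```python
import math


def base_from_support_foot(foot_world, foot_in_base, pitch_rad):
    """Planar base position that keeps the support foot fixed in the world.

    foot_world:   (x, z) of the support foot in the world frame
    foot_in_base: (x, z) of the same foot expressed in the base/torso frame
    pitch_rad:    torso pitch from the IMU (rotation about the y axis)
    """
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    fx, fz = foot_in_base
    # Rotate the base-to-foot offset into the world frame.
    off_x = c * fx + s * fz
    off_z = -s * fx + c * fz
    # Place the base so that base + rotated offset lands on the fixed foot.
    return (foot_world[0] - off_x, foot_world[1] - off_z)
```

The point of the construction is that the foot is the fixed reference: when the IMU reports a tilt, the computed base position swings around the foot, so the foot itself stays put in the visualization.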

Next up: programming stand, walk, and other trajectory sequences in Python.

Awesome, that might be the breakthrough you were hoping for.

Can’t wait to see that bad-boy walk.

![[RoboCup][V-RoHOW] Computer Vision is a 3D Problem](https://img.youtube.com/vi/FhJwdtnVusY/maxresdefault.jpg)

![[RoboCup][V-RoHOW] Hands-on with ROS 2](https://img.youtube.com/vi/ZgzsYvne5Gs/maxresdefault.jpg)