For object tracking and recognition, I will write the code myself in C++ with OpenCV. The program will run on a PC: images are transmitted from Wall-E by a wireless webcam, and after processing, the corresponding commands are sent back to Wall-E via Bluetooth.

I have been looking very hard for a programming solution for speech recognition, hoping someone had already written an API or something similar. Then I accidentally bumped into a YouTube video showing a much simpler way of doing this: the EasyVR Arduino Shield! So I might use that instead of writing the code myself!

It's a great toy for eight-year-olds. It has only one motor, which means it can only turn left or go forward. It moves its hands as well, but that's pretty much it. Here is a video showing roughly the same one:

http://www.youtube.com/watch?v=VEoh8Iws-kk

=============================================

It was quite dirty since it's second-hand. I had to wash every piece of it in soapy water!

I will leave the assembly for another day.

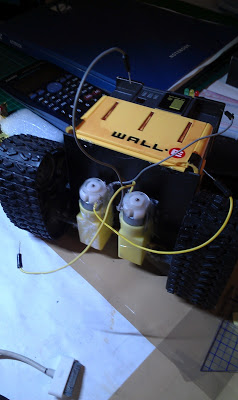

I finally found the time to look at the robot pieces and get started assembling it.

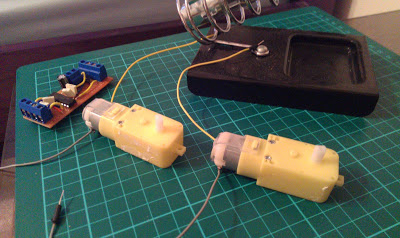

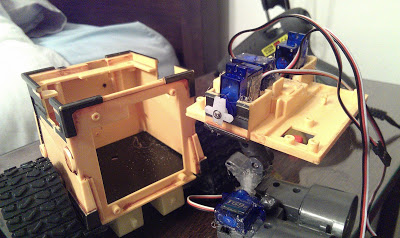

I recycled the motors and motor driver from my previous robot (Wally Object tracking robot).

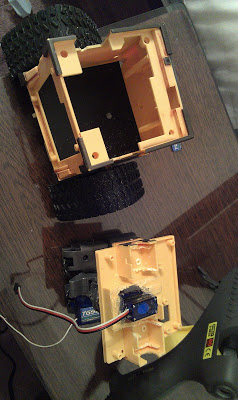

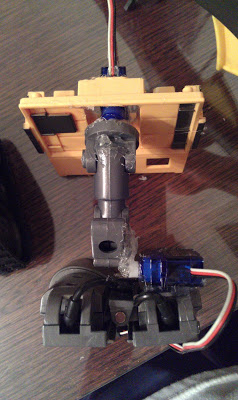

It was quite challenging to modify the robot to fit the servos, but I managed it in the end ^.^

I will start coding another day!

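```cpp
#include <opencv2/opencv.hpp>
#include <QImage>
#include <QPixmap>
#include <vector>

// Body of the frame-processing slot. capwebcam (cv::VideoCapture), matOriginal,
// matProcessed (cv::Mat) and ui are members of the main window class.
capwebcam.read(matOriginal);
if (matOriginal.empty()) return;

// Keep only red pixels. OpenCV stores colour as BGR, so this keeps pixels with
// low blue and green (0-100) and high red (175-255).
cv::inRange(matOriginal, cv::Scalar(0, 0, 175), cv::Scalar(100, 100, 255), matProcessed);

// Smooth the binary mask so HoughCircles finds cleaner edges.
cv::GaussianBlur(matProcessed, matProcessed, cv::Size(9, 9), 1.5);

// Each circle is (x, y, radius). Parameters: dp = 2, min centre distance = rows/4,
// Canny high threshold = 100, accumulator threshold = 50, radius 10 to 400 px.
std::vector<cv::Vec3f> vecCircles;
cv::HoughCircles(matProcessed, vecCircles, cv::HOUGH_GRADIENT, 2, matProcessed.rows / 4, 100, 50, 10, 400);

for (const cv::Vec3f& ball : vecCircles) {
    ui->txtXYRadius->appendPlainText(QString("ball position x =") +
        QString::number(ball[0]).rightJustified(4, ' ') +
        QString(", y =") +
        QString::number(ball[1]).rightJustified(4, ' ') +
        QString(", radius =") +
        QString::number(ball[2], 'f', 3).rightJustified(7, ' '));

    const cv::Point centre(cvRound(ball[0]), cvRound(ball[1]));
    cv::circle(matOriginal, centre, 3, cv::Scalar(0, 255, 0), cv::FILLED);        // green centre dot
    cv::circle(matOriginal, centre, cvRound(ball[2]), cv::Scalar(0, 0, 255), 3);  // red outline
}

// Convert the OpenCV images to QImage (Qt expects RGB, OpenCV gives BGR).
cv::cvtColor(matOriginal, matOriginal, cv::COLOR_BGR2RGB);
QImage qimgOriginal(matOriginal.data, matOriginal.cols, matOriginal.rows,
                    static_cast<int>(matOriginal.step), QImage::Format_RGB888);
// The processed image is a single-channel mask; Format_Grayscale8 needs Qt 5.5+.
QImage qimgProcessed(matProcessed.data, matProcessed.cols, matProcessed.rows,
                     static_cast<int>(matProcessed.step), QImage::Format_Grayscale8);

// Update the labels on the form. QPixmap::fromImage copies the pixels, so it is
// safe that the QImages share memory with the cv::Mat buffers.
ui->lblOriginal->setPixmap(QPixmap::fromImage(qimgOriginal));
ui->lblProcessed->setPixmap(QPixmap::fromImage(qimgProcessed));
```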
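The `inRange` call is what turns the colour frame into a black-and-white mask: a pixel becomes 255 only when all three channels lie inside their bounds (both bounds inclusive), and 0 otherwise. The sketch below reproduces that test for a single pixel in plain C++, using the same bounds as the tracking code; `Bgr` and `inRangePixel` are illustrative names, not part of the OpenCV API.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// One 8-bit pixel in OpenCV's default channel order: blue, green, red.
using Bgr = std::array<std::uint8_t, 3>;

// Per-pixel version of the cv::inRange test: returns 255 when every channel lies
// within [lower, upper] (both ends inclusive), otherwise 0.
std::uint8_t inRangePixel(const Bgr& px, const Bgr& lower, const Bgr& upper) {
    for (std::size_t c = 0; c < px.size(); ++c) {
        if (px[c] < lower[c] || px[c] > upper[c]) {
            return 0;  // one channel out of range is enough to reject the pixel
        }
    }
    return 255;
}
```

With the tracking bounds `{0, 0, 175}` to `{100, 100, 255}`, a strongly red pixel such as `{30, 40, 220}` is kept, while an orange one like `{20, 140, 230}` is rejected because its green channel exceeds 100.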

As a starting point, I wrote a Qt program that detects a colour (red) and sends commands via the serial port to the Arduino, which turns Wall-E's head to follow the object. I will extend it to track other things too, such as faces, certain objects and light sources.
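One way to turn the detected ball position into a head command is sketched below. It assumes a hobby servo with a 0 to 180 degree range and steps the head angle in proportion to how far the ball is from the centre of the frame. The gain, the dead band and the function name `nextHeadAngle` are hypothetical values for illustration, not the ones used in the original program; the resulting angle would then be sent to the Arduino over the serial port.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical tuning values; none of these come from the original program.
constexpr int kServoMin = 0;       // typical hobby-servo limits, in degrees
constexpr int kServoMax = 180;
constexpr int kDeadBandPx = 20;    // ignore small offsets so the head holds still
constexpr double kGain = 0.05;     // degrees of correction per pixel of error

// Returns the next head-servo angle, given the current angle and the ball's
// x coordinate in a frame that is frameWidth pixels wide.
int nextHeadAngle(int currentAngle, double ballX, int frameWidth) {
    assert(frameWidth > 0);
    const double error = ballX - frameWidth / 2.0;  // positive: ball right of centre
    if (error > -kDeadBandPx && error < kDeadBandPx) {
        return currentAngle;                         // close enough to centre
    }
    // Step proportionally toward the ball. The sign depends on how the servo is
    // mounted; here a larger angle is assumed to turn the head to the right.
    const int step = static_cast<int>(kGain * error);
    return std::max(kServoMin, std::min(kServoMax, currentAngle + step));
}
```

For a 640-pixel-wide frame, a ball at x = 520 is 200 pixels right of centre, so the head moves from 90 to 100 degrees. The dead band is one possible way to stop the head from hunting back and forth when the ball is already near the centre.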

I struggled a lot at the beginning, because every time I connected to the Arduino via the serial port, the video froze. I later realized it was a threading issue: while the program is waiting for data from the serial port (or reading, or writing? I am not sure), it blocks the thread. So I modified both the serial port class and the video class to run in their own threads.

Some people say it's not a good idea to use threads without formal training in the subject. And I did find it confusing to get started with threads, because some say we shouldn't subclass QThread and should instead move a worker object into a thread. But since the official documentation says we should subclass it, I followed that approach.
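The idea of moving blocking serial I/O off the video thread can be sketched with the standard library alone. The example below uses `std::thread`, `std::mutex` and `std::condition_variable` in place of `QThread`, so it is an analogue of the approach rather than the author's Qt code. `CommandSender` and its `writeFn` hook (which stands in for the real, possibly blocking, serial write) are hypothetical. The video loop calls `send()`, which only queues the command and returns, while a worker thread performs the writes in order.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>

// Keeps slow serial writes off the caller's thread. writeFn is a hypothetical
// hook standing in for the real serial-port write.
class CommandSender {
public:
    explicit CommandSender(std::function<void(const std::string&)> writeFn)
        : writeFn_(std::move(writeFn)), worker_([this] { run(); }) {}

    ~CommandSender() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        cv_.notify_one();
        worker_.join();  // the worker drains queued commands before exiting
    }

    // Called from the video loop: queues the command and returns immediately.
    void send(std::string command) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(command));
        }
        cv_.notify_one();
    }

private:
    void run() {
        std::unique_lock<std::mutex> lock(mutex_);
        for (;;) {
            cv_.wait(lock, [this] { return stopping_ || !queue_.empty(); });
            if (queue_.empty()) return;  // stopping and nothing left to send
            std::string command = std::move(queue_.front());
            queue_.pop();
            lock.unlock();               // never hold the lock during slow I/O
            writeFn_(command);
            lock.lock();
        }
    }

    std::function<void(const std::string&)> writeFn_;
    std::mutex mutex_;
    std::condition_variable cv_;
    std::queue<std::string> queue_;
    bool stopping_ = false;
    std::thread worker_;  // declared last so all members exist before it starts
};
```

Because the lock is released before `writeFn_` runs, a slow or stalled serial write delays only the worker thread, never the caller of `send()`, which is the property that keeps the video from freezing.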

I am still very new to Qt and OpenCV, having only started learning them a few days ago, and I was already thinking about multithreading. I now realize how crazy that was!

Out of frustration, I spent the whole weekend and my bank holiday just debugging the code. I dropped my diet routine, my exercise and my movies! But I won in the end. Although it is still not as good as I would like (tracking is quite slow and inaccurate, and the head shakes a lot), at least it works ^.^

I will look around for a better algorithm, and in the meantime I might add a few more features to the program, such as adjusting the video properties and improving the threading code...

See you soon...

=============================================


Assembling the eye. A hot glue gun is really helpful :)

=============================================

update 05/09/2012

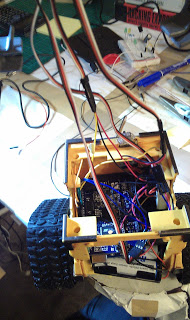

Finally I have some time to sit down and continue my project! Tonight I finished the inside layout and tidied up all the cabling. I also tested the servos and motor driver, and everything seems to be working fine!

But I just have to say how much I hate soldering right now!! I literally spent 2 hours trying to solder a switch to some long cables. On the first try, I found the cables were not making proper contact, so I put hot glue on them to hold them together, which didn't help. So I worked very hard to take the glue off, replaced all the cables with new ones, and soldered them again.

This time was better. I then installed it on the robot and hot-glued it in place, only to find that the switch itself isn't working properly: I occasionally have to push the plastic bit. I guess I might have damaged the switch when I was taking the hot glue off...

There is a reason I love programming so much! When I was at Uni doing projects, I always left all the soldering and cabling work to my lab partner, while I took care of the coding and maths. I guess I just don't have the hands for these things... :(

Information about the motor driver can be found here:

http://arduin0.blogspot.co.uk/2011/12/what-does-it-do-it-will-take-external.html

=============================================

update 08/09/2012

We can now control Wall-E from a PC.

We can also use it as a spy robot :)

With some modifications, we can see the video on a web interface, and control it over the internet.

=============================================

update 22/01/2013

Sorry I haven't made any progress on this robot; I am currently working on a few website projects.

But I have decided that in the next few updates I will use a Raspberry Pi instead of an Arduino as Wall-E's brain, which will make Wall-E more compact and let it react faster.

- Sensors / input devices: camera, sound, Bluetooth

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/wall-e-in-action