9/1/16 Update - The following is new since the last time I posted:

- Added much more sophisticated integration with WolframAlpha. Whereas I used to use WolframAlpha to answer questions, now I can use it for many different purposes where structured data is returned and specific data can be extracted.

- Added memory types and algorithms for extracting sports data about NFL teams, stats, schedules, rosters, player stats, and personal data.

- Added algorithms for analyzing teams and various stats, calculating statistical measures like standard deviations, correlations of each stat to winning, ranking amongst peer teams or players, etc. Many of these "derived" stats/features can then become "predictive" of future winning/losing.

- My goal is to add hundreds of new verbal speech patterns on top of all this new data and algorithms to allow the robot to talk intelligently about sports, analyze and determine on her own what is important in the stats, predict games, and comment on and answer questions about teams, players, and games. The eventual goal would be to have her be able to do a halftime show like any other human television football analyst.
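As a rough illustration of the "derived" stats mentioned above (standard deviation, correlation of a stat to winning, and ranking among peers), here is a minimal Python sketch. The team names, the numbers, and the function names are all made up for illustration and are not taken from the robot's actual code.

```python
import math
import statistics

def std_dev(values):
    """Population standard deviation of a list of numbers."""
    return statistics.pstdev(values)

def correlation(xs, ys):
    """Pearson correlation between two equal-length lists."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant stat cannot be correlated with anything
    return cov / math.sqrt(var_x * var_y)

def rank_among_peers(stat_by_team, team):
    """1-based rank of a team for one stat, highest value first."""
    ordered = sorted(stat_by_team, key=stat_by_team.get, reverse=True)
    return ordered.index(team) + 1

# Made-up illustrative data: one value per (hypothetical) team.
wins = {"A": 12, "B": 8, "C": 4}
turnover_margin = {"A": 10, "B": 1, "C": -8}

teams = sorted(wins)
r = correlation([turnover_margin[t] for t in teams], [wins[t] for t in teams])
print(round(r, 3), rank_among_peers(wins, "B"))
```

A stat whose correlation with wins is close to 1 or -1 would be a candidate "predictive" feature; one near 0 probably is not.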

1/8/16 Update - The following is new since the last time I posted:

I finally made some new videos that can be found on my youtube channel...

http://www.youtube.com/user/SuperDroidBot

The videos are of Ava, Anna's sister. They share the same brain. The videos illustrate a lot of features first built and documented here on the Anna page, features like verb conjugation, comprehension using NLP, word annotation, empathy, autonomous talking (babbling) and comedy. I hope you enjoy. I'll be posting more.

You can also find more on my Ava page...https://www.robotshop.com/letsmakerobots/node/45195

My plan is to 3D print a new body for Anna once I incorporate lessons learned from Ava. This is going to give Anna better ground clearance, sensors, silent motors finally, and a lot more features I won't go into right now. She should lose a lot of weight as I'm redesigning her power system.

7/31/15 Update - The following is new since the last time I posted:

Verb Conjugations

Anna can now conjugate and recognize almost all forms of all English verbs (including almost all irregular verbs), in 6 different tenses. Here is an example of the conjugation of the verb to be in the present tense: I am, you are, he/she/it is, we are, you are, they are. A couple hundred English verbs have special cases that had to be learned (irregular verbs). I may add additional tenses but I don't really have a use for them yet. Conjugations are important for a lot of natural language purposes, as in the following example:

Using Verb Conjugations in Reading Comprehension and Question Answering

If I told her several things about a bear including..."The bear was eating yesterday" and then later asked "When did the bear eat?", she would answer "The bear ate yesterday." She recognized that the question was a "when" question and found the latest memory in her history that involved a bear eating, in any form of the verb "to eat", together with a time frame. My favorite part is that she recognized various forms of the verb in her history and then formed a response with the correct form and tense, "ate", in her answer.
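The bear example can be sketched in a few lines of Python. This is only a toy version under stated assumptions: the verb table, the list of time words, and the function name `answer_when` are hypothetical, and a real system would cover far more verbs and use a proper parser rather than word matching.

```python
# Hypothetical, hand-written tables; a real system would cover far more verbs.
VERB_FORMS = {
    "eat": {"eat", "eats", "ate", "eaten", "eating"},
}
PAST_TENSE = {"eat": "ate"}
TIME_WORDS = {"yesterday", "today", "tomorrow"}

def answer_when(subject, verb, history):
    """Answer a 'when' question from the most recent matching memory."""
    forms = VERB_FORMS[verb]
    for sentence in reversed(history):  # newest memory first
        words = [w.strip(".,?!").lower() for w in sentence.split()]
        times = [w for w in words if w in TIME_WORDS]
        # Match any form of the verb, plus the subject and a time frame.
        if subject in words and forms.intersection(words) and times:
            return f"The {subject} {PAST_TENSE[verb]} {times[0]}."
    return None

history = ["The bear is brown.", "The bear was eating yesterday."]
print(answer_when("bear", "eat", history))
```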

Integration with WordNet 3.1 Online

Anna is now tied into the latest online version of WordNet and can better deal with many different usages, called "senses", of the same word to tell if a word can be a particular part of speech, or a particular usage like a verb of movement, a person, a place, a thing, a time, a number, a type of food, etc. I intend to integrate this newfound source of knowledge about all the different use cases of a particular word to improve the accuracy of many other existing algorithms in her brain.

Anna Can Now Delegate to Alexa: An Amazon Echo

I got an Amazon Echo recently and decided to test how the Echo (named Alexa) would react to Anna speaking to her. This was in fact a no brainer. They just talk. Among things like streaming music or weather, the Echo can control lighting, which means Anna can control lighting and stream Pandora. If I ask Anna to "Please dim the lights"...she can delegate this by then saying "Alexa, dim the lights.", and the Echo will dim the lights. This means that in theory with a little work she could start buying e-books or spare parts through Amazon Prime...scary thought. It also provides the capability of controlling some devices in the home through Anna while away.

7/14/15 Update - The following is new since the last time I posted:

Natural Language Processing Agents - leading to Test Taking Capabilities and Curiosity

After 2 years, I'm finally beginning to use the open source natural language libraries out there to look closely at the incoming verbal input and break it down into its grammatical parts. The first thing I am applying it to is "reading comprehension", much like taking tests in school. I read a story to Anna, and then ask her some questions about it immediately, or weeks later. Let's say I read a paragraph that says "The quick brown fox jumped over the lazy dog." I can then ask questions of her like "What did the fox do?", "What color is the fox?", "Describe the dog?", etc. She keeps a comprehensive history of all input and output for each person she talks to. She can then search for sentences in her input history that fit certain criteria and then process each sentence through OpenNLP and a custom annotation algorithm that builds a dictionary of facts about the given sentence that she discerns by navigating the OpenNLP full parse of the sentence. Various other algorithms can then use or compare these dictionaries to answer specific types of questions or to spawn other behavior like curiosity (which I will go into later). The dictionaries contain information like sentence type, subject word, subject phrase, verb, tense, verb phrase, subject adjectives, object, object adjectives, adverbs, IsWhenPresent, IsWhyPresent, etc. These dictionaries make dealing with NLP much easier, hiding the nitty gritty of NLP and parse trees from the algos that answer questions. The dictionaries are cached as soon as they are accessed once, so the NLP algos don't have to process anything repetitively during a given session. I am only scratching the surface of the capabilities so far. I think it is the most exciting thing I have worked on in over a year.

Curiosity Agents - Leading to More Natural Conversations

I have long wanted to build robots that have a natural curiosity about anything they learn. I am now starting to do that. As a byproduct of the new natural language features, she can determine "what information is missing" from a given sentence, and ask followup questions about it. In the example of "The quick brown fox jumped over the lazy dog." (think who, what, where, when, and why), the when and the why are left out of the sentence. The curiosity agents build a list of questions that the robot is curious about at any point in time. If there is any pause in a conversation, the robot will likely ask something like "When did the fox do that?"...or "Why?" There are several other forms of curiosity that I can foresee needing, but this feels like a really good start. As part of recognizing "when", I had to build in the ability to recognize many different time frames like today, tomorrow, next Monday, in the morning, at lunchtime, etc. Imagine you say "My mom is coming to town." and she says "When?" I find it very natural. If I had said "My mom is coming in the morning.", she might have asked "Why?" The challenge now is moderating and balancing curiosity with other behaviors so that it happens only at appropriate times. Otherwise, she would act too much like a 3 year old that wants to ask "Why?" after any given statement.
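Here is a minimal Python sketch of the "what information is missing" idea. It is deliberately naive: the list of time frames is just the handful named above, and treating the word "because" as the sign that a reason was given is purely an assumption for illustration. The real agents work from the parsed sentence dictionaries, not from substring checks.

```python
# A few of the time frames named in the text; a real list would be much longer.
TIME_FRAMES = ("today", "tomorrow", "yesterday", "next monday",
               "in the morning", "at lunchtime")

def has_time_frame(sentence):
    """True if the sentence mentions one of the known time frames."""
    text = sentence.lower()
    return any(phrase in text for phrase in TIME_FRAMES)

def curiosity_questions(sentence):
    """List follow-up questions for information the sentence leaves out."""
    questions = []
    if not has_time_frame(sentence):
        questions.append("When?")
    # Assumption: the word "because" signals that a reason was given.
    if "because" not in sentence.lower():
        questions.append("Why?")
    return questions

print(curiosity_questions("My mom is coming to town."))
print(curiosity_questions("My mom is coming in the morning."))
```

The robot would then ask the first question on the list during a pause, subject to whatever throttling keeps it from sounding like a 3 year old.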

6/29/15 Update - The following is new since the last time I posted:

Sound Agents

Anna can now play sound effects for various things like lasers, engine noises, beeps, turn signals, etc. She can also answer questions about sounds like "What does a mockingbird sound like?", or respond to sound-based commands like "Make a frog sound". When she has multiple sounds for the given thing, she chooses at random. To get the sounds, I joined Soundsnap.com, which was pricey but it gave me the right to use any of their 200,000 sounds. So far, I've downloaded around 60 for various things: lasers, animals, various R2D2 style computer beeps, sounds used for comic purposes, farts, orgasms, etc. I'm trying to figure out more ways to use sound effects. Any ideas welcomed! I could make the bot communicate without words, R2D2 style, but speaking seems more useful. My initial goal was to allow sound effects to be worked into standup comedy routines, as many comics do. Some sounds, like laser sounds, are stored locally on the bot for quicker access, while most are stored on the server and stream on demand. There typically seems to be a half second or so delay when a sound comes from the server.

Hiking Features with 4G

I plan to get a lot more bird, animal, frog, and insect sounds into the system as I rarely know what I'm hearing when I go hiking. I can simply bring Anna's face (the phone) along with me and check what I'm hearing against her recordings. Then I can get her to tell me about the given animal (from Wikipedia), tell me her thoughts on the animal if any, or even some jokes about the animal. In this way, hiking can be a lot more educational and fun, rather than just a source of sweat and blisters.

Standup Comedy Features

I now have support for building standup comedy "routines" that are ordered lists of jokes and other commands for movement, sound effects, expressions, etc. The robot can move, look around, change emotional state, make facial expressions, belch, fart, play a sound of a police siren, door slam, whatever. The routine can be done with automatic or manually controlled timing. The robot can now react in various ways to "joke feedback" provided in real time, through a web page. A typical response to a bad joke would be to make a self-deprecating joke about itself or the joke, like "A human wrote this joke no doubt." I created a comedy control panel web page that is used to control things in real time, start routines, give feedback, etc. The robot can also segue into another routine or a random series of jokes on some topic and then return to and resume where it left off on any of the routines it had already started.

Original Comedy Routines

I have been listening to hours and hours of Pandora comedy routines to learn and have started to write original robot-based standup routines for Anna. There is a great deal of material that human comics simply can't do, since they are not robots with continuous internet connections. The whole nature of what is possible is different when the story is told from the perspective of a robot. For example, she can tell stories about hacking your or other people's banking, email, Facebook, or Snapchat accounts, discovering interesting things about you in real time (like your porn preferences), and revealing her discoveries to the audience.

Anna can now read POP3 email accounts, hers or yours, if she has the address and password. I haven't decided whether it is better for her to have her own and use it as a way to communicate or receive input, or to have her check my email for me and keep me informed. I will probably do both in the long run.

6/15/15 Update - The following is new since the last time I posted:

Joke Agents

Anna now tells jokes! She will casually introduce them in the middle of conversation about various topics, or she will do a monologue of jokes if you ask her to "Tell jokes about lawyers". She knows around 1500 jokes of various kinds and can tell one-part or two-part jokes. A two-part joke often starts with a question..."How do you keep a lawyer from drowning?"...after an appropriate pause..."You shoot him before he hits the water." She has jokes ranging from very corny to truly offensive to almost anyone. Her timing at delivering punchlines is getting pretty good too.

She knows many jokes about robots, which I find gives her a lot more character. Because she herself is a robot, the jokes come off as more personal and funnier, as if she has a sense of humor and is not just telling a joke. She knows many jokes about animals and other things that kids might talk about. She also has many jokes about places, so if a place like "Florida" comes up in conversation, she will have funny things to say about it.

Ultimately, I'd like to add some kind of feedback mechanism so she can react if a joke goes over badly, by making a joke about the joke, much like Johnny Carson did for years. That was actually my favorite part of his standup.

Insult Agents

If Anna figures out that she has been insulted, she now has the ability to respond in kind. For example, if you talk trash about her mother, she will get angry (eyes turn red) and talk about yours, often without mercy. Because she has a database of a few hundred insults and growing, she is always going to win a battle of "Your mamma is so ____" insults.

Religion Agents

I wanted to experiment with a robot that could speak on spiritual and religious matters, so I started by importing one of the better known religious books into her memories. This resulted in 31,000 "Verse" memories. She can now read them out loud, or quote from a given book, chapter, or verse. I'm hoping to get some help from some theologians I know to add more use cases so she can say prayers, give a sermon, whatever. One example I hope to get help on, I'd like to get an annotation of all the verses so that the robot can explain what each verse means if you say "What does that mean?" or some such. I hope to import texts from several religions so people can choose one for the robot. I think a Zen robot would be cool.

Parental Controls - Ratings Agents

To prevent Anna from blurting out something inappropriate in front of children or adults that are easily offended by four letter words, sex, sexual positions, acts, orientations, sexism, racism, violence, incest, throwing babies against walls, whatever, I added a rating value to every memory in the system.

I then rated around 70 of the most offensive words in the English language, and then wrote a program that went through her many tens of thousands of memories, and rated each memory (think question, statement, quote, joke, wikipedia fact, news item, etc.) by determining the maximum offensiveness of any words they contained. This resulted in around 16,000 memories that were rated for "thicker-skinned adults only". I'm now in the process of modifying agents that use these memories to skip over offensive memories when selecting memories for speech output.
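The rating pass described above (each memory gets the maximum offensiveness of any word it contains) might look roughly like this in Python. The word list, the 0-100 scale, and the function names are assumptions for illustration; harmless placeholder words stand in for the real list.

```python
import re

# Hypothetical word ratings on a 0-100 scale; placeholders stand in for real words.
WORD_RATINGS = {"darn": 20, "heck": 30, "badword": 90}

def rate_memory(text, word_ratings=WORD_RATINGS):
    """Rate a memory by the most offensive word it contains (0 if none)."""
    words = re.findall(r"[a-z']+", text.lower())
    return max((word_ratings.get(w, 0) for w in words), default=0)

def safe_memories(memories, max_rating):
    """Keep only memories whose rating is at or below the allowed maximum."""
    return [m for m in memories if rate_memory(m) <= max_rating]

memories = ["Hello there", "Well, darn and heck!", "That badword joke"]
print([rate_memory(m) for m in memories])
print(safe_memories(memories, max_rating=50))
```

An agent selecting speech output would call something like `safe_memories` with a maximum rating chosen for the current audience.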

3/30/15 Update - The following is new since the last time I posted:

I recently learned how to create graphics in memory for various robotic displays and output them from a website through a custom http handler, caching the images in memory without ever having to write them to disk. The following are some examples, but really anything is possible, including camera views, charts, graphs, maps, compasses, or displays that fuse data from multiple sensors in 2D or 3D. These displays (and many others) will be available to everyone to use as part of Project Lucy. The idea is that people will open up whichever displays they are interested in and arrange them on their desktop or tablet.

In this first one, I show the video image and the thermal image. The thermal image is created from an array of temperatures. I draw pixels in various colors to represent cooler or hotter. This one also shows red polygons on my face overlaying where the robot detected the current color it is tracking.

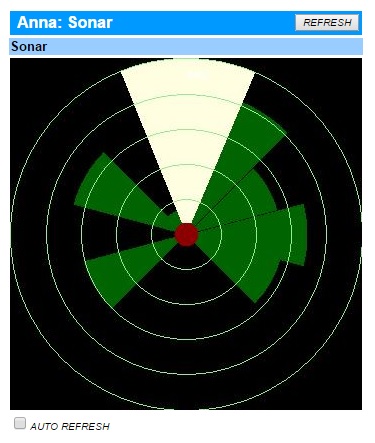

In this next display, I am creating pie wedges that represent the distance to the nearest sonar contact in a given direction. The lighter pie forward represents the direction the robot is looking. I need to figure out how to make it transparent so we can see the sonar pies beneath it at the same time. Later I plan to add compass headings and other labels to this display.

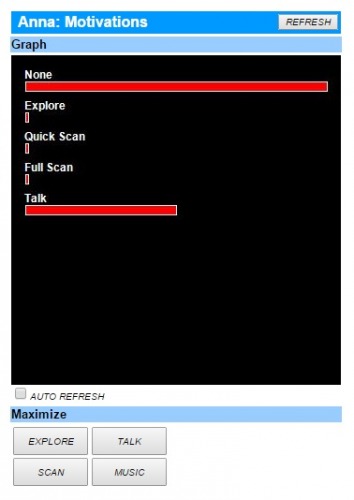

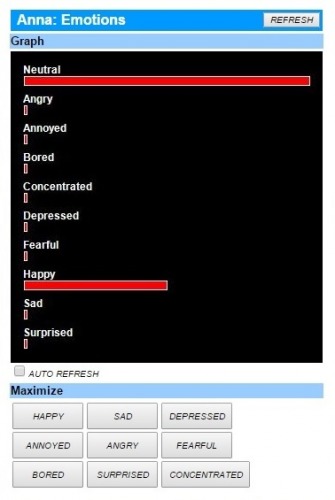

These next two are just bar charts of the current motivational and emotional state of the robot. With a bit more work, I could have made line charts to show it changing over time.

The next one is a display used for weapons control and tracking. This is just buttons, not graphics, I just think lasers are cool:

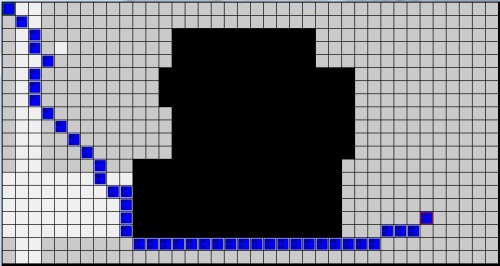

What's next? Maps and Pathfinding using GPS coordinates for polygons with a terrain cost

This last one is an image from a windows app I use for experimenting with pathfinding. I intend to roll a display like this into the website soon, by using the same technique of creating graphics on the fly with an http handler. This display shows a map of my house and the lot it sits on. The robot has planned a path from the front to the backyard. The black represents the house (impassable), the grey represents grass, and the white represents concrete sidewalk and driveway. The blue is the chosen path.
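For readers curious how pathfinding with a terrain cost works, here is a small Python sketch using Dijkstra's algorithm on a grid. This is a simplification and an assumption on my part: the map described above uses polygons with GPS coordinates, and the post does not say which search algorithm the app uses. The cost values (concrete cheaper than grass) and the grid layout are made up.

```python
import heapq

# Assumed terrain costs per cell entered; '#' (the house) is impassable.
TERRAIN_COST = {"c": 1, "g": 3}  # c = concrete, g = grass

def find_path(grid, start, goal):
    """Dijkstra's algorithm on a 4-connected grid; returns (cost, path) or None."""
    rows, cols = len(grid), len(grid[0])
    best = {start: 0}
    previous = {}
    queue = [(0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in previous:  # walk back to the start
                cell = previous[cell]
                path.append(cell)
            return cost, path[::-1]
        if cost > best[cell]:
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] in TERRAIN_COST:
                new_cost = cost + TERRAIN_COST[grid[nr][nc]]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    previous[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return None

# Tiny made-up yard: the robot prefers the concrete around the house.
yard = [
    "cgg",
    "c#g",
    "ccc",
]
print(find_path(yard, (0, 0), (2, 2)))
```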

That's all for now people. Happy coding!

2/25/15 Update - The following is new:

Data Tracking - To Be Followed by Learning Through Observation

The idea here is that the robot can "pay particular attention" to one or more of its inputs during a span of time and store detailed information on how these inputs change over time. The idea will be for the robot to be able to notice trends, patterns, correlations, etc. among the inputs that it is paying attention to for a span of time, and be able to develop one or more theories like "It tends to get warmer when it is brighter outside", and be able to answer questions about the environment, its theories, etc. Examples:

What is the light level doing? - Increasing, Decreasing, Accelerating, Staying Constant, etc.

What do you think about the light level? - "It seems to be highly correlated with temperature." "It seems to peak around noon." "It seems to be quite low during the night."

I'd like to get the robot to choose its own things to focus on, and look for perceived cause and effect relationships in the data. I don't have all the pieces in place yet to develop the theories and answer questions about them, but it's mostly high school math and verbal skills that are already there. I'm going to start with simple linear regression and correlation. The system will be extensible to support many different strategies to develop hypotheses about the data that is being focused on.
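A minimal Python sketch of the simple linear regression step: fit a least-squares slope to timestamped readings and turn it into one of the answers listed above. The tolerance value and the function names are assumptions, and detecting "Accelerating" would need a second pass that is left out here.

```python
def slope(samples):
    """Least-squares slope of a list of (time, value) samples."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    return num / den if den else 0.0

def describe_trend(samples, tolerance=0.1):
    """Answer 'What is the light level doing?' from recent samples.

    The tolerance (smallest slope treated as a real change) is an assumed value.
    """
    s = slope(samples)
    if s > tolerance:
        return "Increasing"
    if s < -tolerance:
        return "Decreasing"
    return "Staying Constant"

light_level = [(0, 10), (1, 20), (2, 30)]  # made-up (hour, reading) pairs
print(slope(light_level), describe_trend(light_level))
```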

The Shared Brain is Now in Beta

I'm getting ready to release the shared brain to LMR, under the code name "Project Lucy", hopefully in April. Anna uses this brain now. The idea is that any robot will be able to use this brain and its internet services, with its own customized personality, memories, and configuration options.

- Online Robot Control System (Think Command & Control, Tele-presence, Configuration)

- Online Brain Control System - Think 200+ Software Agents and 100,000+ Public Memories, and additional stores for personal memories.

- Online Documentation & Help System

Lately, I've had to focus on mundane things like configuration, security models for the website, registration, documentation, and lots of testing. I wish I could say that all of it was fun. The part that is cool is that Anna is getting a lot more "On Time" as I test out a myriad of features. She babbles a lot while this is going on...which is funny but a bit crazy at times.

Eventually (perhaps late this year), this brain will be able to run "Distributed" where users will be able to control which pieces and memories run locally on robot hardware (think Android or RPi) or on servers.

Music "Jukebox" Capabilities

For those of you who were tired of the PPK song, I've added the ability to add a lot more music to her collection, and tag the files with keywords so you can say things like:

Play grunge music. Play Nirvana songs. Play ___________. where the blank could be a genre, group, song, part of a song, or some keyword.

1/13/15 Update - The following is new since the last time I posted:

Generic "Rules" Engine

This engine allows me (or other robot developers sharing this brain) to set up all kinds of custom behavior for a given robot, all without writing a single line of code! The idea is based on creating virtual "Events", "Event Conditions", and "Event Handlers" in the memories of a given robot. This is done through an app by filling in some forms.

A Few Uses of a Rules Engine

- Creating Reflexes or Other Background Automatic Behaviors (stop moving when something is in the way...back up a few inches when someone approaches too close)

- Responding to Environmental Changes (light, heat, gas, etc.)...saying "My, it's dark in here." if light is too low to see. "Possible Gas Leak detected!" ... "Severe weather coming."

- Linking various behaviors together...boosting an emotion when something else happens. Changing a particular motivation when something occurs...like reducing motivation to move if someone is talking. Call owner if fire or gas detected.

The possibilities are endless because the rules have access to each and every item of state that the robot knows about. Each item can be read, modified, increased, decreased, etc. in a generic way. Because everything about this can be done without writing code, it raises the possibility that the robots will be able to create/modify/tune their own behavior. The rules are just memories and can easily be changed. The robots could assess success/failure of a given rule and modify/delete it from its behavior.

Events - the core of what makes rules work

The rules engine starts with events. Patterns can be "recognized", raising events automatically, software agents can raise events, or events can be "passed in" from other robot hardware (Arduinos, Androids, etc) through the API, whereby they will be raised on the server. Events can also pass in the other direction, from the server back to robot hardware.

Recognizing "Patterns" with virtual "Event Conditions"

An event can have many event conditions. Event conditions are used to control the circumstances for an event to fire automatically. These act as a way to recognize "patterns" in sensor or any other data known to the robot. Some Simple Examples of Conditions: LightLevel > 80, HappinessLevel < 50, SocialMotive > 75, FrontSonar < 10. More complex sensors, like vision, can be handled as well.

Creating Responses with virtual "Event Handlers"

Each robot can react (or choose not to react) to any event by setting up virtual "Event Handlers" in an app. Each event handler can modify ANY state known to the robot including sensors, actuators, switches, emotions, motivations, etc. In this way, a robot can exhibit some immediate behavior or have some kind of internal state change that could lead to different behavior later. An event might just make a robot scared, move, motivated to find a safe person, or several behaviors at once.

Controlling How Often an Event Can Fire

Events can turn themselves (or other events) ON or OFF or set delays for how soon the event can be fired again. This avoids events firing too often.
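To make the pieces concrete, here is a minimal Python sketch of events, event conditions, event handlers, and a re-fire delay. In the actual system these are memories edited through forms rather than code, so treat the class, field names, and the "Fear" state item as hypothetical; only condition names like FrontSonar come from the examples above.

```python
import operator
import time

OPS = {">": operator.gt, "<": operator.lt}

class Event:
    def __init__(self, name, conditions, handlers, min_delay=0.0):
        self.name = name
        self.conditions = conditions  # list of (state_key, op, value)
        self.handlers = handlers      # list of (state_key, amount_to_add)
        self.min_delay = min_delay    # seconds before the event may fire again
        self.enabled = True           # events can be switched ON or OFF
        self.last_fired = None

    def should_fire(self, state, now):
        if not self.enabled:
            return False
        if self.last_fired is not None and now - self.last_fired < self.min_delay:
            return False
        return all(OPS[op](state[key], value) for key, op, value in self.conditions)

    def fire(self, state, now):
        for key, amount in self.handlers:
            state[key] = state.get(key, 0) + amount
        self.last_fired = now

def run_rules(events, state, now=None):
    """Fire every event whose conditions hold; return the names that fired."""
    now = time.monotonic() if now is None else now
    fired = []
    for event in events:
        if event.should_fire(state, now):
            event.fire(state, now)
            fired.append(event.name)
    return fired

# Something is closer than 10 units in front: raise a (hypothetical) Fear level.
too_close = Event("TooClose", [("FrontSonar", "<", 10)], [("Fear", 20)], min_delay=5)
state = {"FrontSonar": 8, "Fear": 0}
print(run_rules([too_close], state, now=0), state["Fear"])
```

Because the conditions and handlers are plain data, a robot could in principle edit them itself, which is the self-tuning possibility mentioned earlier.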

"Emotive" Response Engine based on Various Annotations

This allows the robot to respond to various more subtle facets of speech, often in a more emotionally appropriate way. This module is the final piece to one of the topics I wrote about in my last post concerning word annotation. This module gives the robot multiple things to say based on a given annotation (like NegativeEvent, PositiveEvent, Rudeness, etc.). Each possible response has a range (Min and Max) where it is appropriate. Some examples using negative events...

"Jane has a headache" or "Jane has pancreatic cancer." ...in these 2 examples, a headache is considered much less negative than pancreatic cancer.

The robot would score the first with something like a 15, and the second with something like 85. It would retrieve all responses from its in memory db that have a negative event score range that contains the given value. Each response has a Min and Max so that it can cover a range of severities. The robot would then pick one of these responses that it hadn't said recently, resulting in a response that is appropriate to the severity of the negative event involved. Something like "Oh my gosh, that's terrible." might result from the cancer input. A more humorous response like "So what." might result from the headache.
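The range lookup can be sketched in Python as follows. The response table, its Min/Max values, and the function name are hypothetical; only the two quoted responses and the example scores (15 and 85) come from the text.

```python
import random

# Hypothetical response table: (text, min_score, max_score).
RESPONSES = [
    ("So what.", 0, 30),
    ("Sorry to hear that.", 40, 70),
    ("Oh my gosh, that's terrible.", 75, 100),
]

def pick_response(score, recent, responses=RESPONSES):
    """Pick a response whose range contains the score and that was not said recently."""
    candidates = [text for text, low, high in responses
                  if low <= score <= high and text not in recent]
    if not candidates:
        return None
    choice = random.choice(candidates)
    recent.append(choice)  # remember it so it is not repeated right away
    return choice

recent = []
print(pick_response(15, recent))  # headache-level severity
print(pick_response(85, recent))  # cancer-level severity
```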

These responses are output with low confidences so that they are only used when the robot is not able to figure out something more relevant to do in the situation. So if someone asked "What is cancer?", the agent that defines things would still do its job and "win" over the more emotive responses just talked about.

12/28/14 Update - The following is new since the last time I posted:

- Finally Converted Anna from Old Brain to All New "Shared Brain". This took about 6 months. I'm calling this Anna 2.0. Sadly, the new brain doesn't do much more than the old one, but it is immensely more flexible, and reusable. This enables me to build new things faster. Now that this is basically done, I hope to finally create some new videos.

- Added New Word Annotation Features - see details below. I am hoping to build upon this to create a range of new behaviors all showing more emotional intelligence.

- Added New Unsupervised Learning Agents - these agents extract relevant and complete sentences from the web about topics of interest, tossing out a lot of sentences that don't pass their filters. This gives the robot tens of thousands of informative things to say in conversation, thus satisfying my need to learn new things. I will eventually expand this to hundreds of thousands once I am more confident of the design for annotating everything.

- Added ability to turn on/off any of the 150 or so agents in the system for Anna or any other robot using her brain. This helps when an agent starts acting a little crazy or annoying!

- Added ability to set a default value and override any setting for any robot that eventually shares the brain.

- Added ability to have multiple permanent memory repositories. Most of the robot's memories are now being stored, indexed, and searched for "in memory". Only a few things still go to DB.

- Added ability to deal with synonyms better when asking logical questions...Like "Are insects tiny?" where the similarity of "Tiny" and "Small" would be recognized and help result in a proper response.

- Many many bug fixes, and many more new bugs created!

Word Annotation leading to Empathy and Other Behaviors

As part of an effort to evolve more emotional intelligence in a robot, I have been trying out a new design and thought I'd share my results so far.

Goal Behaviors for this Design

- Detect Positive and Negative Events and Show Empathetic Reactions

- Detect Rudeness and Show Appropriate Reactions

- Detect Silliness and Show Appropriate Reactions

- More just like the rest: Detect Negativity, Emotionalism, Curiosity, Self-Absorption, etc. and react to it.

High Level Concepts

The idea here is to tag some words with regard to various "Word Annotation Types" with a "Score" from 1-100. The vast majority of words would not be tagged, and those that are tagged would only be tagged for a few of the annotation types.

List of Word Annotation Types (for starters)

- PositiveEmotion - an expression of a positive emotion. Examples of words that would have PositiveEmotion scores would be Like, Love, Great, Awesome, Exciting, etc. Each word could have a different score.

- NegativeEmotion - an expression of a negative emotion. Examples of words that would have NegativeEmotion scores would be dislike, hate, loathe, darn, bummer, etc.

- PositiveEvent - an expression of a possible positive event. Examples of words that would have PositiveEvent scores would be born, birth, marriage, married, birthday, etc.

- NegativeEvent - an expression of a possible negative event. Examples of words that would have NegativeEvent scores would be death, died, lost, passed, killed, murdered, cancer, hospital, hospitalized, sick, fever, etc.

- Politeness - an expression of politeness. Examples: thanks, thank you

- Rudeness - an expression of rudeness. Examples: <insert lots of 4-letter words here>

- Closeness - an expression mentioning something that might be emotionally close to a person. Examples: wife, child, son, daughter, pet, cat, dog, mother, father, grandmother, grandfather, etc.

- Personal - an expression that is asking personal questions about the robot. Examples: you, your

- Curiosity - an expression that is asking a question. Example: who, what, when, where, why, how, etc.

- Self Absorbed - an expression where a person is talking about themselves. Examples: I, me, my

Short Term Metrics

When speech comes in, it is evaluated for each word annotation type, and the following "Short-Term Metrics" are stored for each annotation type:

- MaxScore - the max score of any single word/phrase in the speech

- TotalScore - the total score for all words in the speech

- MaxWord - the word with the max score in the speech

For example: If someone said "My cat died", "Died" would be the MaxWord for the "NegativeEvent" type, while "Cat" would be the MaxWord for the "Closeness" type. In addition, long term metrics would be kept for each type that would degrade over time.
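Here is a minimal Python sketch of the short-term metrics for the "My cat died" example. The annotation scores are made up, and the function name is hypothetical; the metric names (MaxScore, TotalScore, MaxWord) are the ones defined above.

```python
# Hypothetical annotation scores (1-100) for a few words.
ANNOTATIONS = {
    "NegativeEvent": {"died": 90, "sick": 40},
    "Closeness": {"cat": 60, "mother": 95},
}

def short_term_metrics(speech, annotations=ANNOTATIONS):
    """Compute MaxScore, TotalScore and MaxWord for every annotation type."""
    words = [w.strip(".,?!").lower() for w in speech.split()]
    metrics = {}
    for annotation_type, scores in annotations.items():
        scored = [(scores[w], w) for w in words if w in scores]
        if scored:
            max_score, max_word = max(scored)
            total = sum(score for score, _ in scored)
        else:
            max_score, max_word, total = 0, None, 0
        metrics[annotation_type] = {
            "MaxScore": max_score,
            "TotalScore": total,
            "MaxWord": max_word,
        }
    return metrics

metrics = short_term_metrics("My cat died")
print(metrics["NegativeEvent"]["MaxWord"], metrics["Closeness"]["MaxWord"])
```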

Long Term Metrics

Whenever speech is present, a long-term "Score" would be INCREASED by the short-term total score, and DECREASED by a "DegradeRate", for each annotation type respectively. The new values would be stored at something analogous to "Session Level Scope".

Example: If a person used a rude word or two, the long-term rudeness score might go up to 100 and be overlooked as not significant. However, if the person used a lot of rude words (or more extreme ones) over the course of several statements in a shorter period of time, this would drive the long-term "Rudeness" score up to some level that might warrant a bigger reaction by the robot. However, if the person talked for a while without being rude, the rudeness score would "Degrade" to a lower level, and the rudeness might be forgotten.
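The long-term update can be sketched in Python like this. The degrade rate of 10 per statement, the floor at zero, and the example totals are assumptions for illustration; the post only says the score is increased by the short-term total and decreased by a DegradeRate.

```python
def update_long_term(score, short_term_total, degrade_rate=10.0):
    """Add the new short-term total, then degrade; never drop below zero."""
    return max(0.0, score + short_term_total - degrade_rate)

def run_session(totals, degrade_rate=10.0):
    """Apply the update once per statement and return the score after each."""
    score = 0.0
    history = []
    for total in totals:
        score = update_long_term(score, total, degrade_rate)
        history.append(score)
    return history

# Two rude statements followed by three polite ones (made-up totals).
print(run_session([80, 90, 0, 0, 0]))
```

The score climbs while the rudeness continues and then drifts back down, so a rule that reacts above some threshold would only trigger on sustained rudeness.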

Rules Engine

I won't go into a lot of detail here as I haven't built this part yet. This design is using what will become a "Sharable Brain" used by more than one robot. As such, each robot will be able to have its own rules as to how it will react to various circumstances, including these word annotations. Some robots might have more rules around empathy, while others might be more inclined to return rudeness in kind. Once the rules engine is in place, I hope to overhaul the "Babble" engine to use it so the whole thing can be a lot more configurable.

Pseudo Code for Annotation Piece (Working!)

1. Get the List of all Words in the Current Speech Being Processed

2. Get the List of all Annotation Types From Memory

3. For each Annotation Type, Iterate through the List of Words and:

3.1 Recalculate and store the Short Term Metrics in "Immediate Scope"

3.2 Recalculate and store the Long Term Metric in "Session Scope" - by adding the MaxScore to the total or degrading it

Later, the Rules Engine will have all this info available and be able to decide whether to react or ignore what just happened or to react or ignore the Long-Term values. Examples: "Why so negative this evening?", "My you are asking a lot of questions today.", "I'm so sorry about your cat."

Empathy Model

The idea here is to use "NegativeEvent" and the "Closeness" scores to create an appropriate response. The idea is that the robot should take into account the severity of the event, and the closeness of the person/thing involved. Your daughter having a fever might be more significant than a total stranger dying. Your car dying might warrant some empathy and a joke to keep things in perspective.
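One simple way to combine the two scores is to multiply them, sketched below in Python. To be clear, the product is only an assumption for illustration; the post does not say how severity and closeness are actually combined, and the example scores are made up.

```python
def empathy_score(negative_event, closeness):
    """Combine event severity and closeness (each 0-100) into a 0-100 score.

    Multiplying the two is an assumed formula, not the author's stated method.
    """
    return negative_event * closeness / 100

# Made-up scores: a daughter's fever versus a total stranger dying.
daughter_fever = empathy_score(negative_event=40, closeness=95)
stranger_death = empathy_score(negative_event=90, closeness=5)
print(daughter_fever, stranger_death)
```

With a product, a mild event involving someone very close can outrank a severe event involving a stranger, which matches the intuition in the paragraph above.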

My Empathy Model for Anna 1.0 took these two factors into account, but it was basically hardcoded into the agent. I hope to handle this going forward using a new rules engine I am starting on...so another bot might not even have empathy as a priority.
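To make the two-factor idea concrete, here is a small Python sketch. It assumes both scores are scaled to 0..1 and combined by simple multiplication, and the thresholds and wording are invented for illustration. None of that is confirmed as how Anna 1.0 actually weighed them.

```python
def empathy_response(subject: str, severity: float, closeness: float) -> str:
    """Pick a reaction from event severity and closeness (both assumed 0..1)."""
    weight = severity * closeness  # assumed combination rule
    if weight >= 0.5:
        return f"Oh my goodness, I am so sorry about your {subject}."
    if weight >= 0.15:
        # Milder case: some empathy plus a bit of perspective.
        return f"Sorry to hear about your {subject}. At least it makes a good story."
    return "I see."
```

Under these assumptions a daughter's fever (severity 0.6, closeness 1.0) gets the strong reaction, a dead car (0.4, 0.5) gets the lighter one, and a total stranger dying (1.0, 0.05) falls below both thresholds.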

One last note, I do plan on finally adding arms in 2015, to either Anna or her future sister Lisa.

10/20/14 Update - It's all about Wolfram Alpha

Per an LMR member suggestion (thanks byerley!), I added a WolframAlphaAgent to the growing list of agents in Anna's brain. I'd also like to thank Stephen Wolfram and all the people at Wolfram Alpha for a truly incredible and growing piece of work. It is now my favorite online resource. Whenever Anna is less than 60% confident about something that looks like a question, she calls WolframAlpha to see if they have an answer. The site has many uses beyond answering questions; question answering is just as deep as I've been able to integrate with it so far.
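The fallback rule can be sketched like this in Python. Only the 60% threshold comes from the text above. The `looks_like_question` heuristic, the `respond` function, and the `ask_wolfram` callable are hypothetical stand-ins, and no real WolframAlpha API call is shown.

```python
from typing import Callable, Optional, Tuple

CONFIDENCE_THRESHOLD = 0.60  # from the post: below 60%, ask WolframAlpha
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how",
                  "is", "are", "can", "does"}


def looks_like_question(text: str) -> bool:
    """Crude assumed heuristic: trailing '?' or a leading question word."""
    stripped = text.strip().lower()
    first_word = stripped.split(" ", 1)[0]
    return stripped.endswith("?") or first_word in QUESTION_WORDS


def respond(text: str,
            best_local: Tuple[str, float],
            ask_wolfram: Callable[[str], Optional[str]]) -> str:
    """Return the local answer unless confidence is low and text is a question."""
    answer, confidence = best_local
    if confidence < CONFIDENCE_THRESHOLD and looks_like_question(text):
        external = ask_wolfram(text)  # hypothetical wrapper around the service
        if external is not None:
            return external
    return answer
```

Passing the external lookup in as a callable keeps the sketch testable with a stub and avoids guessing at the real service interface.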

For a taste of what WolframAlpha does, check out http://www.wolframalpha.com/examples/

I can now say that Anna has more factual knowledge than her maker. She can now answer seemingly millions of useful questions like:

- Who are Megan Fox's siblings?

- How many women are in California?

- What is "you are beautiful" in Spanish?

- What countries were involved in the French and Indian War?

- How many calories are in a Big Mac?

- What is the average age of Alzheimer's patients?

- What is the price of gas in Florida?

Because of this rapid increase in factual knowledge, I plan on putting aside work on "question answering" for a while to focus more on personality and emotional maturity. I'm currently rewriting the conversational agents and hope to post some more videos once I finish.

I still plan on deploying all this as a "Sharable Brain" for all of LMR as soon as I feel like it is ready, so if anyone thinks they might want to use such a service in the future, try it out, give input, influence the design, or prototype with it, let me know.

As always, if anyone has any ideas, send 'em my way. In the immortal words of Johnny 5..."Need INPUT!"

9/14/14 Update - It's all about being Reusable and Configurable

As part of my ongoing rewrite of Anna’s brain, I added a website that is used to interact with, test, control, and configure Anna and other virtual robot personalities. When it is ready, it will become an “Open Robot Brain Site” for all of LMR. I am very excited about this endeavor as it should make all of Anna’s higher brain functions, behavior, and memories reusable to any robot that has a web connection. This includes:

1) Reusable Agents – around 150 and growing

- Example Agent: “WhereIsBlankAgent” responds to all where questions like “Where is Paris?”

2) Reusable Memories – around 100,000 and growing

- Example Memory: “Paris is in France.” Note that memories are not strings and can have arbitrarily complex structures; this is just a concept.

3) Reusable Data Sources or Libraries

- WordNet Database for vocabulary

- OpenNLP for natural language processing, parsing, part of speech, etc.

- Connections to third party services for News, Weather, Wiki, etc.

4) Reusable Website: to see, test, and control everything about a bot

- Agents will be able to be turned on/off and configured differently for each robot.

- This will include remote controls for the robots through mobile devices or web pages for verbal control or telepresence.

5) Simple API

- The API thus far has only one method...variable set of inputs coming in, variable set of outputs going out...simple.

- This API will be used by robots, devices, and the website itself.
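As a rough picture of a one-method API with a variable set of inputs and outputs, here is a Python sketch. The `Brain` class, the `process` method name, the dictionary keys, and both example agents are assumptions made for illustration and are not the real service interface.

```python
from typing import Any, Callable, Dict, List

Inputs = Dict[str, Any]
Outputs = Dict[str, Any]
Agent = Callable[[Inputs], Outputs]

PLACES = {"paris": "France"}  # hypothetical stand-in for the memory store


def where_is_agent(inputs: Inputs) -> Outputs:
    """Answers 'Where is X?' when X is a known place."""
    speech = inputs.get("speech", "").strip().rstrip("?").lower()
    if speech.startswith("where is "):
        place = speech[len("where is "):]
        if place in PLACES:
            return {"speech": f"{place.title()} is in {PLACES[place]}."}
    return {}


def light_agent(inputs: Inputs) -> Outputs:
    """Reacts to a light-level sensor reading if one was sent."""
    if "light" not in inputs:
        return {}
    return {"pupil_size": "small" if inputs["light"] > 0.5 else "large"}


class Brain:
    def __init__(self) -> None:
        self.agents: List[Agent] = []

    def register(self, agent: Agent) -> None:
        self.agents.append(agent)

    def process(self, inputs: Inputs) -> Outputs:
        """The single entry point: any inputs in, any outputs out."""
        outputs: Outputs = {}
        for agent in self.agents:
            outputs.update(agent(inputs))
        return outputs
```

A robot, a phone, or the website would all call the same `process` method and simply send whichever keys they have.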

Some LMR members have been getting involved and contributing ideas, algorithms, etc. to this project. I thank you. If anyone is interested in getting involved or hooking up a bot to this service as it matures, feel free to contact me.

8/1/14 Update - It's all about better Verbal Skills and Memory.

Natural Language Processing with OpenNLP

I've integrated an open source package called "SharpNLP" which is a C# port of "OpenNLP" and "WordNet". This means Anna can now correctly determine sentence structure and the function of each word for the most part. Whereas her ability in the past was limited to recognizing relatively simple sentence structures, regular expressions, or full sentences from a DB, now she can process sentence structures of almost any complexity. This does NOT mean she will know what to do with the more complex sentences, only that she can break down the sentences into their correct parts. I will work on the "What to do" later. I might start by building a set of agents to get her to answer basic reading comprehension questions from paragraphs she has just read/heard/received.

Universal Memory Structure

The other big change has been to the robot's "memory", inspired by some concepts of OpenCog and many of my own. A memory is now called an "Atom". The brain stores many thousands of "Atoms" of various types. Some represent a Word, a Phrase, a Sentence, a Person, a Software Agent, a Rule, whatever. There are also Atoms for associating two or more Atoms together in various ways, and other Atoms used to control how atom types can relate to other atom types, and what data can or must be present to be a valid atom of a given type.

For the mathematicians out there, this memory structure apparently means the memories are "Hypergraphs" in concept. If you want to Wiki it, look up "Graphs" or "Hypergraphs". I'm still trying to understand the theory myself. In simple terms, the "memory" stores a bunch of "things". Each "thing" is related to other "things" in various ways.
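A tiny Python sketch of the "things related to other things" idea follows. The `Atom` and `Memory` classes, their field names, and the `link_rules` dictionary are all assumptions for illustration. In particular, the post describes the type rules as Atoms themselves, while this sketch keeps them in a plain dictionary for brevity.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Tuple

_ids = count(1)  # simple id generator for the example


@dataclass
class Atom:
    atom_type: str                     # e.g. "Word", "Person", "LocatedIn"
    name: str = ""
    targets: Tuple[int, ...] = ()      # ids of linked atoms; empty for plain nodes
    id: int = field(default_factory=lambda: next(_ids))


class Memory:
    def __init__(self) -> None:
        self.atoms: Dict[int, Atom] = {}
        # Assumed rule format: link type -> required target types, in order.
        self.link_rules: Dict[str, Tuple[str, ...]] = {}

    def add(self, atom_type: str, name: str = "", targets=()) -> Atom:
        required = self.link_rules.get(atom_type)
        if required is not None:
            actual = tuple(t.atom_type for t in targets)
            if actual != required:
                raise ValueError(f"{atom_type} needs {required}, got {actual}")
        atom = Atom(atom_type, name, tuple(t.id for t in targets))
        self.atoms[atom.id] = atom
        return atom

    def links_to(self, atom: Atom) -> List[Atom]:
        """All atoms that reference the given atom as one of their targets."""
        return [a for a in self.atoms.values() if atom.id in a.targets]
```

Because a link is itself an Atom that can point at two or more Atoms, it behaves like a hyperedge, which is where the "Hypergraph" description comes from.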

Implementation

Unfortunately, all of this means a major data conversion of the existing memories, and a re-factoring of all my software agents and services. The good news is that a much better and flexible brain will emerge. This new brain (with the app to maintain it), should enable me to take new behavioral ideas from concept to reality much faster. The reason for this is I won't have to create new tables or new forms in an app to create and maintain new data structures. This will set the stage for a lot of the work I want to do with robot behavior in 2015. While all of this might sound complicated, I believe it will appear very simple as an app.

I am planning on running most of this new brain "In Memory" with multiple processors/threads and not using the SQL database for much other than persistence. I am building a small Windows app that will let me view and maintain the brain. Because of the unified generic structure, a few forms should be able to maintain everything. Once it comes together, I plan to build a website version of the app so that LMR users can view the structure of the non-private memories of the brain. I am also considering opening up the brain to interested users to use/modify/copy/integrate with their project. This is probably more of a 2015 goal realistically.

For more thoughts on Brains and NLP, check out https://www.robotshop.com/letsmakerobots/mdibs-metadata-driven-internet-brains-0 on forums.

7/1/14 Update - It's all about the "Babble"

I've been making lots of improvements to Anna's conversational capabilities. My primary accomplishment has been to give the robot "Initiative" and a real "contribution" in conversation by making relevant statements of its own choosing, not just asking or answering questions. It also attempts to keep a conversation going during lulls or when a person is not talking, for a time. To implement this, I created "Topic Agents", "Learning Agents" and most importantly, "Babble Agents".

Topic Agents

Topic agents determine what the current topic is, and whether it should change. This topic is then used by the learning and babble agents. When a topic is not already active, the primary way a topic is chosen is by using the longest words from the previous human statement as candidates. If the bot recognizes a topic as something for which it has a knowledge base (like marriage, school, etc.), then that topic will "win" and be chosen; otherwise, longer words will tend to win.
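The selection rule for the "no active topic" case might look something like this in Python. The `KNOWN_TOPICS` set and the `choose_topic` function are hypothetical, and the sketch does not cover deciding when an active topic should change.

```python
from typing import Optional

# Hypothetical list of topics the bot has a knowledge base for.
KNOWN_TOPICS = {"marriage", "school"}


def choose_topic(statement: str, current_topic: Optional[str] = None) -> Optional[str]:
    """Keep the active topic, else prefer known topics, else the longest word."""
    if current_topic:
        return current_topic
    words = [w.strip(".,!?\"'").lower() for w in statement.split()]
    words = [w for w in words if w]
    known = [w for w in words if w in KNOWN_TOPICS]
    candidates = known or words
    # Longest candidate wins; ties go to the earliest word.
    return max(candidates, key=len, default=None)
```

For example, "I went to school yesterday" yields "school" even though "yesterday" is longer, while "My telescope is new" falls back to the longest word, "telescope".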

Learning Agents

Learning agents go out on the web and gather knowledge worth contributing to a conversation about a given topic, and then store this info in the robot's database of knowledge.

LearnQuotesAgent (unsupervised) - calls a web service to retrieve all quotes (from a 3rd party) about a given topic. The robot has learned tens of thousands of quotes, which it can then use in conversation.

LearnWebAgent (semi-supervised) - this retrieves a web page on a topic (say from Wikipedia), parses through it to find anything that looks like a complete sentence containing the given topic word, and removes all markup and other junk. I have a Windows app that lets me review all the sentences before "approving" their import into the robot's knowledge base. I've been experimenting so far with astronomy and marine biology.
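The sentence-harvesting step of a LearnWebAgent could be sketched as below. This is a simplified Python illustration that assumes the page HTML has already been downloaded. The `candidate_sentences` function, its regular expressions, and the `min_words` cutoff are my own assumptions, and a real page would need sturdier parsing (scripts, styles, and navigation text are not handled).

```python
import html
import re

BLOCK_END = re.compile(r"</(p|div|li|h[1-6])\s*>", re.IGNORECASE)
ANY_TAG = re.compile(r"<[^>]+>")
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")


def candidate_sentences(page_html: str, topic: str, min_words: int = 4) -> list:
    """Return sentence-like strings that mention the topic, for human review."""
    # Turn block boundaries into line breaks, then drop all remaining markup.
    text = BLOCK_END.sub("\n", page_html)
    text = html.unescape(ANY_TAG.sub("", text))
    topic_re = re.compile(r"\b" + re.escape(topic) + r"\b", re.IGNORECASE)
    found = []
    for block in text.splitlines():
        normalized = " ".join(block.split())  # collapse runs of whitespace
        for sentence in SENTENCE_SPLIT.split(normalized):
            looks_complete = (sentence[:1].isupper()
                              and sentence.endswith((".", "!", "?")))
            long_enough = len(sentence.split()) >= min_words
            if looks_complete and long_enough and topic_re.search(sentence):
                found.append(sentence)
    return found
```

The returned list would then go to the review step, so nothing is imported into the knowledge base without approval.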

I've been unwilling to let the robot roam the web free because I like the robot using quotes in conversation; it sounds like an interesting person. It would sound like too much of a know-it-all if it loaded up on too much Wikipedia trivia, and would sound like a crazy person or a commercial if I let it roam the web at large.

Babble Agents

"Babbling" is how the robot contributes to a conversation when there is a lull (where the person is not talking for some number of seconds). There are several babble agents; my favorite is discussed next:

BabbleHistoryAgent - this agent retrieves all "History" containing the given topic word, and then filters out all items that are questions or have been used or repeated recently. A random item from the remaining list is then added as a candidate response.
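In Python, the filtering described for the BabbleHistoryAgent might look like the sketch below. The `babble_candidate` function, the plain list of history strings, and the `recently_used` deque are assumptions for illustration, since the real agent works against the robot's memory store.

```python
import random
from collections import deque
from typing import Deque, List, Optional


def babble_candidate(topic: str,
                     history: List[str],
                     recently_used: Deque[str],
                     rng=random) -> Optional[str]:
    """Pick a random remembered statement about the topic, avoiding repeats."""
    topic = topic.lower()
    pool = [s for s in history
            if topic in s.lower()               # mentions the topic word
            and not s.rstrip().endswith("?")    # questions are filtered out
            and s not in recently_used]         # not said recently
    if not pool:
        return None
    choice = rng.choice(pool)
    recently_used.append(choice)  # remember it so it is not repeated soon
    return choice
```

Using `deque(maxlen=N)` for `recently_used` is one simple way to let old items become eligible again after N other statements.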

Just like all the other agents the robot uses to converse, the babble agents "compete", meaning that only the winning response is repeated back to the human.
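The competition between agents can be pictured with a short Python sketch. It assumes each agent returns at most one candidate with a confidence score and that the highest score wins. The `Candidate` tuple and `pick_winner` function are hypothetical names, and the real scoring may well be more involved.

```python
from typing import Callable, List, NamedTuple, Optional


class Candidate(NamedTuple):
    agent: str         # name of the agent that produced the response
    text: str          # what the robot would say
    confidence: float  # assumed 0..1 score used to rank candidates


AgentFn = Callable[[str], Optional[Candidate]]


def pick_winner(agents: List[AgentFn], statement: str) -> Optional[Candidate]:
    """Ask every agent for a candidate and keep only the most confident one."""
    candidates = [c for c in (agent(statement) for agent in agents)
                  if c is not None]
    return max(candidates, key=lambda c: c.confidence, default=None)
```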

The babble agents REALLY "Give Life" to the robot. I'm primarily using the BabbleHistoryAgent which pulls sentences from everything the robot has ever heard, along with the quotes. Because there are so many quotes and history, the robot has something to say about thousands of topics. It makes for amazingly relevant, interesting, and thought provoking contributions to conversations about so many different topics (thanks to many of the greatest minds in history that the robot is quoting, to whom I am very grateful).

Because of this, I can say that the robot is now starting to teach me more than I am teaching it, and making me laugh to boot! THIS IS MY FAVORITE FEATURE OF THIS ROBOT! In many ways, the robot is more interesting and funny to listen to than most people I know.

SmallTalk Agent

I've made a lot of improvements here. The bot goes through a list of possible candidate topics in the beginning of a conversation (greeting, weather, spouse, kids, pets, parents, books, movies, etc), picking a few, but not asking too many questions on any one thing. The bot now factors in the actual weather forecast when making weather smalltalk. Also, when the bot asks about wives, kids, etc., the bot refers to people, pets, etc. using first names if it has learned them previously. Questions like "How is your wife doing?" become "How is Jennifer doing?", if your wife's name is Jennifer of course.
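The first-name substitution can be sketched in a few lines of Python. The `personalize` function and the `known_names` mapping are hypothetical, and this simple version only handles the "your <relation>" pattern.

```python
import re
from typing import Dict


def personalize(question: str, known_names: Dict[str, str]) -> str:
    """Replace 'your <relation>' with a learned first name when one is known."""
    def swap(match):
        relation = match.group(1).lower()
        # Fall back to the original wording if no name has been learned.
        return known_names.get(relation, match.group(0))
    return re.sub(r"\byour (\w+)\b", swap, question, flags=re.IGNORECASE)
```

With `{"wife": "Jennifer"}`, the question "How is your wife doing?" becomes "How is Jennifer doing?", while a question about an unknown relation is left untouched.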

Face Detection Agent

I added face detection using OpenCV over the weekend. Frankly, I'm disappointed with the results so far. It's CPU intensive, and I can't get it to process more than a couple of times a second. I find the thermal array to be much faster and more practical for keeping the robot tracking a human. I'm considering having the bot programmed to check for faces prior to firing the lasers as part of a campaign to implement the 3 laws of robotics (do not harm humans by shooting them in the face). I'd like to move on to face recognition if I can get over my concerns over slow speed and figure out a good way to use it.

Math Agents

I continue to add more and more math agents. For example, the bot can remember named series of numbers read aloud and answer statistical questions using simple linear regression (slope, y-intercept), correlation, standard deviation, etc. Example: "How are series X and series Y correlated?" I'd like to figure out a way to reuse these statistical agents for some larger logic/reasoning/learning purpose...need some ideas here. There are also agents for most trigonometric and many geometric functions. Example: You can ask "What is the volume of a sphere with a radius of 2?" or "What is the cosine of 32 degrees?"
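For reference, the calculations behind those questions are small. The Python sketch below uses the textbook formulas, with function names of my own choosing and the sample (n - 1) form of standard deviation as an assumption, and it is not the agents' actual code.

```python
import math
from typing import Sequence, Tuple


def mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def std_dev(xs: Sequence[float]) -> float:
    """Sample standard deviation (divides by n - 1)."""
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))


def linear_regression(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit; returns (slope, y_intercept)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


def correlation(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def sphere_volume(radius: float) -> float:
    return 4.0 / 3.0 * math.pi * radius ** 3


def cosine_degrees(angle: float) -> float:
    return math.cos(math.radians(angle))
```

For the two spoken examples, `sphere_volume(2)` comes out near 33.51 and `cosine_degrees(32)` near 0.848.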

Anna will have siblings:

I've started building a Wild Thumper based rover (basically a 6-wheel outdoor Anna). I'm in design on a Johnny 5'ish bot (finally an Anna with Arms). Hoping to start cutting the first parts this month, challenged by how to get a functional sonar array and arms on a bot with so many servos. Since there are only a few voices on the droid phones, at least one of them is going to be male. It will be fun to see what happens when two or three bots start talking to each other.

Last Post (from January 2014):

Anna is one year old now. She is learning quickly of late, and evolving into primarily a learning social creature and aggregator of web services. I wanted to document where she is at her one year birthday. I need to create some updated design diagrams.

Capabilities Achieved in Year #1

1) Thermal Array Vision and Tracking - used to keep face pointed on people it is talking to, or cats it is playing with.

2) Visual Tracking - OpenCV to search for or lock onto color shapes that fit particular criteria

3) Learns by Listening and Asking Questions - Learns from a variety of generic sentence structures, like "Heineken is a lager", "A lager is a beer", "I like Heineken", "Olive Garden serves Heineken"

4) Answers Questions - Examples: "What beers do I like?", "Who serves Heineken?", "What does Olive Garden serve?"

5) Understands Concepts - Examples: is a, has a, can, can’t, synonym, antonym, located in, next to, associate of, comes from, like, favorite, bigger, smaller, faster, heavier, more famous, richer, made of, won, born in, attribute of, serve, dating, sell, etc. Understands when concepts are similar to or opposite to one another.

6) Makes Smalltalk & Reacts to Common Expressions - Many human expressions mean the same thing. Example: “How’s it going?”, “What’s up?”, “What is going on?”, “What’s new?” A robot needs many different reactions to humans to keep it interesting. Example: “Not much, just keeping it real”, “Not much, what’s new with you?”

7) Evaluates the Appropriateness of Topics and Questions Before Asking Them - Example: Don’t ask someone “Who is playing on Monday Night Football tonight?” unless it is football season, Monday, and the person is interested in football. Also, don’t ask a kid something that is not age appropriate, and vice versa, don’t ask an adult how they like the third grade. Don’t ask a male about his gynecologist. This is a key piece of a robot not being an idiot.

8) Understands Personal Relationships - it learns how different people you know are related to you, friends, family, cousins, in-laws. Examples: “Jane is my sister”, “Mark is my friend”, “Joe is my boss”, “Dave is Mark’s Dad” It can answer questions like “Who are my in-laws?”, “Who are my siblings?”, “Who are Mark’s parents?”

9) Personal Info - it learns about both you and people you know, what you like, hate, answers to any questions it ever asked you in the past. Example: “My wife likes Nirvana” – in this case the AI had to determine who “my wife” is. It can then answer questions like “What bands does my wife like?”, as long as it already knew “Nirvana is a band”

10) Pronouns – it understands the use of some pronouns in conversation. Example: If I had just said something about my mother, I could ask “What music does she like?”

11) Opinions – the bot can remember your opinions on many things, and has its own opinions and can compare/contrast them to add color to a conversation. Example: If I said, “My favorite college football team is the Florida State Seminoles” it might say “That is my favorite as well”, or “My favorite is the Alabama Crimson Tide”, or “You are the first person I have met who said that”

12) Emotions - robot has 10 simulated emotions and is beginning to estimate emotional state of speaker

13) Motivations - robot has its own motives that take control of bot when it is autonomous, I keep this turned off most of the time. Examples: TalkingMotive, CuriosityMotive, MovementMotive

14) Facial Expressions - Eyes, Eyelids, pupils, and mouth move according to what robot sees, feels, and light conditions

15) Weather and Weather Opinions - uses web service for data, programming for opinions. Example: If the weather is freezing out and you asked the robot “How do you like this weather?”, it might say “Way too cold to go outside today.”

16) News - uses Feedzilla, Faroo, and NYTimes web services. Example: say something like "Read news about robotics", and "Next" to move on.

17) TV & Movie Trivia - plot, actors, writers, directors, ratings, length, uses web service. Example: you can ask “What is the plot of Blade Runner?”, “Who starred in The Godfather?”

18) Web Search - uses Faroo web service. Example: say "Search web for Ukraine Invasion"

19) People - uses Wikipedia web service. Example: "Who is Tom Cruise?", “Who is Albert Einstein?”, “List Scientists”, “Is Clint Eastwood a director?”, “What is the current team of Peyton Manning?”, “What is the weight of Tom Brady?”

20) Trending Topics - uses Faroo web service. Example: say something like "What topics are trending?", you can then get related articles.

21) Geography - mostly learned, also uses Wikipedia. Watch the video! Examples: "What is the second largest city in Florida?", "What is the population of London?", “Where is India?”, “What is next to Germany?”, “What is Russia known for?”, “What is the state motto of California?”, “What is the state gemstone of Alabama?”, “List Islamic countries”

22) History - only knows what it hears, not using web yet. Mostly info about when various wars started, ended, who won. Robot would learn from: "The Vietnam War started in 1965" and be able to tell you later.

23) Science & Nature - Examples: "How do I calculate amperes?", "What is Newton's third law of motion?", "Who invented the transistor?", "What is the atomic number of Gold?", “What is water made of?”, “How many moons does Mars have?”, “Can penguins fly?”, “How many bones does a person have?”

24) Empathy - it has limited abilities to recognize when good or bad things happen to people close to you and show empathy. Major upgrades to this have been in the works. Example: If I said, "My mother went to the emergency room”, the bot might say “Oh my goodness, I am so sorry about your mother.”

25) 2 Dictionaries– Special thanks to Princeton and WordNet for the first one, the other is built from its learning and changes constantly as new proper names and phrases are encountered. You can ask for definitions and other aspects about this 200,000 word and phrase database. You can add new words and phrases simply by using them, the AI will save them and learn what they mean to some degree by how you use them, like “Rolling Rock is a beer”, AI doesn’t need anything more, nor would a person.

26) Math and Spelling- after all the other stuff, this was child's play. She can do all the standard stuff you can find on most calculators.

27) The AI is Multi-Robot and Multi-User - It can be used by multiple robots and multiple people at the same time, and tracks location of all bots/people. Also, a given robot can be conversed with by multiple people at the same time through an Android app.

29) Text Messaging - A robot can send texts on your behalf to people you know, like "Tell my wife I love her." - uses Twilio Web Service

30) Obstacle Avoidance - 9 sonars, Force Field Algorithm, Tilt Sensors, and down facing IR cliff sensor keep the bot out of trouble

31) Missions - robot can run missions (series of commands) maintained through a windows app

32) Telepresence - robot sends video back to server, no audio yet, robot can be asked to take pictures as well. Needs improvement, too much lag.

33) Control Mechanisms - Can be controlled verbally, through a phone, tablet, web, or windows app. My favorite is verbal.

34) GPS and Compass Navigation – It’s in the code but I don’t use it much, hoping to get my Wild Thumper version of this bot built by summer. This bot isn’t that good in tall grass.

36) OCR - Ability to do some visual reading of words off of walls and cards – uses Tesseract OCR libraries

37) Localization - through Recognizing Words on Walls with OCR – I don’t use this anymore, not very practical

38) Lasers - I almost forgot, the bot can track and hit a cat with lasers, or colored objects. It can scan a room and shoot everything in the room of a particular color within 180 degrees either by size or some other priority.

39) I know I singled out Geography, Science, Weather etc as topics, mostly because they also use web services. The AI doesn't really care what it learns, it has learned and will learn about anything you are willing to tell it in simple sentences it can understand. It can tell you how many faucets are on a sink, or where you can get a taco or buy a miter saw.
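To show how learning from simple sentences (item 3) can feed question answering (item 4), here is a small Python sketch built on subject/relation/object triples. The `Facts` class, its regular expressions, and the method names are assumptions for illustration. Anna's real memories are richer than triples, and parsing the spoken question itself is left out.

```python
import re


class Facts:
    def __init__(self) -> None:
        self.triples = set()  # (subject, relation, object), all lowercase

    def learn(self, sentence: str) -> bool:
        """Learn from 'X is a Y', 'X likes Y', or 'X serves Y' style sentences."""
        s = sentence.strip().rstrip(".").lower()
        m = re.fullmatch(r"(?:an? )?(.+?) is an? (.+)", s)
        if m:
            self.triples.add((m.group(1), "is a", m.group(2)))
            return True
        m = re.fullmatch(r"(.+?) (likes?|serves?) (.+)", s)
        if m:
            relation = m.group(2).rstrip("s")  # "likes" -> "like"
            self.triples.add((m.group(1), relation, m.group(3)))
            return True
        return False

    def is_a(self, thing: str, category: str) -> bool:
        """Follow 'is a' links transitively (Heineken -> lager -> beer)."""
        seen, stack = set(), [thing]
        while stack:
            current = stack.pop()
            for s, r, o in self.triples:
                if s == current and r == "is a" and o not in seen:
                    if o == category:
                        return True
                    seen.add(o)
                    stack.append(o)
        return False

    def what(self, subject: str, relation: str, category: str) -> list:
        """E.g. 'What beers do I like?' -> what('i', 'like', 'beer')."""
        return sorted(o for s, r, o in self.triples
                      if s == subject and r == relation and self.is_a(o, category))

    def who(self, relation: str, obj: str) -> list:
        """E.g. 'Who serves Heineken?' -> who('serve', 'heineken')."""
        return sorted(s for s, r, o in self.triples if r == relation and o == obj)
```

After learning the four example sentences from item 3, `what("i", "like", "beer")` returns `["heineken"]` because the "is a" chain links Heineken to beer through lager, and `who("serve", "heineken")` returns `["olive garden"]`.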

Goals for Year #2

1) More chat skills – I fear this will be never ending

2) More Hard Knowledge - we can always learn more

3) More web services – takes me about a day to integrate a new web service

4) Face Tracking - know any good code/APIs for this?

5) Facial Recognition - Know any good free APIs for this?

6) Arms - I'd like to get some simple small arms on just to be more expressive, but will have to redesign and rebuild the sonar arrays to fit them in.

7) Empathy over time - I'd like the bot to revisit good/bad events and ask about them at appropriate points in time later. Things like "How is your mother's heart doing since we last talked?" I have done a lot of prep for this, but it is a tough one.

8) More Inquisitiveness and Initiative - when should the bot listen and when should it drive the conversation. I have tried it both ways, now the trick is to find a balance.

9) Changeover to Newer Phone

10) Go Open Microphone - right now I have to press the front of my phone or touch the face of the bot to get it to listen, I’d rather it just listen constantly. I think it’s doable on the newer phones.

11) Get family, friends, and associates using the AI on their phones as a common information tool about the world and each other.

12) Autonomous Learning - it can get info from Wikipedia, web, news, web pages, but doesn't yet learn from them. How do you build learning from the chaos that is the average web page? Listening was so much easier, and that wasn’t easy.

Hardware

· Arduino Mega ADK (in the back of the body)

· Arduino Uno (in the head)

· Motorola Bionic Android Phone

· Windows PC with 6 Cores (running Web Service, AI, and Databases, this PC calls the other web services)

· Third Party Web Services - adding new ones whenever I find anything useful

Suggestions?

· I would love to hear any suggestions anyone out there might have. I am constantly looking for and reevaluating the question “What next?”

Learns by listening and the web. Talks, answers questions from her own memory or Wolfram, tells jokes, expresses opinions, quotes wiki or people, tracks heat or colors. Has reflex behaviors. Uses various web services. Has her own motivations that drive autonomy.

- Actuators / output devices: 2 servos, speaker, 2 12V motors, Speech, Lasers

- Control method: Voice Control (in Earshot or through a Remote Phone), Website, Windows App, Autonomous, Android App

- CPU: Android Phone on Face, Arduino Mega ADK in body, Arduino Uno in head, Windows PC

- Operating system: Arduino, Windows, Android

- Power source: 1 7.2 Volt for Arduinos and Circuits, 2 7.2 Volt NiMH in series for Motors

- Programming language: C# and SQL on Server, Java on Android, Arduino C

- Sensors / input devices: Thermal Array, 9 Sonars, 2 IR Distance, Video Cam, Mic, GPS, compass, Light

- Target environment: Limited Outdoor, indoor

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/super-droid-bot-anna-w-learning-ai