Robbie-Robot (have to change that name!) is intended to be a mostly autonomous rover that uses a Raspberry Pi to process sensor data from the Arduinos, take commands from a web console, and send instructions back to the Arduinos for roaming. Very *very* much in its infant state, this project is hoping to use tinySLAM with limited-capability IR and sonar sensors. To deal with the limited range and sensitivity of these sensors, we are cheating by providing a pre-existing image map of its surroundings (a monochrome bitmap of the floor plan).

Semi-Autonomous Roaming - Data Collection

- Actuators / output devices: left and right DC drive motors, pan and tilt servos for, stereo mics, webcam

- Control method: Wi-Fi connected to PHP/MySQL command/monitoring console

- CPU: Raspberry Pi plus two Arduinos

- Operating system: Linux

- Power source: 18 V lithium-ion

- Programming language: Python, PHP, Arduino sketch

- Sensors / input devices: front and rear ultrasound on a servo, four way IR proximity, stereo audio input, 640x480 webcam, GPS, compass, accelerometer, temperature, humidity

- Target environment: indoors mainly

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/robbie-robot

Can you describe your SLAM and post the code?

I’ll be posting all existing code over the next few weeks…

This is the part where I admit having trouble with the tinySLAM ( http://openslam.org/tinyslam.html ) implementation I’m working on.

I currently have to augment it with coarse-grained position information and use that as an index to determine where I might be within that particular zone. I’m seriously getting frustrated with sonar as a ranging device… I know… I’ve read dozens of articles on this same complaint.

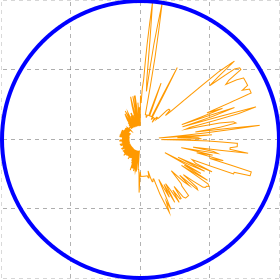

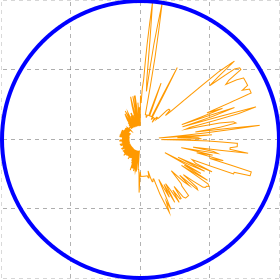

Right now, I have front- and rear-facing sonar on a servo pod. I measure distance in front and back through a 180° arc. The problem is that, with the conical shape of the sonar beam, I do not know how to “normalize” overlapping scans to get an average.

Here is the best I’m able to do at the moment… Each square is 1 meter.
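One common way to combine overlapping conical sonar readings is a log-odds occupancy grid, where every ping votes "free" for cells inside the cone and "occupied" for cells along the arc at the measured range. The sketch below is not the tinySLAM code or the method used on this robot; the 30° beam width, the 0.1 m cell size, the 6 m maximum range, and the vote weights are all assumed values chosen for illustration.

```python
import math

def make_grid(size):
    """Square grid of log-odds values; 0.0 means 'unknown' (p = 0.5)."""
    return [[0.0] * size for _ in range(size)]

def update_grid(grid, pose, bearing_deg, range_m, cell_m=0.1,
                beam_deg=30.0, max_range_m=6.0,
                l_free=-0.4, l_occ=0.9, tol_m=0.1):
    """Fuse one sonar ping into the grid (all defaults are assumptions).

    pose is the sensor position (x, y) in meters, bearing_deg is the
    direction of the beam axis, range_m is the measured distance.
    """
    x0, y0 = pose
    bearing = math.radians(bearing_deg)
    half_beam = math.radians(beam_deg) / 2.0
    reach = min(range_m + tol_m, max_range_m)
    for row in range(len(grid)):
        for col in range(len(grid[row])):
            dx = (col + 0.5) * cell_m - x0
            dy = (row + 0.5) * cell_m - y0
            dist = math.hypot(dx, dy)
            if dist == 0.0 or dist > reach:
                continue
            # Angle between the cell and the beam axis, wrapped to [-pi, pi].
            off = math.atan2(dy, dx) - bearing
            off = math.atan2(math.sin(off), math.cos(off))
            if abs(off) > half_beam:
                continue
            if dist < range_m - tol_m:
                grid[row][col] += l_free      # inside the cone: probably empty
            elif range_m < max_range_m:
                grid[row][col] += l_occ       # on the arc: something echoed here

def probability(log_odds):
    """Convert a log-odds cell value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))

# Two overlapping pings from the same spot, 20 degrees apart.
grid = make_grid(40)
update_grid(grid, (0.0, 2.0), -10.0, 2.0)
update_grid(grid, (0.0, 2.0), 10.0, 2.0)
```

Because the votes add up, cells where the arcs of several scans overlap gain confidence while cells that only one wide cone touched stay weak, which is one way to get the "averaging" effect without knowing where inside the cone the echo came from.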

I'm thinking of treating myself to a Kinect for Christmas...

Wow, that thing is jam-packed! Can’t wait to see what all you do with it. I haven’t heard about TinySLAM before. Think I’ll give that a read.

Oh, I was thinking it was SLAM for cheap sensors, but it looks like it requires a lidar; it is just written in very few lines of code.

Sorry, haven’t seen a good SLAM for cheap sensors

I’m using tinySLAM as my base, and have bastardized… er… modified it in the hopes of getting my MaxSonar sensors to do the work. It means a fair bit more travel to find walls and edges to use for localizing, and the poor resolution and conical shape of the sonar ping are definitely my biggest obstacle right now.

I’m working on a few smoothing / normalizing processes that make localizing to large obstacles better… but them damn chairs…

I’ve already broken off the front IR sensor twice, and just about sheared off the webcam on a chair-leg crossmember…

I’ll put in another week or two on THIS rendition before I cave and try the “Parallax laser line / butchered webcam” method… ultimately I want a Kinect on a servo pod… Just ran out of money on this project…

Some pictures of the rebuild in progress…

QRD1114 encoder with an inkjet-printed encoder wheel. 2.49 mm per transition... not great, but it'll have to do.
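To give a feel for what 2.49 mm per transition means for dead reckoning, here is a rough differential-drive odometry sketch. Only the 2.49 mm figure comes from the post; the 200 mm wheel base and the function name are made up for illustration, and since a single QRD1114 per wheel cannot sense direction, the sign of each count is assumed to come from the motor command.

```python
import math

MM_PER_TRANSITION = 2.49   # from the post
WHEEL_BASE_MM = 200.0      # assumed distance between the drive wheels

def update_pose(x_mm, y_mm, theta_rad, left_ticks, right_ticks):
    """Advance the pose estimate by one batch of encoder transitions.

    Tick counts are signed: negative means the wheel was commanded
    backwards (the encoder itself cannot tell).
    """
    d_left = left_ticks * MM_PER_TRANSITION
    d_right = right_ticks * MM_PER_TRANSITION
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / WHEEL_BASE_MM
    # Use the heading at the midpoint of the move for a better arc estimate.
    mid_heading = theta_rad + d_theta / 2.0
    x_mm += d_center * math.cos(mid_heading)
    y_mm += d_center * math.sin(mid_heading)
    theta_rad = (theta_rad + d_theta) % (2.0 * math.pi)
    return x_mm, y_mm, theta_rad

# Smallest heading change the encoders can resolve (one tick of difference).
heading_step_deg = math.degrees(MM_PER_TRANSITION / WHEEL_BASE_MM)

# Driving straight for 100 transitions on each wheel.
pose = update_pose(0.0, 0.0, 0.0, 100, 100)
```

With the assumed wheel base, a one-tick difference between the wheels is well under a degree of heading, so the bigger error source is likely to be wheel slip rather than encoder resolution.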

Almost time for the new inaugural run...

We need More Power Captain!

I'm using a DC-DC converter to drop to 5 V, so as not to waste too much as heat. Seems to work well, but I'll know better once I've tested it for a bit.
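For a rough sense of the heat saved, the arithmetic below compares a linear regulator with a switching DC-DC converter for the 18 V to 5 V drop. The 1 A load and the 90% converter efficiency are assumptions for the sake of the example, not measurements from this robot.

```python
def linear_heat_w(v_in, v_out, i_out):
    """A linear regulator burns the whole voltage drop as heat."""
    return (v_in - v_out) * i_out

def switcher_heat_w(v_out, i_out, efficiency):
    """A switching converter loses only what its efficiency leaves behind."""
    p_out = v_out * i_out
    return p_out / efficiency - p_out

V_IN, V_OUT = 18.0, 5.0    # from the post
I_OUT = 1.0                # assumed load current in amps
EFFICIENCY = 0.90          # assumed converter efficiency

linear = linear_heat_w(V_IN, V_OUT, I_OUT)
switcher = switcher_heat_w(V_OUT, I_OUT, EFFICIENCY)
print(f"linear: {linear:.1f} W of heat, switcher: {switcher:.2f} W of heat")
```

Under those assumptions a linear regulator would turn 13 W into heat to deliver 5 W, while the switcher loses a bit over half a watt.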

First "untethered" dry run of this new rebuild tomorrow morning...