Hardware vs Software

I agree that software PID will be much weaker than adding a hardware gyro/compass. I mentioned PID as one of the lost capabilities I am recovering after giving my bot a frontal lobotomy. At the height of my former bot’s life, it made me very happy without precision location. The PID just made it seem a little less random when it was wandering with random avoid and escape turns.

It implemented a Rodney Brooks “subsumption architecture”, had a few goals (“keep alive” was the only meaningful goal), expressed rudimentary awareness of temperature, light, sounds and humans, expressed synthetic emotions (based on “estimated remaining life”, time since last required escape, and whether it was alone or a human was present) and truly non-functional mood states. It did all this in less than 32 KB of code and with a very “in the moment” strategy.
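A minimal sketch of the layered idea, assuming a fixed-priority arbiter in which a higher layer overrides ("subsumes") the ones below it. This is a common simplification of Brooks' design, not the original bot's code; the behavior names, sensor keys, and the 20 cm threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Sensors = Dict[str, float]


@dataclass
class Behavior:
    name: str
    priority: int  # higher priority overrides lower layers
    act: Callable[[Sensors], Optional[str]]  # command, or None if not triggered


def escape(s: Sensors) -> Optional[str]:
    # Hypothetical bumper flag: non-zero means the bot is stuck against something.
    return "back_up_and_turn" if s.get("bumper", 0) else None


def avoid(s: Sensors) -> Optional[str]:
    # Hypothetical range reading in cm; 20 cm is an assumed threshold.
    return "turn_away" if s.get("obstacle_cm", 999) < 20 else None


def wander(s: Sensors) -> Optional[str]:
    # Lowest layer: always has something to do.
    return "forward"


BEHAVIORS: List[Behavior] = [
    Behavior("wander", 0, wander),
    Behavior("avoid", 1, avoid),
    Behavior("escape", 2, escape),
]


def arbitrate(behaviors: List[Behavior], sensors: Sensors) -> Tuple[str, str]:
    """Return (behavior name, command) from the highest-priority triggered layer."""
    for b in sorted(behaviors, key=lambda b: b.priority, reverse=True):
        command = b.act(sensors)
        if command is not None:
            return b.name, command
    return "idle", "stop"
```

Each pass through the control loop reads the sensors once and calls `arbitrate`, which is what keeps the strategy "in the moment": no map, no plan, just the current readings.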

Having four processing cores, a Gig of memory, nearly unlimited storage, and wireless connectivity to unlimited knowledge sources gives me huge pause for “What Do You Want To Do Today?”. I am torn between recovering my basic robot function before I start exploring the new capabilities, leveraging the things others have done, and setting out to add something to the “hobby robot” toolbox.

I have already tested on-board speech recognition with the CMU Sphinx engine (element14 gave me a free Pi3! See my RoadTest review of Pocket Sphinx on Pi2 vs Pi3), and I have tested each item of my hardware individually, but I don’t have any experience computing with the ultrasonic sensor or the camera. I want to create a few basic “software sensors” from the PiCam and ultrasonic distance to replace the hardware sensors my bot lost in the lobotomy: left and right light intensity, left, center, and right close-obstacle detection, and the human present/moving sensor.
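As a rough sketch of what two of those “software sensors” could look like, assuming the ultrasonic scan arrives as `(angle, distance_cm)` pairs and the camera frame as rows of 0-255 grayscale values. The function names, the angle convention (90 straight ahead, 0 to the right, 180 to the left), the sector boundaries, and the 25 cm threshold are all assumptions for illustration.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def obstacle_sectors(
    scan: Sequence[Tuple[int, Optional[float]]], near_cm: float = 25.0
) -> Dict[str, bool]:
    """Reduce a servo sweep to left/center/right 'close obstacle' flags."""
    sectors = {"left": False, "center": False, "right": False}
    for angle, dist in scan:
        if dist is None or dist <= 0:
            continue  # skip invalid or missing readings
        if dist > near_cm:
            continue  # far enough away to ignore
        if angle < 60:
            sectors["right"] = True
        elif angle <= 120:
            sectors["center"] = True
        else:
            sectors["left"] = True
    return sectors


def light_left_right(gray_rows: List[List[int]]) -> Tuple[float, float]:
    """Mean brightness of the left and right halves of a grayscale frame."""
    half = len(gray_rows[0]) // 2
    left = [p for row in gray_rows for p in row[:half]]
    right = [p for row in gray_rows for p in row[half:]]
    return sum(left) / len(left), sum(right) / len(right)
```

For example, `obstacle_sectors([(30, 100.0), (90, 18.0), (150, 60.0)])` flags only the center sector, and the two brightness means can stand in for the lost left and right light sensors.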

Additionally, I really want to add “wall and door recognition” and “corner recognition and park out of the way” behaviors. I have seen people posting “track an object” and “follow an object” examples, but these capabilities are not high on my “wish list behaviors to integrate”. Finding a charging dock and recharging when “estimated life” gets low are high on my wish list and will probably be the next hardware upgrade I give my robot. A standardized robot recharging dock and robot dock contactor, one that can be set for USB standard 5 V at 2 A or that just solves the physical problem and lets me connect my RC battery charger to the dock, would be very helpful.

By simulating the GoPiGo fwd(), us_dist() and [pan]servo(angle) APIs, I was able to re-use someone’s “ultrasonic distance, servo scan, display map” software. That brought a real high - both that I could re-use someone else’s code, and feeling a part of a community. I lived through digital component standardization, and standard language libraries, to published web services. The hobby robot community has suffered greatly from the lack of standardized platform and interface definitions. ROS addresses this at the professional and academic level, but hobbyists and schools (grades 1-12) are still faced with recreating everything from the ground up.

When I started programming professionally (Intel 8080, Motorola 6800) I didn’t even want an operating system. If it worked I wanted to know how and what it did, and if it didn’t work, I wanted to know the problem was my code. One of my first jobs was to write the runtime code for a new compiler. The woman writing the compiler didn’t even want to know what was actually running on the processor. I could not understand where she was coming from. Now I want to be more like her. (The desire to know/understand something about everything can be a curse.)

Dreaming a bit, I really want an RDF/OWL brain in my bot, with natural language query/response generation tied to text-to-speech and speech recognition, and with visual object recognition integrated into the RDF. I want my bot to recognize me, and use me to increase its functional capabilities in areas it discovers it is deficient, learning only what it can use, and using its hardware, knowledge, and software to its fullest.

Oh yeah, and I don’t want to have to write all that, and I don’t want it to cost more than $500.

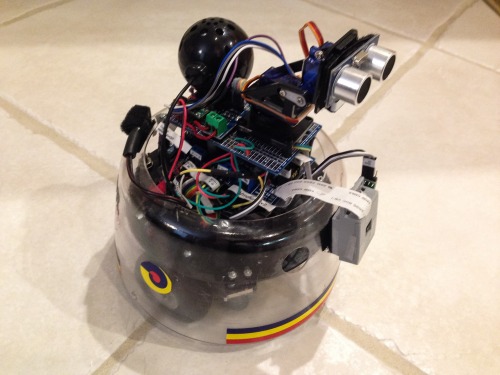

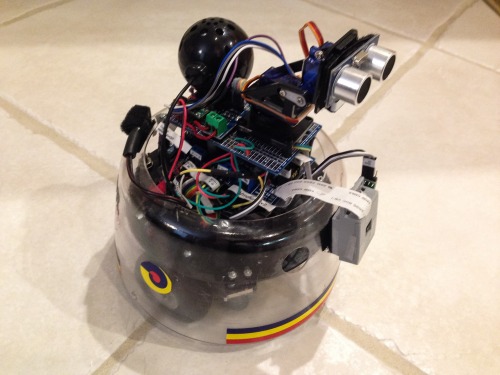

Here’s my robot today: