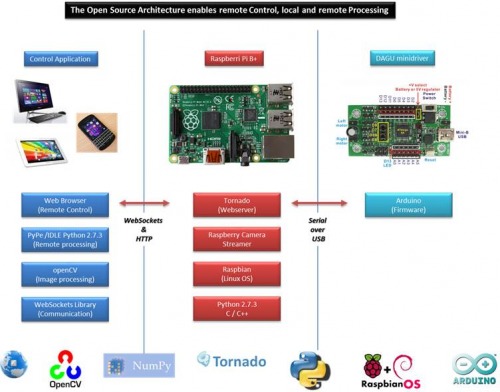

I was asked to start a blog. Don't expect anything beyond beginner's level! A couple of months ago I bought a Raspberry Pi out of curiosity, and soon after that I bought my first robot kit: the Pi Camera Robot from Dawn Robotics (http://www.dawnrobotics.co.uk). Great stuff to start with! Especially for someone completely new to robotics, it offers a platform for exploration while also being a kit that can be played with right away. I added some sensors and started learning and playing: Python, a bit of Arduino and C/C++, OpenCV and NumPy. All new stuff to me. After the first small working scripts, I baptized the bot RB-1 and it looked like this. After a couple of months I changed the chassis. I'll explain that in a later post.

Personally I appreciate this kit very much. The Dawn Robotics image provides a well-working infrastructure platform. It kept Arduino and lower-level stuff like PWM out of my way until I was up to it. The WebSocket implementation also enables remote processing, so I could code and run my scripts on a large-screen Windows PC. That's far more comfortable than working on the Raspberry Pi itself. I certainly appreciated it when I started to play with OpenCV: I was able to display any window, and as many as I needed, while experimenting and debugging the scripts. That's almost impossible on the Raspberry Pi itself.

Dawn publishes a blog explaining their infrastructure and software. I made their scheme a bit more fancy ;-0)

In this blog I will share the subjects I tackled, just to give other starters an easy entry point. Most topics are already covered by many good posts all over the web; I just try to structure that information. In the coming posts I'll explain my code for finding and reading signs, and how I dealt with topics such as object tracking by color, isolating objects in video frames, comparing images and controlling differential wheels. And of course I'm looking forward to suggestions for improvements!

There are many valuable blogs and sites on the web, but I would like to point out a few I appreciate very much:

http://www.dawnrobotics.co.uk - Supplier of the bot and its operating environment. Well documented, with responsive help from Alan Broun.

http://roboticssamy.blogspot.nl - Inspirational source. The SR-4 of Samuel Matos surely triggered my ambition.

http://www.pyimagesearch.com - Instructive site by Adrian Rosebrock on Python and OpenCV. Great examples and explanations when starting with video images.

Next post: find sign by color tracking

Looks for a blue-colored sign, reads it and acts on it.

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/follow-and-read-signs