Hello LMR,

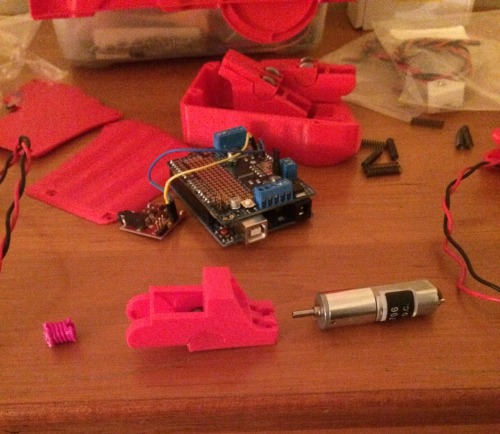

This is a project I have been working on for quite some time: a 3D-printed, motorized prosthetic hand that is controlled through muscle movement. The design is the open-source Dextrus prosthetic hand. I chose it over other open-source designs because it has all of its motors inside the hand itself; most of the other designs I found put the servos in the forearm and ran cables down to the fingers. Right now, the hand has four DC motors geared 62:1 and is controlled by an Arduino Uno with an Adafruit Motor Shield V2. I went with Adafruit's motor shield because it was the only shield that could run four motors, and it was fairly cheap, only $25. The hand itself is entirely 3D printed: each finger consists of its printed parts, bearings, a dowel pin that acts as the joint, and a strong cable.

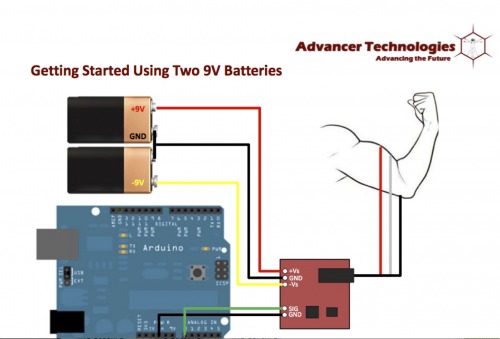

I looked into a variety of ways to control the hand in a cost-effective manner. One method was EEG sensors, which read electrical activity in your brain. This looked really cool but wasn't practical, because the sensor only reads general brain activity and I wanted to pinpoint the specific signal used to control muscles. The solution is an EMG sensor, which detects the electric potential generated by muscle cells when they are electrically or neurologically activated. For this project I am using SparkFun's EMG sensor kit, which costs $50. The EMG board requires two 9 V batteries to work. The sensor has three surface electrodes: two go on the muscle you want to read, and the black one goes on a bone as ground. The muscle I am trying to read is the flexor digitorum superficialis, a large muscle that runs along the underside (palm side) of the forearm from the bones at the elbow to the four fingers. It serves to flex, or curl, the fingers. In the video, I have a program that reads the EMG sensor, sends the data over serial to Processing, and graphs it. I am looking at the graph to see whether the spikes differ enough from one finger flex to the next to identify which finger I am flexing. However, the differences weren't large enough to tell the fingers apart.
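Judging the spikes by eye on the Processing graph is hard to do consistently. A minimal sketch of putting a number on it, assuming you record one window of samples per finger flex and already know the resting baseline: measure each spike's height above the baseline, then check whether every pair of fingers is at least some gap apart. The function names and the `minGap` value are hypothetical. The code is plain C++ so it can be tested on a PC; on the Uno you would use fixed-size arrays instead of `std::vector`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: height of the largest spike in one recorded flex,
// measured above the resting baseline. Readings at or below the baseline
// give a height of zero.
uint16_t spikeHeight(const std::vector<uint16_t>& window, uint16_t baseline) {
  uint16_t peak = baseline;
  for (uint16_t s : window) {
    peak = std::max(peak, s);
  }
  return static_cast<uint16_t>(peak - baseline);
}

// Hypothetical helper: true if every pair of per-finger spike heights differs
// by at least minGap. After sorting, checking neighbours is enough, because
// the smallest pairwise difference is always between adjacent values.
bool fingersDistinguishable(std::vector<uint16_t> heights, uint16_t minGap) {
  std::sort(heights.begin(), heights.end());
  for (std::size_t i = 1; i < heights.size(); ++i) {
    if (heights[i] - heights[i - 1] < minGap) {
      return false;
    }
  }
  return true;
}
```

If two fingers' heights land closer together than the sensor's normal flex-to-flex variation, amplitude alone from a single electrode pair can't separate them, which matches what the graph showed.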

When the hand boots up, the Arduino takes 10,000 samples of the user's resting muscle potential and averages them; that average becomes the threshold value. The main loop then reads the sensor value and opens or closes the prosthetic hand depending on whether it is above or below the threshold: when the user flexes enough to push the reading above the threshold, the hand closes, and when the reading falls back below it, the hand opens. One issue I ran into, and am still working on, is powering the system. I am currently using a 7.2 V NiMH battery, but I am looking into LiPo. The program works; it's just that I can only control one finger at a time. I am working on adding the thumb.
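The calibrate-then-compare logic described above can be sketched as two small functions. This is a minimal illustration, not the code running on the hand: `SampleSource` is a hypothetical stand-in for `analogRead()` on the EMG pin, and the `margin` parameter is a hypothetical addition (leaving it at 0 gives the plain above/below behavior described here). It is written as plain C++ so it can be tested off the board.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical stand-in for analogRead() on the EMG sensor pin (0..1023 on an Uno).
using SampleSource = std::function<uint16_t()>;

enum class HandCommand { Open, Close };

// Average `count` resting samples to get the threshold. A 32-bit accumulator
// is used because 10,000 readings of up to 1023 (about 10.2 million) would
// overflow a 16-bit int.
uint16_t calibrateThreshold(const SampleSource& read, uint32_t count = 10000) {
  uint32_t sum = 0;
  for (uint32_t i = 0; i < count; ++i) {
    sum += read();
  }
  return static_cast<uint16_t>(sum / count);
}

// Close the hand when the reading is above threshold + margin, otherwise open it.
// A reading exactly at the threshold counts as "open" (an assumption; the text
// only says above or below). `margin` is a hypothetical extra offset.
HandCommand decide(uint16_t reading, uint16_t threshold, uint16_t margin = 0) {
  return (reading > threshold + margin) ? HandCommand::Close : HandCommand::Open;
}
```

On an Uno, `int` is only 16 bits, so the running sum needs an `unsigned long` (32 bits there); `uint32_t` above plays the same role. Because resting noise sits right around the average, readings near the threshold may make the hand chatter between open and close, which is one reason to consider a nonzero margin.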

To get a better sensor reading and finer control of the hand, I am looking into the Myo armband. This commercial product contains multiple EMG sensors, an accelerometer, a gyroscope and a magnetometer. I really like this armband because its software is open source and its developers have already built in some hand gestures that it can recognize. The armband communicates over Bluetooth and connects directly to a computer for data, but I have seen people on the Internet get an Arduino to read the armband using a Bluetooth chip. So I'm going to look into that more in depth to see if it's a viable option.

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/cost-effective-prosthetic-hand-1