Updates Feb 15th '14

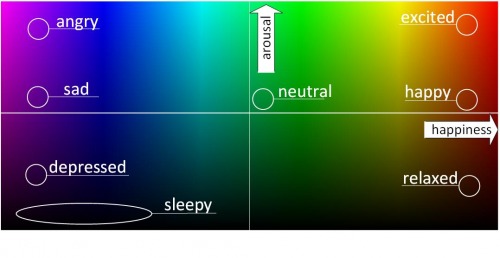

- Inspired by MarkusB and discussions with mtripplet and DT, added basic emotion simulation and mapping of emotional state onto facial and verbal expressions. Positive verbal conversations and completing tasks increase CCSR's happiness and arousal. An adverse environment and negative conversations (e.g. insults, disapproval) decrease happiness. Happiness and arousal naturally degrade over time, simulating sleep and boredom.

- Added two 8x8 matrix displays for facial expression emulation. Eye movements and shapes are updated based on the current emotional state.

- Added a 10mm RGB LED representing CCSR's emotional state. The LED dynamically updates an HSV color value: the 'Hue' is proportional to the current 'happiness' state of CCSR, and the 'Value' is proportional to the current state of 'arousal'.

- Added continuous voice recognition. CCSR now autonomously listens and reacts to valid verbal communication.
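
The HSV mapping of the RGB LED described above can be sketched in Python as below. This is a minimal sketch under stated assumptions, not CCSR's actual code: happiness and arousal are assumed to be normalized to [-1, 1], the hue is assumed to run from red (unhappy) to green (happy), and the output is scaled to 12-bit duty counts on the assumption that the LED is driven by a PCA9685-style 12-bit PWM controller. `nose_color` and its parameters are hypothetical names.

```python
import colorsys

PWM_MAX = 4095  # assumption: 12-bit PWM duty range, as on a PCA9685-based controller


def nose_color(happiness: float, arousal: float,
               hue_unhappy: float = 0.0, hue_happy: float = 1 / 3) -> tuple[int, int, int]:
    """Map (happiness, arousal), each in [-1, 1], to 12-bit (R, G, B) duty counts.

    Hue varies linearly with happiness from hue_unhappy (red) to hue_happy (green),
    Value (brightness) varies linearly with arousal, and Saturation is fixed at 1.
    """
    h = (min(max(happiness, -1.0), 1.0) + 1.0) / 2.0  # clamp, then rescale to [0, 1]
    v = (min(max(arousal, -1.0), 1.0) + 1.0) / 2.0
    hue = hue_unhappy + h * (hue_happy - hue_unhappy)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, v)
    return tuple(round(c * PWM_MAX) for c in (r, g, b))
```

Python's standard `colorsys` module does the HSV-to-RGB conversion; each returned count would then be written to the PWM channel driving that LED color.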

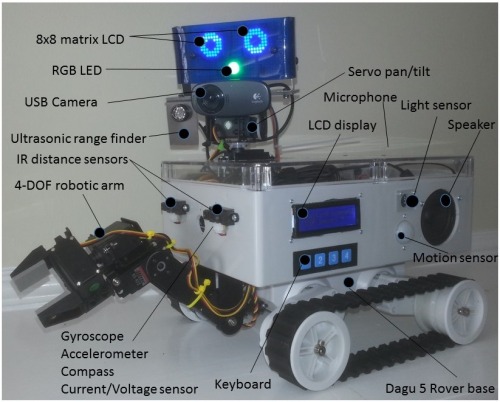

CCSR (pronounced 'Caesar') is a prototype robot for experimenting with and learning about the main fields of robotics, such as computer vision, sensors and actuators, navigation, natural language processing, artificial intelligence and machine learning. All previous CCSR updates, as well as older videos, are tracked on this blog. The source code of the main CCSR application and of the Natural Language Processing (NLP) module (nlpxCCSR) can be found on GitHub.

CCSR can understand basic human language and is integrated with a web-based knowledge engine (Wolfram Alpha), so it can answer questions on various topics. CCSR strives to be a social robot by modeling basic emotions and expressing them through speech and facial expressions: eye shapes and movements, and an HSV color representing its real-time emotional state.

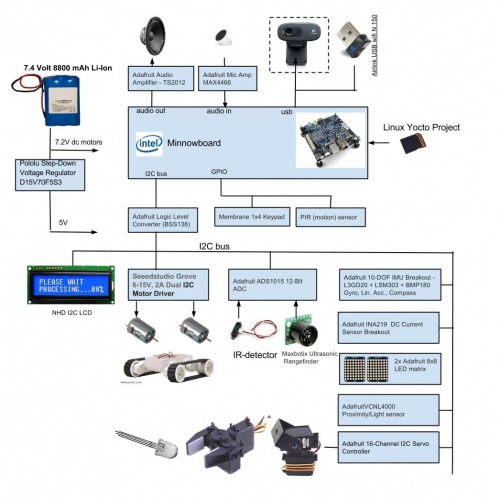

CCSR is based on a Minnowboard SBC with an Intel Atom processor. It runs Angstrom Linux with the Yocto kernel, plus a set of packages such as OpenCV for computer vision, espeak for speech synthesis and mjpeg-streamer for video streaming over IP. Compiling Angstrom for the Minnowboard to add desired kernel modules, such as Wi-Fi, is described in detail here. A gyro, accelerometer and compass are used for basic navigation, and a current/voltage sensor detects stalled motors and low battery. IR sensors are used for basic obstacle avoidance, and a sonar range finder is used to map the environment and to provide depth to visual observations.
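
The stall and low-battery checks could look something like the sketch below. The thresholds, the consecutive-sample rule and the `PowerMonitor` class are all hypothetical; the post does not say how CCSR evaluates its current/voltage sensor readings.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values depend on the motors and the battery pack.
STALL_CURRENT_A = 2.5   # sustained current above this suggests stalled motors
STALL_SAMPLES = 5       # consecutive over-current samples before flagging a stall
LOW_BATTERY_V = 6.6     # hypothetical low-battery cutoff


@dataclass
class PowerMonitor:
    over_current_count: int = 0

    def update(self, current_a: float, voltage_v: float) -> list[str]:
        """Feed one current/voltage sample and return any alerts it raises."""
        alerts = []
        if current_a > STALL_CURRENT_A:
            self.over_current_count += 1
        else:
            self.over_current_count = 0  # any normal sample resets the stall counter
        if self.over_current_count >= STALL_SAMPLES:
            alerts.append("motor_stall")
        if voltage_v < LOW_BATTERY_V:
            alerts.append("low_battery")
        return alerts
```

Requiring several consecutive over-current samples keeps a brief current spike, such as the motors starting up, from being reported as a stall.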

There's an ambient light sensor, as well as temperature and pressure sensors. USB Wi-Fi on the Minnowboard allows telemetry and remote control simply by ssh (e.g. PuTTY) from any other computer; I use a simple command-line interface to interact with the robot process running on the Minnowboard. I use Linux pthreads to model basic independent 'brain' processes such as vision, navigation, hearing, motor and speech. I used this great OpenCV example to implement object tracking using color separation. An LCD display and keyboard provide menu options for control and status.
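
Object tracking by color separation usually means thresholding the image in HSV space (in OpenCV, `cv2.cvtColor` followed by `cv2.inRange`) and taking the centroid of the resulting mask from its image moments (`cv2.moments`). The sketch below shows the same idea in plain Python so it runs without OpenCV; it illustrates the technique and is not the OpenCV example CCSR uses. `track_color`, `in_range` and the HSV bounds in the usage are hypothetical.

```python
from typing import Optional

Pixel = tuple[int, int, int]  # (H, S, V) in OpenCV's 8-bit ranges: H 0..179, S and V 0..255


def in_range(px: Pixel, lo: Pixel, hi: Pixel) -> bool:
    """Inclusive per-channel bounds check, like cv2.inRange for a single pixel."""
    return all(l <= c <= h for c, l, h in zip(px, lo, hi))


def track_color(image: list[list[Pixel]], lo: Pixel, hi: Pixel,
                min_area: int = 1) -> Optional[tuple[float, float]]:
    """Return the (x, y) centroid of pixels inside [lo, hi], or None if too few match.

    Equivalent to building a binary mask and computing its image moments:
    x = m10 / m00, y = m01 / m00.
    """
    m00 = m10 = m01 = 0
    for y, row in enumerate(image):
        for x, px in enumerate(row):
            if in_range(px, lo, hi):
                m00 += 1   # area of the mask
                m10 += x   # first moment in x
                m01 += y   # first moment in y
    if m00 == 0 or m00 < min_area:
        return None
    return (m10 / m00, m01 / m00)
```

For example, a blue object could be tracked with bounds around `(100, 150, 50)` to `(130, 255, 255)`. Red hues wrap around H = 0, so tracking a red object would need two ranges combined.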

CCSR has a 4-DOF (degree of freedom) robotic arm, built with Hitec servos, Lynxmotion servo brackets and a 'Little Grip Kit'. The shoulder servo is an HS-645MG; the elbow, wrist and hand servos are HS-422s. All servos are driven by an Adafruit I2C Servo Controller. CCSR can use the arm to pick up small objects.
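
Driving a hobby servo from a 12-bit PWM controller comes down to converting a target angle into a pulse width, and the pulse width into controller ticks. The sketch below assumes the Adafruit I2C Servo Controller is the PCA9685-based 16-channel board running at 50 Hz, and uses a hypothetical pulse range of 900 to 2100 µs over 180 degrees; the real endpoints for the HS-645MG and HS-422 would have to be calibrated per servo. The function names are hypothetical.

```python
PWM_FREQ_HZ = 50       # assumption: typical analog servo frame rate (20 ms period)
PCA9685_STEPS = 4096   # 12-bit resolution per PWM period


def pulse_us_to_ticks(pulse_us: float, freq_hz: float = PWM_FREQ_HZ) -> int:
    """Convert a pulse width in microseconds into a 12-bit on-time tick count."""
    period_us = 1_000_000 / freq_hz
    return round(pulse_us * PCA9685_STEPS / period_us)


def angle_to_ticks(angle_deg: float, min_us: float = 900, max_us: float = 2100,
                   range_deg: float = 180) -> int:
    """Linearly map a joint angle to ticks; min_us/max_us are hypothetical endpoints."""
    angle = min(max(angle_deg, 0), range_deg)  # never command beyond the servo's range
    pulse = min_us + (max_us - min_us) * angle / range_deg
    return pulse_us_to_ticks(pulse)
```

With these defaults, 90 degrees maps to a 1500 µs pulse, which is about 307 ticks. In practice the controller's oscillator is not exact, so the endpoints usually need tuning on the real hardware.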

CCSR is built on a Dagu Rover 5 chassis and a Polycase project box. Most of the sensors are super-convenient Adafruit breakout boards; various components come from Pololu, RobotShop, Lynxmotion and other places. Details on the components used in CCSR can be found in the diagram below.

Voice recognition is implemented using the Google Speech to Text API. A custom Natural Language Processing (NLP) module written in Python (nlpxCCSR) gives CCSR some basic capabilities to interact with human language, kind of like Siri, as well as some basic machine learning. nlpxCCSR is based on pattern.en from CLIPS, a very cool Python package for sentence analysis. Pattern.en can do POS tagging, chunking, parsing, etc., but it also handles verb conjugation and pluralization, and it includes WordNet, a lexical database you can query for word definitions and other useful info.
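
Google's speech API returns its guesses as JSON, while nlpxCCSR needs a single string. The sketch below picks the highest-confidence transcript. The response shape it expects (one JSON object per line, each with `result` entries holding `alternative` items that have a `transcript` and an optional `confidence`) is an assumption based on the older speech API of that era, so check it against an actual response before relying on it. `best_transcript` is a hypothetical helper.

```python
import json
from typing import Optional


def best_transcript(response_text: str) -> Optional[str]:
    """Return the most likely transcript from a newline-delimited JSON response.

    Assumed shape (one object per line, the first one often empty):
      {"result":[]}
      {"result":[{"alternative":[{"transcript":"hello","confidence":0.9},
                                 {"transcript":"hallo"}],"final":true}],"result_index":0}
    Alternatives without a "confidence" field count as 0.0, so when no
    confidence is given the first alternative listed wins.
    """
    best, best_conf = None, -1.0
    for line in response_text.splitlines():
        line = line.strip()
        if not line:
            continue
        payload = json.loads(line)
        for result in payload.get("result", []):
            for alt in result.get("alternative", []):
                conf = alt.get("confidence", 0.0)
                if "transcript" in alt and conf > best_conf:
                    best, best_conf = alt["transcript"], conf
    return best
```

Returning `None` when nothing was recognized lets the caller simply skip the NLP step for silence or noise.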

To interact with human speech, CCSR records a sentence using ALSA and writes it to disk as a .wav file using libsndfile. A bash script posts this file to the Google speech2text API using curl. Google returns a .json file containing (several guesses of) the spoken text. This text is passed to nlpxCCSR, which interprets the sentence and synthesizes a response. The response is passed back to the main CCSR process, which uses espeak to vocalize it. nlpxCCSR can generate a purely verbal answer to voice input (e.g. 'how are you' or 'what is the weather today'), or it can generate a CCSR action if the speech is interpreted as a robot command (e.g. 'turn 180 degrees' or 'pick up blue object').

nlpxCCSR maintains an internal memory and does a simple form of machine learning by storing properties that it learns about (e.g. when interpreting 'the cat is in the garden', nlpxCCSR memorizes that the concept 'cat' is located 'in the garden'), and it tries to answer queries based on its own knowledge. If it is unable to do so, it passes the full query to the Wolfram Alpha API (a cloud service) and passes the most appropriate 'pod' (Wolfram answer) back to the CCSR process.
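
The routing and memory behavior described above (robot command vs. verbal answer, learning where things are, falling back to Wolfram Alpha) can be illustrated with the sketch below. nlpxCCSR itself uses pattern.en parsing; the regular expressions here are a deliberately simplified, hypothetical stand-in, and `fallback` stands for a Wolfram Alpha lookup without implementing it. `Memory`, `COMMANDS`, `FACT` and `WHERE` are hypothetical names.

```python
import re
from typing import Callable

# Hypothetical command grammar: each pattern builds an (ACTION, argument) tuple.
COMMANDS = [
    (re.compile(r"^turn (-?\d+) degrees?$"), lambda m: ("TURN", int(m.group(1)))),
    (re.compile(r"^pick up (\w+) object$"), lambda m: ("PICKUP", m.group(1))),
]
FACT = re.compile(r"^the (\w+) is (in|on|under) the (\w+)$")
WHERE = re.compile(r"^where is the (\w+)\??$")


class Memory:
    def __init__(self, fallback: Callable[[str], str]):
        self.location: dict[str, str] = {}  # concept -> e.g. "in the garden"
        self.fallback = fallback            # hypothetical Wolfram Alpha query wrapper

    def handle(self, sentence: str) -> tuple:
        """Return ("action", command) for robot commands, else ("say", reply)."""
        s = sentence.lower().strip().rstrip(".!")
        for pattern, build in COMMANDS:
            m = pattern.match(s)
            if m:
                return ("action", build(m))
        m = FACT.match(s)
        if m:  # learn a location property for the concept
            concept, prep, place = m.groups()
            self.location[concept] = f"{prep} the {place}"
            return ("say", "OK, I will remember that.")
        m = WHERE.match(s)
        if m and m.group(1) in self.location:  # answer from own knowledge first
            return ("say", f"The {m.group(1)} is {self.location[m.group(1)]}.")
        return ("say", self.fallback(sentence))  # otherwise defer to the cloud service
```

After `handle("The cat is in the garden.")`, the question "Where is the cat?" is answered from memory, while "Where is the dog?" goes to the fallback.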

CCSR models basic emotions using a 2D happiness/arousal space based on this work. CCSR expresses its emotional state through facial expressions (eye shape, 'nose' color, head movement, etc.) and verbal expressions.
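
A common way to turn a point in a 2D happiness/arousal space into a discrete expression is to split the plane into quadrants around a small neutral zone. The sketch below does this; the [-1, 1] ranges, the neutral threshold and the labels are hypothetical choices, not CCSR's actual model.

```python
def expression(happiness: float, arousal: float, neutral: float = 0.2) -> str:
    """Map a point in [-1, 1] x [-1, 1] happiness/arousal space to an expression label.

    Hypothetical quadrant labels: +happiness/+arousal -> "excited",
    +happiness/-arousal -> "content", -happiness/+arousal -> "angry",
    -happiness/-arousal -> "sad"; points near the origin are "neutral".
    """
    if abs(happiness) < neutral and abs(arousal) < neutral:
        return "neutral"
    if happiness >= 0:
        return "excited" if arousal >= 0 else "content"
    return "angry" if arousal >= 0 else "sad"
```

The returned label could then select an eye shape on the matrix displays and a tone of voice for verbal responses.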

Eye movements and shapes (sad, angry, excited, etc.) are dynamically updated based on the current emotional state. An RGB LED (its 'nose') is driven by PWM signals from the I2C servo controller and dynamically updates an HSV color value: the 'Hue' is proportional to the current 'happiness' state of CCSR, and the 'Value' is proportional to the current state of 'arousal'. Positive events such as successfully completing tasks and receiving encouraging verbal feedback increase CCSR's happiness and arousal. An adverse environment and negative verbal statements (e.g. insults, disapproval) decrease happiness. Happiness and arousal naturally degrade over time, simulating sleep, boredom, and an innate urge to initiate activities to increase happiness/arousal.
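
The emotional dynamics described above (events push the state around, and it drifts back toward a sleepy, bored resting point when nothing happens) can be sketched as follows. The event names, their impacts, the resting point and the half-life are hypothetical, and exponential relaxation is one reasonable choice of "natural degradation", not necessarily the one CCSR uses.

```python
from dataclasses import dataclass

# Hypothetical (d_happiness, d_arousal) per event; illustrative values only.
EVENT_DELTAS = {
    "task_completed": (0.3, 0.2),
    "praise": (0.2, 0.1),
    "insult": (-0.3, 0.0),
    "adverse_environment": (-0.1, 0.0),
}


def clamp(x: float) -> float:
    """Keep a state variable inside [-1, 1]."""
    return max(-1.0, min(1.0, x))


@dataclass
class EmotionState:
    happiness: float = 0.0
    arousal: float = 0.0
    rest_happiness: float = -0.5  # hypothetical resting point: mildly bored...
    rest_arousal: float = -1.0    # ...and asleep if nothing happens
    half_life_s: float = 300.0    # hypothetical: halfway to rest after 5 idle minutes

    def on_event(self, event: str) -> None:
        """Apply the impact of one event; unknown events are ignored."""
        dh, da = EVENT_DELTAS.get(event, (0.0, 0.0))
        self.happiness = clamp(self.happiness + dh)
        self.arousal = clamp(self.arousal + da)

    def decay(self, dt_s: float) -> None:
        """Relax both dimensions exponentially toward the resting point over dt_s seconds."""
        k = 0.5 ** (dt_s / self.half_life_s)
        self.happiness = self.rest_happiness + (self.happiness - self.rest_happiness) * k
        self.arousal = self.rest_arousal + (self.arousal - self.rest_arousal) * k

    def wants_activity(self, threshold: float = -0.5) -> bool:
        """Hypothetical 'urge to act': true once arousal has sagged below threshold."""
        return self.arousal < threshold
```

Passing the elapsed time to `decay`, rather than applying a fixed factor per tick, keeps the behavior independent of how often the main loop runs.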

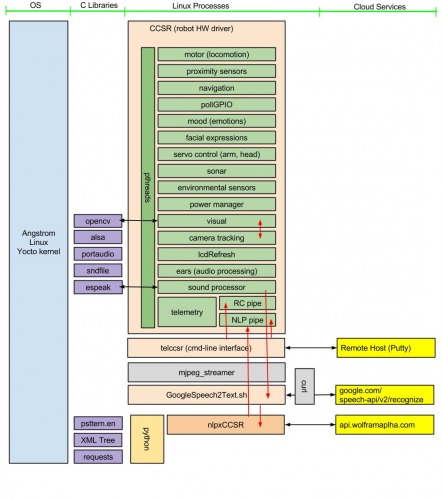

A detailed overview of the CCSR software architecture is shown in the picture below:

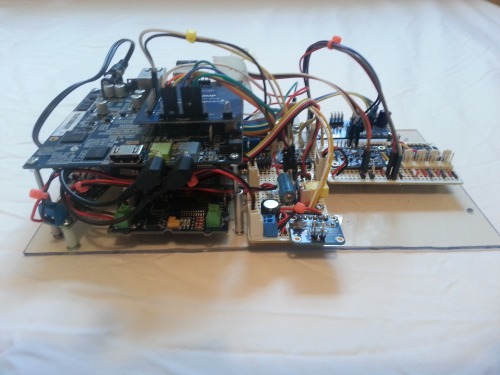
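
CCSR's independent 'brain' processes are Linux pthreads in the C application. As a rough Python analog of that pattern, the sketch below starts one thread per process and has each one report its observations to a shared, thread-safe queue that a main loop can consume. The `Brain` class, its methods and the polling design are hypothetical illustrations, not CCSR's architecture.

```python
import queue
import threading
from typing import Callable, Optional


class Brain:
    """Independent 'brain processes' as threads reporting to one event queue."""

    def __init__(self) -> None:
        self.events: queue.Queue = queue.Queue()  # thread-safe (name, reading) channel
        self.stop = threading.Event()
        self.threads: list[threading.Thread] = []

    def spawn(self, name: str, sense: Callable[[], Optional[str]], period_s: float) -> None:
        """Start a thread that polls sense() every period_s and posts non-None readings."""
        def loop() -> None:
            while not self.stop.is_set():
                reading = sense()
                if reading is not None:
                    self.events.put((name, reading))
                self.stop.wait(period_s)  # sleep, but wake immediately on shutdown

        t = threading.Thread(target=loop, name=name, daemon=True)
        self.threads.append(t)
        t.start()

    def shutdown(self) -> None:
        """Signal every process to stop and wait for all threads to exit."""
        self.stop.set()
        for t in self.threads:
            t.join()
```

A main loop would call `brain.events.get()` and dispatch each `(name, reading)` pair, e.g. handing a 'hearing' transcript to the NLP step. Using `threading.Event.wait` as an interruptible sleep means `shutdown` does not have to wait out a full polling period.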

The CCSR main board is shown in the picture below. I used Adafruit Perma-Proto PCBs for the custom electronics.

A simple Linux-based robot platform to learn about AI and ML

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/ccsr

Thanks!
