Update: 03/14/2014

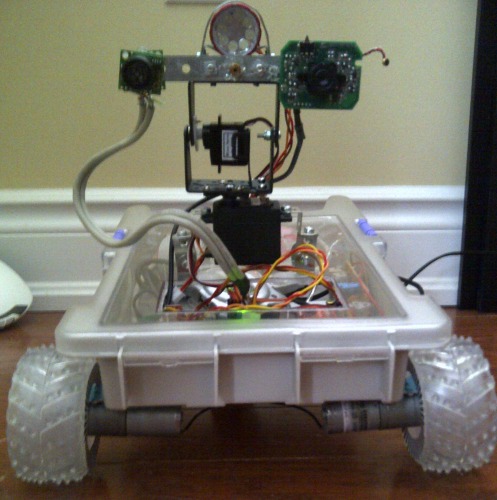

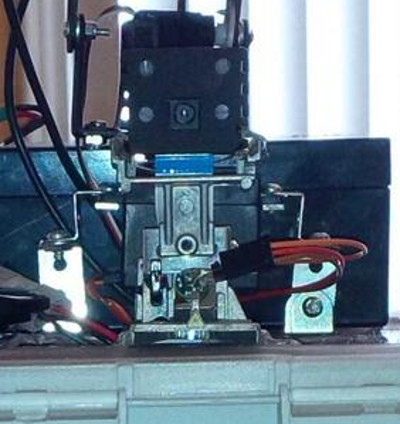

I've finally mounted the awesome Open Source Laser Range Finder (OSLRF01) onto Bottwo with a panning servo to provide mapping functionality.

I had previously tried to do this with the sonar, but was stymied by its limited range and wide beam cone.

The narrow beam and greater sensing distance of the LIDAR will let me accurately map out a room in near real time, while the other sensors handle closer-proximity measurements.
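Turning the panning LIDAR into a map boils down to converting each (servo angle, range) reading into x/y coordinates. Below is a minimal Python sketch of that step. It assumes the pan angle is measured from the robot's forward axis and that the robot's pose (position and heading) comes from elsewhere, such as the wheel encoders and compass. `scan_to_points`, `SENSOR_OFFSET_X_M`, and the 10 cm offset are hypothetical names and values for illustration; only the half-metre blind zone comes from this post.

```python
import math
from typing import Iterable, List, Tuple

# Hypothetical mounting offset: how far (in metres) the pan servo's pivot sits
# ahead of the robot's centre of rotation. Measure your own chassis.
SENSOR_OFFSET_X_M = 0.10

# From the post: the OSLRF cannot see closer than about half a metre.
LIDAR_MIN_RANGE_M = 0.5


def scan_to_points(
    scan: Iterable[Tuple[float, float]],
    robot_x: float = 0.0,
    robot_y: float = 0.0,
    robot_heading_deg: float = 0.0,
) -> List[Tuple[float, float]]:
    """Convert (pan_angle_deg, range_m) readings into world-frame (x, y) points.

    pan_angle_deg is measured from the robot's forward axis, positive to the
    left (counter-clockwise). Readings inside the LIDAR's blind zone are
    dropped rather than trusted.
    """
    heading = math.radians(robot_heading_deg)
    cos_h, sin_h = math.cos(heading), math.sin(heading)
    points = []
    for pan_deg, range_m in scan:
        if range_m < LIDAR_MIN_RANGE_M:
            continue
        pan = math.radians(pan_deg)
        # Point in the robot frame: x forward, y to the left.
        rx = SENSOR_OFFSET_X_M + range_m * math.cos(pan)
        ry = range_m * math.sin(pan)
        # Rotate by the robot's heading, then translate by its position.
        points.append((robot_x + rx * cos_h - ry * sin_h,
                       robot_y + rx * sin_h + ry * cos_h))
    return points
```

For example, a 1 m reading straight ahead from a robot at the origin lands at roughly x = 1.1 m once the hypothetical 10 cm mounting offset is added.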

How much is too much?

Yeah, I heard that! Yes, I've got four Sharp IR sensors: two (front and back) for collision detection, and two (left and right) for following walls at a specific distance.
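Following a wall at a set distance with a single side-facing IR sensor can be done with a simple proportional controller. This is a minimal sketch, not Bottwo's actual code: the 30 cm target, base speed, and gain `KP` are made-up starting values, and the sensor reading is assumed to already be converted to centimetres.

```python
# Hypothetical tuning values; the post only says "a specific distance".
TARGET_CM = 30.0
BASE_SPEED = 0.5   # normalised motor command, 0..1
KP = 0.02          # command change per cm of distance error


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a motor command inside the driver's accepted range."""
    return max(low, min(high, value))


def wall_follow(side_cm: float, wall_on_left: bool = False) -> tuple:
    """Proportional wall follower returning (left, right) motor commands.

    error > 0 means the robot has drifted away from the wall, so it steers
    back toward it; error < 0 steers it away.
    """
    error = side_cm - TARGET_CM
    turn = KP * error            # positive = turn toward a right-hand wall
    if wall_on_left:
        turn = -turn             # mirror the steering for a left-hand wall
    return clamp(BASE_SPEED + turn), clamp(BASE_SPEED - turn)
```

A proportional-only loop is the simplest choice; it tends to weave slightly around the target distance, and adding a derivative term usually damps that.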

I've now added two more short-range IR sensors, front and back, facing the floor... so we don't fall down the stairs again.

I'm keeping the front and rear panning MaxSonar simply to fill the near-field gap that the LIDAR does not cover. Because of the distance between its optics, the OSLRF cannot see closer than half a meter. Also, like IR sensors, the laser is not great at detecting thin objects like chair legs, so I use the sonar to sweep the near field for collision avoidance as well.
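One way to combine the two sensors along a given bearing: ignore LIDAR readings inside its half-metre blind zone, and otherwise take the closer of the LIDAR and sonar readings, so a chair leg the laser slips past but the sonar's wide cone catches still registers. This is a hypothetical, conservative "nearest reading wins" policy sketched in Python, not necessarily how Bottwo fuses them; `fused_range` is an illustrative name.

```python
from typing import Optional

# From the post: the OSLRF cannot see closer than half a metre.
LIDAR_MIN_RANGE_M = 0.5


def fused_range(lidar_m: Optional[float], sonar_m: Optional[float]) -> Optional[float]:
    """Pick a single obstacle distance for one bearing (None = nothing seen).

    - LIDAR readings inside its blind zone are discarded, so only the
      sonar is trusted up close.
    - Otherwise the closer reading wins, which errs on the side of caution
      when the laser misses a thin object that the sonar picks up.
    """
    candidates = []
    if lidar_m is not None and lidar_m >= LIDAR_MIN_RANGE_M:
        candidates.append(lidar_m)
    if sonar_m is not None:
        candidates.append(sonar_m)
    return min(candidates) if candidates else None
```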

I hope to have video up soon of this in action.

Update: 03/01/2014

I accidentally left Bottwo "online" last night... Usually he is offline for charging or during my development, but there are small windows of time when others have logged in to drive him around the house remotely. Last night was apparently one of those nights...

Someone drove him to the edge of my basement stairs, and tested his "slinky" function.

Luckily, I had not yet mounted the scanning LIDAR on him, and the only things that broke were the camera/sonar pan/tilt and the sealed gel battery, which ripped off its cable... (and there's a dent in the hardwood floor... don't tell my wife!)

Tonight, after repairs, I will be adding front and rear Sharp IR GP2Y0D810Z0F floor sensors... Once my "Path Planning" algorithm is completed, I should be able to disallow motion into such areas.
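A cliff check like this can sit between the path planner (or the remote driver) and the motor driver, vetoing any motion toward a side where the floor sensor sees nothing. The sketch below assumes the GP2Y0D810Z0F's digital output reads LOW while a surface is within range and HIGH over a drop; check the polarity on your own breakout. `Motion` and `gate_motion` are hypothetical names.

```python
from enum import Enum


class Motion(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    ROTATE = "rotate"
    STOP = "stop"


def floor_present(level: int) -> bool:
    # Assumption: the digital output is LOW (0) while the sensor sees a
    # surface within its short range, and HIGH (1) over a drop.
    # Verify the polarity on your own breakout before trusting this.
    return level == 0


def gate_motion(requested: Motion, front_level: int, rear_level: int) -> Motion:
    """Veto any translation toward a side whose floor sensor sees no floor."""
    if requested is Motion.FORWARD and not floor_present(front_level):
        return Motion.STOP
    if requested is Motion.REVERSE and not floor_present(rear_level):
        return Motion.STOP
    # Rotating in place is treated as safe in this sketch.
    return requested
```

Because the gate only looks at the requested command and the two sensor levels, it works the same whether the command comes from a remote user on the web page or from an autonomous planner.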

UPDATE: Videos added.

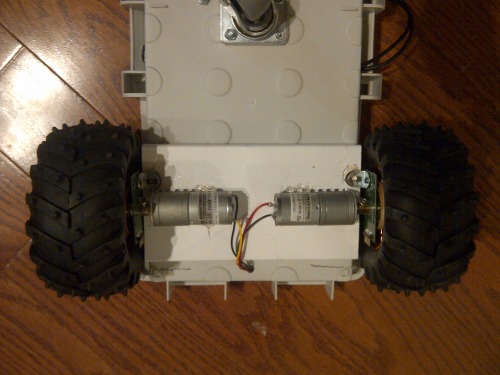

This is my second bot. I just started it a couple of days ago, and expect it will be a few more before I submit a video. This one has low-speed (50 rpm), high-torque motors.

My first one ran too fast for its wheel-edge encoders, and if you drove him slowly, he would stall under the weight.

Botoo (bot-2) will be equipped with:

- The I2C Sonar Pod that I'm working on.

- A set of standard Sharp 2Y0A02 IR sensors on the front/rear/left/right (I may also put two more at 45 degrees front-right/front-left).

- A pulsed line laser with a webcam for parallax ranging, and...

- I just bought a Kinect
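For the line laser and webcam, parallax ranging comes down to triangulation. This is a minimal sketch under a simple pinhole-camera model, assuming the laser plane runs parallel to the camera's optical axis at a known offset (the baseline); the focal length in pixels would come from a camera calibration. Pulsing the laser also makes the line easy to find: subtract a laser-off frame from a laser-on frame and the line is where the difference peaks. All names and numbers here are illustrative assumptions.

```python
import math
from typing import Sequence


def laser_row(column_on: Sequence[int], column_off: Sequence[int]) -> int:
    """Row where the laser line sits in one image column.

    Subtracting the laser-off frame from the laser-on frame cancels most
    ambient light, leaving the laser as the largest brightness difference.
    """
    diff = [on - off for on, off in zip(column_on, column_off)]
    return max(range(len(diff)), key=diff.__getitem__)


def line_laser_distance(row: float, center_row: float,
                        focal_length_px: float, baseline_m: float) -> float:
    """Pinhole-model range to the laser line in one column.

    Assumes the laser plane is parallel to the optical axis and offset by
    baseline_m, so the line's image offset shrinks as 1/distance.
    Returns math.inf when the line sits exactly on the centre row.
    """
    offset_px = abs(row - center_row)
    if offset_px == 0:
        return math.inf
    return focal_length_px * baseline_m / offset_px
```

With a hypothetical 600 px focal length and 5 cm baseline, a line 50 px from the centre row works out to 600 × 0.05 / 50 = 0.6 m. Resolution drops with distance, since each pixel of offset corresponds to a larger range step the farther away the target is.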

The purpose of this bot is to develop and refine routines for identifying landmarks (walls, doors, furniture) to allow for better interpretation of ranging data.

Update: 14/01/04

Got the power supplies, Raspberry Pi, and Arduino UNO up and running, and I'm running simple sketches to tune the wheel encoders.

Update: 14/01/07

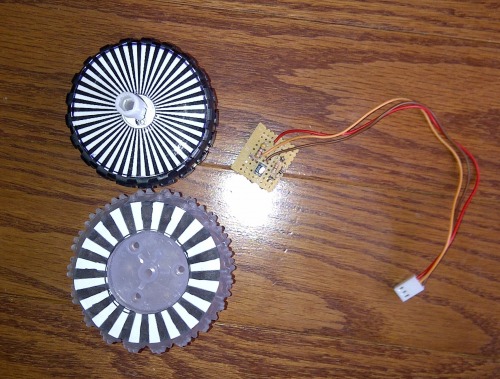

So, this is the old-school laser-printed encoder wheel and QRD1114 sensor that I'm using on Robbie... I will admit to wasting more time on this little POS circuit than on any other piece of this build.

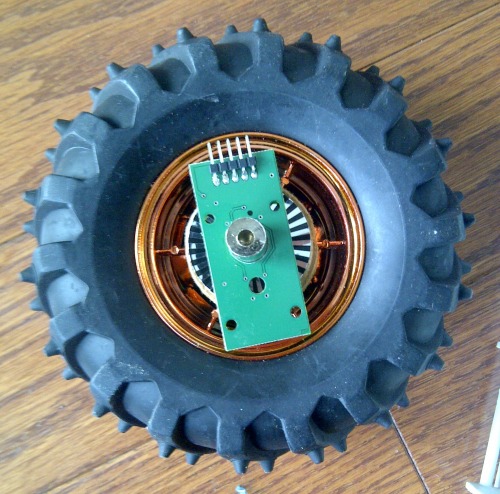

So, I treated myself to a commercial set of encoders from Solarbotics. As well as the typical quadrature encoder functions, they have a PWM-modulated CLK pin and a direction pin. (They also have a serial output with distance/velocity, but...)
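If you read the raw A/B quadrature channels rather than the CLK/direction outputs, a common decoding approach is a 16-entry lookup table indexed by the previous and current channel states. It's shown here in Python for readability; on the Arduino UNO the same table would typically live in a pin-change interrupt. Which direction counts as positive depends on wiring, and `QuadratureDecoder` is a hypothetical name.

```python
# Index = (previous_state << 2) | current_state, where state = (A << 1) | B.
# One direction steps 00 -> 01 -> 11 -> 10 -> 00 (+1 each); the other is -1.
# 0 means "no change" or an invalid jump where both channels changed.
_QUAD_TABLE = [
     0, +1, -1,  0,   # previous 00
    -1,  0,  0, +1,   # previous 01
    +1,  0,  0, -1,   # previous 10
     0, -1, +1,  0,   # previous 11
]


class QuadratureDecoder:
    def __init__(self, a: int, b: int) -> None:
        self.state = (a << 1) | b
        self.count = 0    # net ticks since start
        self.errors = 0   # transitions where a state was skipped

    def update(self, a: int, b: int) -> int:
        """Feed the current A/B levels; returns the step taken (-1, 0, +1)."""
        new = (a << 1) | b
        step = _QUAD_TABLE[(self.state << 2) | new]
        if step == 0 and new != self.state:
            # Both channels flipped at once: we sampled too slowly.
            self.errors += 1
        self.count += step
        self.state = new
        return step
```

A rising error count is a good sign the encoder is being polled too slowly, which is the same symptom as the first bot's too-fast wheels.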

I had to enlarge the hole by a few thou to get it over the hub of my new wheels. Not what Solarbotics intended, I'm sure...

Fine print warned me against using it on anything other than their GM 2/3/8/9 gear motors... My skull's too thick for that to register though...

And yes!!! That is hot glue holding it all together. Once I get the alignment validated... then we'll put in the screws!

Update: 14/01/10

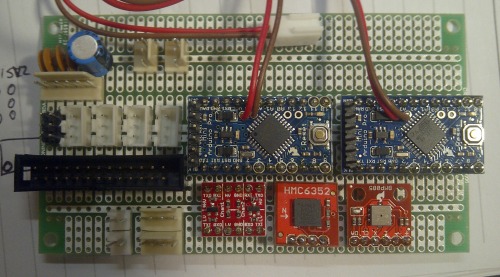

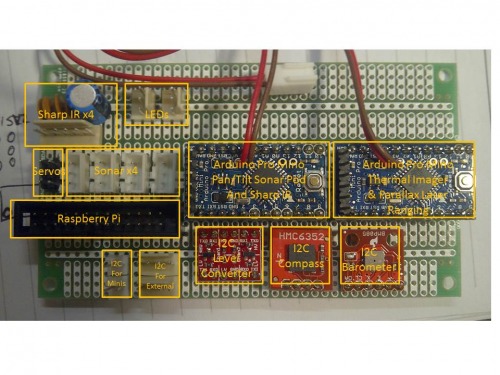

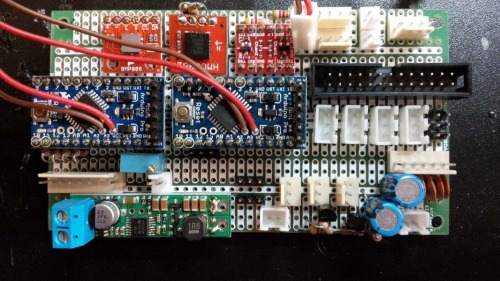

Telemetry control board - 1/2 completed...

Update: 14/01/16

Apologies for the slow progress on this. Three kids under 7 means little time to myself or my projects. :)

I'm all wired up now, and working on my code. If I were to admit to having any coding skills whatsoever, I'd have to say PHP is my comfort zone. However, I2C support on the Raspberry Pi is pretty much nonexistent in PHP.

I found https://github.com/tbrianjones/raspberry-pi-i2c-bus/blob/master/peripherals/i2c_bus.php to be a good start.

I'm expanding on it, using the Adafruit Python I2C bus code as a template.

I need to read/manage:

- HMC6352 compass module

- ADXL345 3 axis accelerometer

- BMP085 barometer and thermometer (also provides altitude via an algorithm)

- Arduino UNO motor driver / wheel encoders

- Arduino Mini Sonar Pod and IR proximity
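Whatever language ends up doing the bus reads, each device's raw bytes still need converting. Here is a Python sketch of those conversions, assuming the bytes have already been read over I2C. The HMC6352 returns its heading for the 'A' command as two bytes, MSB first, in tenths of a degree. The ADXL345 stores X/Y/Z as little-endian 16-bit two's-complement values starting at register 0x32 (about 3.9 mg per LSB in ±2 g or full-resolution mode). The BMP085 datasheet's altitude formula takes an already-compensated pressure in pascals. The function names are hypothetical.

```python
import struct
from typing import Tuple


def hmc6352_heading(raw: bytes) -> float:
    """Heading in degrees from the two bytes returned after an 'A' command."""
    return ((raw[0] << 8) | raw[1]) / 10.0   # MSB first, tenths of a degree


def adxl345_g(raw: bytes, g_per_lsb: float = 0.0039) -> Tuple[float, float, float]:
    """X, Y, Z acceleration in g from the six bytes starting at DATAX0 (0x32).

    Assumes ±2 g range or full-resolution mode (~3.9 mg/LSB); adjust
    g_per_lsb if the DATA_FORMAT register is set differently.
    """
    x, y, z = struct.unpack("<hhh", bytes(raw))   # little-endian signed 16-bit
    return x * g_per_lsb, y * g_per_lsb, z * g_per_lsb


def bmp085_altitude(pressure_pa: float, sea_level_pa: float = 101325.0) -> float:
    """Altitude in metres from compensated pressure (BMP085 datasheet formula)."""
    return 44330.0 * (1.0 - (pressure_pa / sea_level_pa) ** (1 / 5.255))
```

The altitude is only as good as the sea-level reference, so indoors it is more useful for relative changes (say, detecting a floor change) than as an absolute height.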

I would love to hear from anyone who has had any experience in PHP on the Pi....

Update 2: 14/01/16

It's been a rather productive, yet expensive, day. I somehow shorted out and destroyed my 18 V lithium-ion motor battery. Awesome!

So, I'm improvising with eight AA NiMH cells rated at 2100 mAh... we'll see how that does for now.

Here is a picture of its first "untethered" voyage...

... and yes... it hit the stack of DVDs. Apparently I was scanning right over the top of them.

Video to come soon. (Is this the part where I admit to my lack of skill at making/editing videos?)

Update: 14/01/26

I've replaced the dead 18 V lithium-ion battery with a standard 12 V gel cell. It's easier to charge and weighs a bit more, but... whatever...

I get bored easily, and have too many little things that I jump around between. Lately I've been working on various routines for "self-preservation". Nothing extraordinary, just typical things like: if the battery drops below a certain level, come back to base to charge. The latest one deals with wifi connectivity on the Raspberry Pi. The routine evaluates the wifi connection with the web server (commands coming in, telemetry going out), and if it hasn't connected in a while, or the wifi signal is too weak (small USB dongle inside the chassis... bad idea...), the rover seeks out a stronger signal. Sounds great in theory.

So I went downstairs this morning to find the rover huddled in my living room directly below the wifi router... battery dead as a doornail. Upon reading the logs, it appears that I accidentally connected the routine that sends him back to the charging station on low battery to the new one that attempts to correct wifi issues. Battery got low, so he looked for a stronger signal! Makes sense to me...
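One way to prevent that mix-up is to make the self-preservation routines an explicit priority list, so a low battery always wins over a weak signal. Here is a minimal sketch of that arbitration in Python, with hypothetical thresholds (11.8 V for the 12 V gel cell, -75 dBm, 60 s without contact with the web server) and hypothetical behavior names.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune for the actual battery and wifi dongle.
LOW_BATTERY_V = 11.8
WEAK_WIFI_DBM = -75
SERVER_TIMEOUT_S = 60.0


@dataclass
class Status:
    battery_v: float
    wifi_dbm: int
    seconds_since_server_contact: float


# Highest priority first. Each rule is (trigger, behavior); the first rule
# whose trigger fires wins, so the wifi rule can never override the dock rule.
RULES = [
    (lambda s: s.battery_v < LOW_BATTERY_V, "return_to_dock"),
    (lambda s: s.wifi_dbm < WEAK_WIFI_DBM
     or s.seconds_since_server_contact > SERVER_TIMEOUT_S, "seek_wifi"),
]


def choose_behavior(status: Status) -> str:
    for triggered, behavior in RULES:
        if triggered(status):
            return behavior
    return "idle"
```

Adding a new routine then means inserting a rule at the right priority, rather than wiring it into an existing one.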

BTW... I said something like "Awwwww... it looks like he was trying to get a better signal..." within earshot of my wife... She just looked at me and said, "He?..."

Update: 14/01/27

Let's call this update "I'm no mechanical engineer!"

If you look at my pictures, this is a two-wheel differential drive with a trailing caster wheel... The caster is small, which tilts the chassis. I kind of liked the look of that, so I didn't think to (or bother to) correct it.

I've been wondering why turning has been "lurchy", as well as transitions from forward to reverse....

As it turns out, I should have just looked underneath during such a "transition". Because the caster's pivot is at an angle, the offset wheel, as it swivels, actually has to lift or drop the chassis... including the gel-cell battery mounted behind center...
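A rough estimate of the lift, under simplifying assumptions (ignoring wheel camber and tyre squish): if the swivel axis is tilted by an angle $\theta$ from vertical in the fore-aft direction, and the wheel's axle trails that axis by an offset $d$, then as the caster swings through an angle $\varphi$ the axle's height relative to the pivot varies as $h(\varphi)$ below. Between trailing fully behind and fully in front, the rear of the chassis therefore rises or falls by about $\Delta h$:

$$
h(\varphi) = -d \sin\theta \cos\varphi, \qquad \Delta h = h_{\max} - h_{\min} = 2\,d \sin\theta
$$

For example, a hypothetical 25 mm offset with a 10° tilt gives about 2 × 25 × 0.174 ≈ 8.7 mm, which is plenty to make a heavy battery lurch. A larger caster levels the chassis and reduces $\theta$, which shrinks the lift along with it.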

Here is the "slope" of the chassis moving forward...

Here is the "slope" of the chassis moving in reverse....

I simply raise this issue to help others who may run into it. Tonight, I will either be adding a spacer to lift the chassis or, preferably, installing a larger ball caster.

Update: 14/02/18

Just some new pictures...

Profile (Ain't he cute?)

Head on... notice the laser line level and Raspberry Pi camera (PiCam) front and center for future ranging...

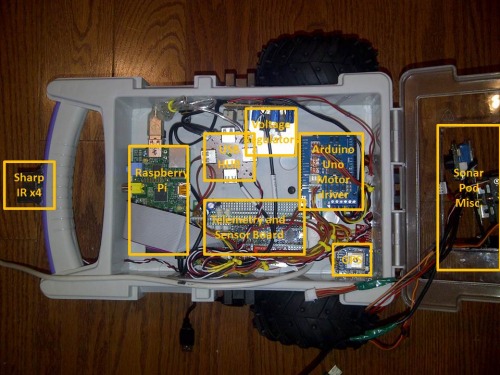

And this is the glue that ties the Pi to all of the sensory input....

Evaluates combinations of ranging sensors - Efficiently identifies and tags landmarks (yeah.. right...)

- Actuators / output devices: two 50 rpm, 1:250 all-metal gearmotors; two pan/tilt servos

- Control method: semi-controlled; the Raspberry Pi runs a web page for control

- CPU: Raspberry Pi for main control, Arduino UNO for motors/encoders and I2C sensors, Arduino FIO for Sharp IR and MaxSonar sensors

- Operating system: Linux, Arduino

- Power source: 18 V 4000 mAh LiPo for motors, dual 5 V USB 8000 mAh LiPo packs for electronics.

- Programming language: Arduino, Python, CLI PHP

- Sensors / input devices: MS Kinect, wheel encoders, Sharp IR x6, MaxSonar x2 (on servo pod), Parallax line laser/webcam

- Target environment: indoor for now...

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/bottoo-platform-to-test-ranging-sensors-and-algorithms