I have been working on an AI implementation for 14 months, ever since my daughter was born. I have notebooks full of observations of human behavior from newborn to child, and I am still trying to formulate them mathematically. This research field is called mathematical psychology. It is far too complex, and too early, to show it here. In the meantime I thought about a much simpler AI implementation and came across the so-called variable structure stochastic learning automaton (VSLA). It is similar to the approach David L. Heiserman describes in his book How to Build Your Own Self-Programming Robot, but more advanced in some respects.

Usually I start with a 3-D drawing of the robot, then build the mechanical parts and the electronics, and program the robot at the end. This time I am reversing the process: I literally started by 'doing the math' behind the AI program to evaluate the system requirements and its possibilities. Fortunately an Arduino is sufficient for this project. For a basic demonstration of a learning process no additional memory is necessary. Russell has also started an AI project here, using a Micro Magician Pro, and he has kindly provided (or rather: will provide) me with a Micro Magician Pro too, so we can directly compare our research results.

So far I have solved all the mathematical issues caused by the restricted math functions of common microcontroller IDEs. Based on my mathematical considerations, I have written a simulation in Mathematica, and it works as intended. The next step is to write an interactive program in Arduino C. You just need your keyboard and the serial monitor. You are the robot's environment. Each time the interactive program has chosen an action, you decide by pressing '0' or '1' on your keyboard whether the action was favorable or unfavorable. After a while you will see that the robot has learned to avoid unfavorable actions. You can change your responses at any time and the robot will adapt its behavior to the new situation.

I will post the code in three parts. Each part works on its own, so you can experiment with it and figure out how it works. The first part will be the 'algorithm of choice', the second the 'updating rule T', and the third the complete interactive program.

The math behind the concept is trivial. Only a little knowledge of elementary algebra, set theory, probability theory and limits is necessary. I have listed some links below that might help you. As I am using quite a lot of mathematical notation and equations and LMR has no formula editor, I have attached the concept study as a PDF for better readability.

- Variable structure stochastic learning automaton

- Probability theory

- Limit of a function

- Rational numbers

- Natural numbers

- List of mathematical symbols
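For orientation, the standard textbook formulation of a VSLA is summarized below. This is a sketch in common notation, assuming r actions (the first Baby Bot programs use r = 4 with probabilities p1 to p4); the symbols in the attached concept study may differ. At step n the automaton chooses action α(n) according to the probability vector p(n), receives the binary response β(n) from the environment, and the updating rule T computes the new probability vector.

$$
\begin{aligned}
&\alpha(n) \in \{\alpha_1, \dots, \alpha_r\}, \qquad \beta(n) \in \{0, 1\} \quad (0 = \text{favorable},\ 1 = \text{unfavorable}) \\
&p(n) = \bigl(p_1(n), \dots, p_r(n)\bigr), \qquad p_i(n) = P\bigl(\alpha(n) = \alpha_i\bigr), \qquad \sum_{i=1}^{r} p_i(n) = 1 \\
&p(n+1) = T\bigl[p(n), \alpha(n), \beta(n)\bigr]
\end{aligned}
$$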

Update October 13/2012

The first test runs with the AI program were a success. In the current mode the robot learns to find the best strategy for escaping from obstacles (see video). I have attached the program I used for these experiments. Contrary to my previous assumption, I could reduce the program size for a simple obstacle avoidance learning behavior to 15,086 bytes after deleting all print commands and making some other small modifications.

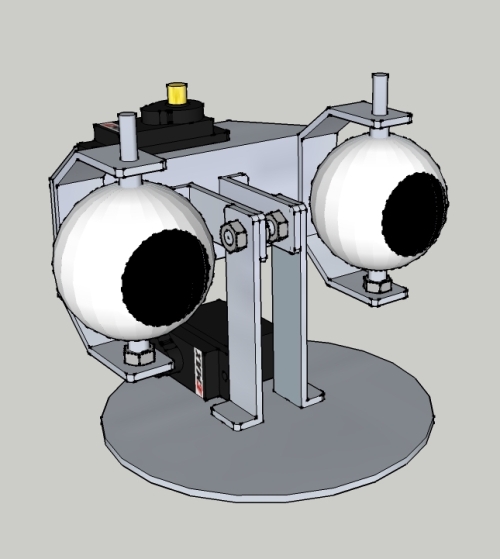

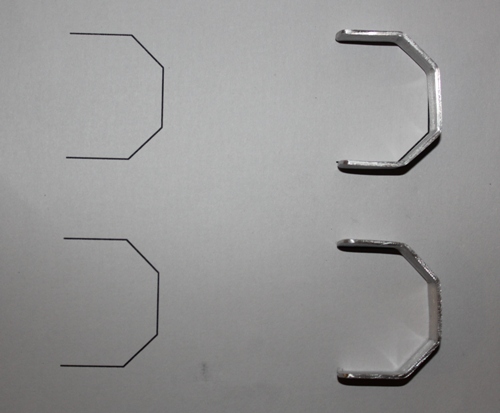

Next I'll try to simplify the complex nested if-else statements and arrays and build a library, so that n actions can easily be generated for more complex learning. Furthermore, I'll work on the head unit of Baby Bot and on speech. So far I have done a design study of the head and fabricated the eyes (Christmas tree balls) and eye holders. The head will be equipped with two photodiodes to measure the light conditions in a room precisely.
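One way to replace nested if-else statements with a loop over an array, so that the number of actions n becomes a parameter, is cumulative-sum ("roulette wheel") selection. The following is a minimal sketch under that assumption, not the attached program; `choose_action` is a hypothetical name, and the caller has to supply a uniform random number r in [0, 1).

```c
#include <stddef.h>

/* Hypothetical helper: pick an action index from the probability vector
 * p[0..n-1] (assumed to sum to 1), given a uniform random number r in [0, 1).
 * Each action owns a consecutive interval of [0, 1) whose length is its
 * probability; the action whose interval contains r is chosen. */
size_t choose_action(const float *p, size_t n, float r)
{
    float cumulative = 0.0f;
    for (size_t i = 0; i < n; i++) {
        cumulative += p[i];
        if (r < cumulative) {
            return i;
        }
    }
    /* Rounding can leave the total slightly below 1: fall back to the last action. */
    return n - 1;
}
```

On an Arduino, r could for example be produced with `random(10000) / 10000.0`; adding an action then only means adding one array element instead of another if-else branch.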

Update October 11/2012

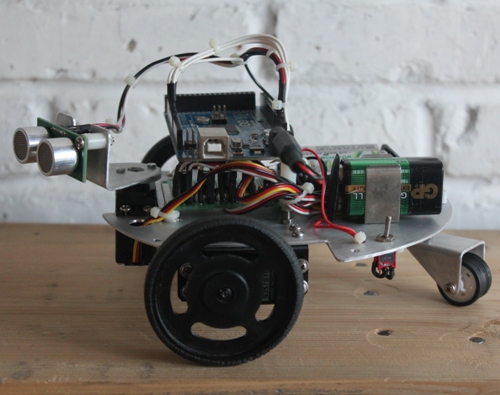

Basic testing platform for the AI program finished. The robot is controlled by an Arduino Mega 2560 at the moment. The flash memory of the Micro Magician Pro (32 kB) does not seem to be sufficient. I am expecting a program size of approx. 40 to 50 kB for a simple obstacle avoidance learning behavior, and I want to have some spare memory for other experiments.

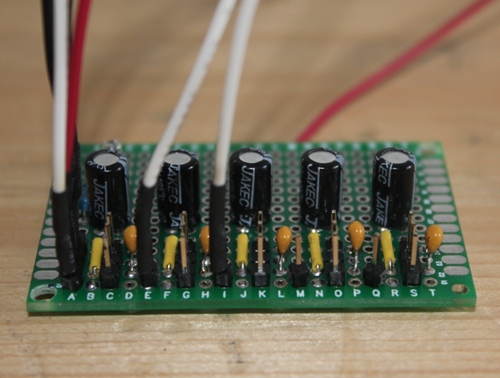

Unfortunately most microcontroller boards still have no 3-pin headers to connect servos directly, so I made a small breakout board. Every servo has its own decoupling capacitors (100 µF/100 nF). I also added a power indicator (LED), as I am using two power supplies (9 V for the Arduino and sensors, 4.8 V NiMH/2000 mAh for the servos). Both power supplies have separate ON/OFF switches.

Update October 10/2012

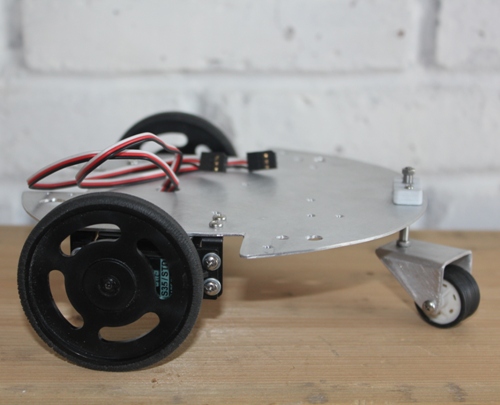

Some further work on the 2WD base done. The free wheel on the rear is from a toy car which I 'borrowed' from my daughter...

Update October 9/2012

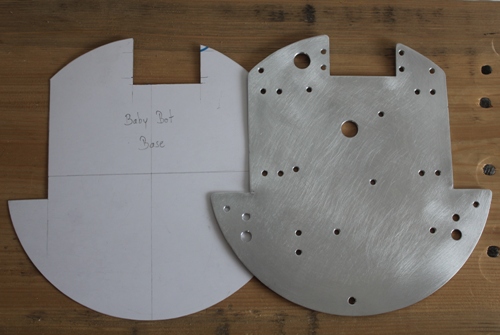

Managed to fabricate the base plate of the robot today. The base plate is made from 1.5 mm aluminium sheet. I started with a cardboard stencil to check that everything fit well on the base before I cut the aluminium sheet with tin snips and a Dremel.

Update October 8/2012

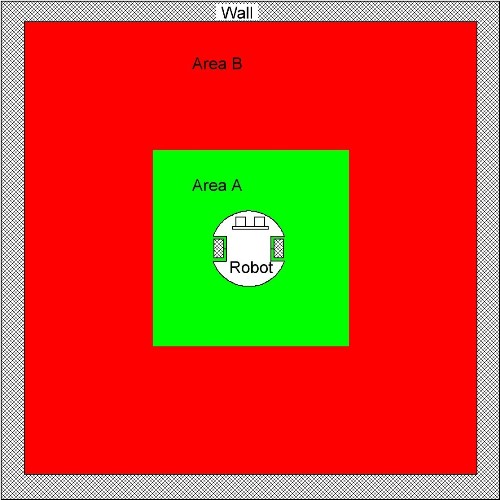

In the first experiments the robot is to learn to avoid obstacles. Proper feedback from the environment, and its processing, are certainly important. After considering some other models, I came up with the following set-up:

The environment is divided into an area A and an area B. Area B lies within a certain range of obstacles and should be avoided. Area A is the safe area. The robot shall now learn to avoid area B. The robot only learns while it is in area B. If the robot is in area A, it only makes random moves without updating the probabilities; otherwise it could lose the memory of what it has already learned from the environment.

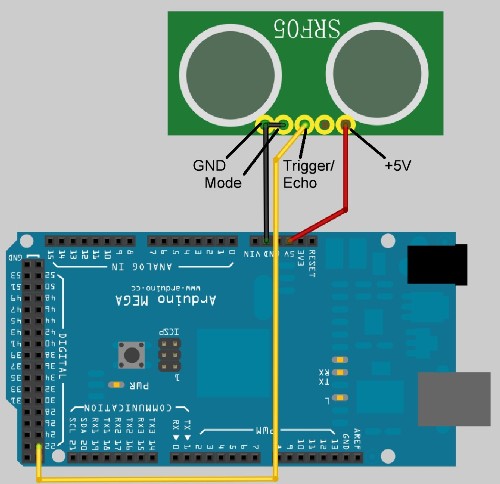

An SRF05 is used for distance measurement, connected to the board (an Arduino Mega 2560 for the moment) in single-pin mode.

I have written the following short sketch to convert the distance readings into a binary response from the environment. I am using the raw data (pulse length in microseconds) to get better resolution.

```c
unsigned long ping(int e)  //declare function for SRF05 reading
{
  unsigned long result;
  pinMode(e, OUTPUT);        //define digital pin as an output pin
  digitalWrite(e, LOW);      //pin must be LOW before setting it HIGH to trigger ranging
  delayMicroseconds(2);      //keep pin LOW for 2 µs
  digitalWrite(e, HIGH);     //set pin HIGH
  delayMicroseconds(10);     //keep pin HIGH for 10 µs
  digitalWrite(e, LOW);      //set pin LOW
  pinMode(e, INPUT);         //define digital pin as an input pin
  result = pulseIn(e, HIGH); //read out pulse length in µs (pulseIn returns unsigned long)
  return result;
}

int echopin = 22;                //define digital pin on Arduino for ping trigger/echo

unsigned long saferange = 1276;  //at this range every action is favorable:
                                 //just choose an action randomly,
                                 //but do not update the probability values,
                                 //otherwise the robot will lose the memory of what it
                                 //might have already learned

unsigned long distance_i;        //distance at a time t
unsigned long distance_j;        //distance at a time t+1
int beta;                        //binary feedback from the environment

void setup()
{
  Serial.begin(9600);
}

void loop()
{
  distance_i = ping(echopin);    //check distance

  if (distance_i > saferange)    //is robot in safe area?
  {
    //just play
    Serial.println("safe area"); //print result
    Serial.println(" ");
    delay(50);                   //the SRF05 documentation recommends at least 50 ms between pings
  }
  else
  {
    //switch to learning mode
    //choose an action and perform it
    delay(500);
    distance_j = ping(echopin);  //check distance after performed action

    if (distance_j > distance_i)
    {
      beta = 0;  //action was favorable, update probabilities accordingly
    }
    else
    {
      beta = 1;  //action was unfavorable, update probabilities accordingly
    }

    Serial.print("distance_i = ");  //print result
    Serial.println(distance_i);
    Serial.print("distance_j = ");
    Serial.println(distance_j);
    Serial.print("beta = ");
    Serial.println(beta);
    Serial.println(" ");
    delay(300);
  }
}
```
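The decision logic of this sketch can be separated from the hardware, which makes it easy to test on a PC. Below is a minimal sketch in plain C, assuming the conversion given in the SRF05 documentation (echo pulse in microseconds divided by 58 gives centimeters); the names `environment_feedback`, `pulse_to_cm` and `SAFE_RANGE_US` are hypothetical. It also shows that the threshold saferange = 1276 µs corresponds to about 22 cm.

```c
/* Hypothetical threshold: same value as saferange in the sketch (echo pulse in microseconds). */
#define SAFE_RANGE_US 1276UL

/* Convert an SRF05 echo pulse length (microseconds) to centimeters using the
 * divide-by-58 rule from the SRF05 documentation (integer division truncates). */
unsigned long pulse_to_cm(unsigned long pulse_us)
{
    return pulse_us / 58UL;
}

/* Binary response of the environment:
 *  -1  robot is in area A (safe area): act randomly, do not update probabilities
 *   0  favorable: the distance increased after the action
 *   1  unfavorable: the distance stayed the same or decreased */
int environment_feedback(unsigned long distance_i, unsigned long distance_j)
{
    if (distance_i > SAFE_RANGE_US) {
        return -1;
    }
    return (distance_j > distance_i) ? 0 : 1;
}
```

Note that Arduino's `pulseIn()` returns 0 if no complete pulse arrives before its timeout; this logic would treat such a reading as area B, so a real sketch may want to filter out zero readings.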

I’ll now start to work on the hardware side, starting with the base of the robot.

Update October 6/2012

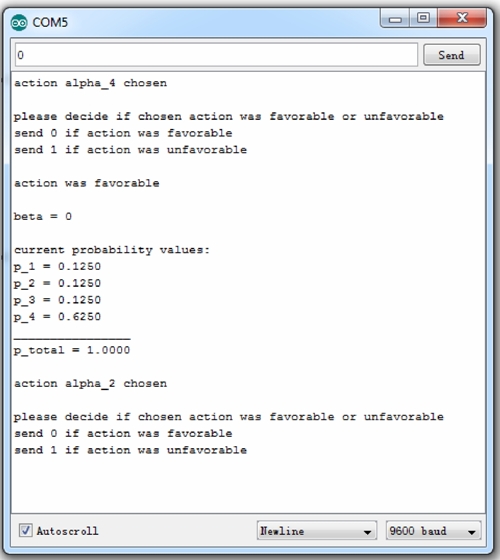

Interactive AI program finished and attached. I have set the default learning parameter a to 1/2 in the code, but you can change it as described in my previous update. Just send ‘0’ or ‘1’ via the serial monitor to indicate whether a chosen action was favorable or unfavorable, and watch how the virtual robot learns to choose the right action over time.

Update October 5/2012

We’re getting closer to a working AI program. I have finished the updating rule T program part (see attachment). This part also works on its own if you want to experiment with it. Just send ‘0’ or ‘1’ via the serial monitor to indicate whether a chosen action was favorable or unfavorable, and see the updated probability values.

You can also change the learning parameter a by hand by changing the values of the numerator u and the denominator v in the code:

```c
//
// Declaring constants
//
float u=1.0; //define numerator of learning parameter. u must be a natural number >0
float v=2.0; //define denominator of learning parameter. v must be a natural number >0
float a=u/v; //define a as a fraction of u and v. a must be >0 and <1, i.e. u < v
```

The learning parameter a defines the rate of convergence. If the value of the learning parameter is too small, it takes a long time until the robot learns a task; if it is too large, the robot might misinterpret data from the environment.
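The updating rule T itself is defined in the attached concept study. For comparison, here is a minimal sketch of the textbook linear reward-penalty scheme (L_R-P) for n actions, with the same learning parameter a for reward and penalty. It is an assumption, not necessarily the author's rule T, and it works on float probabilities rather than the numerator/denominator pairs used in the program; `update_lrp` is a hypothetical name.

```c
#include <stddef.h>

/* Textbook linear reward-penalty update (assumed scheme, not necessarily rule T).
 * p:    action probabilities, assumed to sum to 1
 * n:    number of actions (n >= 2)
 * i:    index of the action that was just performed
 * beta: 0 = favorable (reward), 1 = unfavorable (penalty)
 * a:    learning parameter, 0 < a < 1
 * Both branches keep the sum of all probabilities equal to 1. */
void update_lrp(float *p, size_t n, size_t i, int beta, float a)
{
    for (size_t j = 0; j < n; j++) {
        if (beta == 0) {
            /* reward: move p[i] towards 1 and scale the other actions down */
            p[j] = (j == i) ? p[j] + a * (1.0f - p[j]) : (1.0f - a) * p[j];
        } else {
            /* penalty: move p[i] towards 0 and share the freed probability equally */
            p[j] = (j == i) ? (1.0f - a) * p[j] : a / (float)(n - 1) + (1.0f - a) * p[j];
        }
    }
}
```

With a = 1/2 and four equally likely actions, each reward halves the remaining distance of p_i to 1, so after k consecutive rewards p_i = 1 − 0.75·(1/2)^k. A smaller a shrinks this step, which is the slower convergence described above.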

During programming I ran into a problem with variables "rolling over" that define the probability fractions. I solved it by implementing the Euclidean algorithm to find the greatest common divisor (gcd). The numerator and denominator are then divided by the gcd to reduce the fraction as far as possible. Even with the Euclidean algorithm, the variables can still "roll over" if no gcd > 1 can be found (for instance if the denominator approaches a prime number greater than the maximum capacity of the variable). I’ll add a solution for that later if necessary.
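A minimal C sketch of the Euclidean algorithm and the fraction reduction described above follows; the function names are hypothetical. It also adds an overflow test that could be run before multiplying two numerators or denominators, assuming they are stored as unsigned long (32 bits on AVR Arduinos). Note that the sketches in this post store numerators and denominators in float; on AVR Arduinos float (and double) is a 32-bit IEEE type that represents integers exactly only up to 2^24 = 16,777,216, so beyond that the values silently lose precision instead of rolling over.

```c
#include <limits.h>

/* Euclidean algorithm: greatest common divisor of x and y. */
unsigned long gcd(unsigned long x, unsigned long y)
{
    while (y != 0) {
        unsigned long r = x % y;
        x = y;
        y = r;
    }
    return x;
}

/* Reduce the fraction num/den in place as far as possible. */
void reduce_fraction(unsigned long *num, unsigned long *den)
{
    unsigned long g = gcd(*num, *den);
    if (g > 1) {
        *num /= g;
        *den /= g;
    }
}

/* Hypothetical helper: returns 1 if x * y would exceed ULONG_MAX ("roll over"). */
int mul_would_overflow(unsigned long x, unsigned long y)
{
    return x != 0 && y > ULONG_MAX / x;
}
```

For example, 6/34 is reduced to 3/17, while 3/17 itself stays unchanged because gcd(3, 17) = 1.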

The next step is to merge the two program parts into the final interactive AI program, which simulates the machine learning of the robot. It is then very simple to modify this program so that it works on a real robot. Besides that, I am working on the design of an external hardware true random number generator, and the corresponding sampling, to replace the primitive pseudo-random function random(), which is only suitable for testing and demonstration purposes.

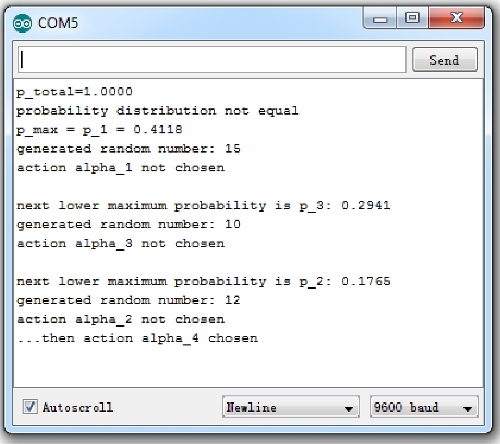

Update October 2/2012

I finished a first version of the ‘algorithm of choice’ program. The program is too long to post here, so I have attached it as a zip file. You can experiment with it: just upload it to your board and open the serial monitor. You can use the default probability values p1 to p4, or change the corresponding t1 to t4 and y1 to y4 values to get new probabilities (then you have to upload the updated sketch to your board again). When you press the reset button on your board, a new decision is made, possibly with a different result, even if the probability values are the same.

```c
//
// Defining probability values
//**************
float t_1=7.0;  //define numerators and denominators of probability values
float y_1=17.0; //these values must be changed by hand at the moment
float t_2=3.0;  //later the updating function T will take this over
float y_2=17.0; //all t must be natural numbers ≥ 0, all y must be natural numbers >0
float t_3=5.0;  //all fractions t/y must be ≥ 0 and ≤ 1
float y_3=17.0; //the sum of all fractions must always be equal to 1
float t_4=2.0;
float y_4=17.0;
//
//
```

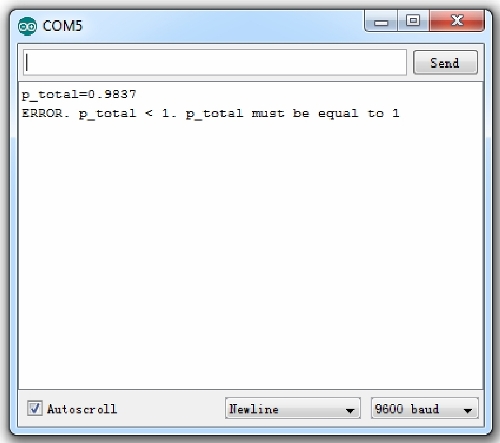

Take care that the sum of p1 to p4 is always equal to 1; otherwise you will get the following error message. Please also follow the other comments in the sketch excerpt above.
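Because the program reports an error when the probabilities do not add up to 1, a check like the following could be run before the fractions are used. This is a sketch, assuming the t and y values are kept in float arrays instead of the separate variables t_1 to y_4; `probabilities_valid` is a hypothetical name. It compares the sum with a small tolerance, because float divisions such as 7.0/17.0 are rounded and an exact comparison with 1.0 may fail even for valid fractions.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical check for n fractions t[k]/y[k]:
 * every denominator y[k] must be > 0, every fraction must lie in [0, 1],
 * and the fractions must sum to 1 within a small rounding tolerance. */
bool probabilities_valid(const float *t, const float *y, size_t n)
{
    float sum = 0.0f;
    for (size_t k = 0; k < n; k++) {
        if (y[k] <= 0.0f || t[k] < 0.0f || t[k] > y[k]) {
            return false;
        }
        sum += t[k] / y[k];
    }
    float diff = sum - 1.0f;
    return diff > -1e-5f && diff < 1e-5f;
}
```

With the default values (7, 3, 5, 2 over 17) the check passes; changing t_4 to 3 gives 18/17 and the check fails.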

Here is a tip on how to find four (or more) fractions whose sum is always 1:

1. Start by writing down a fraction whose denominator (bottom of the fraction) and numerator (top of the fraction) are equal, e.g.:

$$\frac{17}{17}$$

2. Write the numerator as a sum of 4 natural numbers and substitute it, e.g.:

$$\frac{7+3+5+2}{17}$$

3. Split the fraction as follows and you’re finished:

$$\frac{7}{17}+\frac{3}{17}+\frac{5}{17}+\frac{2}{17}=1$$

If you have managed that, or if you just use the default values, you should get something like this:

The next step is to write the ‘updating rule T’ program part. This part will then automatically update the probabilities p1 to p4 according to the updating rule T each time a performed action has been judged favorable or unfavorable. After that I will merge the two programs into the final AI program.

Learns

- Actuators / output devices: two servos modified for continuous rotation, one servo for the ultrasonic range finder

- Control method: autonomous

- CPU: Arduino Mega 2560

- Operating system: Windows 7

- Power source: 4 x 1.2 V NiMH/2000 mAh, 1 x 9 V

- Programming language: Arduino C

- Sensors / input devices: SRF05

- Target environment: indoors

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/baby-bot