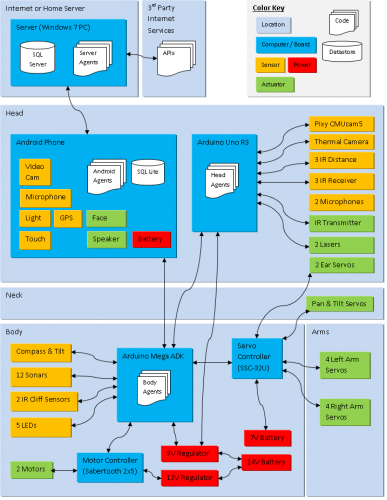

This is Ava. Ava is a friendly internet-connected home companion robot that mostly likes to talk and learn new things. To do this, she uses natural language processing, several databases with millions of memories, and hundreds of software agents. While she has some IQ in that she can answer many kinds of questions from her memory or using many web services, I plan to focus on improving her EQ (Emotional Intelligence Quotient) in the coming months and years. This involves emotions, empathy, curiosity, initiative, comprehension, and an extensive DB of personal data and social rules.

8/24/18 Update - New Calculation Engine, Neural Nets, Tensorflow

Inspired by demej00 and his self balancing bot using a Neural Net, I decided to take the plunge myself. This is what I did.

Generic Neural Net Engine and Model(s)

To learn, I studied an example of a 3-layer NN on Arduino and watched hours of YouTube videos. I then created a generic object-oriented N-Level Neural Net engine in C#, with a database where I can define the hyperparameters, layers, training data, persist the model weights, etc. This required me to use a lot more calculus than I had used since taking it in High School. Done. Now, I can create a new NN, with zero coding, wire it up to some inputs and outputs, train, persist it, and use it for predictions. Pretty cool I think.
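To make the idea concrete, here is a minimal sketch of a network whose shape is driven by data rather than code. It is written in Python instead of the C# engine described above, and the class name, the sigmoid activation, and the squared-error update are my assumptions, not details of Ava's actual engine.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class NeuralNet:
    """Fully connected net defined only by a list of layer sizes (hypothetical)."""

    def __init__(self, layer_sizes, learning_rate=0.5, seed=0):
        rng = random.Random(seed)
        self.lr = learning_rate
        # weights[k][j] holds the incoming weights of unit j in layer k + 1;
        # the last entry of each row is that unit's bias.
        self.weights = [
            [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
        ]

    def forward(self, inputs):
        """Return the activations of every layer, input layer first."""
        activations = [list(inputs)]
        for layer in self.weights:
            prev = activations[-1] + [1.0]  # constant input for the bias
            activations.append(
                [sigmoid(sum(w * a for w, a in zip(row, prev))) for row in layer]
            )
        return activations

    def predict(self, inputs):
        return self.forward(inputs)[-1]

    def train_one(self, inputs, targets):
        """One backpropagation step on a single example (squared error)."""
        acts = self.forward(inputs)
        deltas = [(o - t) * o * (1 - o) for o, t in zip(acts[-1], targets)]
        for k in range(len(self.weights) - 1, -1, -1):
            prev = acts[k] + [1.0]
            prev_deltas = []
            if k > 0:
                # Push the error back through the weights before changing them.
                prev_deltas = [
                    sum(self.weights[k][j][i] * deltas[j] for j in range(len(deltas)))
                    * acts[k][i] * (1 - acts[k][i])
                    for i in range(len(acts[k]))
                ]
            for j, row in enumerate(self.weights[k]):
                for i in range(len(row)):
                    row[i] -= self.lr * deltas[j] * prev[i]
            deltas = prev_deltas
```

Something like `NeuralNet([2, 3, 1])` would build a net with 2 inputs, 3 hidden units, and 1 output. Calling `train_one` repeatedly over the stored training rows is the training loop, and the `weights` list is what would be persisted.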

Generic Business Rules Engine

Ava uses "Virtual Business Objects" to model underlying database entities. These objects have various events (OnLoad, OnSave, etc.). Ava has a generic business rules engine that can be invoked on any of these events. If business rules exist (also defined in tables with no code), they are evaluated and any resulting actions are executed. Any business rule can have pre-conditions and post-conditions. This makes them conditional (like If...Then logic). This engine allows Ava to do all the things that happen in typical business software packages...without code.
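A rough sketch of what table-driven rules could look like, in Python rather than C#. The rule fields (`event`, `if`, `set`) and the sample rows are invented for illustration, and post-conditions are left out to keep it short.

```python
import operator

# Comparison names that a rule row may use in its pre-condition.
OPS = {">": operator.gt, "<": operator.lt, "==": operator.eq}

# Stand-in for rows in a rules table: no rule-specific code, only data.
RULES = [
    {"event": "OnSave", "if": ("total", ">", 1000), "set": ("needs_approval", True)},
    {"event": "OnSave", "if": ("total", "<", 0), "set": ("valid", False)},
    {"event": "OnLoad", "if": None, "set": ("loaded", True)},
]


def fire(event, obj, rules=RULES):
    """Run every rule registered for this event and return the ones that fired."""
    fired = []
    for rule in rules:
        if rule["event"] != event:
            continue
        condition = rule["if"]
        if condition is not None:
            field, op, value = condition
            if not OPS[op](obj[field], value):
                continue  # pre-condition not met, skip the action
        name, new_value = rule["set"]
        obj[name] = new_value
        fired.append(rule)
    return fired
```

For example, `fire("OnSave", {"total": 1500})` would set `needs_approval` on the object and leave `valid` untouched.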

Generic Calculation Engine

This is part of the business rules engine. A calculation is merely something that accepts named inputs and returns named outputs. This is a generic interface so that many different types of calculations can be supported. The idea is to simplify and abstract the concept of calculations from their implementations and make the interfaces robust and reusable. I tied this into my business rules engine, so I could control timing and pre-conditions for executing and sequencing calculations. There is a generic piece for mapping inputs and outputs, as the names of things in the context of an object will be different from the names of things inside a reusable calculation module (like a script, spreadsheet, proc, etc.).
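The named-inputs, named-outputs idea and the mapping piece can be sketched like this in Python. The class names, the `run_mapped` helper, and the room example are hypothetical and only show the shape of the interface.

```python
class Calculation:
    """Anything that accepts named inputs and returns named outputs."""

    def run(self, inputs):
        raise NotImplementedError


class AreaCalc(Calculation):
    """A reusable module that only knows its own names: w, h, area."""

    def run(self, inputs):
        return {"area": inputs["w"] * inputs["h"]}


def run_mapped(calc, obj, input_map, output_map):
    """Translate object field names to calculation names and back.

    input_map:  object field name -> calculation input name
    output_map: calculation output name -> object field name
    """
    inputs = {calc_name: obj[obj_name] for obj_name, calc_name in input_map.items()}
    outputs = calc.run(inputs)
    for calc_name, obj_name in output_map.items():
        obj[obj_name] = outputs[calc_name]
    return obj
```

With a room object such as `{"RoomWidth": 3, "RoomLength": 4}`, mapping `RoomWidth` to `w`, `RoomLength` to `h`, and `area` to `FloorArea` writes the result back under the object's own name. Any other engine (spreadsheet, stored proc, script) would plug in behind the same `run` method.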

So far, I have built modules to do the following types of calculations:

· Code Calcs: The calculation is performed in a C# class that implements a Calculation Interface.

· Database Calcs: This agent calls stored procedures or functions to perform calculations. The arguments are mapped to the parameters on the procs.

· Excel Calcs: This agent calls spreadsheets to perform calculations. The arguments are mapped to named ranges in the workbooks. This means I can use models using the Solver Add-In (a non-linear optimizer). I can obviously use any Excel formula or spreadsheet that anyone has written too.

· Internal Neural Nets: This agent invokes the neural net models that I previously described. The arguments are mapped to the input layer.

· Wolfram Alpha Calcs: This agent calls Wolfram Alpha to perform calculations. The arguments are mapped to variables in some underlying formula stored in a db. Since Wolfram supports deploying calculations as a URL with args passed in, I will also support that mode when the need arises.

· Python (using IronPython): This agent calls Python scripts to perform calculations. The arguments are mapped to variables in the Python script. I use the IronPython library for running Python from C#. IronPython is not perfect; for example, I can't use numpy with it. That's why I am working on the Jupyter option as well.

The following are calculation types I am still working on:

· Tensorflow Models: I have been spending most of my time learning Tensorflow lately, many hours a day. Ultimately, I plan on using multiple Tensorflow NN models in my bots for things like Word Vectors, Sentence Similarity, Image Recog, etc. I am currently learning by using Co-Labs Jupyter Notebooks and the many examples from SeedBank. If you are at all interested in NNs, I recommend starting here.

· Co-Labs Jupyter Notebooks: This option is used as a gateway to Tensorflow. It seems to simplify or eliminate my python compatibility issues. The idea works like this...convert a Jupyter notebook to run on a web server and accept args through the url, passing back results as json. I have prototyped this for a simple case and it works. The next step will be to try it on a full-size Tensorflow model.
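The "args through the URL, results back as JSON" idea can be sketched without a web server at all. In this Python sketch the `model` function, the argument names `x` and `y`, and the URL are placeholders for whatever the notebook actually computes; it is not the prototype described above.

```python
import json
from urllib.parse import parse_qs, urlparse


def model(x, y):
    # Placeholder for the notebook's real calculation.
    return {"sum": x + y, "product": x * y}


def handle(url):
    """Read named arguments from the query string and return results as JSON."""
    query = parse_qs(urlparse(url).query)
    # parse_qs gives a list per name; take the first value and treat it as a number.
    args = {name: float(values[0]) for name, values in query.items()}
    return json.dumps(model(**args))
```

A request such as `handle("http://localhost/calc?x=2&y=3")` returns a JSON string with `sum` and `product` keys, which the calling side can map back to named outputs like any other calculation type.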

In summary, I wanted the ability to use any engine, anywhere, to perform advanced calculations. I wanted to treat them all generically, and be able to run my own internal neural nets, as well as much larger off-the-shelf or new Tensorflow neural nets and graphs. In the end, all these calculations get mapped back to user interfaces where they can be viewed and/or to robot actuation. I am concentrating more on AI and apps for now, so I'm not using it for actuation...yet.

6/24/18 Update - New Face, More Context, Outlining, Data, Relevance

I have been busy making many improvements. This update is long...

New Local Panda Brain, Web Server, Queue, and Remote

For the last year or so, I was interacting with Ava mostly through a web page. Last week I hooked up her new "Panda" lower brain to her "Laptop-based and soon AWS-based" upper brain. The Panda brain runs a new animated face. It also runs a new web server API of its own so that remotes can relay commands to the bot or get information. These commands are stored in an MSMQ queue. I built a new Android-based remote control which I can carry around...I don't intend to touch the bot's face anymore.

I talk to the remote, it uses Google to convert the speech to text, and then relays the text to the Panda by calling a new API running on a web server on the Panda. This web server then stores the text to an MSMQ queue that can be read by the Windows program (also running on the Panda in a different process) which produces the face, difference engine, etc. This Windows program attempts to process the text (in a chain of responsibility pattern)...if it can't process it locally, it passes it to the higher brain (running on AWS or a laptop) where the real verbal horsepower happens. Under this model, movement, internal state changes, complex gesturing and other functions can be handled locally. The local brain can support a limited vocabulary and scripting in English to support movement and state changes. Things like "look north" or "what is your location" can be handled locally, while more complex verbal functions like "Compare helium and neon." are handled by the upper brain. The local brain can better handle movement and state changes because it is running and iterating continuously, animating the face, changing motivational and emotional states, servo joints, etc., as part of its new goal-seeking difference engine. Movements need to be smooth and for some things, velocity/acceleration need to be controlled.
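The chain of responsibility between the two brains can be sketched in a few lines of Python. The handler names, the tiny local vocabulary, and the reply strings are invented for illustration; the real local brain runs as a Windows program reading from the queue.

```python
def local_brain(text):
    """Handle a small fixed vocabulary; return None to pass the text along."""
    local_commands = {
        "look north": "turning head north",
        "what is your location": "reporting location",
    }
    return local_commands.get(text.lower().strip(" .?!"))


def upper_brain(text):
    """Stand-in for the remote call to the upper brain."""
    return "upper brain handles: " + text


def process(text, handlers=(local_brain, upper_brain)):
    """Try each handler in order and return the first reply that is not None."""
    for handler in handlers:
        reply = handler(text)
        if reply is not None:
            return reply
    return None
```

Here "Look north" never leaves the local brain, while "Compare helium and neon." falls through to the upper brain.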

For the more complex verbal stuff, the upper brain responds back to the Panda with text and context, and the Panda talks via a windows program. I have the Panda moving its mouth to match the words and syllables using "visemes". Anna and Ava never did this before. I should be able to start making videos again of verbal interactions with the face (on a tv).

I suppose all of this probably sounds complex, but there is logic to the madness borne out by experimentation. Some of these pieces are needed because of the limitations of various platforms. The Panda running Windows is good at doing some things, like iterating continuously, maintaining state, movement, a face, and communicating with Arduinos via I2C. The Panda can also listen continuously, but is not good at translating complex speech to text. The phone remote is good at complex speech to text (meaning entire sentences) and allows me to be at a distance from the bot. The upper brain is good at running more complex thoughts, data assimilation and organization, and responding without slowing down the local brain. The upper brain is transactional in that it is only called when necessary; it is not meant to iterate continuously. The upper brain has about 15 databases to manage. The upper brain would probably be too much for a Panda. When I get the new Panda Alpha however, I may combine both onto one Alpha. I like the low/high combo as it might give me the ability to share the low brain with others, and let the high brain run on AWS.

What's Missing?

For now, all the pieces mentioned are talking to each other. There are a few big problems remaining...I now have to get the Panda into the bot, and re-write the backbone communications that would allow the Panda to talk to the other onboard Arduinos through I2C, probably having to revisit all the Arduino modules I have. I will also need to put a new HDMI display in place of the Android phone. In order for this to not look funny, I will probably have to design a new wider head...more 3D printing. Sadly, this will take time.

Stimulus/Response Engine

I have upgraded and standardized her stimulus/response engine. I will sum it up like this...a bot has many agents, each agent can respond to many patterns. Each pattern can have 1 or more named arguments. Each agent can have multiple "outcomes" like success, invalid inputs, yes, no, failure, etc. Each of these outcomes for each agent can have multiple ways that the outcome can be expressed in language. These expressions usually have arguments and context associated with them like topics, paging, emotion, tone, movement, etc.
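Here is a small Python sketch of that structure: agents own patterns with named arguments, produce an outcome, and each outcome has several possible expressions. The agent definition, regular expressions, and wording are all hypothetical, and the context attached to expressions (topics, emotion, tone) is omitted.

```python
import random
import re

AGENTS = [
    {
        "name": "compare",
        # Each pattern captures the same named arguments, a and b.
        "patterns": [
            r"compare (?P<a>\w+) and (?P<b>\w+)",
            r"how do (?P<a>\w+) and (?P<b>\w+) differ",
        ],
        # The agent's work, reduced here to picking an outcome name.
        "run": lambda args: "success" if args["a"] != args["b"] else "invalid",
        # Several ways to express each outcome in language.
        "expressions": {
            "success": ["Comparing {a} and {b}.", "Here is how {a} and {b} differ."],
            "invalid": ["{a} and {b} are the same thing."],
        },
    },
]


def respond(text, agents=AGENTS, rng=random):
    """Return (outcome, sentence) from the first agent whose pattern matches."""
    cleaned = text.strip().rstrip(".?!")
    for agent in agents:
        for pattern in agent["patterns"]:
            match = re.fullmatch(pattern, cleaned, re.IGNORECASE)
            if match:
                args = match.groupdict()
                outcome = agent["run"](args)
                template = rng.choice(agent["expressions"][outcome])
                return outcome, template.format(**args)
    return None, None
```

Because the expression is chosen at random, the same stimulus can be answered with different sentence structures on different turns.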

More Context

In order to improve contextual understanding, I upgraded my storage of history to hold a context of name/value pairs that can be queried. For starters, the bot maintains information on what it believes the general and specific topic is at any point. For example, if you are talking about Boston, and you ask "What are the parks?" ...the bot needs to identify what the topic is/was most recently (Boston). If you say "What is the source?" or "Are you sure?", it needs to refer to the history to find the last statement with a context item of interest (Source, Confidence), and respond accordingly. Paging is implemented the same way. If the bot tells you a paragraph on the history of Boston (it will have many more from Wikipedia alone) ...if you say go on, continue, next, etc., it will maintain its position in the context so it can resume a narrative. Paging is useful in many contexts...like reading a book, Wikipedia, reading email, news, etc. My intention at this point is to also maintain emotional, mood, and tone context by aggregating emotional responses in the very recent and recent interaction history.
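A minimal Python sketch of a history with queryable name/value context, including paging. The `History` class and the context names (`topic`, `source`, `page`) are assumptions made for the example, not Ava's actual schema.

```python
class History:
    """Conversation history where each entry carries name/value context."""

    def __init__(self):
        self.entries = []

    def add(self, text, **context):
        self.entries.append({"text": text, "context": context})

    def latest(self, key):
        """Value of the most recent context item with this name, or None."""
        for entry in reversed(self.entries):
            if key in entry["context"]:
                return entry["context"][key]
        return None

    def next_page(self, paragraphs):
        """Resume a narrative from the position stored in the context."""
        last = self.latest("page")
        page = 0 if last is None else last + 1
        if page >= len(paragraphs):
            return None  # nothing left to say
        self.add(paragraphs[page], page=page)
        return paragraphs[page]
```

After an entry is added with `topic="Boston"`, a later "What are the parks?" can call `latest("topic")` to recover Boston even if other statements came in between, and "go on" maps to `next_page`.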

Web Data Extraction, Outlining, and Relevance

I have written a new routine to ingest, remove useless markup, outline and extract categorized text from web pages from which talking and narrative question answering can be done. For starters, I tried it on Wiki. As Wiki typically provides 4 levels of headings attached to its articles, the outlining process works very well. This is what supports the ability to answer questions that are not facts or comparisons, but more content related (delivered a paragraph at a time). If I ask about the mating of bats or the economic effects of taxes, I get a relevant narrative. At 5 million pages in Wiki, and let's say 100-200 paragraphs (that can be categorized) on all the topics anyone might actually be interested in, this gives a lot of material that can be used to answer questions or to interject in conversation. This new algo can do its thing quickly without the need to store any of the data locally. There is still more work to do here to improve the hit rate or relevance level if the term being asked about doesn't match a term in the outline (let's say a user asks about "schooling" for a person and the outline uses "education"). The results thus far are really good in my opinion. It will only get better as I apply synonyms/aliases to this query process. From a user perspective, her response rate went way up and the relevance of her speech in certain types of questions/discussion went up by at least an order of magnitude.
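The outlining step can be approximated with Python's standard HTML parser: track the current heading trail and file each paragraph under it. This is only a sketch under the assumption that headings arrive as `h1` to `h4` tags and text as `p` tags; the class and function names are mine, and real pages need far more cleanup.

```python
from html.parser import HTMLParser


class Outliner(HTMLParser):
    HEADINGS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4}

    def __init__(self):
        super().__init__()
        self.path = []      # current heading trail, e.g. ["Bats", "Mating"]
        self.sections = {}  # heading trail (tuple) -> list of paragraphs
        self.tag = None     # heading or paragraph tag being collected
        self.buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS or tag == "p":
            self.tag, self.buffer = tag, []

    def handle_data(self, data):
        if self.tag:
            self.buffer.append(data)  # inline markup is dropped, text is kept

    def handle_endtag(self, tag):
        if tag != self.tag:
            return
        text = " ".join("".join(self.buffer).split())
        if tag in self.HEADINGS:
            level = self.HEADINGS[tag]
            self.path = self.path[:level - 1] + [text]
        elif text:
            self.sections.setdefault(tuple(self.path), []).append(text)
        self.tag = None


def outline(html):
    parser = Outliner()
    parser.feed(html)
    return parser.sections


def lookup(sections, term):
    """Paragraphs filed under any heading that mentions the term."""
    term = term.lower()
    return [
        paragraph
        for trail, paragraphs in sections.items()
        for paragraph in paragraphs
        if any(term in heading.lower() for heading in trail)
    ]
```

A plain substring match in `lookup` is exactly where the "schooling" versus "education" miss comes from; a synonym or alias table would slot in at that comparison.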

6/11/18 Update - Ava is finding similarities and differences, forming paragraphs.

The crux of this update involves getting Ava to be able to compare and contrast any 2 objects (of any object type found anywhere on the web), talk about an object or objects intelligently and in paragraphs, answer questions about them, prioritize, summarize, recognize unusual circumstances, etc. Perceiving similarities and differences is fundamental to thinking.

I had to build the following skills to achieve these goals:

- For starters, I needed some data about many different things. I built a generic ability to ingest xml, json, and text from multiple sources and generically break the structure down and convert it to something that is stored in a relational database. Think of this as breaking down the results of any API call into uniquely identifiable elements. This could be data about anything...people, places, things, elements, animals, movies, etc. Because there are many APIs and some of these APIs have data on many different types of objects...this gives an ability to form a knowledge base around many types and populations of things. Many of these topics are academic at this point, which makes her very useful for educational purposes.

- Ava allows metadata to be added (through a website that she creates) to all the unique data elements that are found. This metadata provides names, aliases, comparisons, context, and other attributes for each unique element found. Aliases allow you to refer to these things in different ways. Comparisons give the AI hints as to the various ways a given element (like size, weight, etc.) can be compared. Context can be added so that the AI can recognize things like "If I see a 'full name' element, I know I am dealing with a person and should capitalize their name."

- Upon request, Ava can then build an array of thoughts about an object. When she encounters numerical values, she can compare that attribute of the object against her known population of similar objects...determining mean and standard deviation, and saying things like "The crime rate is above average." She will calculate these metrics internally herself. In addition, metrics can be added from external sources...like an average age for people.

- Ava can compare two objects and build an array of thoughts capturing the results of these comparisons. This can handle word comparisons, value comparisons, greater than, less than, etc. Additional comparisons can be added and applied to any attribute of any object. This allows her to determine the degree of difference of any 2 objects on a similar dimension.

- Ava can express information, similarities, or various types of differences in multiple ways with different sentence structures and level of detail. This allows her communication to be more human-like. In the future, this will also allow me to choose the way to express something (with more or less casualness or specificity) based on the context of the situation or the preferences of the person being talked to.

- From all this data, Ava can then form paragraphs by selectively filtering the thoughts that were assembled in the previous steps. Filtering would be used to get everything, differences only, etc. This allows her to build coherent (mostly) paragraphs based on the topic or question at hand. While the robot might have 100 relevant thoughts/answers around a topic or question, she will prioritize them and provide a few at a time, allowing a user to hear more only if they express interest in various ways like "tell me more".

- Ava can also answer specific data point questions about whatever has been ingested like...Who/what/where is the ______ of/in _______? Expressed in many ways. Like what is the crime rate in Florida? Who are the spouses of Kim Kardashian? The aliasing previously discussed allows many terms to be used.
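Two of the skills above, comparing a value against a population and comparing two objects on one attribute, can be sketched in Python as follows. The function names, the "well above/below" tier at one standard deviation, and the sentence templates are my assumptions; they only imitate the style of the outputs shown further down.

```python
from statistics import mean, pstdev


def describe(name, label, value, population):
    """Place one value relative to the mean and standard deviation of its peers."""
    avg, sd = mean(population), pstdev(population)
    if value > avg + sd:
        level = "well above average"
    elif value > avg:
        level = "above average"
    elif value < avg - sd:
        level = "well below average"
    elif value < avg:
        level = "below average"
    else:
        level = "average"
    return f"The {label} of {name} is {level}."


def compare(label, name_a, a, name_b, b):
    """Build one 'thought' comparing the same attribute of two objects."""
    if a == b:
        return f"{name_a} and {name_b} have the same {label}."
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        relation = "less than" if a < b else "greater than"
        return f"The {label} of {name_a} is {relation} that of {name_b}."
    return f"{name_a} and {name_b} have a different {label}, {a} and {b}."
```

Running `compare` over every shared attribute gives the array of thoughts, which can then be filtered (differences only, for instance) and delivered a few at a time.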

Here is an example of getting her response to "Tell me about Brad Pitt"

American actor regarded as one of the world's most attractive men, receiving significant media attention as such. Received attention after playing a cowboy hitchhiker in the 1991 movie Thelma & Louise. Owner of a media production company, Plan B Entertainment. Began his acting career with guest appearances on television shows. Involved with humanitarian issues and charities.

Nothing fancy here, just general info so far, which she decided was suitable to move to the top when talking about people. At this point, I said "go on", and she responded...

The parents are Jane Etta Pitt, and William Alvin Pitt. The spouses are Jennifer Aniston, Angelina Jolie, and Angelina Jolie. The children are Shiloh Nouvel Jolie-Pitt, Pax Thien Jolie-Pitt, Zahara Marley Jolie-Pitt, Vivienne Marcheline Jolie-Pitt, Knox Leon Jolie-Pitt, and Maddox Chivan Jolie-Pitt. The height of Brad Pitt is 5' 11".

Here is an excerpt where I asked her to compare two things...Alabama and Florida...

Alabama and Florida have a different capital, Montgomery and Tallahassee. The population of Alabama is less than that of Florida. The population growth rate of Alabama is +0.45% per year, while the population growth rate of Florida is +1.35% per year. The population density of Alabama is less than that of Florida. The annual deaths is lower for Alabama.

Upon asking her to "Tell me more", she responded...

The annual births of Alabama is less than the annual births of Florida. The land area of Alabama is less than that of Florida (50650 square miles vs 53620 square miles). The water area of Alabama is less than that of Florida. The farmland area of Alabama is less than the farmland area of Florida. At 492.1 ft, the average elevation of Alabama is greater than that of Florida at 98.43 ft. The number of households of Alabama is less than that of Florida (1.831 million vs 7.14 million). They have a different number of businesses, 382350 and 2.01 million. The state bird is different, yellowhammer and mockingbird. They have a different state flower, camellia and orange blossom.

There are still grammar and punctuation issues to work out but she is getting many things right and improving daily. She will answer differently each time using different sentence structures, figure out whether to capitalize, use "a" or "an", and many other things. Also, she can do this for anything that she can acquire data on. Here is an example from the question "How do nitrogen and carbon differ?"

The atomic symbol is different, N and C. They have a different atomic number, 7 and 6. Nitrogen and carbon have a different atomic mass, 14.007 u and 12.011 u. At 3, the valence of nitrogen is less than that of carbon at 4.

This last one shows me starting to work on comparisons using means (new as of this morning) ...it will only get better!

At 173.5 people per square mile, the population density of Georgia is below average.

9/18/17 Update - Ava will be Coming Back to Life

This project gathered dust for awhile but is going to slowly come back to life. The following are the highlights I am working on and planning right now.

- Remove all dependence on USB...tired of having issues with it.

- Swap out Arduino Uno in head with Arduino Uno Wifi. This is going to give me an ability for the server to call the robot, rather than the robot continually polling the server...did a proof of concept on this, looks good so far.

- Use Bluetooth to communicate from body mega adk to phone in face...seems to work if I don't push too much data.

- I am going to stop pushing all the sensor data (massive because of Pixy, Thermal, Mics, Sonars) to the server. I am going to have the server call the robot instead (via Uno Wifi), only when it is needed (when someone wants to look at it). This is another way I am trying to lessen my need of USB/BLE, and try to get something that I can work with moving forward.

- Move gesturing code from phone to microcontroller to remove all the necessary chatter to move all the servos, to reduce bandwidth over bluetooth...this will put more work on the Mega and be harder to maintain.

- I am redesigning all the databases from the ground up...moving away from a small number of generic tables to a large number of specific tables with a lot more referential integrity. This is now feasible because Ava can create the UIs for me to maintain everything, something she couldn't do until this year. This should allow me to scale better.

- Install the brain on Amazon Web Services, so it will always be available...this is going to cost a lot, that's life sometimes. My AWS account runs a WebFarm of 1-4 servers and 1 DB Server, so this is going to force me to redesign how I cache things and refresh them, as there is no guarantee that each server will have the same version of something cached unless I redesign it.

- My current codebase has an ability to run recurring background processes. I hope to utilize these for some kind of dream state learning, cache cleaning, syncing?, archiving, etc.

- I am generalizing the metadata defining the hardware interface so that other people's robots can use the brain API, even if their bot has completely different servos/actuators than mine.

- Add more context awareness to small talk engine so the bot can act differently in situations that are more formal, less formal, etc., depending on the situation and language, and so that others can create robot specific smalltalk.

- While the old version had some abilities to do multi-language, the new one is getting it more designed in to every aspect. I expect to be able to better handle things like the fact that grammar is different when you convert from Chinese to English, for example, something the translation sites do not handle.

- I would like to document the brain in pseudo-code as simple as possible, so a Raspberry Pi version could be written that closely matches the core features and could run locally on a bot, with data downloaded from server. My Pi skills are lacking though, so this will take time.

- When all this is done, sadly she will be less capable than before, but I believe she will be in a better position for the future. Sometimes you have to take a step back to move forward.

2/20/17 Update - Memory Upgrades, Cyber Security Features

I have been making significant upgrades to the server part of her brain, most notably her memory system. Whereas in the past she used a generic database to store over a hundred different types of memories, she can now incorporate other databases of any structure, and form her own memories about the structure of those databases, and use them as is. Because of this, I now plan on creating separate fully normalized databases of her existing memory sets for various portions of her brain...like her verbal features. She can automatically talk to any new database and figure out how to search, retrieve, update, delete data and relationships by interrogating the database's system tables. She can also learn structural relationships in the data that do not explicitly exist with the help of a human trainer.
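Interrogating system tables looks roughly like this. The sketch uses Python's built-in SQLite as a stand-in (Ava's databases are not SQLite, and other engines expose the same information through their own catalog views); the function name and the returned dictionary shape are invented.

```python
import sqlite3


def describe_schema(conn):
    """Discover tables, columns, and foreign-key links from SQLite's catalog."""
    schema = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    for (table,) in tables:
        # table_info rows: (cid, name, type, notnull, default, pk)
        columns = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
        # foreign_key_list rows: (id, seq, referenced table, from column, to column, ...)
        links = [
            (row[3], row[2], row[4])
            for row in conn.execute(f'PRAGMA foreign_key_list("{table}")')
        ]
        schema[table] = {"columns": columns, "links": links}
    return schema
```

The `links` entries are the explicit relationships; relationships that exist only by convention are the ones a human trainer would still have to point out.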

Automatic Web User Interfaces - Ava is now the world's fastest and cheapest web developer!

She also has the ability to generate intelligent user interfaces on the fly for any database she knows about, interfaces which while very good, can also "learn" and improve as people use them and give further input...training. This has many commercial applications as this basically drives the cost of producing web database applications to near zero...no coding...Ava just does it. Whether it is search, forms, parent child, whatever, she creates whatever is needed at runtime for whatever data structures are being looked at. I plan to add verbal features on top of this new capability so she can learn to talk and answer questions about newly incorporated databases with little training.

Shodan API Interface - Access to the Internet of Things

She now has the ability to find millions of other computers, devices, (potentially robots one day) on the internet by using the Shodan search engine via an API. Shodan crawls the internet 24/7 and catalogs open ports and much more detail that can then be queried.

Google Maps Javascript API Interface

She now can map various other memories (like computers found on Shodan) on nice map displays that can be sliced and diced for various data purposes.

GeoNames Data

She now has access to hundreds of thousands of locations like countries, administrative divisions, cities, etc. that can be used for mapping purposes.

National Vulnerability Database

She now has access to an extensive database of all the world's known security exploits and related metadata. This, combined with her Shodan access and mapping, makes for a very powerful Cyber Security robot.

Android USB Recognition Issues

I have been having a lot of trouble getting the Android phone to recognize the Arduino Mega ADK consistently and start up. Because of this, I haven't had the full Ava running very much to make more videos...been spending most of my time working on server "brain" features anyway.

7/25/16 Update - Physical Complete Milestone

I am proud to announce, Ava is now "physically complete", so I can now focus on software and making her smarter, which is my real passion.

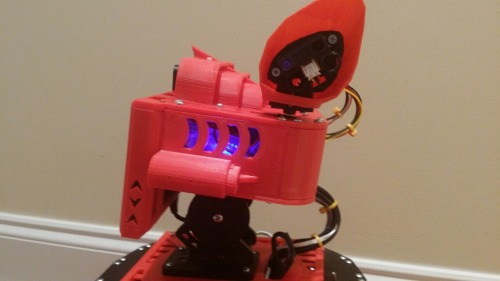

After two months of having the head torn apart, I was so happy to close up the head and take some new pictures (below). I ended up using I2C to communicate from body to arduino Uno in brain. The 3 Arduinos, phone, computer, SSC-32U, Motor Controller, Pixy Cam, Thermal Cam, and many other sensors are all working together! The Nano puts off blue light (from the side of her head), and the Uno gives off red light (from the top of her head glowing through the thin plastic), so in the dark she looks really cool! Note the new IR Tracker camera on her right cheek.

If anyone is doing Android Speech-To-Text and not able to get it working on later versions, it's a Google problem, but I have a workaround so contact me if you want.

7/7/16 Update

I have been on a quest to finish hooking up all the head sensors. It's been a huge challenge to get various sensors to work together without interfering and crashing one of the processors.

1. Installed Arduino Uno R3 in head to handle all the head sensors except the thermal camera and phone, communicates with Mega ADK through SoftwareSerial. I am trying to get a 2nd SoftSerial connection to work with a Nano, without success.

1.1 Installed and Tested Pixycam to Uno and wrote software interface to pipe data back to Mega through Uno for color blobs (color, x, y, width, height). I will coordinate this data with compass and neck servo to build a radar map of color blobs found around the bot.

1.2 Installed and Tested 2 Microphones in ears and wrote software interface to pipe data back to Mega through Uno for volume level. Will need to do a lot more signal processing to recognize patterns or timing difference to determine approximate direction of sound, or trigger voice listening on a particular event like a loud sound. To install mics, I finally had to learn to solder!

1.3 Installed and tested 2 Sharp IR distance sensors in ears and wrote software interface to pipe data back to Mega through Uno. Will need to correlate this data with compass, neck servo, and ear servos (timing will be complicated), to build a radar type map of all the distances. The ears move quite quickly and will be taking in a lot of data very quickly.

1.4 Tested new IR tracking camera and wrote interface. This sensor outputs x,y coordinates of up to 4 IR sources in its view. I eventually would like to build a localization system of IR emitters (at power outlets), so that the bot can locate itself precisely in a room. I did this before with visual objects (OCR) so I feel like the technique will work. There is no room for the IR Tracking camera in the head so I hope to mount it under one of the lasers in the cheeks.

2. Installed Arduino Nano in head (very tight on space now). This Nano outputs data via SoftwareSerial.

2.1 Installed and Tested Thermal Camera (16x4 Thermal array sensor) to the Nano. I had to dedicate the Nano to the thermal sensor as I lost more than a week trying to combine it with anything else on the Uno. Out of simplicity I was tempted to give up on having this sensor and the Nano to support it, but I really need this sensor for tracking people/pets.

Still have to work out link with Nano and rest of bot...out of UARTs, SoftSerial, and I2C is not an option.

3. Designed new custom red side skirts to replace the stock Lynxmotion Tri-Track ones, also new parts for back of head (to allow access to Uno ports) and other mods to head to support all the sensors.
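The radar-type map mentioned for the ear distance sensors could start out as simple as the Python sketch below: add the servo angles to the compass heading, bucket the result into sectors, and keep the nearest reading per sector. The 12-sector split, the function names, and the "keep the nearest" policy are my assumptions, and the hard part noted above (timing between fast-moving ears and sensor reads) is ignored here.

```python
def sector_for(compass_deg, neck_deg, ear_deg=0.0, sectors=12):
    """Map body heading plus servo offsets to a sector index around the bot."""
    heading = (compass_deg + neck_deg + ear_deg) % 360
    return int(heading // (360 / sectors))


def update_map(radar, compass_deg, neck_deg, distance_cm, ear_deg=0.0):
    """Record a distance reading, keeping the nearest obstacle per sector."""
    index = sector_for(compass_deg, neck_deg, ear_deg, sectors=len(radar))
    if radar[index] is None or distance_cm < radar[index]:
        radar[index] = distance_cm
    return radar
```

Starting from `radar = [None] * 12`, each reading piped back through the Uno would call `update_map` once. The same bucketing would work for Pixy color blobs by storing blob data instead of a distance.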

5/11/16 Update - This update is all about Ava's new brain transplant...from a very large PC to a very small LattePanda SBC. I have transplanted all her brain software to the Panda. In the photo below, I have keyboard, monitor, mouse, network, and power cables hooked up. If I install it inside the bot (it now runs outside via wifi/http), I will only need power and maybe a few wires to the onboard Arduino headers.

I got the Panda with 4GB RAM and 64GB eMMC. It runs Windows 10. I also installed Visual Studio 2015, SQL Server Express 2014, and IIS (Web Server). It also has an onboard Arduino which has all the pins to allow it to talk directly to the other boards on the bot. There are APIs to talk to the Arduino from .NET, but I haven't used those yet. Right now I have it running a website and communicating through http to the Android phone as it did with the server previously. I could switch to Bluetooth or use a TxRx wired connection into the Mega in the body. Still much to figure out about the best way to integrate the Panda into the rest of the hardware on the bot. Right now, I am happy to report that the Panda can run my brain software...although I haven't tested SharpNLP yet. While it is slower than my desktop PC, it works and stays well within the memory and CPU constraints of the board. This is something considering I am running 15 .NET projects and caching tens of thousands of memories. My next challenge will be to get the Panda into the bot and power it with 2Amps+ through a mini USB jack.

The old brain software was designed to support multiple robots at the same time, and thus complicated by having to maintain separate state for each bot/session. With a little redesign, I could make it run dedicated to a single bot and simplify/optimize things in the process. The onboard arduino also raises many new possibilities for having the Panda (the higher level brain) talk directly to other arduinos, motor controllers, sensors, etc. on the robot directly. Previously, the brain could only talk to the phone, which passed messages through USB to the Mega and got delegated from there. Another possibility is to get rid of the phone altogether and use an HDMI touch display hooked up to the Panda.

11 new head and ear sensors...7 installed, 4 to go. This took quite some cramming...

The little button sticking through is the button for training the Pixy cam.

12/27/15 update in words...

- Designed and printed new pieces for the top of the head, sides of the head, front and back compartments, grills, bumpers, ear sensor holders, head sensor holders, laser holders. Still need new rear head panel to allow access to head arduino USB port.

- Installed Pixy Cam, Thermal Camera, and Long Range Sharp IR distance sensor in head, still need to install IR receiver and transmitter, and wire up all head sensors to arduino.

- Installed Microphone and Sharp IR distance sensors in ears. Still need to install IR receiver in each ear and wire up the ears.

- Got autonomous ear movements working

- Got autonomous arm gestures working while talking.

- Got autonomous head movements while talking working...until she broke her neck...waiting for some new servo horns.

- Got the arms to move down out of the way of all the sonars while driving forward or reverse and return when not driving.

- Got her weather features working so she can forecast and talk about the weather.

- Got her to put famous movie lines and/or religious verses into her speech when turned on...which is weird when she quotes a verse one second and then cracks an off-color joke about religion the next! Sadly, her news features are not working...looks like Feedzilla is no longer online. I need to find a new free news API. Recommendations?

- I am working on a new tracking agent that keeps track of all known objects: heading, elevation, size, type, color, etc. I plan on using this to drive autonomous head movements soon. The idea is that she will tend to look at people when they show up, when they talk, or when she is talking, but after a short period of time, her focus will wander to other objects in the room.
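One way to picture the "look at people, then let focus wander" idea from the tracking agent is a simple interest score per object. The sketch below is only an illustration in Python, not Ava's actual code: the `TrackedObject` fields are a subset of those listed above, and the scoring formula and the constants `PERSON_BONUS`, `EVENT_HALF_LIFE_S`, and `BOREDOM_PER_S` are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    name: str
    kind: str             # e.g. "person", "lamp"
    heading_deg: float    # pan angle toward the object
    elevation_deg: float  # tilt angle toward the object
    last_event_s: float   # time the object last appeared or spoke


# Hypothetical tuning values, chosen only for this example.
PERSON_BONUS = 2.0       # people are more interesting than things
EVENT_HALF_LIFE_S = 5.0  # interest from a recent event halves every 5 s
BOREDOM_PER_S = 0.2      # penalty per second spent staring at one object


def interest(obj, now_s, focus_name, focus_since_s):
    """Score an object: recent events attract, long stares repel."""
    recency = 0.5 ** ((now_s - obj.last_event_s) / EVENT_HALF_LIFE_S)
    weight = PERSON_BONUS if obj.kind == "person" else 0.5
    score = 1.0 + recency * weight
    if obj.name == focus_name:
        # Boredom grows with time on the current focus, so attention wanders.
        score -= BOREDOM_PER_S * (now_s - focus_since_s)
    return score


def choose_focus(objects, now_s, focus_name=None, focus_since_s=0.0):
    """Return the object the head should turn toward right now."""
    return max(objects, key=lambda o: interest(o, now_s, focus_name, focus_since_s))
```

With a person who just appeared and a lamp that has been there a while, `choose_focus` picks the person at first. Once the person has held focus for a while with no new event, the boredom penalty lets the lamp win. The chosen object's `heading_deg` and `elevation_deg` would then be the pan/tilt targets.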

As soon as her neck is repaired, I plan on making some videos.

12/3/15 Update

- Got the Mega talking to the Servo Controller through serial interface.

- Got the Mega talking to the Motor Controller through the Sabertooth's Simplified Serial interface.

- Got all the servos moving and calibrated...mostly.

- Installed the compass, had to rearrange the insides to get the accuracy up, moved power distribution and switches from front to back (bummer). Thank goodness I had 3D printed interchangeable parts I could swap front/back, so this was easy.

- Built all the wiring harnesses for the 12 sonars and installed them. Tested the full sonar array for the first time and everything looks good! I should be up and driving with my new 12-sector force field algorithm very soon! Between the servos and sonars, the amount of wiring is getting up there. Thank goodness for zip ties, labels, and Pololu build-your-own cables.

- Built a mechanism for defining and storing "poses" on the server and a way to download them and execute them on the bot on demand. Started off by creating a few dozen poses for various body positions or positions of particular body parts. Each pose can reference 1, a few, or all the servos at the same time, with a speed for each. I built poses for things like "Ears-Front-Quickly" or "Head-Nod-Down" or "Point-Left", which moves all servos in a coordinated action. Things were erratic at first until I worked out my coordinate system and calibrations correctly for each servo.

- Built a mechanism for defining narrative "missions" on the server. A narrative "mission" is a series of verbal commands to be performed simultaneously, in sequence, or a combination of both. Among many other uses, this can be used to animate the robot from one "pose" or position to another, while talking or doing other things like playing music. My favorite part is, I can write a mission as a paragraph like this example for doing a little dance...pose Default. say lets dance. wait 3000. pose Point-Right. say take it to the right. wait 4000. pose Point-Left. say now take it to the left. wait 3000. pose Default. say now stretch it out. wait 3000. pose Arms-Up. say take it up high. wait 3000. pose Drive. say now set it down low. wait 3000. say great job. pose Default. mute be happy. say great job. wait 2000. say that was fun.

- Using the narrative missions, I'm defining animations for lots of behaviors like nodding head yes, shaking head no, ear movements, etc. I intend to start tying the ears and head movements to the sonar array and emotions, and get her moving her arms and gesturing when she talks, which is a lot now.
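The poses and narrative missions described above can be pictured with a small interpreter. This is a minimal Python sketch and not the actual server code: the pose table contents (servo names, angles, speeds), the `Step` class, and the functions `parse_mission` and `run_mission` are all hypothetical. It handles only sequential execution and the `pose`, `say`, and `wait` verbs, and it assumes periods appear only as command separators.

```python
from dataclasses import dataclass

# Hypothetical pose table: pose name -> {servo name: (angle in degrees, speed)}.
# A pose may reference one, a few, or all servos, each with its own speed.
POSES = {
    "Default": {"head_pan": (90, 20), "left_arm": (10, 30), "right_arm": (10, 30)},
    "Point-Left": {"head_pan": (120, 40), "left_arm": (90, 50)},
    "Point-Right": {"head_pan": (60, 40), "right_arm": (90, 50)},
}


@dataclass
class Step:
    verb: str
    arg: str


def parse_mission(text):
    """Split a mission paragraph on periods into (verb, argument) steps."""
    steps = []
    for sentence in text.split("."):
        words = sentence.strip().split(None, 1)
        if not words:
            continue  # skip the empty piece after the final period
        arg = words[1] if len(words) > 1 else ""
        steps.append(Step(words[0].lower(), arg))
    return steps


def run_mission(text, poses=POSES):
    """Expand a mission into a flat list of actions without touching hardware."""
    actions = []
    for step in parse_mission(text):
        if step.verb == "pose":
            # Raises KeyError if the pose name is not in the table.
            for servo, (angle, speed) in poses[step.arg].items():
                actions.append(("servo", servo, angle, speed))
        elif step.verb == "say":
            actions.append(("say", step.arg))
        elif step.verb == "wait":
            actions.append(("wait_ms", int(step.arg)))
        else:
            # Verbs this sketch does not model (e.g. "mute") pass through.
            actions.append(("unknown", step.verb, step.arg))
    return actions
```

For example, `run_mission("pose Point-Left. say now take it to the left. wait 3000.")` yields two servo actions, one `say` action, and one 3000 ms wait. A real implementation would also need a way to mark which commands run at the same time, which this sketch leaves out.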

11/13/15 Update

- Added 1st version of 3D Printed Head. Note, these are the aesthetic ears, not the camera ears, which I am still designing. Laser pods will be added on each side, and a sensor pod will be added on top to hold the thermal camera and IR emitter and detector among other things.

- Installed new Sony 8-Core Phone for Face and on board brain.

- Got first version of new android app written and exchanging messages with Arduino Mega ADK via USB. It was built with Android Studio and is updated to use OpenCV 3.0 instead of 2.4.5.

- Got new version of voice remote app working. The remote app runs on another phone or tablet and is used to communicate with Ava or control her so I don't have to touch her face.

- Got face part of app to automatically scale to smaller or larger devices. Also made app configurable to tie into any brain on my Lucy shared brain API.

- Made first conversation, listening, emotional, eye dilation, and color tracking tests. Talked with Ava on small talk and a few other topics. She has reached the "First Talk" milestone! Also tested question answering and Wolfram Alpha integration. Had to turn off curiosity, motivation, and weather features for now.

- Some new pics...

10/29/15 Update

- Added new Ava Logo in Red...I think I need to make it bigger!

- Added new Sonar Panels in Black and Red with Sonars Installed

- Added New Compartment and Cover in Lower Front to gain space for power supplies, hold driving lights, and reduce boxy shape.

- I chose new Sony 8-Core Phone for Face with Side Power Jack instead of 4-Core Moto G with end jack. This should let me use USB instead of Bluetooth for Arduino to Android interface, as jack is in a better location not to block lasers.

- Purchased 2 new Pixy CMUCam5 Sensors for Ears and am in process of testing them out to see what they can do. The ears will have to be made larger to fit them inside.

10/19/15 Update

Added the arm and the catlike ears over the weekend. Still working on designing the head...it will be red and not square! Cramming all the sensors in will be the challenge. Planning on putting a Pixy CMUcam5 in each ear.

Say hello to my little friend. Ava will be my second robot. Ava will be a sister to my other robot, Anna, with some notable improvements. Almost all of Ava's body is 3D printed, about 50 parts and counting. Ava will have 2 arms with 4-5 DOF each, and 2 articulated ears (like a cat's) with 2 DOF each. In addition, the entire head will pan and tilt, so that the robot can better interact with people and also localize by identifying markers on the ceiling. Thus far, I have concentrated on the 3D design and printing of the body. I have already written most of the software for Ava, as this robot is reusing most of the code base from Anna and the shared internet brain I call Project Lucy. This means she will talk and have a personality from day one; she just won't know how to use her arms and articulated ears for a bit. I plan to utilize her for entertainment and to voice control my TV and household lights. Think of her by my side, hanging out, watching TV, answering questions, telling jokes, and changing channels for me. I have most of the software for this written already, so this is not much of a stretch; it's really just a start.

I'll post more as she comes together.

Makes Conversation, Answers Questions, Explores

- Actuators / output devices: 2 motors, 12 Servos, 1 speaker

- Control method: Voice, radio control, internet, autonomous

- CPU: LattePanda SBC

- Operating system: Windows 10, Arduino, Android

- Power source: 4S 14.8V 4000mAh LiPo Battery with 4 switching regulators for lower voltages

- Programming language: Java, C#, Arduino C

- Sensors / input devices: Video Cam, Thermal Cam, microphone, Light, GPS, compass, 2 IR Distance, 12 SRF04 Sonars

- Target environment: indoor

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/ava