Since October 2007 I have been developing a new object recognition algorithm, “Associative Video Memory” (AVM).

The AVM algorithm is based on multilevel decomposition of recognition matrices. It is robust to camera noise, scales well, and is simple and quick to train.

Now I want to present my experiments with robot navigation based on visual landmark beacons: “Follow me” and “Walking by gates”.

Walking from p2 to p1 and back

I implemented both algorithms in the Navigator plugin for use within the RoboRealm software.

So you can now try out my experiments using AVM Navigator.

The Navigator module has two basic algorithms:

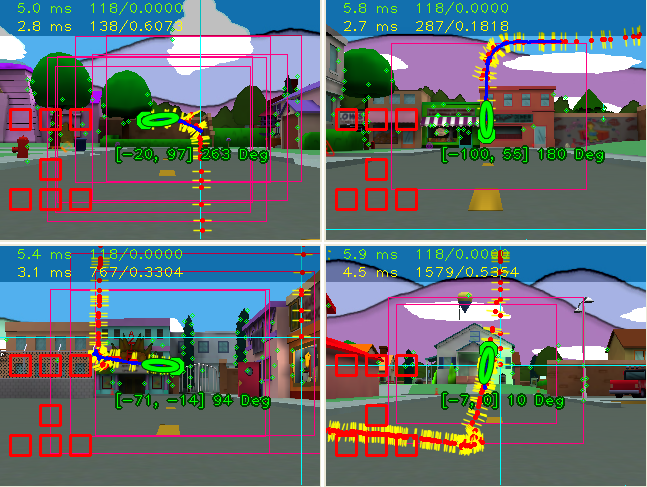

-= Follow me =-

The navigation algorithm tries to align the turret and the body of the robot with the center of the first recognized object in the tracking list. If the object is far away, the robot moves closer; if it is too close, the robot backs away.
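To illustrate the idea, here is a minimal Python sketch of one “Follow me” control step. It is not the actual AVM Navigator code: the function name, the thresholds, and the use of the object's apparent width as a stand-in for distance are all my assumptions for the example.

```python
def follow_me_step(obj_center_x, obj_width, frame_width,
                   far_ratio=0.2, near_ratio=0.5, dead_zone=0.05):
    """Return (turn, drive) commands in [-1, 1] for one video frame.

    Hypothetical inputs: obj_center_x and obj_width are the horizontal
    center and apparent width (in pixels) of the first tracked object.
    The thresholds are illustrative values, not AVM Navigator settings.
    """
    # Offset of the object from the image center, normalized to [-1, 1].
    half = frame_width / 2
    offset = (obj_center_x - half) / half
    # Ignore tiny offsets so the robot does not oscillate around the center.
    turn = 0.0 if abs(offset) < dead_zone else max(-1.0, min(1.0, offset))

    # Apparent size relative to the frame: small means far, large means close.
    ratio = obj_width / frame_width
    if ratio < far_ratio:
        drive = 1.0    # object is far: come nearer
    elif ratio > near_ratio:
        drive = -1.0   # object is too close: back away
    else:
        drive = 0.0    # comfortable distance: hold position
    return turn, drive
```

For example, a small object in the center of a 640-pixel frame gives "drive forward, no turn", while a large object to the right of center gives "turn right and back away".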

-= Walking by gates =-

The gate data contains weights for the seven routes that indicate the importance of this gate for each route. An indicator, “horizon”, has been added at the bottom of the screen; it shows the direction in which the robot’s motion should be adjusted to continue along the route. A gate’s field is painted blue if the gate does not participate in the current route (weight 0), and warmer colors (ending in yellow) show the gradation of the gate’s “importance” in the current route.

- Route training procedure

To train a route, select the route to train (button “Walking by way”) in “Nova gate” mode, then drive the robot manually along the route (the gates will be placed automatically). At the end of the route, click the “Set checkpoint” button; the robot will then turn several times on the spot and mark its current location as a checkpoint.

So if the robot is walking by gates and suddenly sees an object that it can recognize, it will switch to navigating by the “Follow me” algorithm.

If the robot can’t recognize anything (gate or object), it will turn around on the spot to search (it may twitch randomly from time to time).
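The priority between the behaviors described above can be summarized in a few lines of Python. This is only a sketch of the decision order as I described it; the function and the behavior names are hypothetical and do not come from the plugin.

```python
def choose_behavior(object_recognized, gate_recognized):
    """Pick the navigation behavior for the current frame.

    Illustrative only: the returned labels are made-up names for the
    three behaviors described in the text.
    """
    if object_recognized:
        # A recognized object takes over, even while walking by gates.
        return "follow_me"
    if gate_recognized:
        return "walk_by_gates"
    # Nothing recognized: turn on the spot and look around.
    return "search"
```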

AVM Navigator v0.7 has now been released, and you can download it from the RoboRealm website.

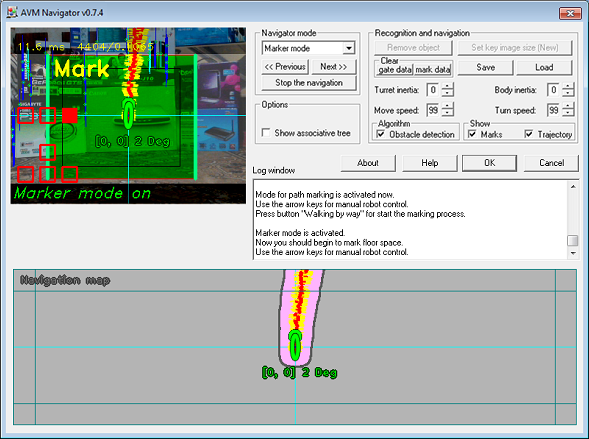

Two modes have been added in the new version: “Marker mode” and “Navigate by map”.

Marker mode

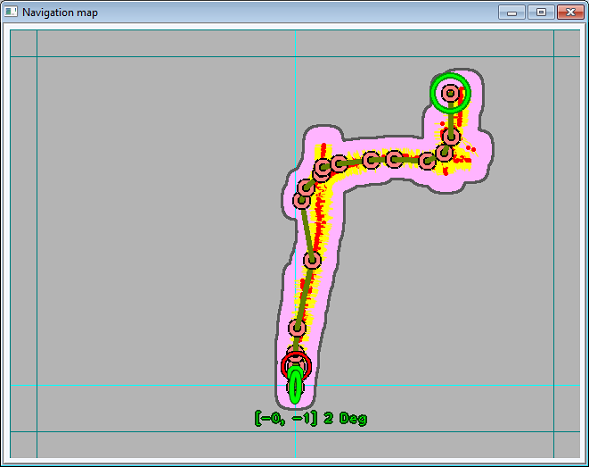

Marker mode builds a navigation map automatically by marking the space. You just need to lead the robot manually along some path and repeat it several times to get a well-detailed map.

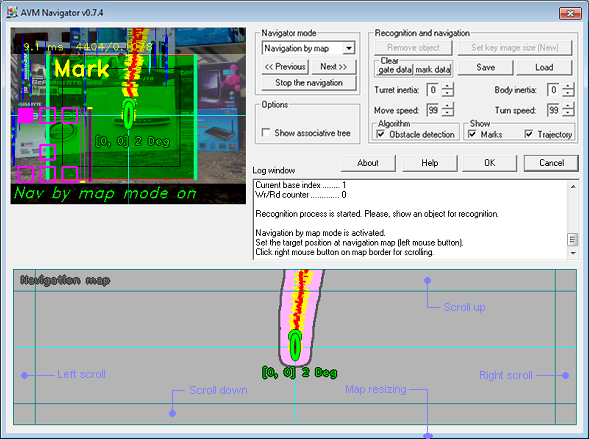

Navigation by map

In this mode you point to the target position on the navigation map; the robot then plans a path (maze solving) from its current location to the target position (big green circle) and automatically begins walking to the target.

New module variables have been added for external control of “Navigate by map” mode:
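To give an idea of what “maze solving” on a map can look like, here is a small Python sketch using breadth-first search over grid cells. This is a generic textbook technique, not necessarily what AVM Navigator does internally; the grid representation (a set of passable cells) and the function name are assumptions for the example.

```python
from collections import deque

def plan_path(free_cells, start, goal):
    """Breadth-first search over 4-connected grid cells.

    free_cells: set of (x, y) cells assumed to be marked as passable.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    if start not in free_cells or goal not in free_cells:
        return None
    came_from = {start: None}   # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if nxt in free_cells and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None
```

Breadth-first search returns a path with the fewest cells, which fits the idea that only places the robot has already visited in Marker mode are treated as passable.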

NV_LOCATION_X - current location X coordinate;

NV_LOCATION_Y - current location Y coordinate;

NV_LOCATION_ANGLE - horizontal angle of robot in current location (in radians);

Target position on the navigation map:

NV_IN_TRG_POS_X - target position X coordinate;

NV_IN_TRG_POS_Y - target position Y coordinate;

NV_IN_SUBMIT_POS - submits the target position (the value should change from 0 to 1 to trigger the action).
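As a sketch of how an external program might use these variables, the Python function below writes the target coordinates and then produces the 0 -> 1 transition on NV_IN_SUBMIT_POS. The `set_variable` argument is a hypothetical callback: it stands for whatever function your RoboRealm client uses to write a module variable, and it is not a real RoboRealm API name.

```python
def submit_target(set_variable, x, y):
    """Send a target position to "Navigate by map" mode.

    set_variable: hypothetical callable (name, value) supplied by the
    caller; it should write one RoboRealm variable.
    """
    set_variable("NV_IN_TRG_POS_X", x)
    set_variable("NV_IN_TRG_POS_Y", y)
    # The action fires on a 0 -> 1 change, so reset the flag first.
    set_variable("NV_IN_SUBMIT_POS", 0)
    set_variable("NV_IN_SUBMIT_POS", 1)


# Usage with a stand-in setter that just records the writes.
written = []
submit_target(lambda name, value: written.append((name, value)), 10, 20)
```

After submitting, the program can poll NV_LOCATION_X and NV_LOCATION_Y to follow the robot's progress toward the target.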

Examples