Intelligent Machines?

Can a machine think? It's certainly not a new question. It has been debated endlessly since the electronic computer, the "electronic brain," was invented some seventy years ago. I'm going to present an argument, based on elementary science and accepted research, that says we can build thinking machines, but not just yet. Then I'm going to ask, "What's stopping us?"

Much of this writing is based on, or at least inspired by, the work of James Albus and his book Brains, Behavior, and Robotics.

What does it mean to "think"? Is it something magical? Is there some law or laws of physics yet to be discovered that make us think? Is there a soul? If you think there is something magical or unknown, outside the known laws of physics, then it is unlikely you will accept my argument. But I encourage you to read on anyway. You still may get something from it. But I will discuss the idea with the understanding that we know enough of the fundamentals to understand the brain, and therefore thought, even if we don't know all the details yet.

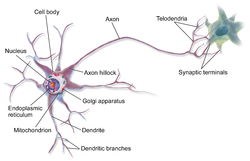

What is "thinking"? It's a process that goes on inside our brains, and we certainly don't fully understand it yet. But we do have a fair understanding of how the brain works at a low level. The main reason we don't understand more is complexity. Brains are made of neurons, which are connected together in a network. The connections are called synapses. The numbers of these neurons and synapses are staggering. Very simple creatures like sea slugs have over 10,000 neurons. A human has around 100 billion! That's a lot. But what's worse is that they aren't arranged in nice, neat arrangements like a crystal lattice. They are grouped in bunches that perform certain functions, but within each bunch the connections appear random. And they aren't the same from individual to individual. The connections also change as you learn. Then, each cluster is connected to other clusters in much the same way. And there are a lot of these connections. Each neuron typically has hundreds or thousands of connections, or synapses. It is estimated that the human brain has about 1.5 x 10^14, or 150 trillion (150,000,000,000,000), synapses. But we are getting ahead of ourselves.

There are many types of neurons that serve different purposes. But most of them, if not all, have been mapped pretty well in terms of how they work. For the most part they are more alike than different. The differences are mostly in what they are used for. In general, a neuron has an output "axon" that can send out a single electrochemical pulse to other neurons when it "fires." It has a single stored voltage threshold. It has some number of synapses, typically hundreds or thousands, that receive the pulses from other neurons. Each synapse has a multiplying weight; when the synapse receives a pulse, the pulse is multiplied by the synapse's stored weight and the resulting value is stored. The stored value drains away over time, like the charge on a capacitor, but it provides a buffer that allows incoming pulses to work together even if they don't arrive at the same time. All of these stored input values are added together inside the neuron, and if the total is higher than the neuron's stored threshold, the neuron fires, sending a pulse out on its axon into the synapses of other connected neurons.

We don't know all the details of neurons, but we do have a pretty good grasp of how they work. Good enough to simulate them with a computer. You are probably aware of projects that rely on Artificial Neural Networks. They have accomplished some amazing feats. They can recognize images and sounds, translate languages, and perform other activities usually thought of as intelligent. But I would argue that they are NOT intelligent. Not yet.

So we have established that we can simulate a neuron, the basic building block of the brain. And we have established that we can group these simulated neurons into useful arrangements and program them to do impressive things. We have decided to accept that thought is an activity of the brain, of its neurons and their connections, and that the brain's activity is based solely on known physics, without any unknown outside influence. I claimed that we can build intelligent machines, but then I claimed that even though we have built neural networks that can do amazing tasks, they aren't intelligent. Why not? What are we missing?

Neurons, and therefore brains, are pattern matching machines. When the inputs of a neuron match the pattern it is programmed for (by the weights and threshold), it fires its output, indicating a match. Groups of neurons match different, but related, patterns. Neurons in the eye and in the parts of the brain used for vision match visual patterns of objects. It isn't quite this simple, but consider a group of neurons that match an image of a snarling dog. Their output pulse triggers fear and a fight-or-flight response. Another example is when you smell an apple pie baking. The specific pattern of chemicals that contact and fire the neurons (nerves) in your nose matches the stored pattern of "apple pie" and triggers hunger as well as an association with childhood memories of holidays spent at your Grandmother's house. You might even start to drool! The point is that matching patterns causes behaviors. As the brain gets more complex, the patterns and behaviors become more complex.
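To make the pattern-matching idea concrete, here is a minimal sketch of a single threshold unit tuned to one pattern: a hypothetical 3x3 pixel "T". The helper names `make_detector` and `fires`, and the scheme of +1 weights where the pattern is lit and -1 weights elsewhere, are illustrative assumptions, not a claim about how real neurons are wired. With that scheme the weighted sum equals the number of lit pattern pixels minus the number of wrong pixels, so the threshold directly sets how close a match must be.

```python
def make_detector(pattern, tolerance=0):
    """Weights and threshold for a unit that fires when the 0/1 input differs
    from `pattern` in at most `tolerance` pixels (hypothetical helper)."""
    weights = [1 if p else -1 for p in pattern]   # reward expected pixels, penalize extras
    threshold = sum(pattern) - tolerance - 0.5    # perfect match scores sum(pattern)
    return weights, threshold


def fires(weights, threshold, inputs):
    """Weighted sum of the inputs compared against the threshold."""
    return sum(w * x for w, x in zip(weights, inputs)) > threshold


letter_t = [1, 1, 1,
            0, 1, 0,
            0, 1, 0]
letter_l = [1, 0, 0,
            1, 0, 0,
            1, 1, 1]

w, th = make_detector(letter_t)
print(fires(w, th, letter_t))  # True: the stored pattern matches
print(fires(w, th, letter_l))  # False: a different pattern
```

Raising `tolerance` lets the unit fire on a slightly damaged "T" as well, a crude version of recognizing a familiar shape in imperfect input.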

The human brain evolved from the brains of simpler animals. The lower levels of a human brain are very similar to the brains of much less intelligent creatures. As you move up the intelligence scale, the brains add layers. At the very lowest levels are the basic control systems needed for survival: heart beating, breathing, hunger and eating, reproduction, etc. Very simple animals, such as the sea slug mentioned above, have these behaviors.

If you move up a couple of levels, you find the ability to do more advanced things. Hunting and stalking prey. Recognizing landmarks and safe or dangerous places. Building nests or burrows. Moving up further you get to creatures that can use tools, then creatures that can build tools. And eventually "abstract thought." Nature builds from the bottom up, starting with the simple, then making it more and more capable and complex. And Nature has a very strong tendency to make things the simplest way possible that still gets the job done.

Notice that ALL of intelligence comes from interacting with the environment. Some things are obviously products of the environment: sensing touch, or smells, or light, or sound. Recognizing a wolf is an extension of sensing light or dark. Recognizing the sound of a predator's footsteps is an extension of hearing sound. But what about playing chess? Or contemplating what intelligence is? Playing chess is an extension of learning to fight and survive. It's still dealing with the environment, but now the environment has become virtual, mostly inside our minds. As the brain becomes more complex, it becomes capable of "modeling" the environment outside, much like a computer simulation. And that is a useful tool for survival; we can "think about" different scenarios, playing "what if" games before exposing ourselves to possibly dangerous situations. And what about something seemingly totally abstract, like our contemplations of "what is intelligence?" If a caveman saw a rifle, he probably would have no idea what it was or how useful it could be to him. But once he understands it, he can make very good use of it for survival. Our brains and our thoughts are our most powerful tools, and understanding them helps us use them effectively. So it is a natural extension of survival, at the highest levels, to try to understand how our most powerful tool works. The brain is part of the environment, just as light and sound and smell are.

As Albus points out in his book, artificial intelligence research could take two paths: bottom up and top down. As we've seen, Nature uses bottom up to build intelligence. But by far the most common research by humans has been top down: let's build a chess-playing program, or a program that understands natural language. In our brains, the upper levels used to play chess or understand language draw much of their power from the underlying levels. If I say to you "grab that cup," a whole series of smaller actions must take place before you can grab the cup. You have to know what a cup is, and you have to know what it means to grab. You have to use your muscles and vision to look around your environment and find "that cup," whatever that might mean. You have to coordinate your muscles and your vision to move toward the cup and reach out and grasp it. You probably also have a good idea from the environmental context what I want you to do with that cup. If you write a program to understand natural language from the top down, eventually you are going to have to program in ALL those activities and many more. It's silly to think that you can accomplish everything the brain does with 1/1000 or even 1/1000000 as much power. Nature is very efficient. But that is exactly what researchers using the top down methods have attempted to do. So, although some modern systems fake intelligence to some degree, I think it is safe to say they aren't intelligent. And it's pretty easy to call their bluff.

On the other hand, we understand neurons well enough to emulate them. We mostly understand how neurons are put together to form the brain. We accept that the brain must follow the laws of physics as we know them. So, if we put together enough neurons in the right patterns with the right parameters, we should get a brain. And if we copy a working brain exactly, it should perform exactly as the one we copied. Instant intelligence!

What's remarkable is that it has been done! In 2014 a group built an artificial neural network that mimicked the nervous system of the C. elegans worm. This is a simple creature, with only 302 neurons. But it worked! That's very strong evidence that we are on the right track. Of course this creature is very simple. It's not likely to play chess or understand English.

The worm project was four years ago. Why haven't we built a human brain yet? Now it's time to go back to that complexity. The human brain has roughly 100 billion neurons. On average, each neuron has about 1,500 synapses (connections). That's a total of 150 trillion synapses. To model a neuron we need to store the number for its threshold. We can probably use a 16-bit integer. For each synapse we need to model its stored value and its weight. Again, we can probably use 16-bit integers for both of those. Also, each synapse needs to know which of the 100 billion neurons it is connected to, so we need to store that number. A 32-bit integer can only distinguish about 4 billion values, but we will pretend we have found a way to limit the numbers so that will work. In total, each synapse will need about 8 bytes of storage, and each neuron will need 1,500 times 8 plus 2 bytes; let's call it an even 12,000 bytes. We have a hundred billion neurons, and each one will need 12,000 bytes. That means we need 12,000 * 100,000,000,000, or 1,200,000,000,000,000 bytes (1.2 Petabytes!), just to store the neuron parameters! I don't know about you, but the PC I'm writing this on has 8 Gigabytes (about 8,000,000,000 bytes). I would need 150,000 of these PCs just to store the neuron parameters! And let's say you found a way to study the brain and map a complete neuron, with its 1,500 synapses and all their connections and weights, and you could create its model in your computer in one second. At one neuron per second, 100 billion neurons would still take you over 3,000 years (about 3,170) to build your model! We won't even get into how slowly the program would run. I think we've got our work cut out just building it.
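The storage estimate can be checked with a few lines of Python. This is a minimal sketch that assumes the per-synapse layout described in the paragraph (16-bit stored value, 16-bit weight, 32-bit source-neuron index) packed with no padding, which is what the `"<"` prefix in Python's `struct` format strings gives; the constant names are hypothetical.

```python
import struct

# Per-synapse record from the estimate above: 16-bit stored value,
# 16-bit weight, 32-bit index of the connected neuron ("<" means no padding).
SYNAPSE_FORMAT = "<hhI"
THRESHOLD_FORMAT = "<h"          # one 16-bit threshold per neuron

NEURONS = 100_000_000_000        # roughly 100 billion neurons
SYNAPSES_PER_NEURON = 1_500      # average connections per neuron
PC_BYTES = 8_000_000_000         # an 8 GB desktop PC
SECONDS_PER_YEAR = 365.25 * 24 * 3600

synapse_bytes = struct.calcsize(SYNAPSE_FORMAT)
neuron_bytes = SYNAPSES_PER_NEURON * synapse_bytes + struct.calcsize(THRESHOLD_FORMAT)
total_bytes = NEURONS * neuron_bytes
pcs_needed = total_bytes / PC_BYTES
years_to_map = NEURONS / SECONDS_PER_YEAR   # mapping one neuron per second

print(f"bytes per synapse: {synapse_bytes}")
print(f"bytes per neuron:  {neuron_bytes:,}")
print(f"total storage:     {total_bytes / 1e15:.2f} PB")
print(f"8 GB PCs needed:   {pcs_needed:,.0f}")
print(f"years to map:      {years_to_map:,.0f}")
```

Keeping the extra 2 bytes per neuron instead of rounding to 12,000 changes the answer only slightly: about 150,025 PCs instead of 150,000, and roughly 3,169 years of mapping.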

But all is not lost. There are currently some really big computers that have enough memory and speed. And thanks to Gordon Moore, we double the number of circuits we can put on a chip about every two years, so eventually we will probably have enough power on our desks. In 1980 a typical PC had around 32 Kilobytes. In 2000 it was around 32 Megabytes. Now, in 2018, we are hovering around 8 to 16 Gigabytes. Each of those steps is roughly a factor of 1,000, as Moore's law predicts: ten doublings in twenty years is 2^10 = 1,024. In 2040, a little more than 20 years from now, we can expect a typical PC to have around 32 Terabytes. That's getting into the range for some rudimentary intelligence. On a desk! Another twenty years, 2060, should give us PCs with enough memory and power to simulate a human brain. What those computers will look like is anyone's guess, but hopefully they won't be running Windows!

It is rather simple to build neurons. You can do it with an Arduino. With really big computers, we can build enough to simulate a human brain. The rest is just a simple matter of programming! Programming a neural network consists of setting the weights and thresholds and making the connections between the neurons. That isn't easy, as we've seen. But as usual we will put the computers we have to work on the problem. As has happened so many times already, the computer will be applied to automating the task and making it feasible. It's only a matter of time and research dollars. The benefits are too great for it not to happen.
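The Moore's law memory projection can be reproduced with a short Python sketch. It assumes, as the text does, that typical PC memory doubles every two years; that is a simplifying assumption rather than a guarantee, and the helper names `project_memory` and `human` are hypothetical. Sizes use binary units (1 KB = 1,024 bytes) so that the doublings come out as round numbers, and the target is the 1.2 Petabyte estimate for storing the neuron parameters.

```python
# Binary units: KB = 2**10 bytes, MB = 2**20, ..., PB = 2**50.
KB, MB, GB, TB, PB = (2 ** (10 * i) for i in range(1, 6))
BRAIN_BYTES = 1.2e15   # storage estimate for the neuron parameters


def project_memory(bytes_now, year_now, year_future, doubling_years=2):
    """Memory expected in `year_future` if capacity doubles every `doubling_years`."""
    doublings = (year_future - year_now) / doubling_years
    return bytes_now * 2 ** doublings


def human(n):
    """Format a byte count using the largest binary unit that keeps it under 1,024."""
    for unit in ["bytes", "KB", "MB", "GB", "TB", "PB"]:
        if n < 1024 or unit == "PB":
            return f"{n:,.0f} {unit}"
        n /= 1024


for start, year_start, year_end in [(32 * KB, 1980, 2000),
                                    (16 * GB, 2018, 2040),
                                    (16 * GB, 2018, 2060)]:
    size = project_memory(start, year_start, year_end)
    print(f"{year_end}: {human(size)}, enough for the brain model: {size >= BRAIN_BYTES}")
```

Under these assumptions, 2040 lands at 32 TB, still well short of the brain model, while 2060 reaches about 32 PB, comfortably above the 1.2 Petabyte estimate.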

So, we will have intelligent computers. Most likely within the foreseeable future. What's going to stop it from happening? In two or three generations, the computers may be putting us to work!
