This is a robot based on the concepts of embodiment and emergence, ideas I associate with Rolf Pfeifer's work. It is also based on a presentation I gave in 2011 on the "undictated/liberate-ive" approach to AI. Put simply, none of this robot's behavior is preprogrammed. Instead, the behavior emerges from the collective response of a bunch of cellular automata programmed to mimic the behavior of neurons (I named this NCA, short for Neural Cellular Automatons). The NCA reacts to the sensor values it is fed and forms a feedback loop with itself through embodiment. For example, its movement affects the IMU sensor readings, which are in turn fed back into the NCA. It is a purely deterministic system that eventually settles into an equilibrium, which can be disturbed by giving the "organism" a shock (extreme sensor values).
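
To make the idea concrete, here is a minimal Python sketch of an NCA-style sense-act loop. It is not the code from the project's repository. The ring topology, the leaky accumulate-and-fire rule, the names `NCA` and `live`, and all constants are hypothetical. The sketch illustrates three properties described above:

- every update is deterministic;
- the organism's own activity is sensed again on the next tick (the embodiment feedback loop);
- after a shock, the run settles into a repeating state, which the loop detects.

```python
# Hypothetical parameters, chosen only for illustration.
THRESHOLD = 10  # action-potential threshold
LEAK = 1        # potential lost per tick while a cell stays silent
WEIGHT = 4      # excitation received from each neighbour that fired last tick


class NCA:
    """A ring of integer 'neuron' cells updated by a fixed, deterministic rule."""

    def __init__(self, n):
        self.potential = [0] * n
        self.fired = [False] * n

    def state(self):
        return tuple(self.potential), tuple(self.fired)

    def step(self, inputs):
        """Advance one tick given per-cell external inputs; return the activity."""
        n = len(self.potential)
        new_fired = [False] * n
        for i in range(n):
            # self.fired[i - 1] wraps to the last cell when i == 0 (ring topology)
            excitation = WEIGHT * (self.fired[i - 1] + self.fired[(i + 1) % n])
            v = self.potential[i] + inputs[i] + excitation
            if v >= THRESHOLD:
                new_fired[i] = True
                self.potential[i] = 0                 # reset after firing
            else:
                self.potential[i] = max(0, v - LEAK)  # leak toward rest
        self.fired = new_fired
        return sum(new_fired)  # number of firing cells drives the (simulated) body


def live(nca, shocks, max_ticks=100):
    """Run the sense-act loop.

    `shocks` maps tick -> extreme sensor value injected into cell 0.
    The body's own movement (last tick's activity) is sensed as a crude
    'IMU' value on cell 0, closing the embodiment feedback loop.
    Returns (activity trace, tick the final cycle starts, cycle period),
    or (trace, None, None) if no repeated state is found within max_ticks.
    """
    n = len(nca.potential)
    last_shock = max(shocks, default=-1)
    imu = 0
    seen = {}
    trace = []
    for tick in range(max_ticks):
        if tick > last_shock:  # only shock-free states can form a true cycle
            key = (nca.state(), imu)
            if key in seen:
                return trace, seen[key], tick - seen[key]
            seen[key] = tick
        inputs = [0] * n
        inputs[0] = imu + shocks.get(tick, 0)
        imu = nca.step(inputs)  # movement perturbs the IMU, sensed next tick
        trace.append(imu)
    return trace, None, None
```

For example, `live(NCA(6), {0: 20})` returns `([1, 0, 0, 0, 0, 0], 5, 1)`. The shock makes cell 0 fire once, and the excitation it spreads to its neighbours leaks away. By tick 5 the organism is back at a resting fixed point.

Potentials stay below `THRESHOLD` and the "IMU" value is at most `n`, so the state space is finite. A shock-free run must therefore eventually revisit a state. The resulting equilibrium can be a fixed point like this one or a longer cycle.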

The idea is to make the robot as biomimetic as possible in both hardware and software, so there are no wheels or mechanical arms/legs whatsoever. The "worm" design was inspired by the fact that the first creature to have its neural structure fully mapped was the worm C. elegans. Over numerous iterations, it went from being just a worm to the current design, which is modeled on both a worm and a brain-brainstem structure. The current design has "slots" (more on these later) that allow its physical form to be expanded into other creatures. In essence, it is a brain-brainstem that can be plugged into other bodies to turn it into other robotic creatures. Adding skin will work too.

Purpose of the robot? Animal-assisted therapy for kids in places where real pet animals are not viable. The usual pet robots are full of repetitive, preprogrammed behavior and tend to lose their novelty once all the routines have been observed. This robot relies purely on emergent phenomena, so its behavior stays dynamic. Observations of earlier prototypes (I lost the video, sorry) suggest, allowing for some personal bias, that it should be at least comparable to keeping a hamster. One of them actually cried a lot, so we unplugged its small speaker. We later figured out that a better fix was to raise the NCA action-potential threshold instead.
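
A single cell in isolation shows why raising the threshold could calm a crying prototype. Here is a minimal Python sketch that assumes a leaky integrate-and-fire rule and treats each spike as one hypothetical "cry" on the speaker. `count_spikes` and its parameters are illustrative and not taken from the project's code.

```python
def count_spikes(drive, threshold, leak=1, ticks=100):
    """Count how often one leaky integrate-and-fire cell fires under constant drive.

    Each spike stands in for one hypothetical 'cry' on the speaker.
    """
    potential = 0
    spikes = 0
    for _ in range(ticks):
        potential += drive
        if potential >= threshold:
            spikes += 1
            potential = 0                         # reset after an action potential
        else:
            potential = max(0, potential - leak)  # leak back toward rest
    return spikes
```

With a constant drive of 3 and a leak of 1, doubling the threshold from 10 to 20 halves the spikes per 100 ticks (from 20 to 10). Doubling it again to 40 gives 5. A drive no larger than the leak, as in `count_spikes(1, 10)`, never fires at all.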

The whole project is based on Open Hardware and Open Software. The 3D design was done in FreeCAD, rendered in Blender, and printed on a RepRapPro Ormerod 2 3D printer. The microcontroller runs Arduino. It's meant to be a fully open project, as a way of thanking the open community for having given so much. I'll upload all the files for this project to GitHub (once I get the hang of it, soon) and link them here.

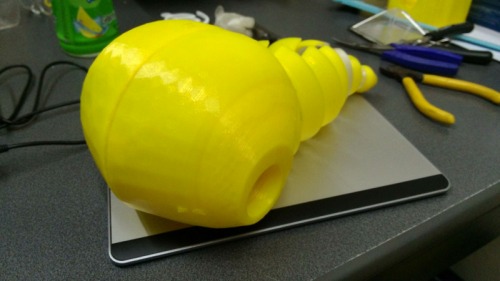

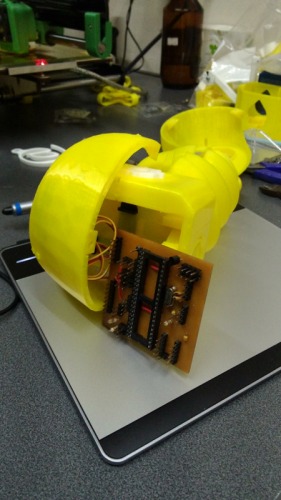

Pictures of the actual build:

Build status:

- Physical form: 100% done, printed in PLA (eco-friendly). A close friend (who is a very gifted illustrator) is currently "cute-ifying" the physical shape. The next 3D-print revision will be a lot, lot, lot better :D

- Electronics: 80% done (ATmega1284P; input: MPR121 breakout board, MPU-6050 on a GY-521, LDR; output: RGB LED, small speaker, 3x TGY-R5180MG servos actuating a cable-pull mechanism)

- Software: 90% done (integrating the MPR121 is what's left)
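
Since the MPR121 is the remaining piece, here is a minimal Python sketch of turning raw register bytes from the listed sensors into values an NCA could consume. It assumes the datasheet register layouts:

- MPR121: touch status in registers 0x00/0x01, with 12 electrode bits.
- MPU-6050: accelerometer words stored high byte first from 0x3B, at 16384 LSB/g in the default ±2 g range.

The I²C reads themselves are left out. The function names and `SHOCK_LIMIT_G` are hypothetical. The same bit manipulation would carry over to the ATmega1284P's Arduino code.

```python
import math

MPR121_ELECTRODES = 12
MPU6050_LSB_PER_G = 16384  # accelerometer sensitivity at the default +/-2 g range
SHOCK_LIMIT_G = 1.5        # hypothetical: acceleration magnitude treated as a "shock"


def touched_electrodes(status_lo, status_hi):
    """Decode MPR121 touch status registers 0x00 (ELE0-7) and 0x01 (ELE8-11)."""
    bits = ((status_hi << 8) | status_lo) & 0x0FFF  # drop proximity/over-current bits
    return [e for e in range(MPR121_ELECTRODES) if (bits >> e) & 1]


def accel_g(high, low):
    """Convert one MPU-6050 accelerometer axis (high byte first, e.g. 0x3B/0x3C) to g."""
    raw = (high << 8) | low
    if raw >= 0x8000:  # 16-bit two's complement
        raw -= 0x10000
    return raw / MPU6050_LSB_PER_G


def is_shock(ax, ay, az, limit_g=SHOCK_LIMIT_G):
    """Flag an extreme reading that could be fed to the NCA as a shock."""
    return math.sqrt(ax * ax + ay * ay + az * az) >= limit_g
```

For instance, `touched_electrodes(0b00000101, 0b00011000)` returns `[0, 2, 11]`. Bit 4 of the second byte is the proximity flag, so it is masked off. `accel_g(0x40, 0x00)` gives `1.0`, roughly what a level sensor at rest reads on its vertical axis.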

Currently:

- The robot is built in my (and my wonderful partners') office/workshop in Malaysia. Complicating circumstances require me to be in my home country (Indonesia) at the moment, so the build is stranded. On the positive side, I get to focus on documentation and on sharing all existing information with fellow robotics/AI enthusiasts. Basically, I'm doing whatever I can until I'm back in Malaysia, on top of a pile of other work that pays the bills... T_T (I really should try crowdfunding).

- Sorry if I'm ranting too much in my first post; I'm just discovering this place. It's lonely out there in the real world, with no one to talk to about robotics/AI stuff...

- Will update this post again soon (within a week I hope).

- Thanks for reading this far, you are awesome :)

Update on 13-Jul-2015:

- Uploaded a video: https://youtu.be/-nrMCkI62Js

- Messy source code on GitHub: https://github.com/ronald00chua/NCA-for-UROv00

- Sorry, guys, I've had so much bureaucracy to deal with over the past months that I haven't been able to make much progress... I also entered this project into "Robot Launch 2015", hoping for investment so I can develop it full time.

- Thank you again :)

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/undictated-robotic-organism-uro-version-00

Most of us will think it’s still too little. I would like to see a video, but considering you’re not even in the same country, I will suffer and wait.