The goal is to design, build and program a robot that is nearly as sophisticated as ASIMO ($1,000,000) or Willow Garage's PR2 ($400,000) from off-the-shelf components. The project is completely open source; everybody can participate.

Groups

- Design: Ro-Bot-X, cr0c0, MarkusB

- Mechanics: TinHead, JoeBTheKing, Jad-Berro, MarkusB

- Electronic: MarkusB, Jad-Berro, Krumlink

- Software: GroG, AgentBurn

- Web presence: JoeBTheKing

- You?

Inventory


Current Milestones

- Assemble brains in a protective case

- Install all software

- Verify working of Battery and Power Supply Unit

- Attach Webcam

- Attach Kinect - OpenCV Kinect update

- Attach Bare Bones Board Arduino Clone

- Design Base

Build Log (Most Current At Top)

OpenCV / Kinect Progress :

08-24-2011

I created a couple more filters and added some functionality to the OpenCV Service. I have also created a new Service called FSMTest for "Finite State Machine Test". Not much is implemented as I wanted to get the Sphinx and Google speech recognition Services in a useful state.

Now that the housekeeping is done, I have uploaded a quick video of the progress.

What happens :

- The FSM starts up - the speech recognition Services will begin listening for commands (I have disabled them for now, because they take a lot of "start up" time, which hinders debugging)

- I will command it to look for an object

- It will rapidly go through various filters and techniques for object segmentation ... "Finding the Object"

- It will then go through its associative memory looking for a match - if it finds a match it will "Report the Object"

- If it does not find a match it will query for associative information from me. This will be "Resolving the Object". I will use Sphinx speech recognition initially, but I am very interested in hooking into the Google speech recognizer Service I created recently.

- This test shows a guitar case lying against the wall

- Segmentation is only done with the Kinect. The Kinect can measure range, so the depth band from 4' to 4' 3" is segmented off and turned into a polygon.

- The polygon's bounding area is found then a template is created.

- The Matching template filter is put into place, then the object is matched and locked.

- The matching template will be associated with speech recognized words and put into memory, which can be serialized.

- When an object leaves the field of vision, and later returns to it, the robot will "Resolve the Object" and its associations. It will be able to "Report the Object" by saying such brilliant things as "Why did you put a guitar case in front of me?" .. which in turn may lead to other associations?
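As a rough illustration of the flow above, here is a minimal Python sketch. It is not the actual MRL / FSMTest code (which is Java). It assumes the depth frame is a plain list of rows with values in millimetres, and it uses the bounding-box size as a stand-in for a real image template. All names (`segment_depth_band`, `run_once`, `ask_label`) are hypothetical.

```python
from enum import Enum, auto

FEET_TO_MM = 304.8  # 1 foot = 304.8 mm


class State(Enum):
    FINDING = auto()    # "Finding the Object"
    REPORTING = auto()  # "Report the Object"
    RESOLVING = auto()  # "Resolving the Object"


def segment_depth_band(depth_mm, near_mm, far_mm):
    """Bounding box (x_min, y_min, x_max, y_max) of pixels inside the depth band, or None."""
    hits = [(x, y)
            for y, row in enumerate(depth_mm)
            for x, d in enumerate(row)
            if near_mm <= d <= far_mm]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return (min(xs), min(ys), max(xs), max(ys))


def run_once(depth_mm, memory, ask_label):
    """One pass: find an object, then report it if known or resolve it if not.

    memory maps a template key to a word; ask_label is a callback that
    stands in for speech recognition (an assumption for this sketch).
    """
    trace = [State.FINDING]
    box = segment_depth_band(depth_mm, 4 * FEET_TO_MM, 4.25 * FEET_TO_MM)
    if box is None:
        return trace, None
    # Stand-in for a real template: just the width and height of the box.
    key = (box[2] - box[0] + 1, box[3] - box[1] + 1)
    if key in memory:
        trace.append(State.REPORTING)
    else:
        trace.append(State.RESOLVING)
        memory[key] = ask_label(box)
    return trace, memory[key]


# Tiny fake depth frame: background at 3000 mm, a 2x2 object at 1250 mm.
frame = [[3000] * 5 for _ in range(4)]
for y in (1, 2):
    for x in (2, 3):
        frame[y][x] = 1250

memory = {}
first_trace, first_word = run_once(frame, memory, lambda box: "guitar case")
second_trace, second_word = run_once(frame, memory, lambda box: "unused")
```

The first pass has nothing in memory, so it resolves the object by asking for a label. The second pass finds the same key in memory and reports it without asking.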

Remote Connectivity:

08-15-2011

It is nice to have options. A full X desktop would be nice to have with this project.

It would be necessary to start and use the X desktop from the command line. Here are the steps necessary to accomplish this.

- By default, Fedora 15 does not start the sshd daemon on boot - to change this, use the following commands

# systemctl start sshd.service # - will start the sshd daemon

# systemctl enable sshd.service # - will autostart the daemon on boot

- X11vnc is a VNC server - a nice description of the various flavors of VNC server is here https://help.ubuntu.com/VNC/Servers

- VNC needs a number of ports opened on the firewall - 5800 5900 6000 for display 0 - for each display N a new set of ports 580N 590N 600N would need to be opened

- The command line to start the VNC server on the MAAHR brain is

sudo x11vnc -safer -ncache 10 -once -display :0

- This starts the VNC server so a login is available - that is why root needs to start it. You must make sure that display :0 is not being used by a current login.

- TADA ! Fedora Core 15 running remotely on maahrBot

- I will be communicating to MRL directly so I needed to open up port 6161 UDP on the firewall too

- The tower has grown a bit - It now includes the SLA Battery & a Power Supply. The idea is I would like to keep this system on with 100% uptime. So, when the system is charging it can still be online. I don't know when Markus's base will be here, so I'm working on a temporary one with some old H-Bridges I created a long time ago.

It's simple, allows extensibility with more decks, cheap, and should be modular enough to "bolt on" to a variety of platforms.

- It's "nice" to have a desktop to work on but MRL can run headless and the video feeds can be sent to other MRLs running on different computers.

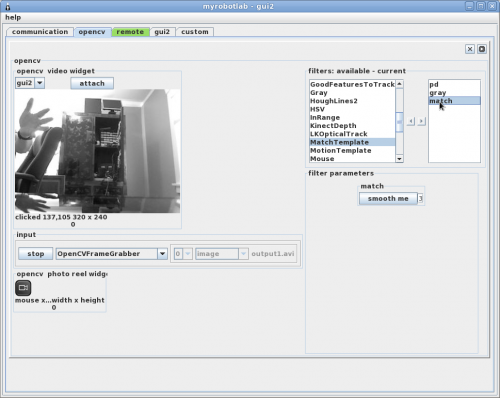

- I downloaded MRL-00013, unzipped it, and ran the following command line for headless operation, remote connectivity, and OpenCV

$ java -classpath ":myrobotlab.jar:./lib/*" -Djava.library.path=./bin org.myrobotlab.service.Invoker -service OpenCV opencv RemoteAdapter remote

This starts the OpenCV service and the RemoteAdapter service.

On my desktop I started just a GUI service instance and connected to the maahrBot.

- After refreshing the MRL services locally, the remote services on the maahrBot appeared as green tabs

- The control is the same as if the service was running locally to my desktop - it worked well with a high frame rate.

- A few more little updates and I should be able to switch from webcam to kinect on the maahrBot

Brain Arrives:

07-13-2011

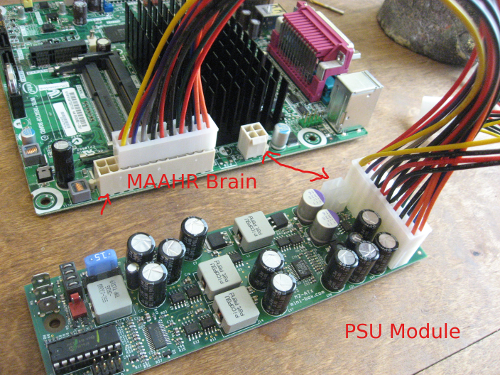

The simplest protective Brain Case I could think of is a series of wood decks supported by 1/4" bolts.

I had some scrap 1/4" panelling for the decks. Wood is nice because you can screw in the components anywhere and it's non-conductive.

Deck 1 - Mini-ITX Computer

Deck 2 - Drive and Power Supply Unit

Deck 3 - Tagged Cover.

Software Install

- Connected Brain to a regular ATX power supply - this is important to do on the install - for example you can brick it if power goes out during a BIOS update

- Jacked in the EtherPort (I don't like wireless during installs)

- Update BIOS (Link)

- Installed Fedora 15 from Live USB - Use Basic Video Mode (Problems with GNOME3)

- Installed RPMFusion and Livna Repos

- Disabled SELinux

- Rebooted

- yum -y groupinstall 'Development Tools' 'Java Development' 'Administration Tools' Eclipse 'Sound and Video' Graphics

- _go do something - this will take a while_

- # uname -a returns (have not rebuilt the kernel yet)

Linux maahr.myrobotlab.org 2.6.38.8-32.fc15.i686 #1 SMP Mon Jun 13 20:01:50 UTC 2011 i686 i686 i386

- Installed Chrome

- Started Eclipse - was pleasantly surprised to see it had loaded with SVN support

- Browsed to SVN URL - http://myrobotlab.googlecode.com/svn/trunk/ right-click "check out" - finish - ready to Rock 'n Roll

- yum -y groupinstall Robotics

- yum -y install cmake cmake-gui

- New version of OpenCV 2.3 (they are much more productive now) - downloaded

Todo

make opencv 2.3 build - get latest javacv

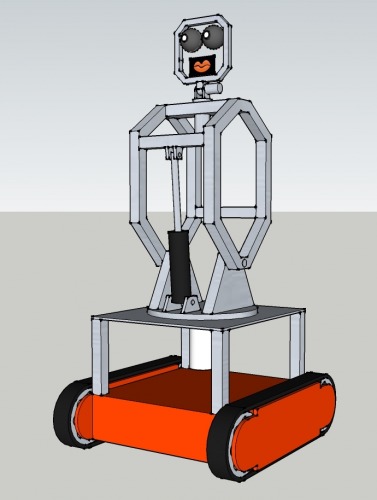

Hi LMR!

This is the MAAHR project. MAAHR stands for Most Advanced Amateur Humanoid Robot. The goal is to design, build and program a robot which is about as sophisticated as ASIMO. The project is completely open source; everybody can participate. I'll sponsor MAAHR with 15,000 USD for hardware, software and logistics.

MAAHR's specifications so far:

- 2DOF head with 2 cameras and LCD mouth

- 2DOF main body

- 2 x 5DOF robot arms

- 2 x robot hand, capable of lifting at least 1kg

- 4 wheeled or tracked base

- Moving objects

- Posture and gesture recognition

- Speech recognition (and distinguishing sounds)

- Synthesized voice

- Recognizes the objects and terrain of its environment

- Facial recognition

- Internet connectivity

A first sketchup draft I did (arms still missing):

If you participate and have an update on the MAAHR robot page, please pm me and I'll send you the password.

At the moment there are 5 working groups:

- Design: Ro-Bot-X, cr0c0, MarkusB

- Mechanics: TinHead, JoeBTheKing, Jad-Berro, MarkusB

- Electronic: MarkusB, Jad-Berro

- Programming: GroG, AgentBurn

- Web presence: JoeBTheKing

Update April 24, 2011 by MarkusB

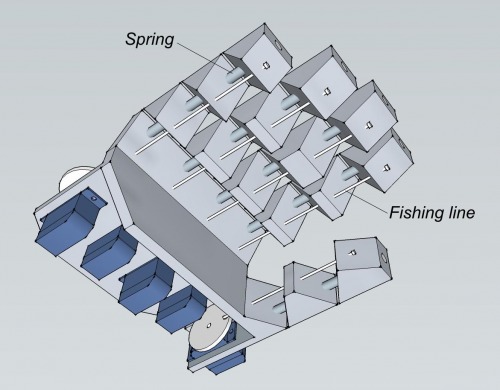

First hand design draft added (see zip hand-1):

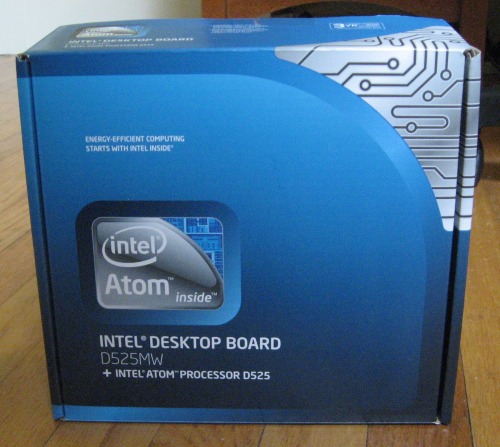

Update 2011.07.02

Markus has sent me funds so that I could purchase the "Brain" from details gathered on this thread - https://www.robotshop.com/letsmakerobots/node/27362#comment-67606 . The brains are speeding to my house and should arrive in the next week.

I have purchased a Kinect, whose drivers I got working, and will incorporate it into the Swiss Army Knife Robot Software - "MyRobotLab" - as another Service

I wave at you Yay !

Markus will be working on the base. After the brain meets the base, and with a little programming and some sensors attached, we should be able to complete the first round of milestones.

These would include :

- Basic local obstacle avoidance

- Basic mapping (encode & Kinect) - precursor to SLAM

- Telepresence - Teleoperation - Control and data acquisition through the Intertoobs

Update 2011.07.07

Lots more to do ... OpenCV template matching does not work well with scaling or rotation. Rotation will not be much of an issue, as wall sockets typically don't spin on the wall. Scaling will be, but it can be remedied by collecting multiple templates at known distances from the bot. Scale can then be part of the associated memory of the "concept" of what a wall socket is...
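To make the multiple-template idea a bit more concrete, here is a small Python sketch. It assumes the bot already has a range reading for the object (for example from the Kinect) and simply picks the template that was captured closest to that distance. The function names, template names and distances are made up for illustration. The scale helper relies on the usual pinhole-camera approximation that apparent size is inversely proportional to distance.

```python
def pick_template(templates, distance_mm):
    """Return the template captured at the distance closest to distance_mm.

    templates maps a capture distance in millimetres to a template
    (here just a name; in practice it would be image data).
    """
    if not templates:
        raise ValueError("no templates collected yet")
    nearest = min(templates, key=lambda d: abs(d - distance_mm))
    return templates[nearest]


def expected_scale(template_distance_mm, object_distance_mm):
    """Approximate resize factor for a template, assuming a pinhole camera."""
    if template_distance_mm <= 0 or object_distance_mm <= 0:
        raise ValueError("distances must be positive")
    return template_distance_mm / object_distance_mm


# Hypothetical wall-socket templates collected at three known distances.
socket_templates = {
    500: "socket_500mm",
    1000: "socket_1000mm",
    2000: "socket_2000mm",
}

chosen = pick_template(socket_templates, 1200)
scale = expected_scale(1000, 2000)
```

Picking the nearest stored template keeps the matching step unchanged, while the scale factor could be used to resize a single template when no stored distance is close enough.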

I'll have video when I get it developed a bit more....

Update 2011.07.08

good news and bad news - PSU unit arrived (Yay!) / missing cable ! (Yarg!)

Update 2011.07.08 20.35 PST

Alright, an idea has been incubating in my head for a while and I can see how it might work. I've been very interested in the power of "community internet teaching" for robotics. Teaching robots can be an arduous and time consuming job. Much like editing video frame by frame. There was a project called "The White Glove Tracking Project" which used a distributed work force on the internet to do time consuming object isolation for an art project (previous - reference) .

I am working on dynamic Haar-Learning and tracking in addition to Kinect object isolation. Once it segments or isolates an object, it will need language input to associate the newly found object with more data. The interaction of people and the robot would be very beneficial. I was thinking: what if it used the ShoutBox, Twitter or some other web form to alert others that it had found a new object? Then someone could log into it, examine what it had found and associate the correct word with it, e.g. "Table", "Light", "Chair" or "Ball". The word and the image data (Haar data) would be associated and saved. This would be similar to the Kinect video, except that instead of 1 person teaching the robot, there could be hundreds or thousands.
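With many people labelling the same object, the bot would need some way to settle on one word. Nothing like this exists in the project yet; the sketch below is only one possible approach, a simple majority vote, and the function name and thresholds (`min_votes`, `min_share`) are assumptions chosen for illustration.

```python
from collections import Counter


def consensus_label(votes, min_votes=3, min_share=0.6):
    """Return the agreed word for an object, or None if there is no consensus.

    votes is a list of words submitted by different people. Words are
    compared case-insensitively and blank submissions are ignored.
    """
    counts = Counter(v.strip().lower() for v in votes if v.strip())
    total = sum(counts.values())
    if total < min_votes:
        return None  # not enough people have answered yet
    label, count = counts.most_common(1)[0]
    if count / total < min_share:
        return None  # answers are too mixed to trust
    return label


agreed = consensus_label(["Table", "table ", "Chair", "table"])
too_few = consensus_label(["Ball", "Light"])
too_mixed = consensus_label(["ball", "light", "chair"])
```

Returning None when the answers are too few or too mixed lets the bot keep asking rather than saving a wrong association.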

What should it do?

- Actuators / output devices: Not decided

- Control method: Wifi - Internet, Phone, RF Joystick, Other?

- CPU: Main - Mini ITX Dual Core 1.8 GHz

- Operating system: Linux - Fedora 15

- Power source: M2-ATX 6-24V Intelligent Automotive ATX Power Supply

- Programming language: Java, MyRobotLab, OpenCV, Sphinx 4, TTS

- Sensors / input devices: IR, ultrasonic, Kinect, Others?

- Target environment: indoors

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/the-maahr-project