MmBot (so named because she was born at Media Molecule) is designed to be a cute robot pet. The key objective is to wander around the office, interacting with people and generally making them go awwwwwwwwwwww wot a lovely robot. Internally this is gonna involve various amounts of intelligence (face recognition and stereo imaging), along with a lot of smoke-and-mirrors tricks to give apparent intelligence. Initially she'll be powered by an Arduino Mega communicating with a PC (which does the real thinking), but v2 will contain a Raspberry Pi.

Update (9th June)

Very quick update. I've finally got around to uploading the code to source control here: https://github.com/wibble82/mmbot

Feel free to take any code ya like and generally do what you want with it, although I take no responsibility for what you do with it, etc. etc., blah blah.

Update (2nd June)

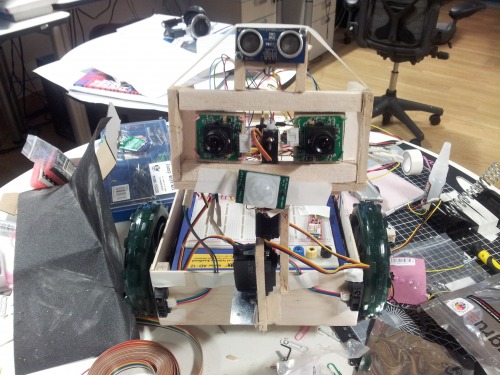

I've just about finished the hardware side of MmBot, and I'm pondering whether to progress further or begin work on MmBot 2 in anticipation of my Raspberry Pi (which is in the post). Plus I now own a 3D printer, so I can print my next robot!

More info here:

http://robotblogging.blogspot.co.uk/2012/05/its-all-hooked-up.html

Update (26th May)

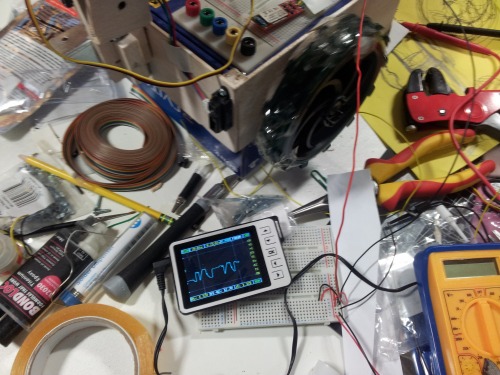

Finished my quadrature encoder and started hooking up a Sabertooth 2x5 motor controller, and what an amazing piece of kit! Highly recommended to anybody looking for a motor controller (if you're willing to spend a few pounds). More info here:

http://robotblogging.blogspot.co.uk/2012/05/sabre-tooth-motor-controller.html

Update (20th May)

Got to work on a proper quadrature encoder. See here:

http://robotblogging.blogspot.co.uk/2012/05/quadrature-encoder.html

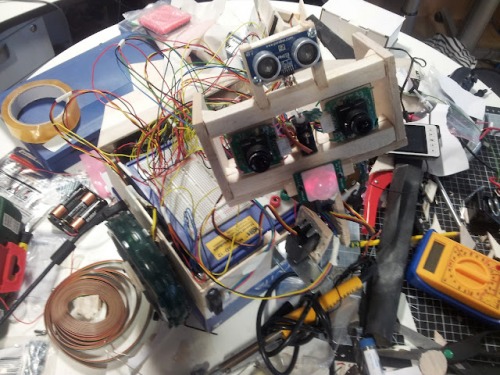
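The linked post has the details of the build itself. As a general illustration only, the C++ below sketches the standard way to decode two quadrature channels: a lookup table indexed by the previous and current (A, B) states gives +1, -1 or 0 for each transition, and a change on both channels at once is flagged as a missed edge. The class name, which rotation direction counts as positive, and the default starting state of 00 are all assumptions, not MmBot's actual code.

```cpp
#include <cstdint>

// Hypothetical decoder, not taken from MmBot's source.
// Table index = (previous state << 2) | current state, where state = (A << 1) | B.
// With this table the sequence 00 -> 10 -> 11 -> 01 -> 00 counts as +1 per step
// (an assumed "forward" direction); the reverse sequence counts as -1.
constexpr int8_t kQuadTransition[16] = {
     0, -1, +1,  0,   // from 00
    +1,  0,  0, -1,   // from 01
    -1,  0,  0, +1,   // from 10
     0, +1, -1,  0    // from 11
};

class QuadratureDecoder {
public:
    // Initial channel levels; assumed to be 00 unless told otherwise.
    explicit QuadratureDecoder(bool a = false, bool b = false)
        : prev_(encode(a, b)) {}

    // Feed the current logic levels of channels A and B.
    // Returns the step applied to the count: +1, -1 or 0.
    int update(bool a, bool b) {
        const uint8_t state = encode(a, b);
        const int step = kQuadTransition[(prev_ << 2) | state];
        if (step == 0 && state != prev_) {
            ++errors_;  // both channels changed between samples: an edge was missed
        }
        prev_ = state;
        count_ += step;
        return step;
    }

    long count() const { return count_; }
    long errors() const { return errors_; }

private:
    static uint8_t encode(bool a, bool b) {
        return static_cast<uint8_t>((a ? 2 : 0) | (b ? 1 : 0));
    }

    uint8_t prev_;
    long count_ = 0;
    long errors_ = 0;
};
```

On an Arduino, `update()` would typically be called from a pin-change interrupt or a fast polling loop with the two `digitalRead()` values; sampling too slowly shows up as a rising `errors()` count.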

Update (10th May)

Added more sensors! I decided the more the better, so MmBot got a few extra.

More here: http://robotblogging.blogspot.co.uk/2012/05/even-more-sensors.html

Here's MmBot with newly added ultrasound, IR rangefinders and a motion sensor:

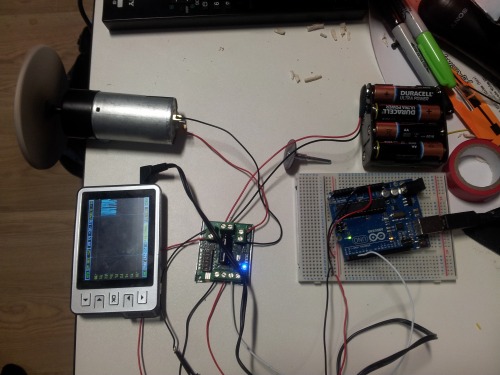

Next, I started building wheel encoders using infrared reflectivity sensors and coloured disks on the inside of my wheels. This shot shows the sensor signal as the wheel turns:

And a YouTube video if you fancy it: http://www.youtube.com/watch?v=cX9qb_0jyYQ
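One common way to turn a reflectivity sensor's signal into wheel ticks is to threshold the analog reading with hysteresis, counting a tick each time the sensor passes from a light segment to a dark one or back. The sketch below assumes raw analog readings (e.g. 0 to 1023 from the Arduino's 10-bit ADC) and hypothetical low/high thresholds that would need calibrating against the real signal; it is an illustration, not MmBot's actual code.

```cpp
// Hypothetical tick counter for a single reflectivity sensor over a
// disk of alternating light and dark segments.
class ReflectivityTickCounter {
public:
    // lowThreshold < highThreshold; readings between them are treated as
    // "no change" so noise near one cut-off can't produce extra ticks.
    ReflectivityTickCounter(int lowThreshold, int highThreshold)
        : low_(lowThreshold), high_(highThreshold) {}

    // Feed one raw sensor reading. Returns true if it registered a tick,
    // i.e. the reading crossed fully into the opposite band.
    bool update(int reading) {
        if (!initialised_) {
            // The first reading only establishes which band we start in.
            inHighBand_ = reading >= high_;
            initialised_ = true;
            return false;
        }
        if (inHighBand_ && reading <= low_) {
            inHighBand_ = false;
            ++ticks_;
            return true;
        }
        if (!inHighBand_ && reading >= high_) {
            inHighBand_ = true;
            ++ticks_;
            return true;
        }
        return false;  // still in the same band, or inside the hysteresis gap
    }

    long ticks() const { return ticks_; }

private:
    int low_;
    int high_;
    bool inHighBand_ = false;
    bool initialised_ = false;
    long ticks_ = 0;
};
```

Dividing `ticks()` by the total number of light and dark segments on the disk gives wheel revolutions. With a single sensor the count carries no direction information, which is what the second, offset channel of a quadrature encoder adds.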

Update (1st May)

Got my first stereo image feed coming from MmBot, as you can see above.

More here: http://robotblogging.blogspot.co.uk/2012/05/first-sight.html

And if you like debugging: http://robotblogging.blogspot.co.uk/2012/05/debugging-eyes.html

Is cute - a robot pet

- Actuators / output devices: DC motors, stepper motor

- CPU: Arduino Mega

- Operating system: Windows

- Power source: 4.5V, 18V

- Programming language: C++, C#

- Sensors / input devices: Camera, ultrasound, microphone, accelerometer, compass

- Target environment: Home and office

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/mmbot