Another robot with too many sensors and delusions of Skynet.

Environmental Monitoring and Security Patrol Robot

Features that I am aiming for:

- Internal monitoring for data centres, warehouses, open plan offices, hospitals, factories, museums, shopping centres.

- Monitoring of the environment, equipment, racks, cables, pipes, people, or anything sensitive to humidity, temperature, noxious gases or vapours.

- Automatic alerting of new obstacles, movement and temperature threshold changes.

- Scheduled checks and reporting on equipment located throughout the area.

- Responds to building or equipment alerts, providing remote eyes, ears and environment reports.

- Navigation and identification based on AprilTags (fiducial markers similar to QR codes) positioned on walls or devices.

- Augment your security staff, minimise your night-time staff and cut down on the number of sensors required to monitor your site.

- Non-contact temperature sensor, thermal camera, IR flame detector and humidity sensors provide directional monitoring and video overlay.

- Regular measurement of PM2.5/PM10 particulates, carbon monoxide and volatile organic compounds.

- Centralised reporting and environment data analysis.

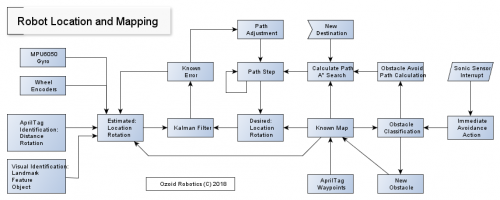

- Location mapping, position identification and auto recalibration.
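The threshold alerting in the feature list isn't described in detail yet. As a minimal sketch, assuming a simple high-limit alarm per sensor, adding hysteresis stops a reading that hovers around the limit from flooding the central server with alerts. `ThresholdAlarm`, the 27 C limit and the sample readings are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThresholdAlarm:
    """High-limit alarm with hysteresis (hypothetical helper).

    Raises an alert when a reading reaches `high`, and clears it only once
    the reading drops back below `high - hysteresis`.
    """
    name: str
    high: float
    hysteresis: float = 1.0
    active: bool = False

    def update(self, value: float) -> Optional[str]:
        if not self.active and value >= self.high:
            self.active = True
            return f"ALERT {self.name}: {value:.1f} >= {self.high:.1f}"
        if self.active and value < self.high - self.hysteresis:
            self.active = False
            return f"CLEAR {self.name}: {value:.1f}"
        return None  # no state change, nothing to report


if __name__ == "__main__":
    rack_temp = ThresholdAlarm("rack 3 inlet temp (C)", high=27.0, hysteresis=1.5)
    for reading in [24.0, 26.9, 27.2, 26.5, 27.8, 25.4, 24.9]:
        msg = rack_temp.update(reading)
        if msg:
            print(msg)
```

Only the first crossing above 27.0 and the later drop below 25.5 produce messages; the 26.5 and 27.8 readings in between stay silent.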

Software and tools used:

- Python 3

- OpenCV

- numpy

- Pygame

- Tiled map editor (mapeditor.org)

- spidev

- Raspberry Pi Linux + Mint

- VNC

- GEdit

- Notepad++

- Fusion 360

- Cura

- LulzBot TAZ 6

- Arduino libraries for the devices below

Fully 3D-printed chassis, body and wheels (PLA + NinjaFlex)

*3x GY-31 TCS3200 Colour Sensors

GY-530 VL53L0X Laser Ranging Sensor

*SDS011 High Precision Laser PM2.5 Air Quality Sensor

*CJMCU-8118 CCS811 HDC1080 Carbon Monoxide CO VOCs

*AMG8833 64-pixel (8x8) thermal camera

*Non-contact MLX90614 Temperature Sensor (100cm)

MPU-6050 6DOF gyro/accelerometer

Raspberry Pi 3

Arduino Mega 2560

DHT22 Temp/Humidity Sensor

3x SrHc10 Ultrasonic Transducers

PIR Human sensor

Pi Camera - HD1024

Laser 560nm Red

Flame detector

7x RGB LEDs

LED Voltmeter

2x 5-12V motors (head) + H-bridge stepper controllers

2x MD25 Motors + Controller (Devantech)

1x small castor

16-channel bidirectional logic level convertor

2x DC-DC Buck convertors

12V sealed lead-acid (SLA) battery

Double-pole double-throw (DPDT) toggle switch

2x small speakers and stereo amplifier circuit

(*) Still to test and fit

**Done/TODO**

All hardware working, and control from the Raspberry Pi tested OK

AprilTag identification tested OK

Face detection tested OK

Laser pointer detection tested OK

Environmental sensor tested OK

Two-way SPI comms OK

Problem with small steppers not unlocking and getting way too hot

Neck reinforcements

More weight shifted to rear (to avoid tip forward when stopping suddenly)

Environment sensor calibration

Additional Autonomy rules

*Server API (for receiving and sending alerts)

*Mobile App (For Control and Remote view)

Noise interference on the speakers (from the Raspberry Pi): shorten the wires, add ferrites, etc.

So much more to finish...
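The two-way SPI link between the Pi and the Mega isn't documented here. One common way to make such a link robust is a small framed message with a length field and a checksum. The sketch below assumes a hypothetical `[start, command, length, payload..., checksum]` layout; `START_BYTE`, `build_frame` and `parse_frame` are illustrative names, not the robot's actual protocol.

```python
START_BYTE = 0xAA  # hypothetical frame marker


def build_frame(command: int, payload: bytes) -> list[int]:
    """Pack a command into a hypothetical [start, cmd, len, payload..., checksum] frame."""
    if not 0 <= command <= 0xFF:
        raise ValueError("command must fit in one byte")
    if len(payload) > 0xFF:
        raise ValueError("payload too long for a one-byte length field")
    body = [command, len(payload), *payload]
    checksum = sum(body) & 0xFF  # 8-bit additive checksum over cmd, len and payload
    return [START_BYTE, *body, checksum]


def parse_frame(frame: list[int]) -> tuple[int, bytes]:
    """Validate a received frame and return (command, payload)."""
    if len(frame) < 4 or frame[0] != START_BYTE:
        raise ValueError("missing start byte or frame too short")
    command, length = frame[1], frame[2]
    if len(frame) != length + 4:  # start + cmd + len + payload + checksum
        raise ValueError("length field does not match frame size")
    payload = bytes(frame[3:3 + length])
    if (sum(frame[1:-1]) & 0xFF) != frame[-1]:
        raise ValueError("checksum mismatch")
    return command, payload
```

On the Pi, a frame built this way could be sent with the spidev library's `SpiDev.xfer2()`, which clocks the list of bytes out and returns the bytes received during the same transfer; the Arduino side would need a matching parser.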

The front outer shell, with five RGB LEDs across the middle

The head contains two RGB LED eyes with lenses, the Pi Camera, a laser (mouth), a ToF laser distance sensor (left) and a fire sensor (right). This design does not include the thermal camera; there is space above the mouth, but the face would need reprinting to fit it.

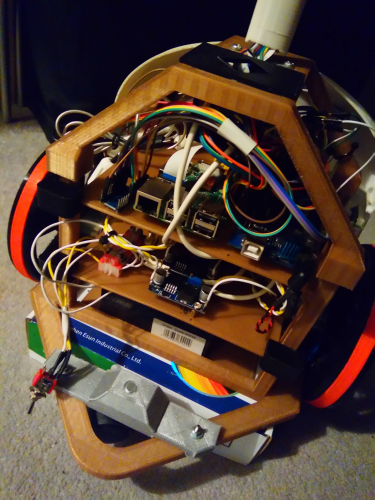

The rear inner view:

Top layer: Arduino, Raspberry Pi and general signal wiring

Middle layer: DC-DC convertors, power wiring and motor controller

Bottom layer: battery, space for the motors, and an area at the front reserved for the colour sensors

At the back is the castor support; above this, inside the shell, will be the PM2.5 sensor.

The basic chassis is a solid bottom with risers and a top that slots on; the shelves are printed separately. The design avoids overhangs beyond 45 degrees, which would need support material on an FDM 3D printer. The top is printed upside-down.

The wheels are modelled on the Audi five-spoke rotor wheel design. The tyres are NinjaFlex SemiFlex in Lava Orange.

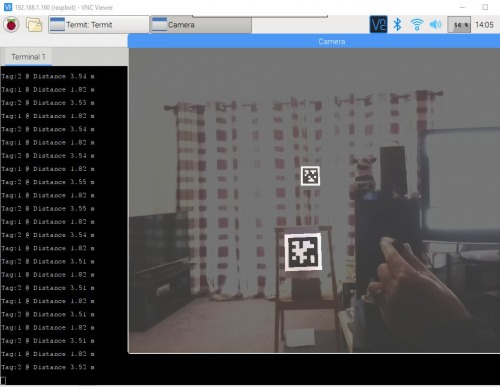

AprilTag identification runs on the Raspberry Pi with the Pi Camera, using OpenCV and Python. Distance, angle, rotation and multi-tag identification allow the robot's position to be confirmed against a known map with the AprilTag positions recorded. The Pi manages 5-7 frames per second with AprilTag identification and image filtering, with a copper heatsink and a 5V fan fitted.
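To show how one sighted tag with a known map position can confirm the robot's position, here is a simplified sketch under a pinhole-camera assumption, where the tag's apparent size in pixels scales inversely with its distance. It is not the author's pipeline: a full 3D pose would normally come from the tag's homography or OpenCV's `cv2.solvePnP`. The focal length, principal point, corner order, known robot heading (e.g. from the gyro) and function names are all assumptions.

```python
import math


def tag_observation(corners, tag_size_m, focal_px, cx):
    """Estimate (forward_m, left_m, roll_deg) of a tag from its 4 image corners.

    Assumes a pinhole camera with focal length `focal_px` (pixels) and principal
    point x-coordinate `cx`, and that the tag faces the camera roughly square-on,
    so its apparent side length scales as 1/depth.
    """
    sides = [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    side_px = sum(sides) / 4
    forward = focal_px * tag_size_m / side_px            # depth along the optical axis
    centre_x = sum(x for x, _ in corners) / 4
    left = -(centre_x - cx) * forward / focal_px          # image x grows to the right
    (x0, y0), (x1, y1) = corners[0], corners[1]
    roll_deg = math.degrees(math.atan2(y1 - y0, x1 - x0))  # tilt of the first edge in the image
    return forward, left, roll_deg


def robot_position(tag_xy, robot_heading_rad, forward, left):
    """Back out the robot's map position from one tag whose map position is known.

    `robot_heading_rad` is counter-clockwise from the map x-axis; the camera is
    assumed to face straight ahead along the robot's forward axis.
    """
    c, s = math.cos(robot_heading_rad), math.sin(robot_heading_rad)
    # Rotate the robot-frame offset (forward, left) into the map frame
    dx = c * forward - s * left
    dy = s * forward + c * left
    return tag_xy[0] - dx, tag_xy[1] - dy
```

For example, a 16 cm tag seen 100 px wide and centred in the image of a camera with a 600 px focal length is about 0.96 m ahead; if that tag is mapped at (5.0, 2.0) and the robot faces along the x-axis, the robot is at about (4.04, 2.0). With several tags in view, the individual estimates could be averaged or fed to a filter.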

The MD25 motor kit was the most expensive item, but I am really impressed with it; I had so much trouble with other motors on previous projects. The controller reports supply voltage and motor current, and the motors have encoders giving 360 counts per wheel revolution.
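The encoder resolution of 360 (assumed here to mean 360 counts per wheel revolution) is enough for dead reckoning between AprilTag fixes using standard differential-drive odometry. A minimal sketch; the wheel diameter and wheel base are hypothetical placeholders that would need measuring on the printed wheels, and reading the counts from the MD25 is left out.

```python
import math

COUNTS_PER_REV = 360      # assumed: encoder counts per wheel revolution
WHEEL_DIAMETER_M = 0.10   # hypothetical: measure the printed wheels
WHEEL_BASE_M = 0.25       # hypothetical: distance between the two tyre centres

METRES_PER_COUNT = math.pi * WHEEL_DIAMETER_M / COUNTS_PER_REV


def update_pose(x, y, theta, d_left_counts, d_right_counts):
    """Differential-drive dead reckoning from the change in each encoder count."""
    d_left = d_left_counts * METRES_PER_COUNT
    d_right = d_right_counts * METRES_PER_COUNT
    d_centre = (d_left + d_right) / 2
    d_theta = (d_right - d_left) / WHEEL_BASE_M
    # Midpoint integration: use the heading halfway through the step
    x += d_centre * math.cos(theta + d_theta / 2)
    y += d_centre * math.sin(theta + d_theta / 2)
    theta = (theta + d_theta + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return x, y, theta
```

Calling this with the count differences since the last read keeps a running pose; equal counts on both wheels move the robot straight ahead, while equal and opposite counts spin it on the spot. Odometry error grows with distance, which is why the tag fixes are still needed.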

I have redesigned the neck to provide better support and avoid the waggle that seemed to occur.

The intention during power-up is to align the head by making a small forward/reverse movement and gauging the optical flow seen by the camera. This is not yet tested; alternatively, an AprilTag in a docking bay could be used, as the tags seem to give accurate angle and rotation readings.
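One common way to turn the planned optical-flow check into an angle, offered as an assumption rather than the author's method, is to locate the focus of expansion (FOE): when the camera translates straight ahead, every flow vector points away from the image point it is moving towards. If the head is aligned with the body, the FOE sits at the image centre, and any horizontal offset gives the angle between the camera axis and the direction of travel. Flow vectors could come from OpenCV's `cv2.calcOpticalFlowPyrLK`; the function names and camera parameters below are hypothetical.

```python
import math


def focus_of_expansion(points, flows):
    """Least-squares focus of expansion from flow vectors of a translating camera.

    For pure translation every flow vector (u, v) at pixel (x, y) is parallel to
    (x - x0, y - y0), so (x - x0) * v - (y - y0) * u = 0, which is linear in x0, y0:
        v * x0 - u * y0 = v * x - u * y
    """
    # Accumulate the 2x2 normal equations (A^T A) p = A^T b
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (x, y), (u, v) in zip(points, flows):
        r1, r2, rhs = v, -u, v * x - u * y
        a11 += r1 * r1
        a12 += r1 * r2
        a22 += r2 * r2
        b1 += r1 * rhs
        b2 += r2 * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-9:
        raise ValueError("flow field is degenerate (too few or parallel vectors)")
    x0 = (a22 * b1 - a12 * b2) / det
    y0 = (a11 * b2 - a12 * b1) / det
    return x0, y0


def travel_bearing_deg(x0, cx, focal_px):
    """Angle between the camera axis and the direction of travel (positive = to the right)."""
    return math.degrees(math.atan2(x0 - cx, focal_px))
```

Reversing only flips the sign of every flow vector, which leaves the equations unchanged, so the backward half of the movement gives the same estimate. Any rotation of the body during the move would bias the result, so this assumes a short, straight, slow motion; the head could then be stepped until the bearing is close to zero.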

A docking bay will be needed at some point for recharging and recalibration. I suspect the robot could roll onto a multi-way brush connector mounted below it.

A small front arm or mini wheel may be needed to stop the robot toppling forward. The centre of gravity is just behind the big wheels, but not far enough: the distance needed to decelerate without tipping is quite long.
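The tipping problem can be estimated with a rigid-body model. Under braking the robot pitches forward about the big wheels' ground contact once the inertial moment m*a*h exceeds the restoring moment m*g*d, where h is the height of the centre of gravity and d is its horizontal distance behind the wheel contact. That gives a_max = g*d/h, and the shortest stop from speed v is v^2 / (2*a_max). A sketch with hypothetical dimensions; it ignores wheel inertia and head movement.

```python
G = 9.81  # gravitational acceleration, m/s^2


def max_decel_without_tipping(d_behind_axle_m, cog_height_m):
    """Largest braking deceleration before the robot pitches forward over its drive wheels.

    Tipping starts when the inertial moment m*a*h about the wheel contact point
    exceeds the restoring moment m*g*d, so a_max = g * d / h.
    """
    return G * d_behind_axle_m / cog_height_m


def min_stopping_distance(speed_mps, d_behind_axle_m, cog_height_m):
    """Shortest constant-deceleration stop that stays at the tipping limit: v^2 / (2 * a_max)."""
    a_max = max_decel_without_tipping(d_behind_axle_m, cog_height_m)
    return speed_mps ** 2 / (2 * a_max)
```

With placeholder values of d = 2 cm and h = 30 cm, a_max is about 0.65 m/s^2 and stopping from 0.5 m/s takes about 19 cm. Because the distance scales with h/d, shifting weight rearwards or lowering it helps in direct proportion.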

The original wiring powered the Arduino directly from the 12V battery, and the Arduino's regulator got very hot. I added a second DC-DC convertor so the Arduino runs at 9V, reducing the load on the regulator. The other DC-DC convertor is set to 5V for the Raspberry Pi. I could have run the Arduino from that as well, but I prefer to use the proper Arduino input, which has a polyfuse and the regulator for some protection.

Robots I have made in the past have suffered from noise created by the motors, and it can be difficult to stop this affecting the electronics. I hope the DC-DC convertors will help by separating the device supplies, which can then be fused separately.
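The regulator heating can be estimated: a linear regulator drops the difference between its input and output voltage across itself, so it dissipates roughly (V_in - 5V) x I_load. A minimal sketch, assuming a hypothetical 150 mA load on the Arduino's 5V rail and ignoring the regulator's own quiescent current:

```python
def linear_regulator_loss_w(v_in, current_a, v_out=5.0):
    """Heat dissipated by a linear regulator: the voltage dropped times the current through it."""
    if v_in <= v_out:
        raise ValueError("input must be above the regulated output")
    return (v_in - v_out) * current_a


# Compare feeding the Arduino straight from the battery with feeding it from the 9V buck convertor
for v_in in (12.0, 9.0):
    print(f"{v_in:>4} V in: {linear_regulator_loss_w(v_in, 0.15):.2f} W")
```

At that assumed load the regulator's heat falls from about 1.05 W at 12V to about 0.6 W at 9V. The buck convertor typically handles the 12V to 9V step far more efficiently than a linear regulator would, so less total heat ends up inside the shell.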

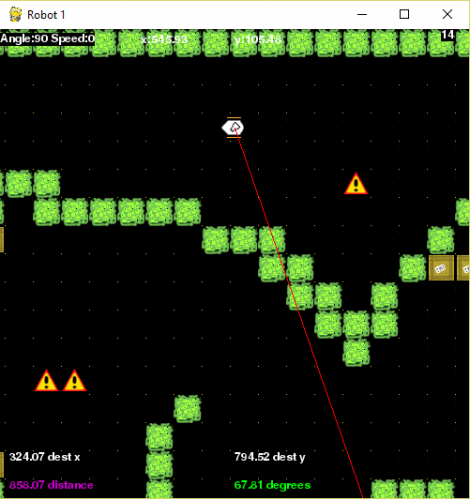

A system for visualisation and mapping has been created in Python with Pygame.

Path finding using A* or similar will be added to calculate the most efficient path.
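Assuming the Pygame map can be reduced to an occupancy grid (for example one cell per floor tile, 0 = free and 1 = blocked), the planned A* search could look like this minimal sketch. The grid layout, 4-connected moves and the `astar` name are assumptions, not the robot's existing code.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = blocked).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    Uses the Manhattan distance, which never overestimates on a 4-connected grid.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back through the parent links
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None
```

For example, on the grid `[[0, 0, 0, 0], [1, 1, 1, 0], [0, 0, 0, 0]]` the path from (0, 0) to (2, 0) has to detour through (1, 3). Newly detected obstacles would simply be marked as 1 and the path re-planned.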

New objects need to be added to the map when detected, and the robot's position synchronised with the map, probably using a Kalman filter.
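A minimal sketch of the Kalman idea, assuming x and y are filtered independently: odometry predicts the position and grows its uncertainty, and an AprilTag fix pulls the estimate back in proportion to how much each source is trusted. `AxisKalman` and all variances are hypothetical. A fuller version would track x, y and heading together (for example an extended Kalman filter), because a heading error couples into both coordinates.

```python
from dataclasses import dataclass


@dataclass
class AxisKalman:
    """1-D Kalman filter for one map coordinate (run one per axis, x and y).

    Prediction uses the odometry displacement; correction uses an absolute
    position derived from a sighted AprilTag. Variances are in m^2.
    """
    estimate: float
    variance: float

    def predict(self, odometry_delta: float, process_var: float) -> None:
        # Dead reckoning moves the estimate and adds uncertainty (wheel slip, etc.)
        self.estimate += odometry_delta
        self.variance += process_var

    def update(self, measured: float, measurement_var: float) -> None:
        # Blend in the tag fix, weighted by the relative confidence of each source
        gain = self.variance / (self.variance + measurement_var)
        self.estimate += gain * (measured - self.estimate)
        self.variance *= 1.0 - gain
```

For example, starting at 0.0 m with variance 0.01, a 1.0 m odometry move with process variance 0.01 followed by a tag fix of 1.2 m with variance 0.02 gives a gain of 0.5, so the estimate lands at 1.1 m with its variance back down to 0.01.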

The location and mapping system

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/internal-environment-monitoring-and-security-patrol-robot