UPDATE: Added a short video of hexed-bot moving around (on the leash) and sort of following the laser.

UPDATE: Added a new video of light-tracking training. Hexed-bot's camera eye follows a laser pointer, and the camera view is displayed on the small LCD.

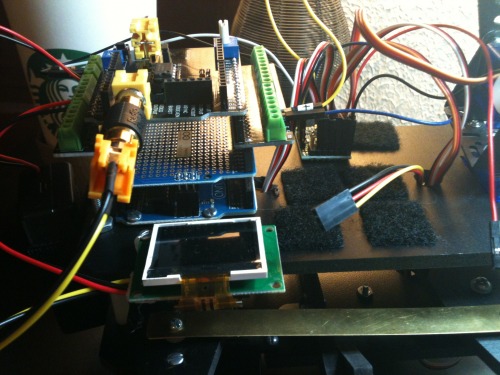

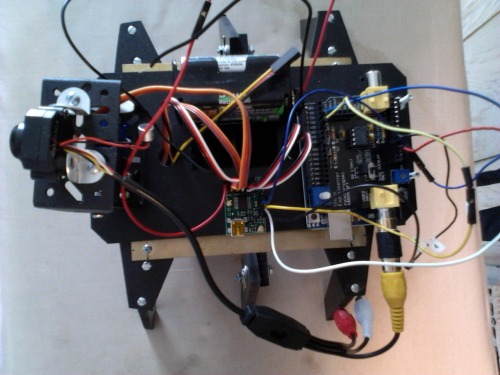
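Following a laser pointer mostly comes down to finding where the bright spot sits in each captured frame. The sketch below is a minimal version of that idea, not the hexed-bot code. It assumes the capture lands in a packed 1-bit frame buffer, as with TVout-style capture: 8 pixels per byte, leftmost pixel in the high bit, and rows of `bytesPerRow` bytes stored back to back. `findBrightSpot`, `SpotCentroid` and `minPixels` are hypothetical names. It averages the coordinates of all lit pixels, so a single noisy pixel has less influence than it would if the code simply picked the first bright one.

```cpp
#include <stdint.h>
#include <stddef.h>

// Hypothetical result type: centroid of the lit pixels, if enough were found.
struct SpotCentroid {
  bool found;
  int x;  // column, 0 .. bytesPerRow * 8 - 1
  int y;  // row, 0 .. height - 1
};

// Assumes a packed 1-bit frame: 8 pixels per byte, leftmost pixel in the
// most significant bit, rows stored back to back (bytesPerRow bytes each).
SpotCentroid findBrightSpot(const uint8_t* frame, int bytesPerRow, int height,
                            int minPixels) {
  long sumX = 0, sumY = 0, count = 0;
  for (int y = 0; y < height; ++y) {
    const uint8_t* row = frame + static_cast<size_t>(y) * bytesPerRow;
    for (int x = 0; x < bytesPerRow * 8; ++x) {
      if (row[x >> 3] & (0x80 >> (x & 7))) {  // this pixel is lit
        sumX += x;
        sumY += y;
        ++count;
      }
    }
  }
  // Too few lit pixels: treat it as "no spot" rather than chase noise.
  if (count < minPixels) return {false, 0, 0};
  return {true, static_cast<int>(sumX / count), static_cast<int>(sumY / count)};
}
```

The horizontal error `spot.x - (bytesPerRow * 8) / 2` could then decide whether the walker should turn left, turn right, or keep going straight.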

UPDATE: Added some photos of the extra shields I stacked on for clearance and easier wiring, plus my little TV from Adafruit. Adding output from the video shield seemed to make the light tracking more stable and reliable, and, well, it just seemed cool. I probably won't add much more to hexed-bot because he's getting a little heavy.

UPDATE: Posted some updated code on GitHub. The GP2D12 sensor and the video shield seem to be playing nicely now. I don't fully understand how the video shield works, but for it to capture video, the Arduino code has to switch the on-board ADC off so the interrupt routines used for capture can run. For now, following the shield designer's example code, I switch the ADC off while capturing video input and switch it back on when reading the distance sensor pin. Is there a better way? The low-level bit flipping is melting my brain.

Capturing the video stream from the camera needs this:

```cpp
ADCSRA &= ~_BV(ADEN);  // disable ADC (the comparator can only use the mux while the ADC is off)
ADCSRB |= _BV(ACME);   // enable the analog comparator multiplexer
ADMUX &= ~_BV(MUX0);   // MUX2..0 = 010 selects A2 as AIN1 (comparator's negative input)
ADMUX |= _BV(MUX1);
ADMUX &= ~_BV(MUX2);
ACSR &= ~_BV(ACIE);    // disable analog comparator interrupts
ACSR &= ~_BV(ACIC);    // disable analog comparator input capture
```

When reading the sensor, I do this, then call a function that sets the bits again as shown above:

```cpp
ADCSRB &= ~_BV(ACME);  // disable the analog comparator multiplexer
ADCSRA |= _BV(ADEN);   // re-enable the ADC
delay(2);              // give the ADC a moment before reading
float val = read_gp2d12_range(gp2d12Pin);
```
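As for the "is there a better way?" question, one option is to wrap each group of bit flips in a named helper. Then every sensor read follows the same pattern (enter ADC mode, read, restore comparator mode), and nothing gets forgotten. This is a minimal sketch that assumes an ATmega328P (Uno), with bit positions taken from its datasheet. The register variables and the `_BV` macro at the top are stand-ins so the logic can be checked on a PC. On the board, delete them and let `<avr/io.h>` (pulled in by the Arduino core) supply the real ones. The helper names are hypothetical. One detail worth knowing: the Arduino core's `analogRead()` rewrites the MUX bits of `ADMUX` to select its own pin. A2 therefore has to be re-selected after every sensor read, and `enterVideoCaptureMode()` does that.

```cpp
#include <stdint.h>

// --- PC-only stand-ins: delete on the Arduino (<avr/io.h> defines these) ---
#define _BV(bit) (1 << (bit))
uint8_t ADCSRA = 0, ADCSRB = 0, ADMUX = 0, ACSR = 0;
const uint8_t ADEN = 7;                     // ADCSRA: ADC enable
const uint8_t ACME = 6;                     // ADCSRB: comparator multiplexer enable
const uint8_t MUX0 = 0, MUX1 = 1, MUX2 = 2; // ADMUX: input select bits
const uint8_t ACIE = 3, ACIC = 2;           // ACSR: comparator interrupt / input capture
// ---------------------------------------------------------------------------

// Hypothetical helper: set up the comparator for video capture, mirroring the
// shield's example code (ADC off, mux routes A2 to the comparator's AIN1).
void enterVideoCaptureMode() {
  ADCSRA &= ~_BV(ADEN);                           // ADC off so ACME takes effect
  ADCSRB |= _BV(ACME);                            // mux feeds the comparator
  ADMUX &= ~(_BV(MUX2) | _BV(MUX1) | _BV(MUX0));  // clear MUX2..0 ...
  ADMUX |= _BV(MUX1);                             // ... then select 010 = A2
  ACSR &= ~(_BV(ACIE) | _BV(ACIC));               // no comparator interrupt / capture
}

// Hypothetical helper: hand the mux back to the ADC so analogRead() works.
void enterAdcMode() {
  ADCSRB &= ~_BV(ACME);  // comparator no longer uses the mux
  ADCSRA |= _BV(ADEN);   // ADC back on
}
```

On the Arduino, a sensor read would then look like `enterAdcMode(); delay(2); float val = read_gp2d12_range(gp2d12Pin); enterVideoCaptureMode();`.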

UPDATE: Added some video of hexed-bot's vision/light-tracking training.

UPDATE: Keeping my driver code for Arduino on GitHub: https://github.com/swoodrum/hexed-bot

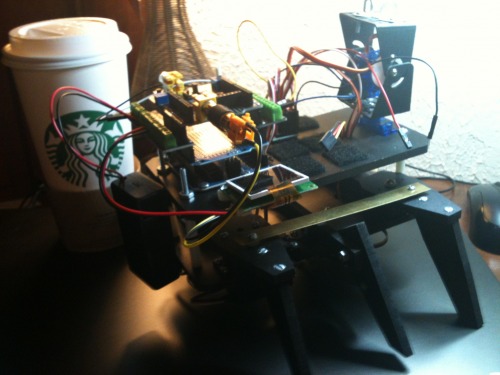

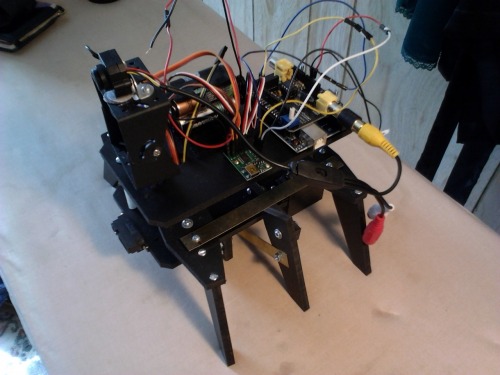

Work in progress. HexedBot can walk forward and backward, and I'm still working on the driver program. Hopefully I'll get the video processing working so that he can follow an object or a bright light, such as a laser pointer. I'll use the Sharp sensor for collision avoidance. I followed the plans in the Robot Builder's Bonanza book almost to the letter, and added a second deck to hold the Arduino Uno board, the Pololu servo controller and the batteries. The construction material is 6 mm Sintra PVC plastic from Solarbotics. There's still a lot of work to do, but I wanted to share my progress :)
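For collision avoidance, note that the GP2D12's output voltage falls roughly in inverse proportion to distance over its rated range (about 10 to 80 cm), so the raw 10-bit reading has to be linearized before it can be compared against a distance. The sketch below is a minimal version that assumes a 5 V reference and the widely circulated empirical fit `cm = 6787 / (adc - 3) - 4` for this sensor. Treat the constants as a starting point and calibrate them against your own unit. `gp2d12AdcToCm`, `chooseGait` and the 25 cm threshold used below are hypothetical, not taken from the hexed-bot code.

```cpp
// Hypothetical helper: convert a raw 10-bit ADC reading (0..1023) from a
// GP2D12 into an approximate distance in centimetres.
// Assumption: empirical fit cm = 6787 / (adc - 3) - 4; calibrate for your sensor.
float gp2d12AdcToCm(int adc) {
  if (adc <= 3) return 80.0f;  // essentially no return signal: treat as far away
  float cm = 6787.0f / (adc - 3) - 4.0f;
  if (cm < 10.0f) cm = 10.0f;  // unreliable below ~10 cm: report "very close"
  if (cm > 80.0f) cm = 80.0f;  // beyond the rated range: report "far"
  return cm;
}

enum class Gait { Forward, TurnAway };

// Hypothetical policy: keep walking unless something is closer than thresholdCm.
Gait chooseGait(float cm, float thresholdCm) {
  return cm < thresholdCm ? Gait::TurnAway : Gait::Forward;
}
```

For example, `chooseGait(gp2d12AdcToCm(analogRead(gp2d12Pin)), 25.0f)` would return `Gait::TurnAway` once an obstacle comes within roughly 25 cm. Clamping to the rated range keeps very close objects from reading as "far".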

Here are a couple more pictures:

UPDATE: Added a video of HexedBot's obstacle-avoidance training.

Crawls around like a bug

- Actuators / output devices: Parallax standard servos (3)

- Control method: autonomous

- CPU: Arduino, Pololu Micro Maestro Servo Controller, Nootropic video board

- Operating system: Ubuntu (Linux)

- Power source: four AA batteries, two 9 V batteries

- Programming language: C++, Pololu Maestro script language

- Sensors / input devices: video camera, Sharp IR GP2D12

- Target environment: indoor on smooth surfaces
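The three servos hang off the Pololu Micro Maestro. If the Arduino drives the Maestro over its serial line, each servo move is a short "Set Target" command. The sketch below builds one in the Maestro's compact protocol: command byte `0x84`, the channel, then the target in quarter-microseconds split into two 7-bit bytes, low bits first. How hexed-bot actually talks to the Maestro isn't stated here, so this is an assumption, and `maestroSetTarget` is a hypothetical name.

```cpp
#include <stdint.h>

// Hypothetical helper: fill `out` with a Pololu Maestro compact-protocol
// "Set Target" command. The target is sent in quarter-microseconds, split
// into two 7-bit data bytes (low bits first). Channel must be below 128.
void maestroSetTarget(uint8_t channel, uint16_t pulseUs, uint8_t out[4]) {
  uint16_t target = pulseUs * 4;  // e.g. 1500 us -> 6000 quarter-us
  out[0] = 0x84;                  // Set Target command byte
  out[1] = channel;               // servo channel on the Maestro
  out[2] = target & 0x7F;         // low 7 bits of the target
  out[3] = (target >> 7) & 0x7F;  // high 7 bits of the target
}
```

On the Arduino, `uint8_t cmd[4]; maestroSetTarget(0, 1500, cmd); Serial.write(cmd, 4);` would centre the servo on channel 0, assuming the Maestro is set up to accept serial commands at the same baud rate.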

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/hexedbot