BEN: Bright Enough to Navigate

This robot will be a continuation of, and an improvement on, my first robot, EDWARD (https://www.robotshop.com/letsmakerobots/node/36413)

Hardware:

- Raspberry Pi as the main CPU, running the multi-threaded programs that command the Arduinos x1

- breadboarded Arduino Uno for hardware control x2 or x3

- continuous rotation servos for locomotion x3

- omnidirectional wheels for locomotion x3

- servos for arm (x3?)

- ultrasonic sensors for mapping x9

- webcam for the viewer's pleasure x1
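
Three omni wheels driven by three servos are usually arranged as a "kiwi drive", where each wheel's speed is the chassis velocity projected onto that wheel's rolling direction plus a rotation term. The sketch below shows that mixing step under assumed geometry: the wheel angles (90, 210 and 330 degrees), the function name `kiwiWheelSpeeds` and the [-1, 1] output range are all hypothetical, not BEN's actual layout.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// Sketch of kiwi-drive mixing for three omni wheels spaced 120 degrees apart.
// ASSUMED geometry (hypothetical): wheels at 90, 210 and 330 degrees around the
// chassis centre, each rolling tangentially (perpendicular to its radius).
constexpr double kPi = 3.14159265358979323846;
constexpr std::array<double, 3> kWheelAngleDeg = {90.0, 210.0, 330.0};

// vx, vy: desired chassis velocity in the robot frame (any consistent unit).
// omega:  desired rotation rate; radius: distance from centre to each wheel.
// Returns one speed per wheel, scaled so the largest magnitude is at most 1.0.
std::array<double, 3> kiwiWheelSpeeds(double vx, double vy, double omega, double radius) {
    assert(radius >= 0.0);
    std::array<double, 3> speeds{};
    double largest = 0.0;
    for (std::size_t i = 0; i < speeds.size(); ++i) {
        const double theta = kWheelAngleDeg[i] * kPi / 180.0;
        // Project the chassis velocity onto this wheel's rolling direction,
        // then add the contribution from spinning about the centre.
        speeds[i] = -std::sin(theta) * vx + std::cos(theta) * vy + radius * omega;
        largest = std::max(largest, std::fabs(speeds[i]));
    }
    if (largest > 1.0) {
        for (double& s : speeds) s /= largest;  // keep every command within [-1, 1]
    }
    return speeds;
}
```

Each normalized speed would still need mapping onto a servo command on the Arduino, for example with the Servo library's `writeMicroseconds()` around the servo's stop point (often near 1500 µs, but worth calibrating per servo).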

BEN's goal will be to move objects from one zone to another. He will also constantly record his surroundings in a map of 1" squares. I think I will eventually use the A* search algorithm to find routes. BEN will have a remote control (R/C) mode in addition to autonomous operation. However, even in R/C mode he will never run into walls, and he will keep updating his position and map so they are current when he is switched back to autonomous mode. The user can interact with the robot by connecting to the Pi over SSH.
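
As a rough illustration of route finding on a grid of 1" squares, here is a minimal A* sketch. It assumes a hypothetical `Grid` type (true = blocked), 4-way moves that each cost one inch, and a Manhattan-distance heuristic; the real map format and movement model may well differ (for example, diagonal moves would need a different heuristic).

```cpp
#include <cassert>
#include <cstdlib>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical occupancy grid of 1-inch squares: grid[row][col] == true means blocked.
using Grid = std::vector<std::vector<bool>>;
struct Cell { int row; int col; };

// A* search on a 4-connected grid where every step costs 1 (one inch).
// Manhattan distance is an admissible, consistent heuristic for this move set.
// Returns the path from start to goal (both included), or an empty vector.
std::vector<Cell> aStar(const Grid& grid, Cell start, Cell goal) {
    const int rows = static_cast<int>(grid.size());
    const int cols = rows > 0 ? static_cast<int>(grid[0].size()) : 0;
    for (const auto& row : grid) assert(static_cast<int>(row.size()) == cols);  // must be rectangular
    auto inside = [&](int r, int c) { return r >= 0 && r < rows && c >= 0 && c < cols; };
    if (!inside(start.row, start.col) || !inside(goal.row, goal.col) ||
        grid[start.row][start.col] || grid[goal.row][goal.col]) {
        return {};
    }
    auto index = [cols](int r, int c) { return r * cols + c; };
    auto heuristic = [&goal](int r, int c) { return std::abs(r - goal.row) + std::abs(c - goal.col); };

    std::vector<int> cost(rows * cols, -1);    // best known steps from start, -1 = not reached
    std::vector<int> parent(rows * cols, -1);  // previous cell on the best path
    using Entry = std::pair<int, int>;         // (f = cost + heuristic, cell index)
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> frontier;  // min-heap on f
    const int startIndex = index(start.row, start.col);
    cost[startIndex] = 0;
    frontier.push({heuristic(start.row, start.col), startIndex});

    const int dr[] = {1, -1, 0, 0};
    const int dc[] = {0, 0, 1, -1};
    while (!frontier.empty()) {
        const auto [f, current] = frontier.top();
        frontier.pop();
        const int r = current / cols;
        const int c = current % cols;
        if (f > cost[current] + heuristic(r, c)) continue;  // outdated queue entry
        if (r == goal.row && c == goal.col) {
            std::vector<Cell> path;  // walk parents back to the start, then reverse
            for (int i = current; i != -1; i = parent[i]) path.push_back({i / cols, i % cols});
            return std::vector<Cell>(path.rbegin(), path.rend());
        }
        for (int k = 0; k < 4; ++k) {
            const int nr = r + dr[k];
            const int nc = c + dc[k];
            if (!inside(nr, nc) || grid[nr][nc]) continue;
            const int next = index(nr, nc);
            const int newCost = cost[current] + 1;
            if (cost[next] == -1 || newCost < cost[next]) {
                cost[next] = newCost;
                parent[next] = current;
                frontier.push({newCost + heuristic(nr, nc), next});
            }
        }
    }
    return {};  // goal unreachable
}
```

A real robot would also need to inflate obstacles by the robot's radius before searching, since a path of single 1" cells ignores the chassis size.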

Software can be divided into three parts: the interface that the user interacts with, the main decision-making programs on the Pi, and the hardware-commanding programs on the Arduinos.

There will be two Arduino programs, one on each microcontroller. I have already written the first one: it receives the position of the arm from the Pi, takes readings from every unblocked sensor, and returns the results over serial. The second program takes x/y/z coordinates for the arm and movement directions for the wheels and carries them out. It may be split across two Arduinos, one handling the arm and the other the wheels.
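
The post does not specify the serial message format, so the following is only a sketch of one possible line-based protocol as seen from the Pi side. The `ARM x y z` command, the comma-separated reply with `-` for a blocked sensor, and the helper names `formatArmCommand` and `parseSensorReply` are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// HYPOTHETICAL line-based protocol between the Pi and the sensor Arduino:
//   Pi -> Arduino: "ARM <x> <y> <z>\n"  (arm position, so blocked sensors can be skipped)
//   Arduino -> Pi: one comma-separated field per sensor, distance in cm or "-" if blocked,
//                  e.g. "120,-,87" for three sensors.

std::string formatArmCommand(int x, int y, int z) {
    std::ostringstream out;
    out << "ARM " << x << ' ' << y << ' ' << z << '\n';
    return out.str();
}

// Returns one entry per sensor: a distance, or std::nullopt if blocked or unparseable.
// Returns an empty vector if the field count is wrong (e.g. a truncated line).
std::vector<std::optional<int>> parseSensorReply(const std::string& line, std::size_t sensorCount) {
    assert(sensorCount > 0);
    std::vector<std::optional<int>> readings;
    std::istringstream in(line);
    std::string field;
    while (std::getline(in, field, ',')) {
        if (!field.empty() && field.back() == '\r') field.pop_back();  // Serial.println() ends with "\r\n"
        try {
            std::size_t used = 0;
            const int value = std::stoi(field, &used);
            if (used == field.size() && value >= 0) readings.emplace_back(value);
            else readings.emplace_back(std::nullopt);
        } catch (const std::exception&) {  // "-" (blocked) or garbage
            readings.emplace_back(std::nullopt);
        }
    }
    if (readings.size() != sensorCount) return {};
    return readings;
}
```

With nine ultrasonic sensors, the Pi would call `parseSensorReply(line, 9)` on each line it reads and simply discard empty results as corrupted.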

The interface will combine several things, probably through a mix of bash scripts, Python scripts and Processing programs. It will display the video from the webcam and a graphical map of the robot's known surroundings. It will also have controls for R/C mode and give the user an easy way to edit the robot's list of goals.

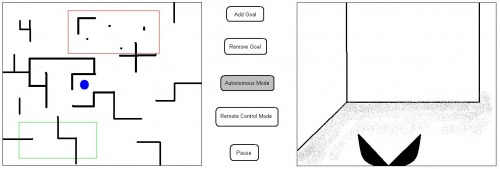

This is a mockup I made of what the interface may look like. On the right is the live video feed from the robot, and on the left is a map of what it knows. The blue dot is the robot, and it is trying to move objects from the red zone to the green zone. There are a few option buttons in the middle.

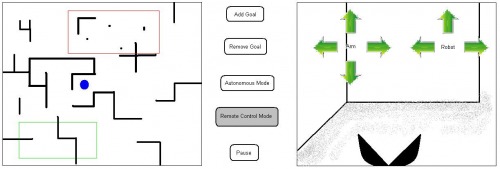

Switching to R/C mode will overlay driving controls on the robot's video feed.
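
Even in R/C mode BEN should refuse to drive into walls. One simple way to do that is to check the ultrasonic sensors facing the commanded direction before passing the move on. This sketch assumes the nine sensors are spaced evenly every 40 degrees with sensor 0 facing forward, and that a missing reading (for example, blocked by the arm) should be treated as unsafe; the layout and the names `sensorFacing` and `rcMoveAllowed` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <optional>

// ASSUMED layout: 9 ultrasonic sensors spaced evenly (every 40 degrees),
// sensor 0 facing the robot's forward (0 degree) direction.
constexpr int kSensorCount = 9;
constexpr double kSensorSpacingDeg = 360.0 / kSensorCount;

// Index of the sensor whose heading is closest to a travel direction in degrees.
int sensorFacing(double directionDeg) {
    double wrapped = std::fmod(directionDeg, 360.0);
    if (wrapped < 0.0) wrapped += 360.0;
    return static_cast<int>(std::lround(wrapped / kSensorSpacingDeg)) % kSensorCount;
}

// True if an R/C move in this direction is allowed. The facing sensor and its two
// neighbours are checked because the chassis is wider than a single beam; a missing
// reading is treated conservatively as "not safe".
bool rcMoveAllowed(double directionDeg,
                   const std::array<std::optional<int>, kSensorCount>& distancesCm,
                   int stopCm) {
    assert(stopCm > 0);
    const int centre = sensorFacing(directionDeg);
    for (int offset = -1; offset <= 1; ++offset) {
        const int i = (centre + offset + kSensorCount) % kSensorCount;
        const auto& reading = distancesCm[i];
        if (!reading || *reading < stopCm) return false;  // unknown or too close: refuse
    }
    return true;
}
```

Checking live sensor readings keeps the guard independent of the map, so it still works if the map is briefly out of date while the user is driving.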

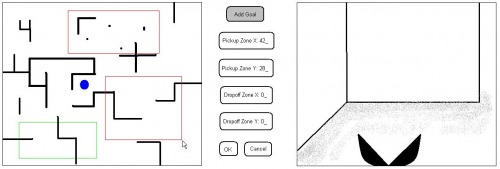

This is what you would see after pressing the add goal icon. A new pickup area is being drawn on the map with the mouse, and its exact x/y coordinates can be adjusted by typing into the fields.

The main processing will probably all be in C++. It will be composed of many smaller programs, since I am taking advantage of the Pi's ability to run several threads at once. One program will update the map with readings from the sensors. Another will identify all movable objects for the robot to pick up. Another will find the best path to the robot's goal. Another will decide the robot's next immediate movement. That is by no means a complete list of the different programs, and I will work on expanding it.
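
Whether these end up as separate programs or as threads in one process, they all need to share the map safely. The sketch below takes the threads-in-one-process route (an assumption, not necessarily the final design): a hypothetical `SharedMap` class guards the 1" grid with a `std::mutex`, a mapper thread marks the cell each ultrasonic echo lands in, and a placeholder planner thread polls the map while mapping runs. The pose convention (x along columns, y along rows, in inches) is also assumed.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cmath>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical shared occupancy map of 1-inch cells, guarded by a mutex so several
// worker threads (mapper, planner, ...) can use it at the same time.
class SharedMap {
public:
    SharedMap(int rows, int cols) : rows_(rows), cols_(cols), blocked_(rows * cols, false) {}

    // Mark the cell hit by an ultrasonic echo. Robot position in inches (x = column,
    // y = row), heading and sensor bearing in radians, echo distance in inches.
    void markEcho(double robotX, double robotY, double headingRad, double bearingRad, double distanceIn) {
        const double angle = headingRad + bearingRad;
        const int col = static_cast<int>(std::floor(robotX + distanceIn * std::cos(angle)));
        const int row = static_cast<int>(std::floor(robotY + distanceIn * std::sin(angle)));
        std::lock_guard<std::mutex> lock(mutex_);
        if (row >= 0 && row < rows_ && col >= 0 && col < cols_) blocked_[row * cols_ + col] = true;
    }

    bool isBlocked(int row, int col) const {
        assert(row >= 0 && row < rows_ && col >= 0 && col < cols_);
        std::lock_guard<std::mutex> lock(mutex_);
        return blocked_[row * cols_ + col];
    }

    int blockedCount() const {
        std::lock_guard<std::mutex> lock(mutex_);
        int count = 0;
        for (bool b : blocked_) count += b ? 1 : 0;
        return count;
    }

private:
    int rows_, cols_;
    mutable std::mutex mutex_;
    std::vector<bool> blocked_;
};

// Runs a mapper thread and a placeholder planner thread side by side. Returns how
// many blocked cells the planner saw on its last pass (taken after mapping finished).
int runMapperAndPlanner(SharedMap& map, const std::vector<double>& bearingsRad, double distanceIn) {
    std::atomic<bool> mappingDone{false};
    std::thread mapper([&] {
        for (double bearing : bearingsRad) map.markEcho(10.0, 10.0, 0.0, bearing, distanceIn);
        mappingDone = true;
    });
    int seenByPlanner = 0;
    std::thread planner([&] {
        // A real planner would re-run path finding on each pass; this one only polls.
        while (true) {
            const bool finished = mappingDone.load();  // read the flag before sampling
            seenByPlanner = map.blockedCount();
            if (finished) break;
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    });
    mapper.join();
    planner.join();
    return seenByPlanner;
}
```

Keeping each lock short (one cell update or one read) lets the mapper and planner interleave freely. A planner that needs a consistent view for a whole A* run would instead copy the grid under the lock and search the copy.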

Moves objects from one zone to another while mapping its environment with ultrasound

- Actuators / output devices: 3 servos driving omnidirectional wheels, a 3DOF arm

- Control method: optional R/C via Wi-Fi

- CPU: Arduino Uno, Raspberry Pi

- Operating system: Raspbian Wheezy

- Power source: 9V batteries?

- Programming language: C++, Python, bash

- Sensors / input devices: Ultrasonic sensors

- Target environment: Indoors - hard floor

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/ben-bright-enough-to-navigate