Background & Motivation

My goal was to develop a reusable OS that could support my bots or others, anywhere from small or medium bots like my Anna/Ava to bots reaching the complexity of an InMoov that can also move around. Working on my prior bots taught me many lessons, and there were many things about the OS and the backbone comms that I wanted to improve.

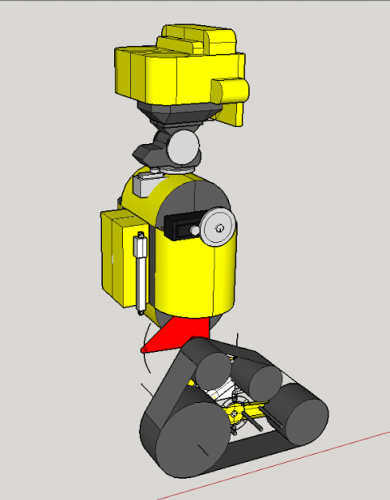

My next bot will be around 30 inches tall, with many servos and 4 linear actuators. It also needs to support a depth camera, an indoor localization system, and a FLIR camera. This OS will be the brain for that bot, which I am still designing for 3D printing.

Goals of the OS

- I wanted a simple way to write code for Arduinos, SBCs, and PCs, so that they could all work together and coordinate.

- I wanted a "spinal cord" backbone with much higher throughput and reliability.

- I wanted it ALL to run on the bot, with no external web server. For the on-bot setup, I chose a Latte Panda.

- I wanted multiple Windows Forms displays for the face, sonar, emotions, etc., which I could open and close as needed.

- I wanted the behaviors to be able to grow into sophisticated results while the system keeps its basic simplicity.

- I wanted the basic memory/DNA of the robot to be generic and something that could be iterated over.

- I wanted to be able to set goals and have the robot converge upon those goals without having to write code for timing.

- I wanted a core design to which I could later add learning algos, pattern recognition, genetic algos, and other features. I wanted the brain to be able to mutate all its underlying memory values if necessary and try out combinations.

- I wanted simple ways to control the bot or orchestrate complex actions. One of these is voice or written text. I wanted to communicate on my terms and "talk" to the bot, its services, its very DNA...without having to write code.

Baseline Hardware Setup

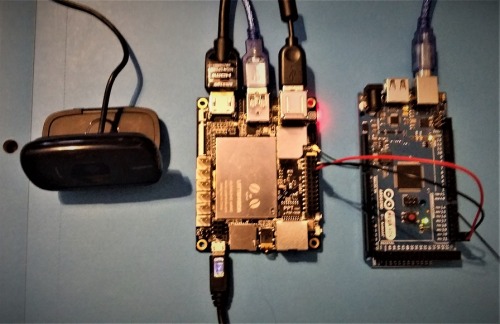

The prototype "brain" is running on a 4G Latte Panda SBC running Windows 10 and SQL Server 2014 Express. The Panda also has an Arduino Leonardo on board. The two sides of the board communicate over serial, using a library from Latte Panda on the Windows side and the Standard Firmata library on the Arduino side. The I2C pins on the Panda's Leonardo are connected to an Arduino Mega, and the brain communicates with the Mega and any additional Arduinos through this I2C connection. A Bluetooth dongle is connected to the Panda for the keyboard/mouse, and a Logitech cam/mic is connected for video as well as audio listening. Right now I am using an HDMI TV for the screen, but I will soon add a Panda screen and touch overlay that does not use the HDMI. The final bot could support two screens.

The intention is to retrofit this brain into my Anna and Ava robots, as well as put it into my upcoming, as yet unnamed, larger bot. The larger bot will use an 8G Latte Panda Alpha. I'll also be adding a servo controller, a motor controller, and a sensor set to this reference hardware setup. Once again...12 sonars, and a whole lot more!

Progress to Date

- Developed a set of standards for communicating commands and data to/from a Panda and multiple arduinos with multiple services running on each platform.

- Developed the baseline Windows-based brain.

- Developed an animated face (eyes, mouth, etc.). This uses a Windows Forms app that hosts a WPF user control.

- Got the bot to listen (through the cam's mic) and do speech-to-text. The Microsoft speech-to-text engine needs more training.

- Got the bot to speak using the speech engine.

- Got the animated face to coordinate lip movements with phonemes, using visemes and events from the speech engine.

- Developed a core set of standards and services for managing communication, memories, database access, and sensors/actuators.

- Developed a lightweight set of verbal services to interpret speech, delegate actions, and generate responses.

- Developed standards for verbally enabling services so they can talk/listen to people or each other. Each service registers its input and output "patterns" on startup and is then called whenever one of its input patterns is matched. The verbal service takes care of all the language details and calls each service with a set of name/value pairs that correspond to the arguments the service needs to function. The verbal service also handles generating varied verbal output responses from these services...so that the bot can say the same thing in multiple ways.

- Developed and tested a scripting language for orchestrating actions...called "Avascript".

- Developed a database to support the brain's persistent data needs. The baseline version has the following tables: Service, MemoryItem, Word, Script, WordAssoc, and Quantifier.

- Built a baseline "difference engine" into the memory engine...more on that later.

- Added services for handling basic human pleasantries like greetings, goodbyes, addition, etc. I plan to port Anna/Ava's skills over to this brain...eventually.

- At this point, she can listen, is polite, and can follow a lot of basic instructions and give responses.
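The pattern registration described in the list above (each service registers input patterns on startup and is called with name/value pairs when one of them matches) can be sketched roughly as follows. This is a minimal Python illustration, not the brain's actual code: `VerbalService`, `register`, `handle`, `vary`, and the `{name}` placeholder syntax are all hypothetical, and real language handling would need far more than a regular expression.

```python
import random
import re
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical handler type: receives the name/value pairs pulled from the utterance.
Handler = Callable[[Dict[str, str]], str]


class VerbalService:
    """Hypothetical dispatcher: services register input patterns such as
    "set {item} to {value}" and are called with name/value pairs on a match."""

    def __init__(self) -> None:
        self._patterns: List[Tuple[re.Pattern, Handler]] = []

    def register(self, pattern: str, handler: Handler) -> None:
        # re.split with a capturing group alternates literal text and placeholder names.
        parts = re.split(r"\{(\w+)\}", pattern)
        regex = "".join(
            re.escape(part) if i % 2 == 0 else f"(?P<{part}>.+?)"
            for i, part in enumerate(parts)
        )
        self._patterns.append((re.compile(regex, re.IGNORECASE), handler))

    def handle(self, utterance: str) -> Optional[str]:
        # Drop trailing punctuation so "Set heading to south." still matches.
        text = utterance.strip().rstrip(".!?")
        for regex, handler in self._patterns:
            match = regex.fullmatch(text)
            if match:
                return handler(match.groupdict())
        return None  # no registered service understood the utterance


def vary(templates: List[str], **values: str) -> str:
    """Pick one of several phrasings so the bot can say the same thing in different ways."""
    return random.choice(templates).format(**values)


if __name__ == "__main__":
    verbal = VerbalService()
    verbal.register(
        "set {item} to {value}",
        lambda args: vary(["Setting {item} to {value}.", "OK, {item} is now {value}."], **args),
    )
    print(verbal.handle("Set heading to south."))  # one of the two phrasings
```

Keeping the service's handler free of any parsing means the same service can be reached by several patterns, and the choice of reply phrasing stays in one place.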

Backbone System

It is critical for any bot with multiple processors to have a reliable way to communicate from one platform to another. That is why I started on this part first, as my prior bot had problems here. Right now I am using serial/I2C to deliver frequent messages between the Panda, Leonardo, and Mega. This is where I expect the highest throughput to be required. I will also likely add Bluetooth and HTTP delivery, just at lower frequencies.
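As one illustration of what "reliable" can mean on a serial/I2C link, the sketch below wraps each message in a small frame with a start byte, a length byte, and an XOR checksum, so a receiver can reject truncated or corrupted messages. The frame layout, the `0x7E` marker, and the function names are assumptions for illustration only, not the backbone's actual format. The 28-byte payload cap simply keeps a whole frame inside the 32-byte buffer of the Arduino Wire library on AVR boards.

```python
from typing import Optional

START_BYTE = 0x7E  # hypothetical start-of-frame marker
MAX_PAYLOAD = 28   # 1 start + 1 length + 28 payload + 1 checksum = 31 bytes


def xor_checksum(data: bytes) -> int:
    """XOR of all bytes: cheap enough for an 8-bit microcontroller."""
    result = 0
    for byte in data:
        result ^= byte
    return result


def encode_frame(payload: bytes) -> bytes:
    """Wrap a payload as [START][LEN][payload...][CHECKSUM]."""
    if len(payload) > MAX_PAYLOAD:
        raise ValueError(f"payload of {len(payload)} bytes exceeds {MAX_PAYLOAD}")
    body = bytes([len(payload)]) + payload
    return bytes([START_BYTE]) + body + bytes([xor_checksum(body)])


def decode_frame(frame: bytes) -> Optional[bytes]:
    """Return the payload, or None if the frame is malformed or corrupted."""
    if len(frame) < 3 or frame[0] != START_BYTE:
        return None
    if len(frame) != frame[1] + 3:  # length byte must agree with what arrived
        return None
    body = frame[1:-1]
    if xor_checksum(body) != frame[-1]:
        return None
    return body[1:]
```

A CRC would catch more error patterns than a plain XOR; XOR is used here only to keep the sketch short and easy to mirror on the Arduino side.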

Services System

The brain is made up of services running on various hardware platforms. Services communicate by sending commands or by asking a script to execute...which gets translated into commands. Every command is like mail with a zip code that allows it to get where it needs to go. Each service on the Panda implements an interface; each Arduino simply has to conform to some basic coding standards to send and receive messages. Each service can be called at a specified frequency and will receive notifications of various events, such as when a memory item has changed. Services can perform synchronous and asynchronous actions, depending on the need, and can have their own verbal patterns that they respond to in verbal or non-verbal ways.
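The "mail with a zip code" idea can be sketched as a command that carries its own destination (platform and service) plus a router that either delivers it to a local service or forwards it over a link to another board. `Command`, `Router`, and the numeric IDs are hypothetical, and the link functions stand in for whatever serial/I2C transport actually carries the bytes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Command:
    """A hypothetical command envelope: the "zip code" is (platform, service)."""
    platform: int               # which board, e.g. 0 = Panda, 1 = Mega (assumed IDs)
    service: int                # which service on that board
    action: int                 # which action or memory item the service should apply
    args: Tuple[int, ...] = ()  # integer arguments, so Arduinos never see strings


Deliver = Callable[[Command], None]


class Router:
    """Delivers commands to local services, or forwards them over a link to another board."""

    def __init__(self, local_platform: int) -> None:
        self.local_platform = local_platform
        self._services: Dict[int, Deliver] = {}
        self._links: Dict[int, Deliver] = {}

    def register_service(self, service_id: int, handler: Deliver) -> None:
        self._services[service_id] = handler

    def register_link(self, platform: int, send: Deliver) -> None:
        # `send` stands in for a serial/I2C/Bluetooth transport to that platform.
        self._links[platform] = send

    def route(self, cmd: Command) -> None:
        if cmd.platform == self.local_platform:
            target = self._services.get(cmd.service)
        else:
            target = self._links.get(cmd.platform)
        if target is None:
            raise LookupError(f"no route for {cmd}")
        target(cmd)
```

The sender never needs to know whether a service lives on the Panda or on an Arduino; only the router's link table does.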

Panda Memory Engine - It's Like DNA That You Can Talk To

The bot's primary memory is made up of many "MemoryItems". Each memory item represents a piece of state information that could change at runtime. The system has metadata about each memory that tells it everything it needs to know to handle its behavior, goal setting, and verbal functions, and to route notifications on the Panda and on one or more Arduinos. Each memory's metadata is defaulted on startup from a db table.

Each memory item can have various English "names" that it corresponds to, as well as valid values, and each of those values can have one or more names of its own. In the end, the instruction "Set heading to south" can be translated into bytes sent over I2C to the right Arduino and the right service, with the proper parameters.
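To show how "Set heading to south" might turn into bytes, here is a minimal sketch of a name-indexed memory item with named values. The field names, the IDs (platform 1, service 3, item 7), the compass values, and the 5-byte packing (three ID bytes plus a signed 16-bit little-endian value via Python's `struct`) are all assumptions; the real metadata comes from the MemoryItem table, and the real wire format may differ.

```python
import re
import struct
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class MemoryItem:
    """Hypothetical subset of the metadata a MemoryItem row might carry."""
    item_id: int
    platform: int                                              # which board owns the memory
    service: int                                               # which service on that board
    names: List[str]                                           # English names/aliases
    value_names: Dict[str, int] = field(default_factory=dict)  # e.g. "south" -> 180


class MemoryEngine:
    def __init__(self, items: Iterable[MemoryItem]) -> None:
        # Index every alias so any of the item's names resolves to it.
        self._by_name: Dict[str, MemoryItem] = {}
        for item in items:
            for name in item.names:
                self._by_name[name.lower()] = item

    def translate(self, sentence: str) -> bytes:
        """Turn "Set <name> to <value>" into platform, service, ID bytes plus a 16-bit value."""
        match = re.fullmatch(r"set (.+?) to (.+?)\.?", sentence.strip(), re.IGNORECASE)
        if match is None:
            raise ValueError(f"not understood: {sentence!r}")
        item = self._by_name[match.group(1).lower()]
        word = match.group(2).lower()
        # Use a named value if there is one, otherwise expect a plain number.
        value = item.value_names[word] if word in item.value_names else int(word)
        # '<BBBh': little-endian, three unsigned bytes and one signed 16-bit value.
        return struct.pack("<BBBh", item.platform, item.service, item.item_id, value)


if __name__ == "__main__":
    heading = MemoryItem(
        item_id=7, platform=1, service=3, names=["heading", "direction"],
        value_names={"north": 0, "east": 90, "south": 180, "west": 270},
    )
    engine = MemoryEngine([heading])
    print(list(engine.translate("Set heading to south")))  # [1, 3, 7, 180, 0]
```

Because both the item and its values carry names, "Set direction to south." and "Set heading to 180" produce the same bytes.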

Arduino Memory System - Micro-Controller Friendly

While it is easy to have lots of memories (with multiple names) on a Windows machine like the Panda, it's not practical on an Arduino. What is needed is a way to shuffle memories back and forth between Windows and the Arduinos without bogging the Arduinos down with strings or bloated data. My solution was to give each MemoryItem on the Windows side a set of identifiers that give it meaning on the Arduino side, most notably an ID. The memory system on the Arduino side can hold only the memories it cares about, in an array, while the Windows side can hold memories from all platforms in a hashtable indexed by ID, name alias, etc...and use whatever lookup method is desired. Because the Arduino side uses integer IDs and array-based storage, items are looked up by integer index into arrays...so a constants file in the Arduino project is desirable to keep everything readable, for example: LEFT_MOTOR_SPEED = 1, RIGHT_MOTOR_SPEED = 2, etc.
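The split between the two sides can be modeled as below: the board side is just an integer array indexed by memory ID, while the host side keeps every memory in dictionaries keyed by (platform, ID) and by alias, and sends only integers across. The class names and the choice to leave index 0 unused are hypothetical; `LEFT_MOTOR_SPEED` and `RIGHT_MOTOR_SPEED` mirror the constants example above. On an actual Arduino, the board side would be a C/C++ array rather than Python.

```python
from typing import Dict, List, Tuple

# Mirrors the Arduino-side constants file from the text.
LEFT_MOTOR_SPEED = 1
RIGHT_MOTOR_SPEED = 2
MEMORY_COUNT = 3  # assumption: index 0 is left unused so IDs double as array indexes


class BoardMemory:
    """Models the Arduino side: only this board's memories, in an int array indexed by ID."""

    def __init__(self, count: int) -> None:
        self.values: List[int] = [0] * count

    def apply(self, item_id: int, value: int) -> None:
        self.values[item_id] = value  # direct integer index, no strings involved


class HostMemory:
    """Models the Windows side: memories from all boards, found by (platform, ID) or alias."""

    def __init__(self) -> None:
        self.values: Dict[Tuple[int, int], int] = {}
        self._aliases: Dict[str, Tuple[int, int]] = {}

    def add(self, platform: int, item_id: int, *aliases: str) -> None:
        self.values[(platform, item_id)] = 0
        for alias in aliases:
            self._aliases[alias.lower()] = (platform, item_id)

    def set_value(self, alias: str, value: int) -> Tuple[int, int, int]:
        """Update by name; return the all-integer message to send to the board."""
        key = self._aliases[alias.lower()]
        self.values[key] = value
        platform, item_id = key
        return platform, item_id, value


if __name__ == "__main__":
    host = HostMemory()
    host.add(1, LEFT_MOTOR_SPEED, "left motor speed", "left speed")
    host.add(1, RIGHT_MOTOR_SPEED, "right motor speed", "right speed")
    mega = BoardMemory(MEMORY_COUNT)
    platform, item_id, value = host.set_value("Left Speed", 200)
    mega.apply(item_id, value)
    print(mega.values)  # [0, 200, 0]
```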

"Avascript" Scripting Engine - also known as English.

Avascript is natural-language English, but it could be any language in the future by adding translation and alternate grammars. With Avascript, commands are simply written or spoken as words, sentences, or paragraphs, and given names. You can write a script named "Wake Up" which might say "Set alertness to maximum. 2nd Instruction. 3rd Instruction, etc." or one named "Blink" with the script "Set Pin 13 to on for 1000". It's that simple. Every service, memory item, and script can have multiple aliases, so you can say things in multiple ways: "Sleep" and "Close your eyes" could resolve to the same thing. Scripts can also call other scripts.
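A rough model of how named scripts with aliases, and scripts calling other scripts, could expand into primitive instructions is sketched below. `ScriptLibrary` and `expand` are hypothetical. Sentences are split on end punctuation, any sentence that matches a script name or alias is expanded recursively, and anything else is passed through as an instruction for the verbal service to interpret. The stack check is an added assumption that stops a script which calls itself from looping forever.

```python
import re
from typing import Dict, List, Tuple


class ScriptLibrary:
    """Hypothetical store of named scripts; every alias points at the same script text."""

    def __init__(self) -> None:
        self._scripts: Dict[str, str] = {}

    def add(self, text: str, *names: str) -> None:
        for name in names:
            self._scripts[name.lower()] = text

    def expand(self, text: str, _stack: Tuple[str, ...] = ()) -> List[str]:
        """Flatten a script into primitive instructions, expanding nested script calls."""
        instructions: List[str] = []
        # Split after sentence-ending punctuation followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
            sentence = sentence.rstrip(".!?").strip()
            if not sentence:
                continue
            key = sentence.lower()
            if key in self._scripts:
                if key in _stack:  # guard added for this sketch: stop infinite self-calls
                    raise RecursionError(f"script {sentence!r} calls itself")
                instructions += self.expand(self._scripts[key], _stack + (key,))
            else:
                instructions.append(sentence)  # left for the verbal service to interpret
        return instructions


if __name__ == "__main__":
    library = ScriptLibrary()
    library.add("Set Pin 13 to on for 1000", "Blink")
    library.add("Set alertness to maximum. Blink.", "Wake Up", "Rise and shine")
    print(library.expand("Rise and shine"))
    # ['Set alertness to maximum', 'Set Pin 13 to on for 1000']
```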

The ability to talk to any service or any piece of memory, without coding, and save it with a name/multiple aliases, is one of the key and fundamental ways I plan to enable the system to grow while remaining as simple as possible.

Difference Engine

I am not a mathematician, so I will explain what I mean by this term. At any given point in time, the bot needs to recognize differences between its current state and its goals, and try to achieve its goals by reducing/eliminating the differences in a graceful manner.

To illustrate the basic need, let's take an example. The bot might have a "Speed" of 255 and want to stop (a speed of 0). If it stopped suddenly, it might fall over or break its neck. In this example, we can set the GoalValue to 0, with a GoalSpeed of 5 and a GoalInterval of 50. This means the bot will speed up or slow down in increments of 5 every 50 milliseconds, thereby not breaking its neck or falling over...I have done both of these.

To support this, every memory item has a "CurrentValue" and CAN have a "GoalValue", "GoalType", "GoalSpeed", "GoalInterval", and "LockingInterval".
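The linear model described above can be sketched as a small step function. With a Speed of 255, a GoalValue of 0, a GoalSpeed of 5, and a GoalInterval of 50 ms, it takes 255 / 5 = 51 steps, or 51 × 50 = 2550 ms, to come to a stop. `ConvergingItem`, `step`, and `converge` are hypothetical names, and the simulation simply counts elapsed time instead of using a real timer.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ConvergingItem:
    """Hypothetical memory item with the linear goal fields described above."""
    current_value: int
    goal_value: Optional[int] = None
    goal_speed: int = 1         # largest change allowed per interval
    goal_interval_ms: int = 50  # time between changes

    def __post_init__(self) -> None:
        if self.goal_speed <= 0:
            raise ValueError("goal_speed must be positive")

    def step(self) -> bool:
        """Move at most goal_speed toward the goal; return True if the value changed."""
        if self.goal_value is None or self.current_value == self.goal_value:
            return False
        diff = self.goal_value - self.current_value
        # Clamp the change to [-goal_speed, goal_speed] so the last step never overshoots.
        self.current_value += max(-self.goal_speed, min(self.goal_speed, diff))
        return True


def converge(item: ConvergingItem) -> List[Tuple[int, int]]:
    """Simulate the engine; return (elapsed_ms, value) for each change it would publish."""
    elapsed_ms = 0
    published: List[Tuple[int, int]] = []
    while item.step():
        elapsed_ms += item.goal_interval_ms
        published.append((elapsed_ms, item.current_value))
    return published


if __name__ == "__main__":
    speed = ConvergingItem(current_value=255, goal_value=0, goal_speed=5, goal_interval_ms=50)
    changes = converge(speed)
    print(len(changes), changes[0], changes[-1])  # 51 (50, 250) (2550, 0)
```

Each tuple in `published` corresponds to one incremental change that would be pushed to the associated services.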

The difference engine converges all memory items upon their goals using different speeds/models. A basic example: if you say "look left" but the bot is looking down and to the right, it will converge the X and Y to the desired angles at a particular speed...and publish incremental changes along the way to the associated services. Right now I am simply converging using a speed and a time interval (in ms), but I am building it to support other goal-seeking models too...like one that halves the difference on each interval, or one that accelerates and decelerates to the goal. The locking interval can be used to prevent one action from stepping on another for a duration of time. If I say "look left", I don't want the bot's autonomous eye movements to contradict my order in the next instant.
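The "halve the difference" model and the locking interval mentioned above could look roughly like this. `LockableItem`, `request_goal`, and `step_halving` are hypothetical. As a simplification, any goal request that arrives while the item is locked is rejected outright, whereas a real system would probably want priorities so that a new spoken command can still override an earlier one.

```python
from dataclasses import dataclass


@dataclass
class LockableItem:
    """Hypothetical memory item with a halving goal model and a locking interval."""
    current_value: int
    goal_value: int
    locked_until_ms: int = 0

    def request_goal(self, value: int, now_ms: int, locking_interval_ms: int = 0) -> bool:
        """Accept a new goal unless a previous request still holds the lock."""
        if now_ms < self.locked_until_ms:
            return False
        self.goal_value = value
        self.locked_until_ms = now_ms + locking_interval_ms
        return True

    def step_halving(self) -> None:
        """Close half the remaining distance; finish with a 1-unit step when halving rounds to 0."""
        diff = self.goal_value - self.current_value
        half = abs(diff) // 2 * (1 if diff > 0 else -1)
        self.current_value += half if half != 0 else diff


if __name__ == "__main__":
    eye_x = LockableItem(current_value=0, goal_value=0)
    eye_x.request_goal(100, now_ms=0, locking_interval_ms=2000)  # "look left", lock for 2 s
    print(eye_x.request_goal(-30, now_ms=500))                    # autonomous glance: False
    path = []
    for _ in range(8):
        eye_x.step_halving()
        path.append(eye_x.current_value)
    print(path)  # [50, 75, 87, 93, 96, 98, 99, 100]
```

Halving gives fast initial movement that eases into the target, which suits eye movements; the integer version ends with a final 1-unit step, so it always lands exactly on the goal.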

That's all for now...back to the bots.

This is a companion discussion topic for the original entry at https://community.robotshop.com/robots/show/ava-os-a-very-advanced-operating-system