What exciting possibilities emerge when a robotic arm is combined with a depth camera?

A variety of desktop-level robotic arms are currently on the market. In addition to cameras, many are equipped with other sensors so that they can behave more "intelligently." But we found that no matter how many sensing modules are added, one problem common to today's desktop-level robotic arms remains: it is difficult to break through the limitations of a two-dimensional plane.

Yahboom's DOFBOT PRO robotic arm offers a breakthrough for this problem. So how does DOFBOT PRO differ from other robotic arms?

◆ Difference between 3D and 2D vision robotic arms

Ordinary cameras capture two-dimensional images, just like the photos we take every day. They can tell us the color of an object, but not how far the object is from the camera.

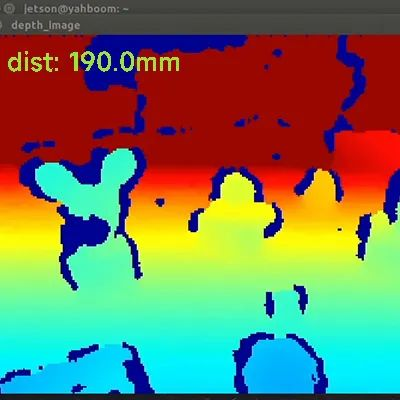

A depth camera not only captures color images but also measures the distance from the camera to the point seen by each pixel, producing a three-dimensional "depth map". With this, the robotic arm knows both the position and the distance of an object in space.

3D Depth image:

2D images:

✔Environmental perception

2D camera: captures a planar image of the environment with only two dimensions, length and width (flat shapes such as triangles and rectangles). It is suitable for simple environmental monitoring.

3D camera: generates depth images and point clouds of the environment with three dimensions, length, width and depth (solid shapes such as cubes and spheres), providing detailed three-dimensional information.

✔Grasping objects

2D camera: It can identify the shape and color of objects and is suitable for simple grasping tasks, but it cannot judge their distance or depth, so the gripper can easily close on empty space or grasp inaccurately.

3D camera: Not only can it identify the shape and color of items, it can also measure their distance and depth, which makes grasping more accurate.

✔Dynamic tracking

2D camera: It can track the position of moving objects within the image plane, but it may lose the target when the environment or lighting changes.

3D camera: It can track the three-dimensional motion trajectory of objects in real time, which helps keep the target locked.

The 3D depth camera on Yahboom DOFBOT PRO can provide the robot arm with clearer depth maps, color maps and point cloud maps, making the RGB data, position and depth information of the target object more accurate.

When DOFBOT PRO wants to grab an object, the depth camera can tell it how far away the target is, what shape it is, and where it is, so it can choose the appropriate grabbing method to avoid breaking it or dropping it.

◆Depth Vision | Arm-camera Integration

The end of the arm is equipped with a high-performance binocular structured-light depth camera. Based on the RGB data, position coordinates and depth information of the target object, DOFBOT-PRO can calculate the object's distance, shape, height, volume and other information in 3D space, which improves the autonomy and intelligence of the robotic arm and makes more AI projects possible.

3D depth point cloud recognition

DOFBOT-PRO can obtain the depth map, color map, and point cloud map of the detection environment through the corresponding API of the depth camera. From these, it obtains the RGB data, position coordinates, and depth information of the target object.
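
To make the step from a depth map to position coordinates concrete, here is a minimal sketch using the standard pinhole camera model. It is not the DOFBOT PRO API: the function name `deproject_pixel` and the intrinsic values (`FX`, `FY`, `CX`, `CY`) are assumptions for illustration, and real intrinsics would come from the camera's calibration data.

```python
from typing import Tuple

Point = Tuple[float, float, float]


def deproject_pixel(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point:
    """Convert pixel (u, v) with a depth reading in metres into a
    3D point in the camera frame, using the pinhole camera model."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 stream (assumed values).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# A pixel 60 columns to the right of the image centre, 0.5 m away.
point = deproject_pixel(380.0, 240.0, 0.5, FX, FY, CX, CY)
print(point)
```

Applying the same conversion to every valid pixel of the depth map is one common way to build a point cloud.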

Depth camera distance measurement

By obtaining the depth point cloud data of the object, the distance between the object and the depth camera can be obtained. Then, the object can be located, sorted, and tracked in 3D space.
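
As a rough illustration of distance measurement from point cloud data, the sketch below averages the points that belong to an object and takes the straight-line distance from the camera origin to that centroid. The helper names and the sample points are hypothetical, and it assumes the object's points have already been separated from the background.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    """Average position of the object's points in the camera frame."""
    if not points:
        raise ValueError("point cloud is empty")
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def distance_to_camera(points: List[Point]) -> float:
    """Euclidean distance from the camera origin to the object's centroid."""
    cx, cy, cz = centroid(points)
    return math.sqrt(cx * cx + cy * cy + cz * cz)


# Hypothetical points (metres) measured on one small object.
object_points = [
    (0.29, 0.00, 0.40),
    (0.31, 0.00, 0.40),
    (0.30, 0.01, 0.40),
    (0.30, -0.01, 0.40),
]
distance = distance_to_camera(object_points)
print(round(distance, 3))
```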

Regional target volume measurement

By obtaining the depth point cloud data of the object, the shape and height of the object can be identified. Then, the volume of the object can be measured.
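
One simple way to estimate volume from depth data is sketched below. It is not Yahboom's method. It assumes the camera looks straight down at a flat table whose depth is known, treats every pixel as a small column standing on the table, and sums the column volumes. The function name, the intrinsics and the sample readings are all made up for illustration.

```python
from typing import List, Tuple


def estimate_volume(depth_roi: List[List[float]], table_depth_m: float,
                    fx: float, fy: float,
                    min_height_m: float = 0.005) -> Tuple[float, float]:
    """Return (volume in cubic metres, maximum height in metres) for a
    region of interest of a top-down depth map."""
    volume = 0.0
    max_height = 0.0
    for row in depth_roi:
        for z in row:
            if z <= 0.0:
                continue  # skip invalid depth readings
            height = table_depth_m - z
            if height < min_height_m:
                continue  # treat this pixel as table surface or noise
            # Area on the object's top surface covered by one pixel at depth z.
            pixel_area = (z / fx) * (z / fy)
            volume += height * pixel_area
            max_height = max(max_height, height)
    return volume, max_height


# Hypothetical 3x3 region: four pixels on a 3 cm tall block, one invalid
# reading (0.0), and the remaining pixels on the table at 0.50 m.
roi = [
    [0.50, 0.50, 0.00],
    [0.50, 0.47, 0.47],
    [0.50, 0.47, 0.47],
]
volume_m3, height_m = estimate_volume(roi, table_depth_m=0.50, fx=500.0, fy=500.0)
print(volume_m3, height_m)
```

The estimate is only as good as the assumptions: tilted views, overhanging shapes or depth holes would each need extra handling.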

◆ Sorting And Gripping In 3D Space

The 3D depth camera on DOFBOT PRO can accurately measure the distance and shape of objects. This means you can use it to identify, track and grab a variety of items, achieving a series of complex and precise operations.

Sorting and gripping in 3D space [machine code/shape/color]

By calculating the 3D coordinates of the machine code in the image, the robotic arm can sort it to the specified location according to the machine code content. (The shape and color sorting process principles are the same.)
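
The sorting step can be pictured as two small operations: convert the code's 3D position from the camera frame into the arm's base frame, then look up a drop-off location from the code content. The sketch below assumes a 4x4 homogeneous transform `T_BASE_CAMERA`, which in practice would come from the arm's forward kinematics and a hand-eye calibration. The transform values, the `DROP_ZONES` table and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def camera_to_base(T_base_camera: List[List[float]], p_camera: Point) -> Point:
    """Apply a 4x4 homogeneous transform to a point given in the camera frame."""
    x, y, z = p_camera
    bx, by, bz = (row[0] * x + row[1] * y + row[2] * z + row[3]
                  for row in T_base_camera[:3])
    return (bx, by, bz)


# Assumed pose: camera 0.30 m above the base plane, looking straight down
# (a 180 degree rotation about the x axis), offset 0.20 m along base x.
T_BASE_CAMERA = [
    [1.0, 0.0, 0.0, 0.20],
    [0.0, -1.0, 0.0, 0.00],
    [0.0, 0.0, -1.0, 0.30],
    [0.0, 0.0, 0.0, 1.00],
]

# Hypothetical mapping from decoded code content to a drop-off point (metres).
DROP_ZONES: Dict[str, Point] = {
    "A": (0.10, 0.20, 0.02),
    "B": (0.10, -0.20, 0.02),
}


def plan_sort(code_content: str, p_camera: Point) -> Tuple[Point, Point]:
    """Return (pick point, place point), both in the base frame."""
    pick = camera_to_base(T_BASE_CAMERA, p_camera)
    place = DROP_ZONES[code_content]  # raises KeyError for unknown content
    return pick, place


pick_point, place_point = plan_sort("A", (0.05, 0.02, 0.28))
print(pick_point, place_point)
```

Shape or color sorting would follow the same pattern, with the lookup key being the detected shape or color instead of the code content.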

Gesture-controlled block sorting

The robotic arm recognizes gestures through MediaPipe and grabs the blocks that match the recognized gesture.
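
The post does not say which gestures map to which blocks, so the following is only a sketch of one possible scheme: count the raised fingers from the 21 hand landmarks that MediaPipe Hands produces, then pick a block color from that count. The landmark indices for finger tips (8, 12, 16, 20) and PIP joints (6, 10, 14, 18) follow the MediaPipe hand model. The color table, the function names and the upright-hand assumption are hypothetical, and the landmarks are passed in as plain `(x, y)` tuples so the example runs without a camera.

```python
from typing import Dict, List, Optional, Tuple

Landmark = Tuple[float, float]  # normalized image coordinates, y grows downward

FINGER_TIPS = (8, 12, 16, 20)   # index, middle, ring, pinky tips
FINGER_PIPS = (6, 10, 14, 18)   # the matching PIP joints

# Hypothetical mapping from finger count to the block color to grab.
GESTURE_TO_COLOR: Dict[int, str] = {1: "red", 2: "green", 3: "blue", 4: "yellow"}


def count_raised_fingers(landmarks: List[Landmark]) -> int:
    """Count fingers (thumb excluded) whose tip is above its PIP joint.
    Assumes an upright hand facing the camera."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return sum(1 for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
               if landmarks[tip][1] < landmarks[pip][1])


def select_block(landmarks: List[Landmark]) -> Optional[str]:
    """Return the color of the block to grab, or None for an unmapped gesture."""
    return GESTURE_TO_COLOR.get(count_raised_fingers(landmarks))


# Synthetic hand: every landmark at the image centre, then raise two fingers.
hand = [(0.5, 0.5)] * 21
hand[8] = (0.45, 0.2)   # index fingertip above its PIP joint
hand[12] = (0.50, 0.2)  # middle fingertip above its PIP joint

print(count_raised_fingers(hand), select_block(hand))
```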

Tracking And Gripping In 3D Space

Hold the block and move it in front of the robotic arm, and the robotic arm will track it. When the block stops, the robotic arm will grab it and place it in the corresponding position.
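
Deciding when the block has "stopped" can be done in several ways. A minimal sketch, assuming the tracker reports one 3D position per frame, is to keep a short history and treat the block as stationary once the latest position is within a small tolerance of every position in that window. The class name, window size and tolerance are illustrative assumptions.

```python
import math
from collections import deque
from typing import Deque, Tuple

Point = Tuple[float, float, float]


class StopDetector:
    """Report True once the tracked object has stayed within
    `tolerance_m` of its latest position for `window` frames."""

    def __init__(self, window: int = 3, tolerance_m: float = 0.005) -> None:
        self.window = window
        self.tolerance_m = tolerance_m
        self.history: Deque[Point] = deque(maxlen=window)

    def update(self, position: Point) -> bool:
        self.history.append(position)
        if len(self.history) < self.window:
            return False  # not enough frames observed yet
        return all(math.dist(position, past) <= self.tolerance_m
                   for past in self.history)


# Hypothetical track (metres): the block moves along x, then comes to rest.
track = [
    (0.000, 0.0, 0.3), (0.020, 0.0, 0.3), (0.040, 0.0, 0.3),
    (0.060, 0.0, 0.3), (0.060, 0.0, 0.3), (0.061, 0.0, 0.3),
]
detector = StopDetector(window=3, tolerance_m=0.005)
stopped_flags = [detector.update(p) for p in track]
print(stopped_flags)
```

The first frame that reports `True` would be the point at which a grasp could be triggered.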

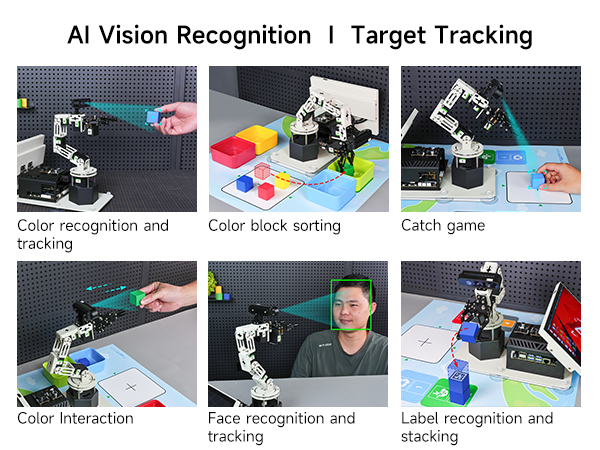

◆ AI Vision Recognition | Target Tracking

Through the 3D depth camera, DOFBOT PRO can perceive and respond to its surrounding environment, combining AI visual recognition and target tracking functions to achieve a series of complex and precise operations.

◆ Cross-platform interconnection control

Mobile phone APP:

USB wireless remote control:

Web control:

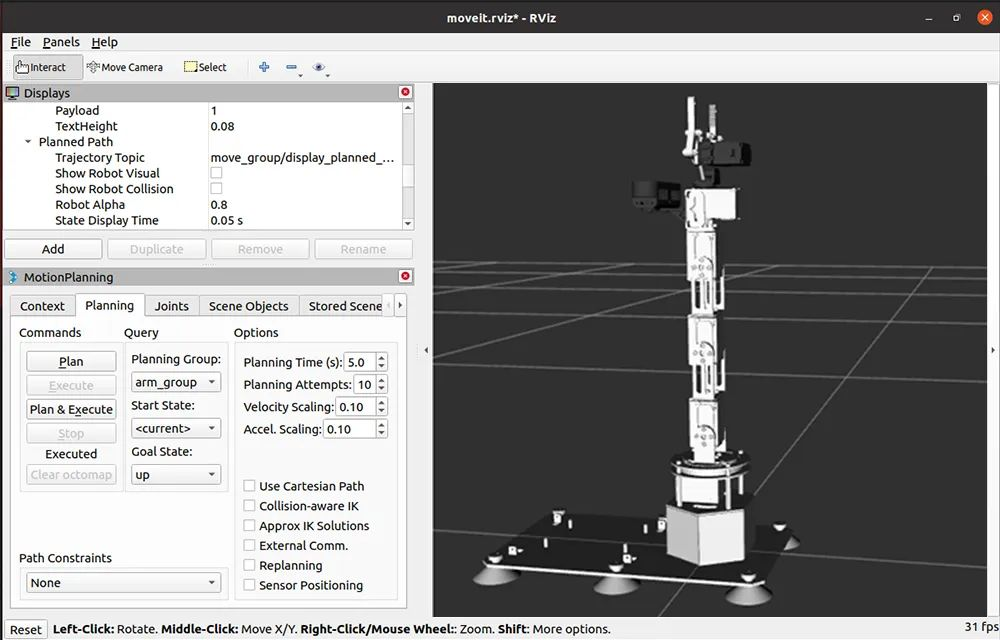

◆ Robotic Arm MoveIt Kinematics

DOFBOT-PRO supports MoveIt simulation, which makes it possible to control the robotic arm and verify algorithms in a virtual environment. This effectively reduces the requirements for the experimental environment and improves experimental efficiency.

MoveIt Simulation Control:

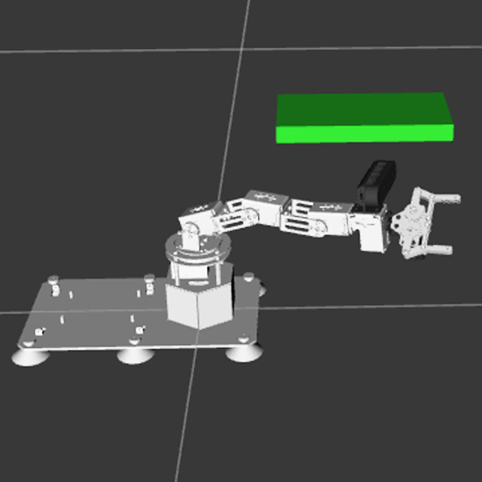

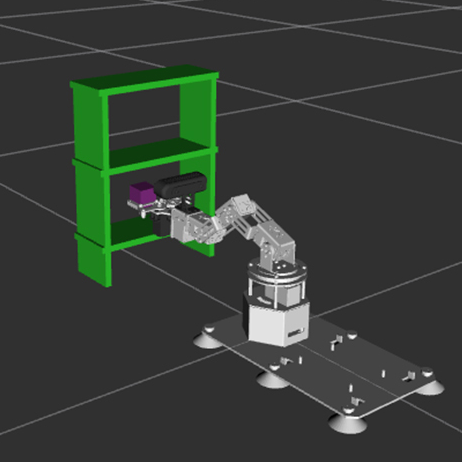

Collision detection:

Space gripping:

Summary

Unique ideas and advanced technology make DOFBOT PRO unique. This exploration process did not happen overnight, but came more from Yahboom’s continuous thinking about the development of desktop-level robotic arms.

As a pioneering product, the 3D vision robotic arm is a beginning, not the end. Yahboom will continue to build experience, integrate other advanced technologies, and work toward true autonomous intelligence for desktop-level robotic arms!
