SyBorg

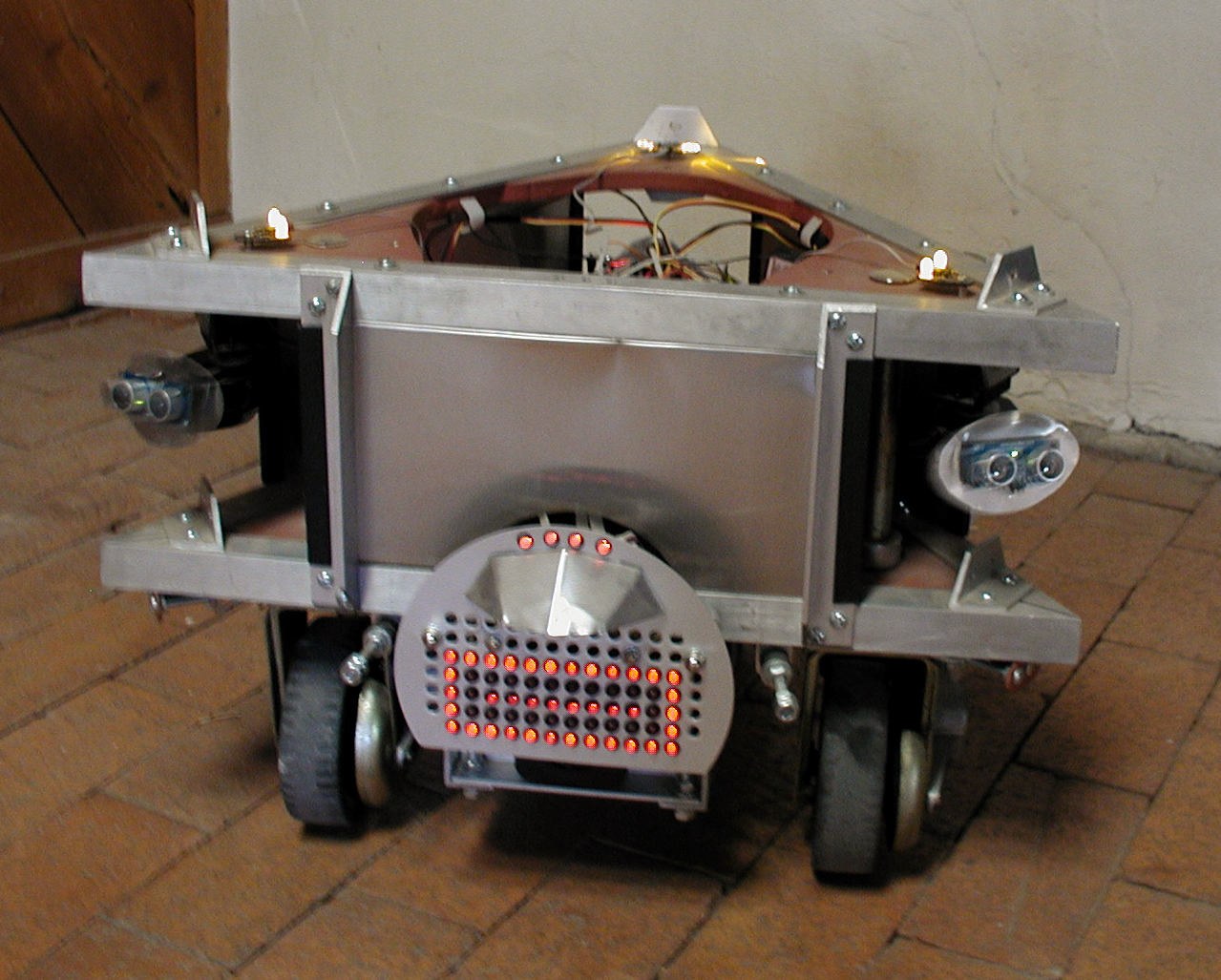

Differentially-steered robot with 12x5 LED front display, sonar "eyes", text-to-speech, self-docking charging, etc. In progress, but mostly done.

The access hole in the top is open in the main photo; generally there is another small panel on top of the access hole that contains two IR detectors with a thin "vane" between them -- these are used for detecting the direction to the charging-station beacon. The same detectors are used for receiving coded IR messages from the other 'bots in the fleet. Above that is usually a birch table-top -- basically it's like a small coffee table on wheels.
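As a rough illustration of how a two-detector-plus-vane arrangement can give a direction to the beacon, here is a minimal Python sketch. It assumes each detector gives a simple yes/no "beacon seen" signal and that the vane shadows one detector when the beacon is off to the other side; the function name and the returned command strings are made up for this example and are not taken from the robot's actual code.

```python
def beacon_steering(left_sees: bool, right_sees: bool) -> str:
    """Pick a steering command from two IR detectors split by a vane.

    Assumption: when the beacon is off to one side, the vane shadows
    the detector on the far side, so only the near-side detector fires.
    """
    if left_sees and right_sees:
        return "forward"      # beacon roughly dead ahead
    if left_sees:
        return "turn_left"    # right detector is shadowed by the vane
    if right_sees:
        return "turn_right"   # left detector is shadowed by the vane
    return "search"           # no beacon in view, rotate to look for it


if __name__ == "__main__":
    print(beacon_steering(True, False))
```

Turning toward whichever detector still sees the beacon until both fire keeps the 'bot pointed at the charging station as it approaches.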

The front display shows many expressions (bitmaps of lips, open/smiling/eating mouths, smileys, etc.), but can also be used as a debugger to show sensor distances. It also supports scrolling text, so that (sometimes) when he speaks, the same words show up on the front display. In back (not shown) is an IR ranger on a servo used for detecting both rear obstacles and side obstacles when turning (as the drive wheels are very far forward on the platform, the rear swings "wide" when turning, so the tail really needs its own sensor).

Under the front "nose" is an IR ranger pointed down at an angle -- this lets the same detector "see" cliffs/drop-offs (distance too large) or objects that are low and close that the sonars may miss. This is a nice setup because it gets around the <4cm limitation of the Sharp rangers -- an object that is *very* close registers a false *long* reading -- which is a cliff to the 'bot -- so the results are the same. Basically, if the ranger's distance is not within a pre-defined "sweet spot", then the 'bot presumes there is a low object or that there is a cliff.
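The "sweet spot" test boils down to a single range check. The Python sketch below shows the idea; the window bounds are hypothetical numbers picked for illustration, since the real values depend on how high and at what angle the ranger is mounted.

```python
# Hypothetical window bounds (cm); not the robot's real calibration.
SWEET_MIN_CM = 12.0
SWEET_MAX_CM = 20.0


def floor_looks_safe(distance_cm: float) -> bool:
    """True only when the down-angled ranger sees floor where it expects it.

    Too long  -> cliff, or a false long reading from a very close object.
    Too short -> a low object in front of the nose.
    Either way the 'bot should not drive forward.
    """
    return SWEET_MIN_CM <= distance_cm <= SWEET_MAX_CM


if __name__ == "__main__":
    for reading in (16.0, 45.0, 7.0):
        print(reading, floor_looks_safe(reading))
```

Because both failure cases fall outside the same window, the code never needs to tell a cliff from a too-close object.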

The sonars are on pan/tilt servo assemblies and can actually point all the way out to the sides -- so their sweep is quite good for general obstacle detection.

A pair of light sensors, an R/C receiver and a real-time clock round things out. The RTC is very nice: he announces the time on the hour while showing an analog clock on the front display, reminds me when my favorite radio programs are on, and sings happy birthday to members of the household. With the battery backup, it's also a nice way to have some non-volatile RAM with fast access and no write-cycle limitations.

The basic structure of the programming is subsumption AFSM, but with a lot of little tricks like self-tuning setpoints for obstacle detection and the like (a *must* if your 'bot will roam through areas of the house where the materials it encounters change).
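One possible way to make a setpoint self-tuning is to let the expected reading drift slowly toward whatever the sensor reports while things look normal. The Python sketch below does this with an exponential moving average. It is only an illustration of the general idea, not the method used on this 'bot; the class name, tolerance and smoothing factor are all assumptions.

```python
class SelfTuningSetpoint:
    """Expected-distance window whose center slowly tracks normal readings.

    Assumptions for this sketch: readings are in cm, a fixed tolerance
    defines "normal", and an exponential moving average does the tuning.
    """

    def __init__(self, initial_cm: float, tolerance_cm: float = 4.0,
                 alpha: float = 0.05) -> None:
        self.center = initial_cm
        self.tolerance = tolerance_cm
        self.alpha = alpha  # small alpha = slow adaptation

    def update(self, reading_cm: float) -> bool:
        """Return True if the reading is normal; adapt only on normal readings."""
        ok = abs(reading_cm - self.center) <= self.tolerance
        if ok:
            # Drift toward the new surface (e.g. carpet vs. tile).
            self.center += self.alpha * (reading_cm - self.center)
        return ok


if __name__ == "__main__":
    sp = SelfTuningSetpoint(15.0)
    for _ in range(200):
        sp.update(16.0)          # floor material reads slightly differently
    print(round(sp.center, 2), sp.update(30.0))
```

Adapting only on in-window readings keeps a real obstacle or cliff from dragging the setpoint along with it.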

Sorry, this photo is actually about a year old and he looks a little different. I've been installing quadrature encoders for velocity control and odometry (theta, x, y, distance/heading to target, etc), so I'll try to get some current photos in the next week or so.
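For readers new to encoder odometry, the pose terms mentioned above (theta, x, y, distance/heading to target) can be computed with the usual differential-drive dead-reckoning update. The Python sketch below is generic textbook math, not this 'bot's code; the function names and the wheel-base parameter are assumptions, and the wheel distances are assumed to be already converted from encoder ticks.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def update_pose(x, y, theta, d_left, d_right, wheel_base):
    """Advance (x, y, theta) given distance travelled by each wheel."""
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Use the mid-step heading for a better estimate on curved paths.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    return x, y, wrap_angle(theta + d_theta)


def target_bearing(x, y, theta, tx, ty):
    """Return (distance, heading error) from the current pose to a target."""
    dx, dy = tx - x, ty - y
    return math.hypot(dx, dy), wrap_angle(math.atan2(dy, dx) - theta)


if __name__ == "__main__":
    pose = update_pose(0.0, 0.0, 0.0, 10.0, 10.0, 20.0)
    print(pose, target_bearing(*pose, 10.0, 5.0))
```

The heading error is what a steering behavior would try to drive to zero while the distance term decides when the target has been reached.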

Brief list of electronics:

main micro - Basic Stamp 2p40

peripheral micros - one PIC (A/D, PWM, extra pins), two SXes (servo control, motor control)

peripherals - Emic TTS module (speech), Devantech MD22 motor controller, cheapo R/C receiver, DS1307 RTC, 32kbit external EEPROM for speech strings, control codes and bitmaps of pictures and text chars (front display).

sensors - two Parallax Ping))) sonar units, two Sharp IR rangers, two Panasonic IR detectors, one high power emitter, two CDS light cells (A/D voltage), one A/D for battery voltage, four Hamamatsu emitter/detector assemblies for encoders, assorted digital inputs for things like positive connection to charging station, etc.

See a brief video here: http://www.youtube.com/watch?v=9PGgkRUSlDY&feature=channel_page

hangs out

- Actuators / output devices: 12v DC gear motors, pan/tilt assemblies for sensors

- Control method: Totally Autonomous

- CPU: Basic Stamp 2p40, SX

- Operating system: embedded apps

- Programming language: Basic, Assembly

- Sensors / input devices: sonar, IR rangers, CDS cells, various digital i/o

- Target environment: indoor