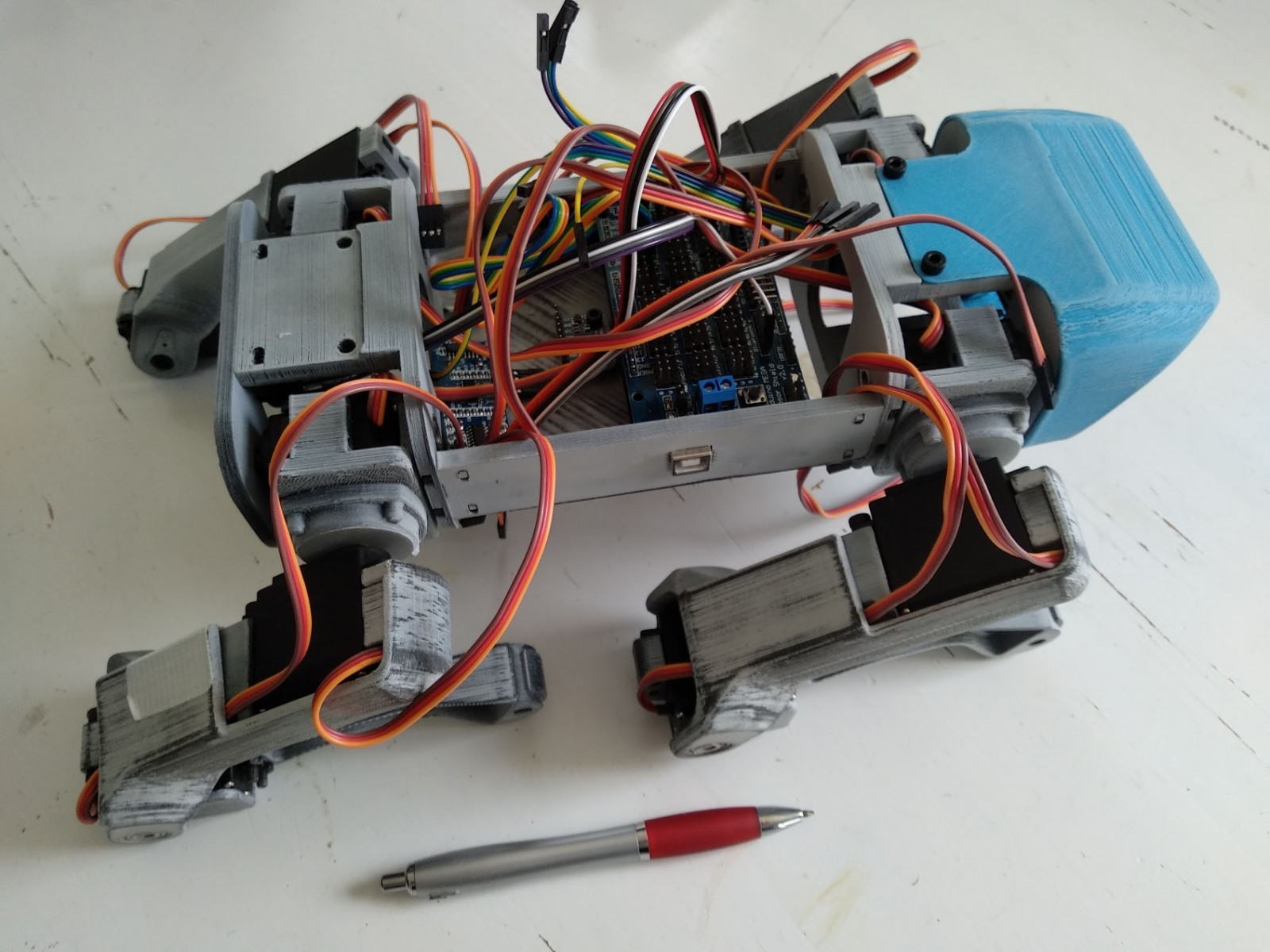

The physical Robot is based on KDY's awesome Thingiverse-Project: https://www.thingiverse.com/thing:3445283

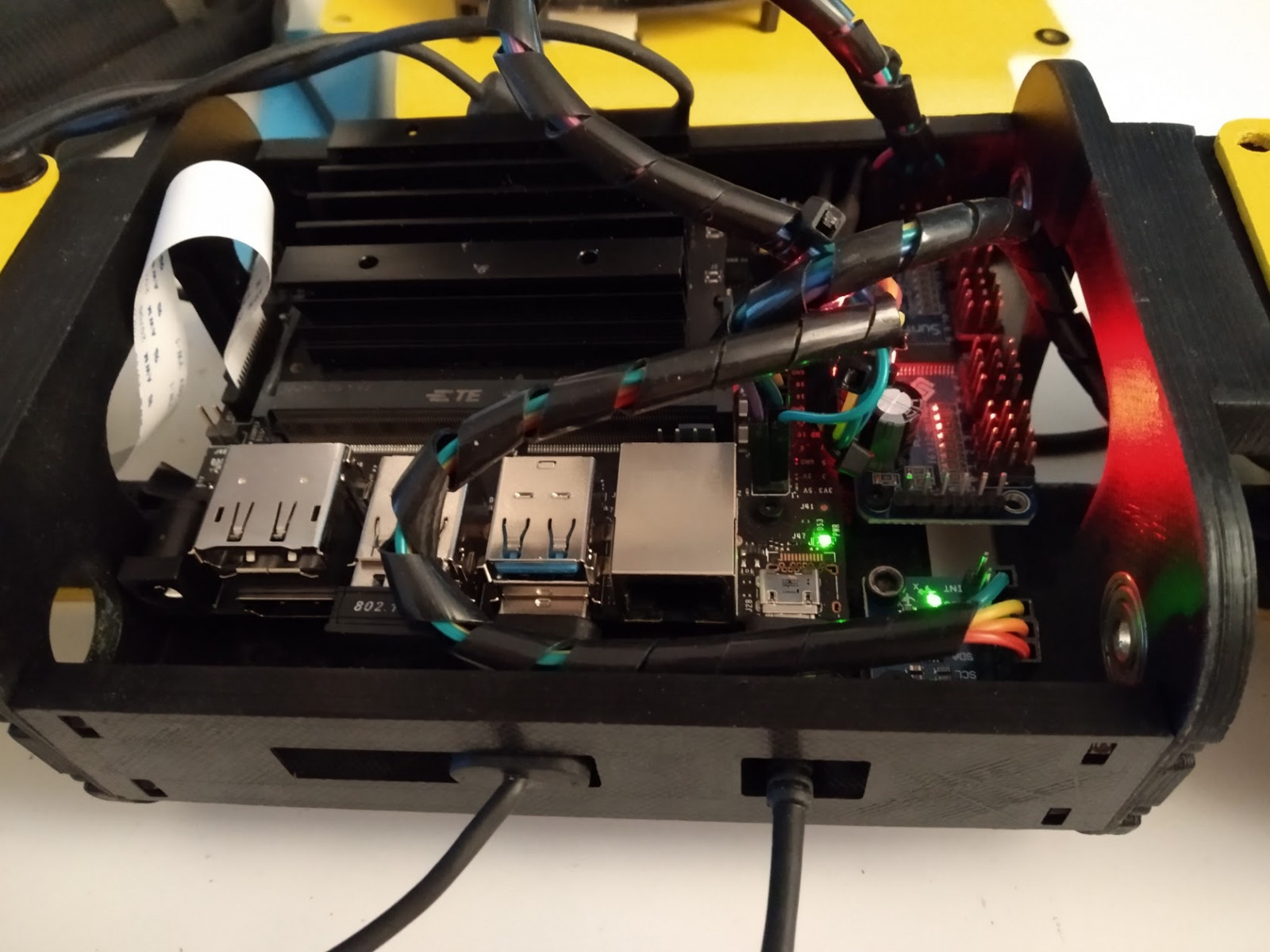

I replaced the original Arduino Mega, which controlled the servos, with an NVIDIA Jetson Nano plus a 16-channel PCA9685 I2C servo driver.
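To give an idea of what driving a servo through the PCA9685 involves, here is a minimal sketch of the angle-to-PWM conversion. The PCA9685 has 12-bit PWM resolution (4096 ticks per period). Everything else is an assumption for illustration and not taken from this project's code: the 50 Hz update rate, the 500 to 2500 µs pulse range, the 180° travel, and the function name `angle_to_ticks`. Check your servo's datasheet before using real values.

```python
PCA9685_RESOLUTION = 4096  # the PCA9685 divides each PWM period into 4096 ticks (12 bit)


def angle_to_ticks(angle_deg, freq_hz=50.0, min_pulse_us=500.0,
                   max_pulse_us=2500.0, max_angle_deg=180.0):
    """Convert a servo angle to the PCA9685 'off' tick count.

    The pulse range, frequency and travel are assumed defaults for a
    typical hobby servo, not measured values for this robot.
    """
    # Clamp the request so we never command a pulse outside the assumed range.
    angle = min(max(angle_deg, 0.0), max_angle_deg)
    # Linear map from angle to pulse width in microseconds.
    pulse_us = min_pulse_us + (max_pulse_us - min_pulse_us) * angle / max_angle_deg
    # Length of one PWM period in microseconds (20000 µs at 50 Hz).
    period_us = 1_000_000.0 / freq_hz
    # Fraction of the period spent high, scaled to the 12-bit counter.
    return round(pulse_us / period_us * PCA9685_RESOLUTION)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, angle_to_ticks(angle))
```

The resulting tick count is what would be written as the channel's "off" value, with the "on" value at 0.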

It also has four HC-SR04 sonar sensors, two in the front and two on the bottom, and an MPU-6050 IMU.
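The HC-SR04 reports distance as the length of an echo pulse, so the reading has to be converted. A minimal sketch of that conversion follows. It assumes a speed of sound of 343 m/s (dry air at about 20 °C), and the function name `echo_to_distance_cm` is made up for this example. Reading the echo pin on the Jetson is not shown.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 °C


def echo_to_distance_cm(echo_duration_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Convert the width of the HC-SR04 echo pulse (seconds) to centimetres."""
    if echo_duration_s < 0:
        raise ValueError("echo duration must not be negative")
    # The pulse covers the trip to the obstacle and back, so halve it.
    return echo_duration_s * speed_m_s * 100.0 / 2.0


if __name__ == "__main__":
    # A 1 ms echo corresponds to an obstacle a bit over 17 cm away.
    print(echo_to_distance_cm(0.001))
```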

I also added a Raspberry Pi camera to the front, which the Jetson will use for simple object detection (maybe MonoSLAM?).

Here is how it looked after the first round of sanding, priming, and sanding again, with the first hardware setup (Arduino Mega):

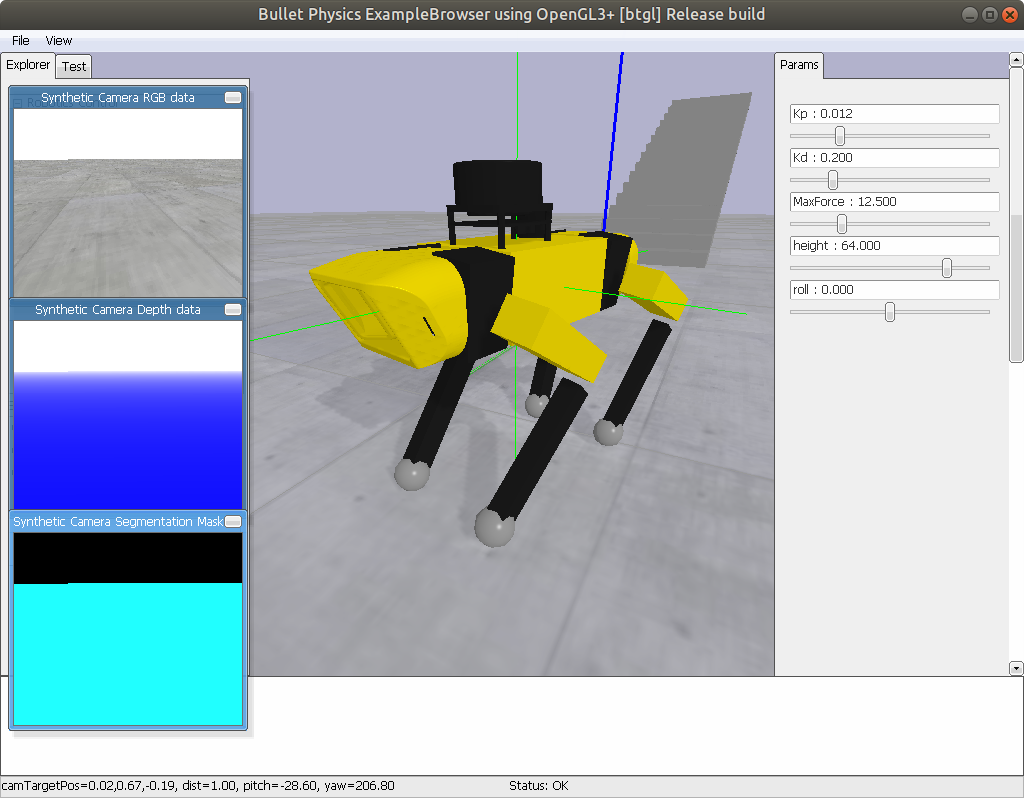

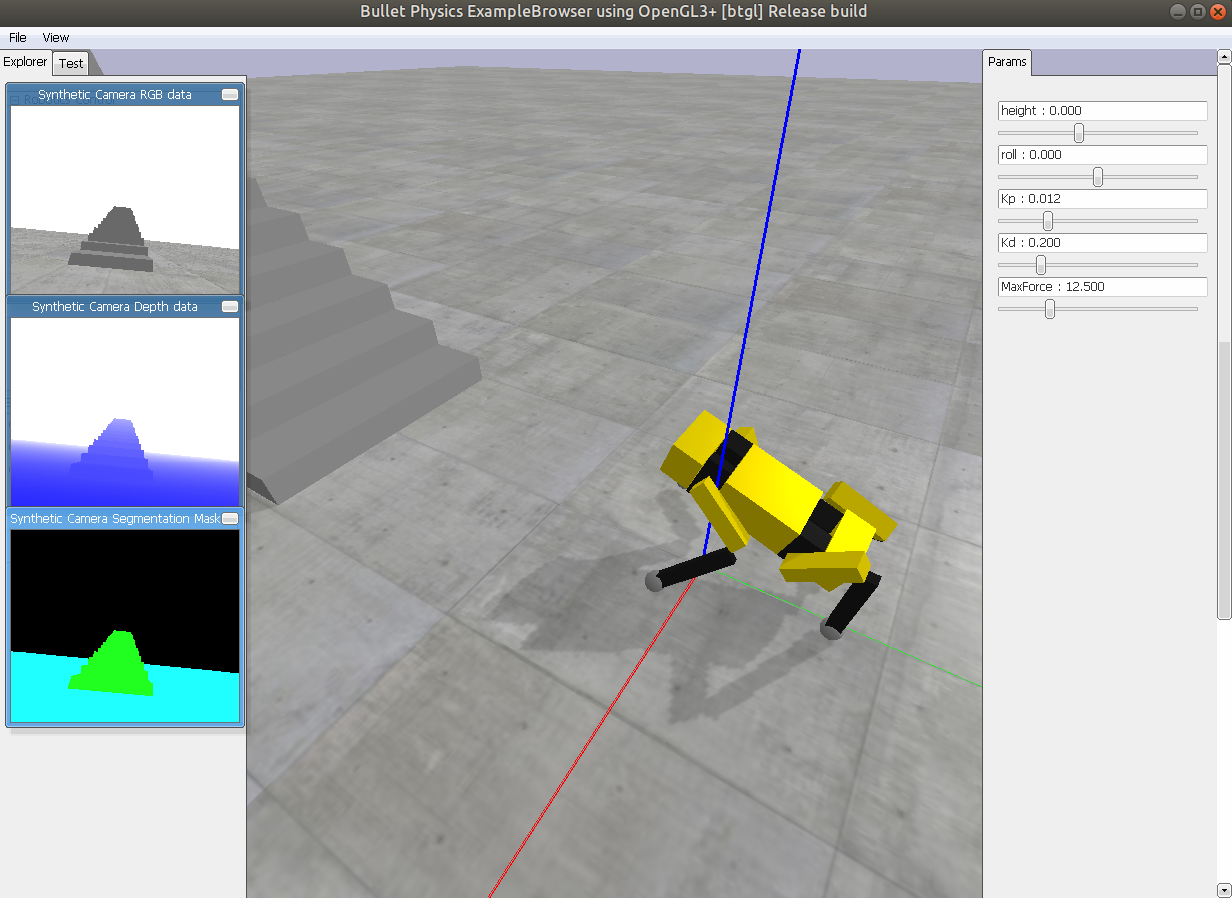

I needed a simulation for several reasons. I currently use PyBullet to simulate the kinematics, and I plan to use it for RL in the future.

There is no real RL training in there yet, but that is my main reason for this project: I want to understand how ML/RL can support a physical robot. Maybe it will even learn to walk some day. See https://arxiv.org/pdf/1804.10332.pdf for inspiration ;)

No RL yet :( I am still working on the kinematics.
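To illustrate the kind of kinematics involved, here is a minimal sketch of inverse kinematics for a single leg, simplified to a planar two-link chain (upper and lower leg) in the x/z plane. This is the textbook law-of-cosines solution, not this project's implementation. The function names `leg_ik` and `leg_fk`, the link lengths, and the angle conventions are all assumptions for the example, and the hip's sideways joint is ignored.

```python
import math


def leg_ik(x, z, l_upper, l_lower):
    """Return (hip, knee) angles in radians that place the foot at (x, z).

    Coordinates are relative to the hip joint. Angle conventions are an
    assumption: hip is measured from the x axis, knee is the bend
    relative to the upper leg.
    """
    d2 = x * x + z * z
    # Law of cosines for the knee angle.
    cos_knee = (d2 - l_upper ** 2 - l_lower ** 2) / (2.0 * l_upper * l_lower)
    if not -1.0 <= cos_knee <= 1.0:
        raise ValueError("target is out of reach for these link lengths")
    knee = math.acos(cos_knee)
    # Direction to the foot minus the offset caused by the bent knee.
    hip = math.atan2(z, x) - math.atan2(l_lower * math.sin(knee),
                                        l_upper + l_lower * math.cos(knee))
    return hip, knee


def leg_fk(hip, knee, l_upper, l_lower):
    """Forward kinematics for the same chain, used to check the IK result."""
    x = l_upper * math.cos(hip) + l_lower * math.cos(hip + knee)
    z = l_upper * math.sin(hip) + l_lower * math.sin(hip + knee)
    return x, z


if __name__ == "__main__":
    # Hypothetical link lengths in metres, not measured from the robot.
    hip, knee = leg_ik(0.05, -0.18, 0.11, 0.13)
    print(hip, knee, leg_fk(hip, knee, 0.11, 0.13))
```

Running the forward kinematics on the result is a cheap way to check the solution before sending angles to a simulated or real servo.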