MoMo 0.8 - Ranging and Mapping - By Jove, I found my Toolbox !

MoMo is my current mobile software experiment platform. Created out of a previous project (Mobile Laser Platform). The purpose of MoMo is to continue to test and develop a open source software project called "MyRobotLab". MyRobotLab is a multi-threaded, service based Java framework for creative machine control. It effectively "hot glues" other great open source projects like Sphinx-4 for speech recognition and OpenCV for machine vision.

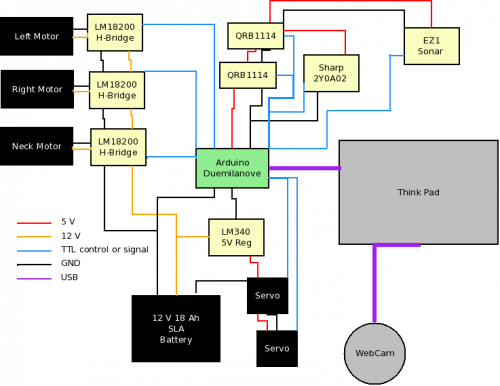

Control

- MyRobotLab Java Framework

- Fedora Core 13

- IBM Thinkpad - with missing keys

- Arduino Duemilanove

- Arduino MyRobotLab - Slave program

- 2 H Bridges based on lm18200

Power

- 12V 18Ah SLA

- Laptop Batteries - (dying)

Actuators

- Motak - 2 X 24V DC worm drive motors - her name comes from the two Motak motors (MoMo)

- 2 X Servos

- Speakers (from laptop)

Sensors

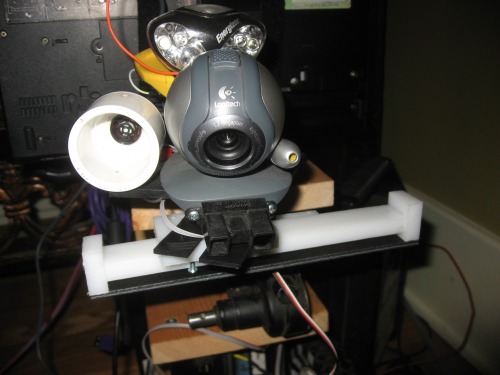

- WebCam - Logitech C500

- Microphone (from laptop)

- Sharp 2Y0A02

- EZ 1 Sonar

Communication

- TCP/IP over 802.1 - MyRobotLab can export gui control over TCP/IP

momo.1

Basic wiring diagram

Quick Assembly Instructions (1 day - just think if I had 2 cups of coffee !) (Video.1)

Software

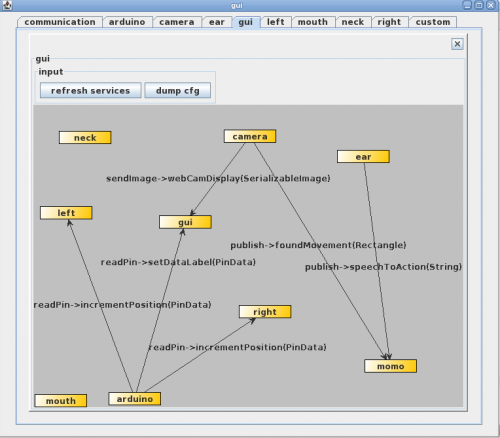

Here are a list of services MoMo is currently using and a brief description of their function.

- left, right, neck - 3 motors, Left & Right are attached to the wheels, neck will be used later

- arduino - the Arduino Duemilanove controller, used to control the motors, encoder feedback and most other IO related activities

- camera - a webcam with OpenCV functions

- ear - Sphinx 4 speech recognition

- gui - a GUI interface for manual override and control

- mouth - a mp3 file player

- momo - the specialized behavioral logic for this robot, isolated into 1 service. This allows the other services to be re-used in other contexts or different robots.

MyRobotLab - Service Map

This dynamically maps the current active services and their static message routes. Each little block

corresponds to a service. Each tab corresponds to the GUI of that particular service. The neck

is not being used. The left and right motor are attached to messages coming from arduino.

The gui also gets messages from the arduino and camera. When the camera finds movement

it sends a rectangle of the area to momo. The ear does speech recognition and sends recognized text

to momo.

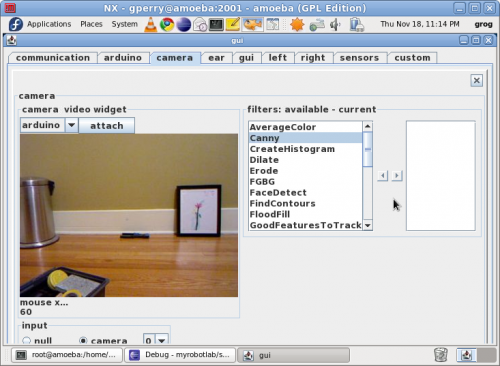

The camera gui is one of the more interesting ones.

momo.1.Test - Remote Control (Video 2)

Controlled from my desktop using NX over 802.1 wireless to the laptop (lame).

MyRobotLab was created to be remotely controlled and multi-processed.

Unfortunately, I'm still contending with a video bug, so this session was done through NX.

I found keyboard was preferable control versus clicking on buttons with the mouse.

momo.2.Test - Optical Tracking using Combination Sets of Filters (Video 3)

Watch for movement consists of the following filters :

- Dialate

- Erode

- Smooth X 2

- FGBG (foreground background)

- FindContours

Dialate, Erode and Smooth are all used to reduce "noise". FGBG purpose is to determine foreground and background

by comparing series of images. This also works well as a visual motion detector. Find Contours draws/groups pixels together.

Momo will go into this mode and wait until a Contour is found.

When movement is found the movement filters are removed. The LKOpticalTrack filters is added. Then a Lucas Kanade point is set in the center of the moving Contour.

After the point is set, tracking instructions are sent to the wheels based on the location of the LK point.

Switching the filters takes less than 300 ms and is difficult to see but I have captured a polygon in the frame just before the optical tracking starts.

Now the filter switches to LK Optical Tracking with a point set.

MoMo bad hair day picture

Ranging and Mapping

Active versus Passive Optical Ranging

Active optical ranging means sending some energy out and measuring it in some way. The lasers in LIDAR are actively sending out energy and using that energy to measure the distance. I have been interested in passive optical ranging. It less expensive than most LIDAR units, for example a really good webcam costs about $35. At this time I would consider a webcam one of the most cost effective and impressive robot sensors. It is really amazing how much data you can get from one of these cameras. The real problem is that it's too much data ! Or we don't know how to organize it.

In the simplest form data is read off a webcam in a 2D matrix. Each point contains 3 values, one for red, one for green and one for blue. So a 320 X 240 color image contains 230400 bytes. At a low frame rate (15 frames per second), this becomes ~3.5 Megs per second. Wow ! That's a lot of data for a little $35 sensor. But wait, there's more! With a single frame given to me, I could tell you all sorts of things about it. I could tell you what colors were in the picture. I could tell you orientation of the camera. Where the picture was taken to some degree. I could tell you what objects were in the image and how far away they were. I could tell the relative location of the object were to each other in the picture. Amazing, I could tell you all of this from a single image ! But what do you electronically? A big matrix of different values.

So, I think there is a huge potential for these sensors in robotics, but there seems to be the need for a lot of work to make all that data useful. One thing that will be useful is mapping the environment. Our robot will need know and remember the areas it can travel. It will be useful to tell the robot to go to a particular room. Lets get started.

Parallax Ranging using LK Optical Flow

I would like to implement a form of SLAM for MoMo. My strategy on implementing SLAM for MoMo is to use OpenCV and generate a point cloud. Then use LK Optical Flow with a horizontal camera slide to determine depth of sample points. Using edge detection and image stitching, my goal will be to create a 3D map.

Alright, the theory was easy enough. Now the next logical step is to build the camera slide. I will make the horizontal slide capable of about 3 to 4 inches of movement. The reason for this is to cater to human usefulness. People have eyes from 2 to 4 inches apart. This distance combined is tuned for an optimum useful depth perception on a human scale. Stereo vision depth maps are rather expensive for computers to implement. It takes more cpu power and complexity to match similar objects in two separate cameras versus tracking an object in a single camera. If the amount of movement is known, depth can be computed rather quickly.

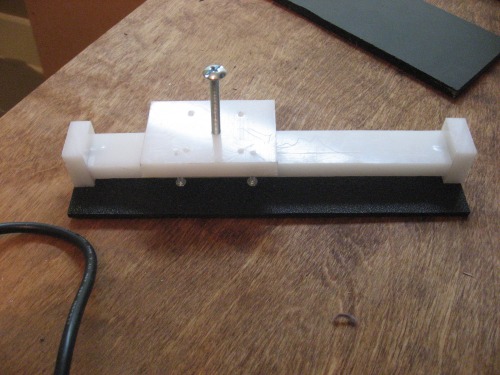

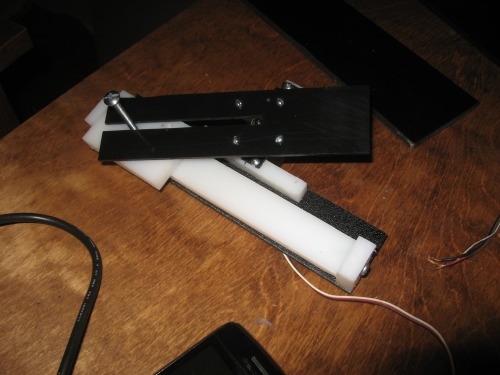

Camera Slide

I have some lovely plastic from a local plastic store. I forgot what its called but its used in cutting boards. It can be screwed together and is very slippery. I'll be using it for the camera rail.

stage 1.

stage 2.

stage 3.

Although it worked to some degree, overall the resolution seemed rather poor. Now, I am considering the location in the image plane might be more accurate to positioning. The higher something is towards the horizon the farther away it is. With video information there is so very much information and noise to filter through. There are also many, many strategies and visual clues to determine spatial positioning and orientation.

Fortunately, there are some general constants:

- the bot is on the floor - the floor is usually flat and level

- the camera remains a fixed distance from the floor - in this case 17"

- although the camera's pitch can be manually changed (or changed by servo) it is usually fixed or is known when changed by servo

- any identified point in the image could be approximated by where it is in the image plane (ie. how close to the horizon it is)

- assumption of 8' walls and a ceiling

- camera field of view

Here are the results of a scan. The number represent horizontal disparity. Hmm, looking at this now I can tell the camera swung a bit which would screw up the readings. What should happen is the points closer to the camera should have larger numbers, because they travel more as the camera moves.

|  |

I'll try this again, but I was kind of not very excited so far with the results.

Stadiametric(ish) rangefinding

After finding that the horizontal parallax was not getting the resolution I liked I came mulled over it for a day or two... then... Eureka !

I finally came to the realization that I should try to get a different method of ranging for higher resolution. I started thinking about all the many known constants in my setup. The height of the camera off the floor, the pitch of the camera, the floor is flat(ish), the focal length of the camera, the angle of the field of view, etc, etc.

My Ah Ha ! moment was at any particular time for any point of interest in the view, the height of th point if its on the floor (and most things are) would be relative to its distance away from the camera. I vaguely remembered seeing gun scopes with gradients which showed range. Wikipedia is great - Stadiametric rangefinding.

"By using a reticle with marks of a known angular spacing, the principle of similar triangles can be used to find either the distance to objects of known size or the size of objects at a known distance. In either case, the known parameter is used, in conjunction with the angular measurement, to derive the length of the other side."

Strangely finding objects with know size would be difficult, although I would be interested in this augmenting my data later. Distance is unknown, but I think MoMo has a lot of constants which someone running out in a battlefield would not.

So now to measure the details !

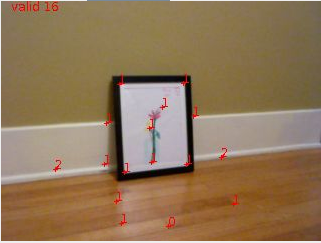

With a tape measure and some pens on the floor, I got the following 320 X 240 picture.

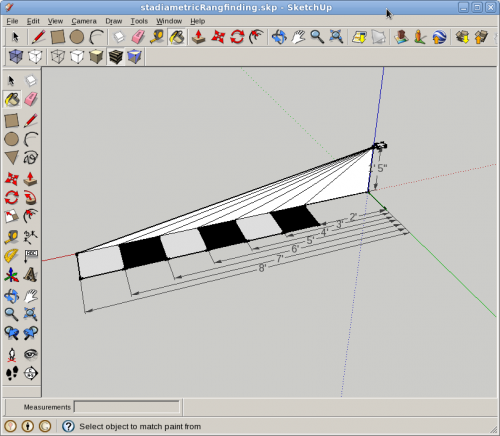

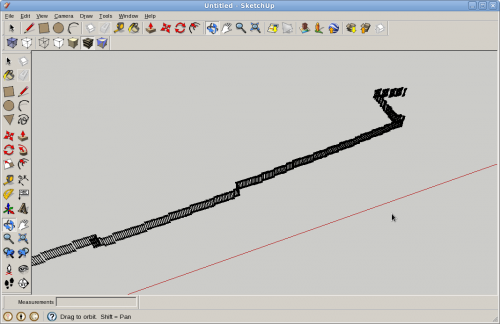

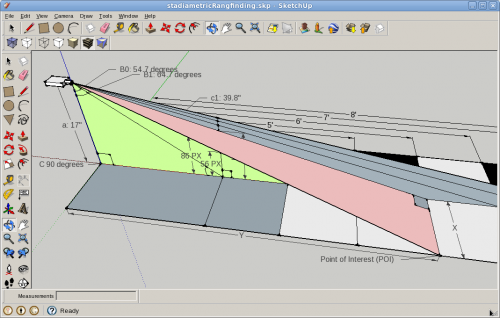

In Sketchup (running beautifully on Linux - Thanks Wine & Sketchup!) I did the following sketch.

Trig ! It can be useful. Here we are solving the the distance to the pen if we know the distance the camera is from the ground and the angle of the pen in the field of view. The angle will be related to the number of pixels in the image. This pixels per degree is a constant for the camera. I looked at the specs of my webcam and it did not come with that information, but I will derive it from the first image.

Lets get crackin !

Solving right triangle: a,b0,c0

a = 17" constant

b0 = 24"

c0 = 29.4"

cos A0 = 24/29.4

A0 = 35.3 degrees

B0 = 54.7 degrees

C = 90 degrees

Solving right triangle: a,b1,c1

a = 17" constant

b1 = 36"

c1 = 39.8"

A1: = 25.3 degrees

B1: = 64.7 degrees

C = 90 degrees

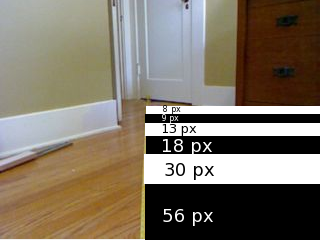

56 pixels = 64.7 - 54.7 = 10 degrees or 5.6 pixels per degree at 320 X 240 image size

Formula to derive distance based on pixel count from bottom of screen on flat surface.

b = distance

b = tan(54.7 + # of pixels / 5.6) * 17

To Test lets say I find something at 104 pixels from the bottom of the image.

B3 = 54.7 + 104/5.6 = 73.3 degrees

tan (73.3) = b / 17

b = 3.3 x 17 = ~57 inches

This technique is useful for calculating distances for points of interest ON THE FLOOR ! Anything point off the floor would be incorrect. Since it would be higher in the field of view in the image, it would "appear" farther away than it actually was.

Now that wasn't so painful was it ?

Let's see what fun we can have with it !

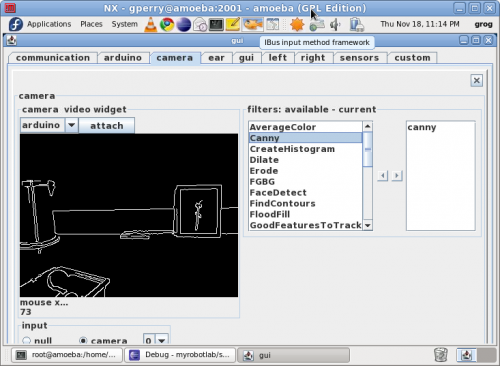

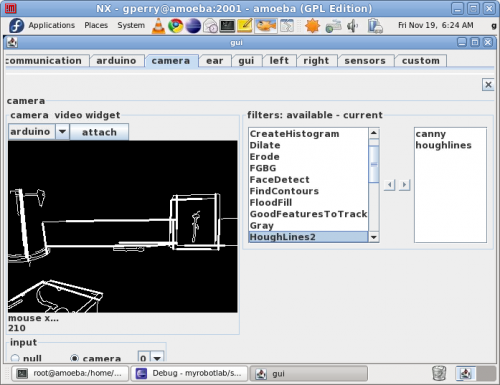

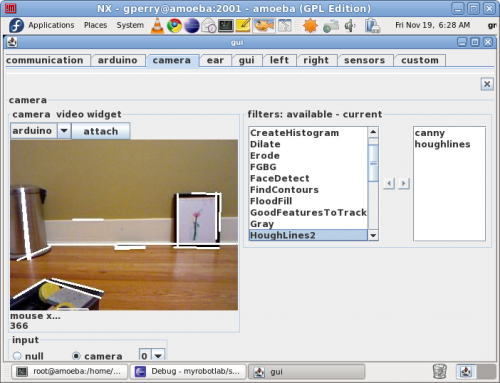

OpenCV has a function called HoughLines2. This function is an implementation of a Hough Transform. An interesting algorithm which will find lines in an image by a voting process. The best documentation I have found for this function has been here. The Hough transform input image needs to be gray scaled and filtered first in order for any usable output data. One possible filter is a Canny edge filter. Another pre-filter for the Hough Transform is an Adaptive Threshold filter.

| Original Video | Canny Filter |

|  |

| Canny Filter + Hough Lines | Hough Lines Overlaying Original Video Feed |

|  |

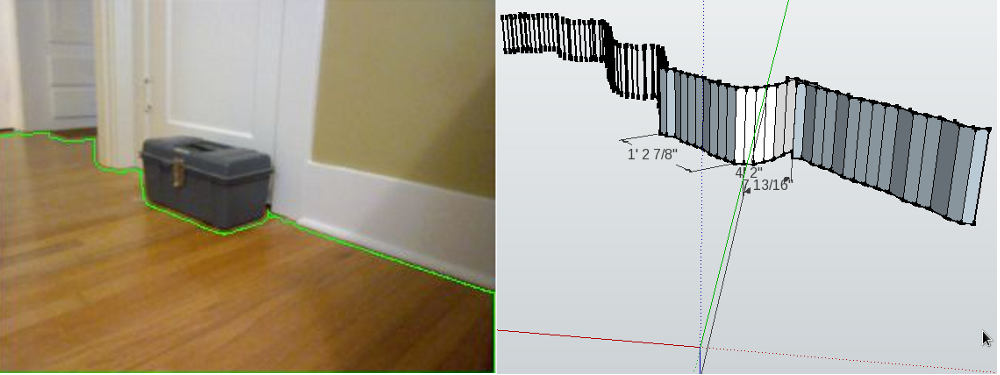

Hough lines is good for finding lines. From the function you can get an convenient array of lines. However, I have now come to the realization that I do not need this. Unfortunately, it has not picked up all of the lines in the picture. The box in the foreground is missing the vertical edge and the base molding of the wall is (temporarily) missing a line too. It will take some playing around with.

How many things are in this picture?

Possibly 3? A trashcan, a picture, and some sort of tool box? Answers would vary even between people. Then there's the objects which are so obvious to people they are not even counted. For example, the floor, the wall could be considered objects. Also questions could arise, is the baseboard an object or is it part of the wall? Do the contents of the toolbox consist of different objects or are they part of the toolbox. Is the picture of the flower a different object than the frame? All of these are subjective questions. With navigation, the floor becomes very important. All objects which intersect the floor would be relevant.

Back to the Floor

It would be very advantageous to find and store the perimeter of the floor. With the ability to map while navigating opens up a whole new range of possibilities. It would allow us the ability to create destinations. Destinations could be areas which allow the robot to recharge, or find a beer and return it to the robots master. So lets get started with the very basics of mapping. Within man made interior spaces there is a tendency to make 90 degree edges, there are brilliant exceptions - but we won't digress now.

With 90 degree edges, depending on lighting conditions there typically is a line which forms between the floor and a wall. Any floor/wall meeting would define a limit to the robots mobility and should be mapped as such. So now lets identify those edges. Since our primary sensor at the moment is a webcam, we will run the image through a Canny filter to extract the edges. The way the Canny edge filter works is it effectively checks every pixel with its neighbors. If the changes in brightness exceed a certain threshold, the OpenCV Canny filter will mark a resulting image with a white pixel. That is a good starting point. But there are several problems with the current black and white image. One would be that we are only interested (at the moment) in a certain number of pixels. A recurring process while using video data is filtering and reducing the amount of data into a usable form.

I thought about the data I would like to get out of the image. If I wanted to know the floor boundary I would let loose a mouse in the picture right in front of me, and let it scurry along the floor and walls to trace out a perimeter.

Left Hand Rule / Wall Follower Algorithm

This seemed appropriate. My first implementation did not keep track of the wall which was a large mistake, instead it just searched for an open access starting by looking left. It would loop back on itself.

This was a bit challenging. It turned out to be a type of Finite State Machine. The input states were were the last wall was seen. The transition state would be sweeping left from the last known wall to an square which was clear. If your going to use the Left Hand Rule, you better have a hand on the wall !

Searches are done from the last known wall position sweeping counter-clockwise (right hand) until a new position if found or until the start has been found (perimeter done)

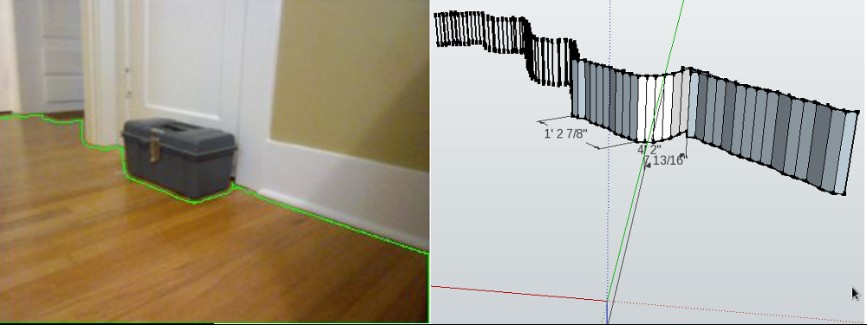

Original | Canny

|

Canny + Dilate | Canny + Dilate + Mouse with Mouse overlay Original |

I had problems with the mouse filter jumping through openings in the Canny filter, so I had to add Dilate to thicken the lines and keep the mouse inside the perimeter. From the Original picture to the Mouse, processing took about 3.6 seconds. I know this is rather abysmal.

Some performance possibilities would be :

- Don't draw the green lines - at this point its only a visual reference for debugging - MoMo has all the data

- cvGet2D is being used which is not recommended (performance problem), I think converting the whole image to BufferedImage and using its accessors for the Mouse filter.

- Look into cvFindContour again with a limiting Region Of Interest (ROI)

Although ~4 seconds is not good, it's not bad if the data persists and can be accessed in MoMo's memory. In that case its exceptionally fast. It would be equivalent of taking a 4 second blink, then having your eyes closed and navigating from memory.

Now to visualize the data we have. I threw away all the points on the image's edge because these were possibly open for navigation. The perimeter contained 886 points.

Here is the data in Sketchup. That is starting to look good !

|  |

The X axis is all wrong because I'm using pixels for inches, which completely screws up the scale and also is more incorrect the further a POI (Point Of Interest) is from the camera. I'll have to go back and work through those calculations to get a correct formula.

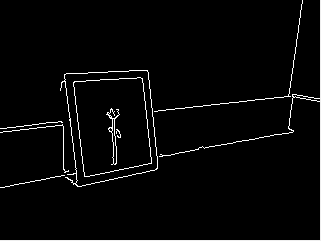

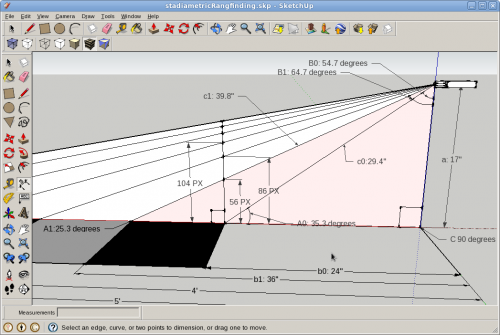

With another quick SketchUp, it was easy to visualize the algorithm of any point of interest within an image. Behold !

Any point of interests location on a Cartesian floor grid can be computed by solving 2 triangles. The adjacent screen leg of the green triangle would be the floors Y coordinate, and the opposite side of the red triangle would be the X coordinate.

Back to the formula:

Y is the opposite side of the green triangle

Y = Math.tan(0.01745 * (54.7 + (240 px - # of px from bottom edge) / 5.6) * 17);

Now we want the adjacent side of the red triangle, but we can get the opposite side of the red triangle, because it is the same line as the hypotenuse of the green triangle !

Law of Sines

a/sin A = c/sin C

To find the angle A of the red triangle we are counting the number of pixels from the center. Since the image is 360 px wide we subtract 160. Negative X will be to the left of the center of the image, Positive X will be to the right.

Divide by 5.6 to convert from pixels to degrees

Multiply by 0.01745 to convert from degrees to radians

X / Math.sin (0.01745 *(160 - # or px)/5.6 = Math.sqrt (Y^2 + * 17^2) / sin 90

X = Math.sqrt (Y^2 + * 17^2) / sin 90 * Math.sin (0.01745 *(160 - # or px)/5.6)

Woot ! Now let's use Sketchup to test, and slog through the calculations to test for our Point of Interest where X = 1' and Y = 5'.

Y = 56" (using the previous example)

X = Math.sqrt (56^2 + * 17^2) / 1 * Math.sin (0.01745 *(160 - x px)/5.6)

X = 58.52 * Math.sin (0.01745 * 49 px / 5.6) = ~9

Well, its a little bit off ~ 3 inches. I think the general calculation is correct. I'm also sure there is some amount of error, especially in the 5.6 pixels per degree. It will take a little fudging of the constants and some "real" testing to get it more accurate.

By Jove, I do believe I've found my Toolbox !

- The toolbox was 48" away - Calculated 50"

- The dimensions were 16" X 8" - Calculated estimate is 15" X ~8"

- Wall length to the right of the toolbox is 23" - Calculated came out to be 24"

- Farthest point was 10' 1" - Calculated distance was 11'

3.7 Seconds of processing to get the data. Took a considerably longer to put the data into SketchUp so I could visualize it.

What's next?

I would like to turn MoMo so we can continue to build the map. I believe this means Matrix multiplications (I'm a bit rusty, so this may take awhile)

Milestones

- Obstacle avoidance with multiple sensors and arbiter

- High level voice command

- Tracking/Following using webcam

- 2 D & 3 D Mapping

- Image stitching

- Template matching - Electrical Plug identification & Docking