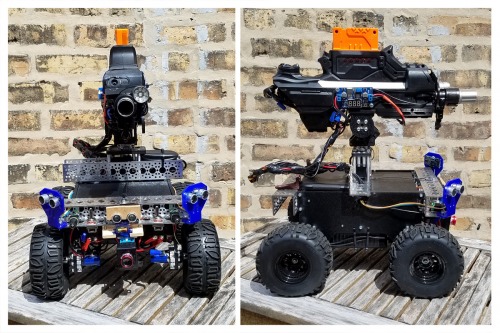

M1 NerfBot Build1

As a quick disclaimer, I am completely self-taught in all the areas we'll be discussing below (I'm a tattoo artist/illustrator). I'm joining this community in hopes of sharing ideas, filling in knowledge gaps and having some fun building things. This is a semi-autonomous Nerf blaster project, not a mechanized death machine. I simply love Nerf guns, and this is a way for me to keep learning new things fun.

My primary goal is to log the progression of this project and use it as a platform to learn and experiment. The current revision of M1 NerfBot is a steady progression in my pursuit of building something fun and cool. Every piece of this robot/ground drone has been upgraded and replaced in a progressive order as I've learned, driven by power/amperage draw needs, weight considerations, torque, speed, etc.

This version of M1 NerfBot has hit a brick wall in terms of what I'm looking to implement and how it's built, so I've taken a bit of a hiatus from it while I learned the skills required to continue. I'm planning a considerable overhaul of the entire platform from the ground up in order to further improve the capabilities of M1, based on what I've learned and what I plan on tackling next.

I will first go over its current revision, how it works and what it does, and then I will lay out my upgrade plans, which are based on experimenting with it and on new features I'd like to learn/play with, such as ROS support.

//Current revision//

Raspberry Pi 2

USB Hub / WiFi Dongle / Bluetooth USB / RF Transceiver

RoboClaw Motor Controller

Servo Controller

3xUltrasonic Sensors

2xFPV (webcam above barrel and Pi Cam on servo at front lower chassis)

M1 connects to the network on bootup, runs main Python script >>

M1 connects to a wireless Xbox 360 controller and maps buttons

2 separate video streams run (still image streams) and are sent over the network. Both streams are sourced into a local network webpage hosted on M1, which lays them out horizontally across the page (so the screen is split between the two video streams). I then split those streams again, vertically this time, so I can get a fake 3D effect and put my smartphone in a pair of 3D goggles to get an FPV view through the drone.

The smartphone sends gyroscope and accelerometer data back to M1, which accepts the data on a button press and runs it through to the pan/tilt servos for the turret on M1.
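A minimal sketch of how that head-tracking step could work, not the actual M1 code. It assumes the phone reports yaw and pitch in degrees relative to straight ahead, and that the pan/tilt servos accept 0-180 degree angles with 90 as center. The names `orientation_to_servo` and `TurretAim` are hypothetical, and the servo write itself is left out because the post does not say which servo controller library is used.

```python
def clamp(value, low, high):
    """Keep value inside the closed range [low, high]."""
    return max(low, min(high, value))


def orientation_to_servo(yaw_deg, pitch_deg, center=90.0, span=90.0):
    """Map phone yaw/pitch (degrees from straight ahead) to pan/tilt angles.

    center and span are assumed servo limits, not measured values.
    """
    pan = clamp(center + yaw_deg, center - span, center + span)
    tilt = clamp(center + pitch_deg, center - span, center + span)
    return pan, tilt


class TurretAim:
    """Holds the last commanded angles; only follows the phone while the
    controller button is pressed, as described above."""

    def __init__(self, center=90.0):
        self.pan = center
        self.tilt = center

    def update(self, yaw_deg, pitch_deg, button_pressed):
        if button_pressed:
            self.pan, self.tilt = orientation_to_servo(yaw_deg, pitch_deg)
        return self.pan, self.tilt


if __name__ == "__main__":
    aim = TurretAim()
    print(aim.update(20.0, -10.0, button_pressed=False))  # turret stays put
    print(aim.update(20.0, -10.0, button_pressed=True))   # turret follows phone
```

Gating on the button means the turret holds its last position when you look around without wanting to aim.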

Currently 2 relays switch power to the flywheel cage motors that launch the darts and to the pusher mechanism that pushes the darts. The flywheel cage and pusher box still use completely stock motors; I push 12 V to them but they're really only meant to run at around 7-9 V. These issues have all been addressed, just not implemented. Several firing modes have been programmed in, and despite the lack of a motor controller/stepper motor I've been able to successfully time out single fire as well as 3-round and 5-round burst fire. These are all mapped to the Xbox controller, and I have a select-fire script set up as well as an attempt at calculating ammo, but I will eventually need to use a sensor for that instead of monitoring button clicks.
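One way to picture the timed firing modes is as a schedule of relay events. The sketch below is an illustration only: the spin-up time and per-dart pusher cycle time are placeholder guesses that would need tuning on real hardware, and `fire_plan` and `AmmoCounter` are hypothetical names. It only computes when each relay would switch; actually driving the relays is left out.

```python
# Rounds per trigger pull for each select-fire mode named in the post.
FIRE_MODES = {"single": 1, "burst3": 3, "burst5": 5}


def fire_plan(mode, spin_up_s=0.5, cycle_s=0.15):
    """Return (time_in_seconds, action) events for one trigger pull.

    spin_up_s: assumed time for the flywheels to reach speed.
    cycle_s:   assumed time for the pusher to feed one dart.
    """
    rounds = FIRE_MODES[mode]
    pusher_off_at = spin_up_s + rounds * cycle_s
    return [
        (0.0, "flywheel_on"),
        (spin_up_s, "pusher_on"),
        (pusher_off_at, "pusher_off"),
        (pusher_off_at, "flywheel_off"),
    ]


class AmmoCounter:
    """Estimates remaining darts from trigger pulls, not from a sensor."""

    def __init__(self, capacity):
        self.remaining = capacity

    def fire(self, mode):
        """Subtract the rounds for this mode and return how many were counted."""
        fired = min(FIRE_MODES[mode], self.remaining)
        self.remaining -= fired
        return fired


if __name__ == "__main__":
    ammo = AmmoCounter(capacity=18)  # 18 is an example magazine size
    for when, action in fire_plan("burst3"):
        print(f"{when:.2f}s {action}")
    ammo.fire("burst3")
    print("estimated darts left:", ammo.remaining)
```

Because the pusher is only timed, a jam or a short magazine makes the count drift, which is why a sensor is the better long-term fix.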

The turret also has a simple LED light. The bottom Pi Cam is positioned low on the front of the drone on a servo; I found it was helpful to be able to check the wheels for obstructions/proximity when driving it around with the 3D FPV goggles on.

The ultrasonic sensors handle obstacle avoidance, sending a command to the Xbox controller to rumble when M1 is within 6" of an obstacle. It will immediately halt and reverse when it gets too close to an obstacle (about 2"). I've been working on a way to get some on-screen display data going so you'd have up-to-date readings about proximity to obstructions when wearing the 3D goggles.
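The two distance thresholds above can be sketched as a small decision function. This is a hedged illustration, assuming the three sensor readings are already converted to inches and that the nearest reading decides the response; `proximity_action` and the action names are hypothetical, and reading the sensors or talking to the controller is not shown.

```python
RUMBLE_DISTANCE_IN = 6.0   # rumble the controller within 6"
REVERSE_DISTANCE_IN = 2.0  # halt and reverse at about 2"


def proximity_action(distances_in):
    """Pick a response from a list of sensor distances in inches.

    Assumes the nearest obstacle across all sensors decides the action.
    """
    nearest = min(distances_in)
    if nearest <= REVERSE_DISTANCE_IN:
        return "halt_and_reverse"
    if nearest <= RUMBLE_DISTANCE_IN:
        return "rumble"
    return "clear"


if __name__ == "__main__":
    print(proximity_action([24.0, 30.0, 18.0]))
    print(proximity_action([24.0, 5.0, 18.0]))
    print(proximity_action([1.5, 5.0, 18.0]))
```

The same nearest-distance value could also feed an on-screen readout for the goggles.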

[// working on diagram drawing //]