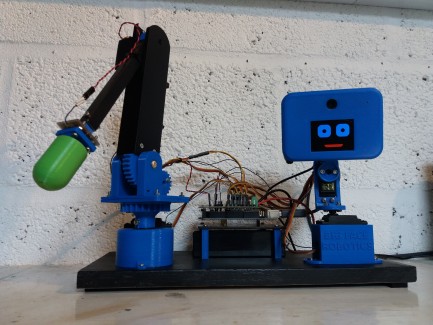

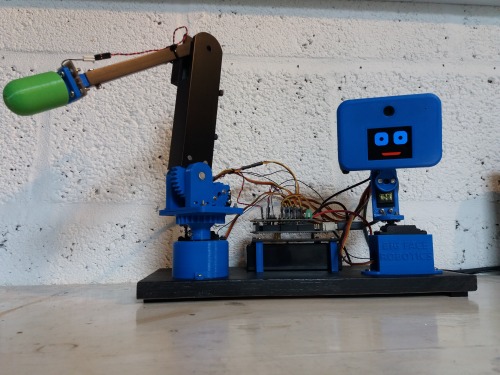

Desktop Robot Head and Arm

Hi everyone. It's been a long time since I last posted a robot project on here, but I wanted to share my latest project with you all. I have always enjoyed making various types of robot, but I have a particular soft spot for desktop robots. I like the idea of a little robot pal sat next to me on the desk that I can develop when I get the spare time.

I have made several humanoid-type robots in the past, along with many mobile robots. I didn't want to make another humanoid torso-type robot, so with this project I have taken a slightly different approach. Inspired by so-called social robots, I wanted to design and build a robot head with a face that could express emotions and interact with me or another person. So I did. I designed and 3D printed the required parts and made a robot head that moves using 3 servos. I have made pan/tilt mechanisms in the past, but I added the third servo to give the head the ability to roll as well. I think this ability to roll the head from side to side allows the robot to convey more emotion in its movements. The robot's head contains an Adafruit 2.2" TFT screen so that I can display a face, and it also has a webcam mounted in it.

I am using an Arduino Mega with a custom-made interface board to control the servos and write to the TFT screen. I have added a connection to each servo's internal potentiometer, which is read as an analogue input by the Arduino. This gives me closed-loop control of each servo and also lets me read the servo position, which I hope to use for teaching sequences of movements to the robot.
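As a rough illustration of the closed-loop idea (not the code running on the robot), here is a minimal Python sketch of turning a raw potentiometer reading into an angle and working out a correction. The function names, the calibration values (`adc_min`, `adc_max`), the gain and the deadband are all assumptions made up for the example; real values would have to be measured for each servo.

```python
def adc_to_angle(reading, adc_min=100, adc_max=500, angle_min=0.0, angle_max=180.0):
    """Linearly map a raw potentiometer reading to an angle in degrees.

    adc_min/adc_max are hypothetical calibration readings taken at the
    two ends of the servo's travel.
    """
    fraction = (reading - adc_min) / (adc_max - adc_min)
    # Clamp so that noisy readings outside the calibrated range stay valid.
    fraction = min(1.0, max(0.0, fraction))
    return angle_min + fraction * (angle_max - angle_min)


def correction_step(target_angle, measured_angle, gain=0.5, deadband=1.0):
    """Return the adjustment (in degrees) to add to the commanded angle.

    A simple proportional step: small errors inside the deadband are
    ignored so the servo does not hunt around the target.
    """
    error = target_angle - measured_angle
    if abs(error) <= deadband:
        return 0.0
    return gain * error


if __name__ == "__main__":
    measured = adc_to_angle(300)
    print(measured, correction_step(100.0, measured))
```

The same mapping also covers the teaching use case: with the servos unpowered, the head can be posed by hand and the converted angles recorded as a stored position.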

To accompany the robot head, I have also designed and built a simple robot arm. I have both of these mounted on the same base plate so they now form one unit. The arm is there to extend the robot's interaction with its environment. The arm uses 3 servos, again with the potentiometers connected to analogue inputs. There is no gripper on the end of the arm at the moment. I have designed a simple switch that connects to the end of the arm so that the robot can detect when it has touched something.

I am currently working on the code for the Arduino and the Python scripts running on a controlling PC. I have started work on a user interface to manually control the servos, get servo positions and grab images from the camera. Whilst the mechanical build is essentially finished, I have a lot to do to get this robot doing something fun or interesting, but I now have a solid platform to begin development.

To record my progress I have started making a series of YouTube videos to share what I am doing and how I am implementing some of the functionality. I regret not starting these videos at the beginning of the project, but better late than never. If anyone reading this would like me to cover any aspect of the project in future videos, I'd be more than happy to do this.

I also post progress updates on my projects on my blog, which can be found at www.bigfacerobotics.com

14/10/17

I've started playing around with having the robot replay sequences of stored positions, along with adding some more facial expressions to see how the head movements convey emotions (see video Part 4 above). I'm pleased with the result, but more work is needed on the GUI so that the sequences can be programmed and replayed at the click of a button. I am also planning to start on some machine vision work soon so that the robot can react to its surroundings.
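To give an idea of what replaying stored positions can involve, here is a small Python sketch that expands a list of stored poses into intermediate frames using linear interpolation, so the head moves smoothly between them. This is only an assumed approach for illustration; the pose format (one angle per servo, in degrees) and the names `interpolate_pose` and `expand_sequence` are hypothetical.

```python
def interpolate_pose(start, end, fraction):
    """Blend two poses; fraction 0.0 gives start, 1.0 gives end."""
    return [s + (e - s) * fraction for s, e in zip(start, end)]


def expand_sequence(keyframes, steps_per_move=4):
    """Turn stored poses into a longer list of in-between frames.

    keyframes is a list of poses, each pose a list of servo angles
    (assumed order: pan, tilt, roll).
    """
    if not keyframes:
        return []
    frames = [list(keyframes[0])]
    # Walk through consecutive pairs of stored poses.
    for start, end in zip(keyframes, keyframes[1:]):
        for step in range(1, steps_per_move + 1):
            frames.append(interpolate_pose(start, end, step / steps_per_move))
    return frames


if __name__ == "__main__":
    stored = [[90, 90, 90], [110, 90, 70], [90, 100, 90]]
    for frame in expand_sequence(stored, steps_per_move=2):
        print(frame)
```

Each frame would then be sent to the servos at a fixed interval. Changing `steps_per_move` changes how fast a move plays back, which is one simple way to make the same sequence look calm or excited.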

14/01/18

I have added some more videos documenting the build of this robot. These include face detection and tracking by the robot head, and the detection and tracking of coloured objects. I have also modelled the robot arm in 3D space and, combined with readings from the sonar sensor, I have been able to build a point cloud of the robot's surroundings. The most recent video I have made shows this in action.

https://www.youtube.com/watch?v=Jf2UzjYeCnQ&t=15s

This was a proof of concept and a bit of fun, and it should help with the next step of the project, which is combining the sonar with vision: using the webcam and OpenCV to detect objects of interest and store their location in 3D space.
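The point cloud idea can be sketched in a few lines of Python. This is a simplified illustration, not the code from the video: it assumes the sonar's pose has already been reduced to a position plus a pan and tilt angle (in the real robot that would come from the 3D model of the arm), and the function names and units are made up for the example.

```python
import math


def sonar_to_point(range_cm, pan_deg, tilt_deg, sensor_origin=(0.0, 0.0, 0.0)):
    """Convert one sonar range reading into an (x, y, z) point in cm.

    pan is measured in the horizontal plane from the x axis and tilt is
    measured up from horizontal (both are assumed conventions).
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    horizontal = range_cm * math.cos(tilt)  # length projected onto the floor plane
    x = sensor_origin[0] + horizontal * math.cos(pan)
    y = sensor_origin[1] + horizontal * math.sin(pan)
    z = sensor_origin[2] + range_cm * math.sin(tilt)
    return (x, y, z)


def sweep_to_cloud(readings, sensor_origin=(0.0, 0.0, 0.0)):
    """Build a point cloud from (range_cm, pan_deg, tilt_deg) readings."""
    return [sonar_to_point(r, p, t, sensor_origin) for r, p, t in readings]


if __name__ == "__main__":
    sweep = [(50.0, pan, 0.0) for pan in range(0, 181, 45)]
    for point in sweep_to_cloud(sweep):
        print(tuple(round(v, 1) for v in point))
```

A sonar has a fairly wide beam, so each point is really a rough estimate of where the nearest surface is rather than a precise measurement.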

Desktop Robot

- Actuators / output devices: RC servos

- CPU: Arduino Mega

- Operating system: Linux (Ubuntu)

- Power source: 6V

- Programming language: C and Python

- Target environment: indoor