Processors

- Mele Windows Stick PC with 8GB RAM and 256GB disk.

- Arduino Mega ADK w/ Sensor Board

Controller Boards

- SSC-32U Servo Controller - one side is powered at 7V, one side is 6V

- Sabertooth 2x5 Motor Controller

Sensors:

- Orbec Astra S 3D Depth Camera (on top of head)

- Color Video

- Depth Video

- Intel T265 Tracking Camera (on top of head) - for pose estimation

- 2 Black and White Fisheye Camera

- Integrated IMU, SLAM, and Pose Output

- 2 Adafruit AMG8833 Thermal Cameras (8x8 Pixel) - 1 in each ear, I plan to ugrade these to 36x24 models soon.

- Devantech SRF-01 Sonars (12 Installed in Body + 2 more planned for ears) - 12 in 4 pods of 3 each in lower body, 1 in each ear

- CMPS010 Tilt Compensated Compass - provides heading, pitch, and roll

- Sharp IR Distance Sensors (2)

- Microphones (1 in Body) - this is used to analyze sound frequencies, which can be displayed on the front LED ring in the chest.

- Bluetooth Keyboard, Mouse, Game Controller, and Earpiece - the earpiece can also be used to issue voice commands on an "open mic" mode and is processed through a speech-to-text transformer model...still not that reliable though.

- Android-based voice remote with Google Speech-to-Text - this is my normal means of talking to the bot and is mostly reliable, even for fairly complex sentences

Actuators:

- 2 12V Planetary Gear Motors for Moving the main sprockets and tracks

- 8 Actuonix Linear Actuators

- 1 in each hip to move each "leg" forward and back, which results in the core moving forward and back or lifting a leg.

- 2 in the core for tilting the core left or right by around 30 degrees or moving the whole core up or down by 4 inches. The front/back/left/right tilt is what allows the bot to reach objects on the ground.

- 2 in each upper arm.

- 4 Servo-City Gearboxes and Servos - Shoulders (2), Neck (2)

- 8 7-Volt Hobby Servos - Ears(2), Arms (2 in each forearm), Grippers (2)

Other

- 24-LED NeoPixel Ring in Chest - typically is responding to sound frequencies from microphone

- 4 7-LED NeoPixel Jewels - for front and back driving lights, brake lights, and turn signals

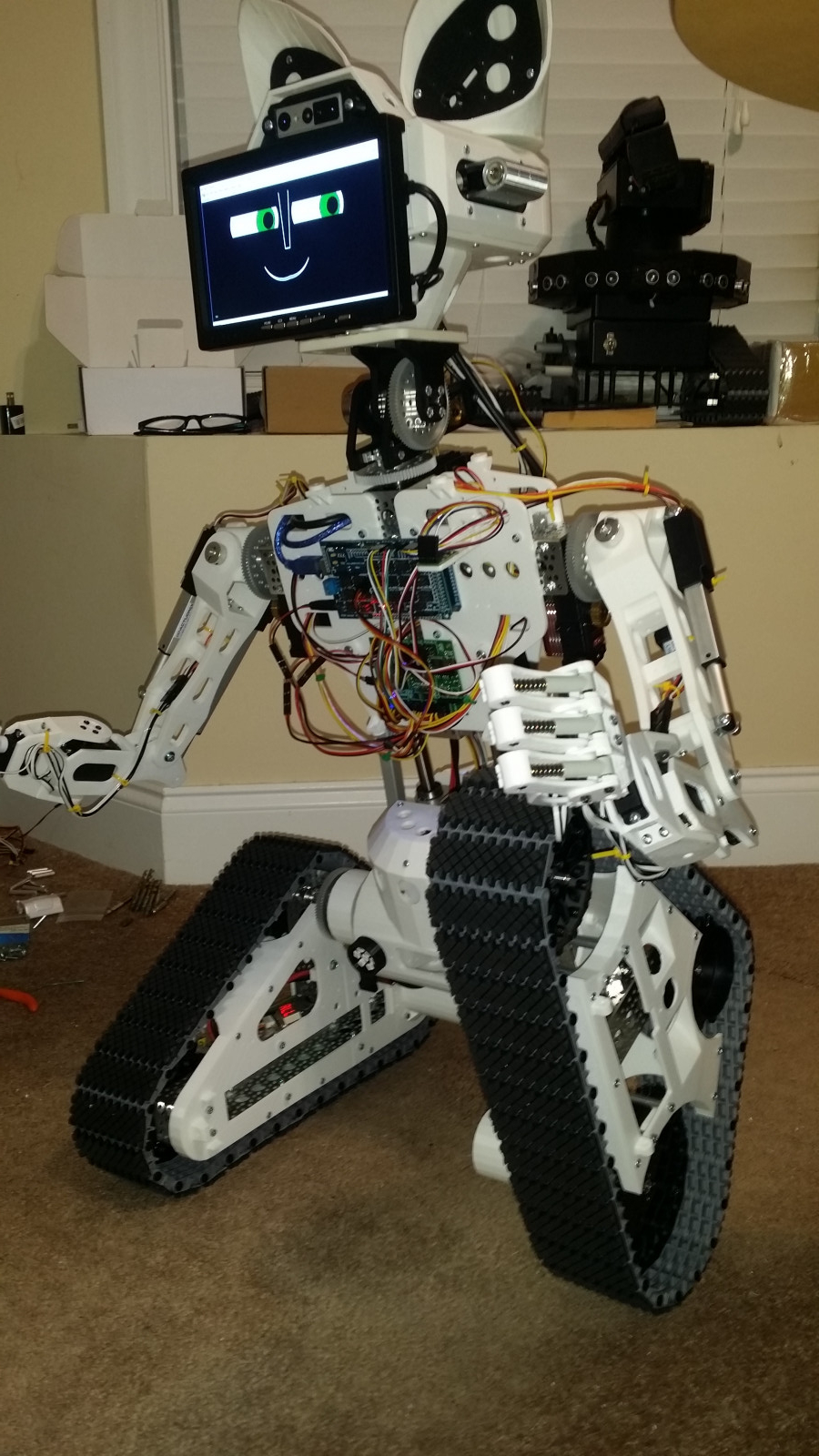

- 5in HDMI screen with Animated Face - eyes, mouth. Notably, lip movements are synced with syllables of words using visemes.

- Speaker - built into 5in HDMI screen on face, used for voice and music playing.

- 2 Lasers - one on each side of the head, not connected yet as my last bot shot me in the eyes a lot.

Power Supply

- From 1-4 4C Lipo Batteries (in parallel) stored in 4 different compartments in the inner and outer part of each track housing. The bot can run on 1 battery but can hold up to 4.

- 7 UBECs of various voltages (12V, 9V, 7V, 6V, 5V)

The primary focus of this bot is in being smart and interactive. This takes many forms. I will highlight a few areas here and add more over time.

Kinematics Engine - Forward and Inverse (NEW)

The bot has 22 degrees of freedom in its movement, so figuring out how to reach out and touch something, look at at, or point to it, is a complex task. For inverse kinematics, the bot uses a database of around 250K pre-calculated solutions for relative positions all around the bot. The bot then selects the optimal solution for how to move one of its end effectors from its current position to a desired position in space. The key is selecting the best and most natural looking solution when multiple exist (often around 20-30 for a given goal). This is down by choosing the solution that involves the least joint movements in degrees...where each joint is also given a weight (cost) so that larger heavier joints that require more power (like the hips) will move less, and lighter joints like forearms and wrists will move more. This algo combined with the db is fast and creates natural transitions. These are two of the significant challenges in the inverse kinematics space when lots of DOFs are involved, along with handling constraints for each joint.

Object Recognition Engine (NEW)

Some objects can be recognized by handing the entire color image to a model (like YOLO v3), or a Face Detection. If a face is found, there are off-the-shelf models for age, gender, and emotion detection.

In addtion, Ava now can determine regions of interest from the color cam and the depth cam. For the color, ava picks out objects that are lighter or darker then the background (when looking towards the ground), or objects that are significantly different in the HSL color space. For depth, regions of interest are determined by subtracting a pre-saved background image (for the given pose the bot is in) from the incoming images and doing some other filtering to determine what are objects and what is background.

Once regions are determined and de-duped or aggregated, classification can be performed on each region using a variety of techniques.

Ava has a DB and several libraries of images for household objects, playing cards, spare parts, tools in my office, etc. Her DB stores a signature for each image, with its size, color signature, and other useful data. At runtime, Ava can select similar objects from this DB. The inherent size and color matching is very important as many of the follow on techniques like point/feature matching only work on greyscale images. My pre-matching based on color percentages, the accuracy and efficiency is greatly improved. Also, the length/width and the ratio of them is a valuable filter in this process for improving accuracy. Later on, I will probably incorporate a filter based on the variance of depth for the object. Once the list of "possible" matches is found, Ava then uses point/feature descriptor matching to find the best match from that list, best is the image with the highest number of matching points, or a high percentage of points matched.

There is one final step for extensibility. If the "winner" falls within a particular library that has a "custom" recognizer associated with it, the custom module can then evaluate and refine the classification further. This is used for improving the accuracy of recognizing the various playing cards in the video. The custom module uses template matching to count the symbols on each number card to make sure a "5 of clubs" is really a 5 and not a 6, and really clubs and not spades.

Advanced Neural Net Architecture

Conceptually, Ava's brain is a neural net of neural nets. Each node in the high-level brain can accept inputs, have custom activation rules, and send outputs to other nodes. Every node has a name and one or more aliases. Any node can also be autonomous. Internally each node can have its own internal neural net, fuzzy logic rules, verbal "English Paragraph" script, or procedural code.

Natural Language Orchestration

People can talk to the bot using language. Because every node in the brain has a name, a person can talk to any node and get, set, increase, decrease, etc. its value. In the same way, nodes can talk to each other...using natural language. This allows complex operations (like coordinated movements) to be performed using simple sentences and paragraphs.

Self-Learning

Ava is self-learning. It builds knowledge graphs from linked open data sources on the web (such as dbpedia, wikipedia, concept-net etc), databases, APIs, etc. Ava integrates all the data for any given topic into a single graph. Ava can build a graph around people, places, users, or any given word or topic.

Natural Language Question Answering & Comprehension

Based on the knowledge graphs, Ava can then answer natural language questions. Ava can also answer questions based on text sources using a "transformer" BERT-based model for question answering. Ava can also compare and contrast topics..."Compare hydrogen and helium."

Machine Learning Models

Currently, this bot uses several off-the shelf machine learning models (from the Intel Model Zoo or HuggingFace) to do object recognition (like Yolov3) and other visual functions as well as many natural language-based functions. Over time, more models will be added. Some of these models run on the neural compute stick and other run on the LattePanda. For NLP, I make heavy use of the spacy library and a variety of large transformer models for questions answering, dialog, sentiment, speech-to-text, etc. Some of these run on bot and some run off-bot through an API.

Core Movement

Using 4 linear actuators and a slide mechanism with 2 8in stainless steel tubes, the core can move front to back, up to down, and side to side, and hold almost any position indefinitely, even with a loss of power.

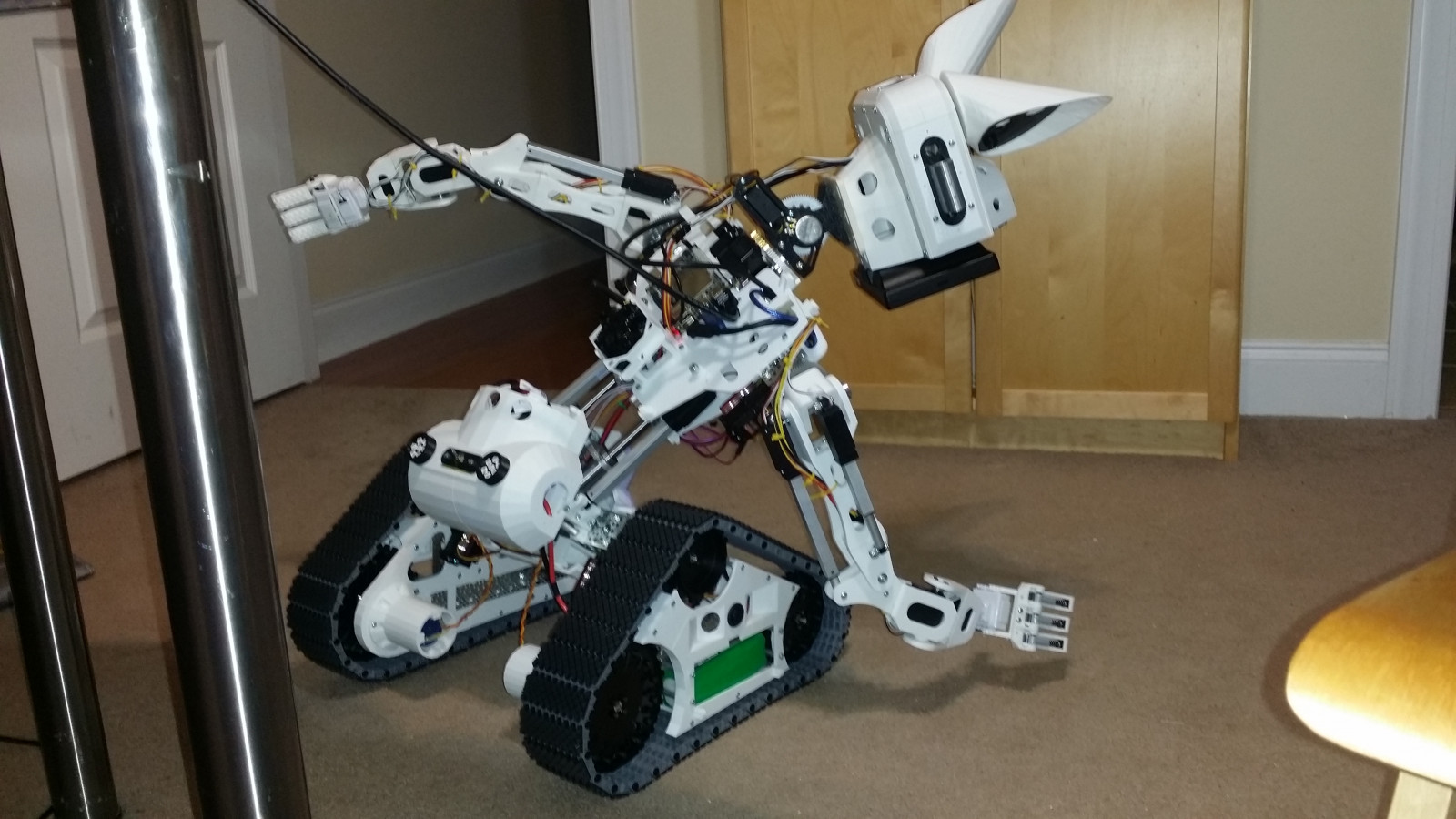

This was an early pic showing how Ava can reach for objects on the ground. To do this, the core leans forward and to one side. This brings the head and camera over the object in question and puts the hand directly next to an object.

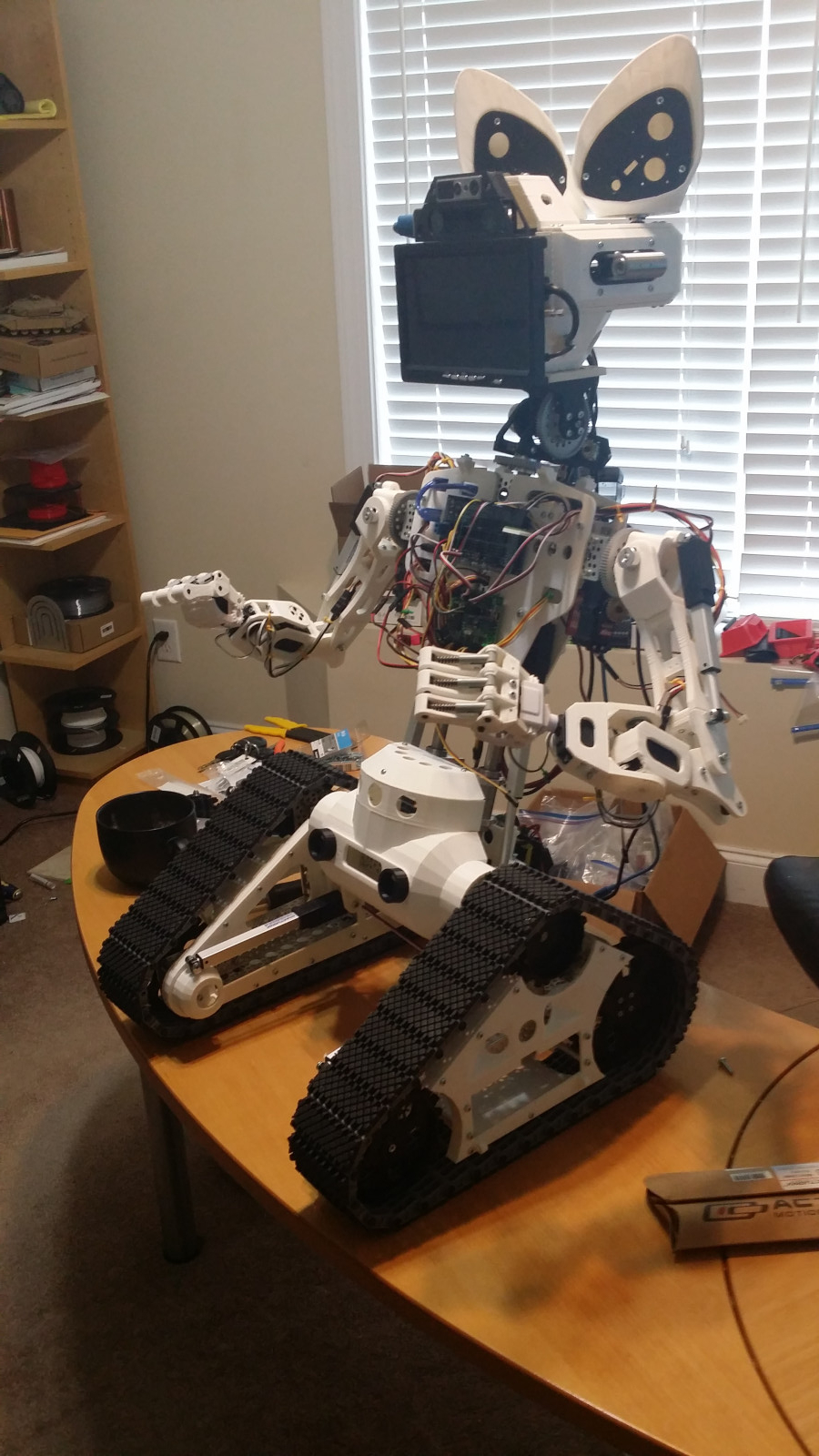

Here is another pic without the skin...doing a Usain Bolt pose:

This shows the side to side tilt:

This pic shows the bot leaning back.

This is one I call "The Heisman" showing how the bot can lift a leg on one side or the other.

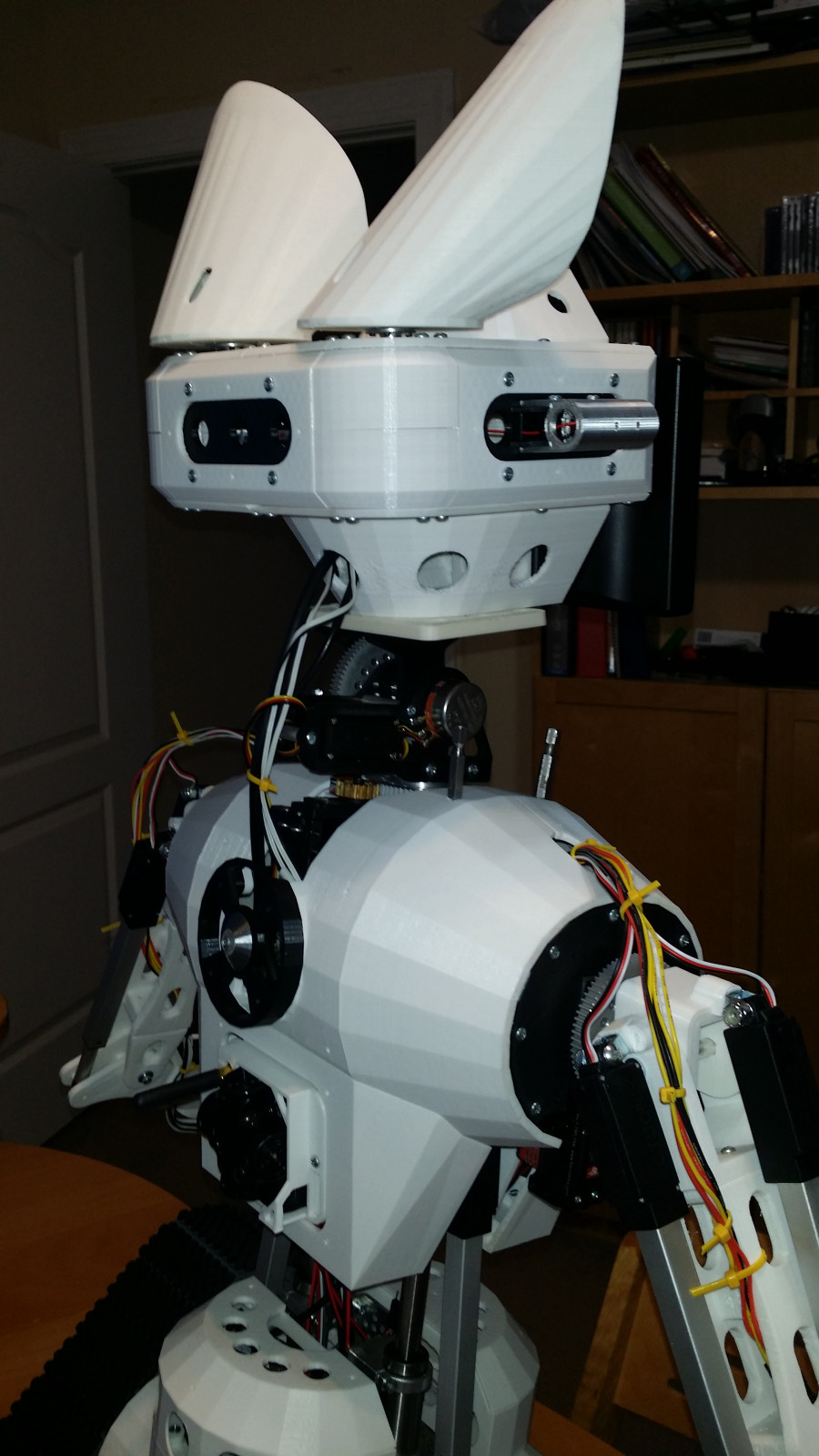

Head and Cameras

The two main camers are just about the face. The thermal cameras are in the ears.

Here is a closeup of the main cameras. The upper one in the Orbbec depth camera. The lower one is the Intel T265 Tracking cam with SLAM. You can see the two fisheye lenses. This sensor uses fisheye lenses to give the onboard SLAM more landmarks at more angles in order to localize more reliably.

Here is a pic of the back of the head, shown with the ears rotated back.

This is an older pic as well. At the time I was using a localization system from Marvelmind, seen below sticking out of the lower back in black. I have since removed it now that I have the T265 SLAM cam to estimate pose and location.

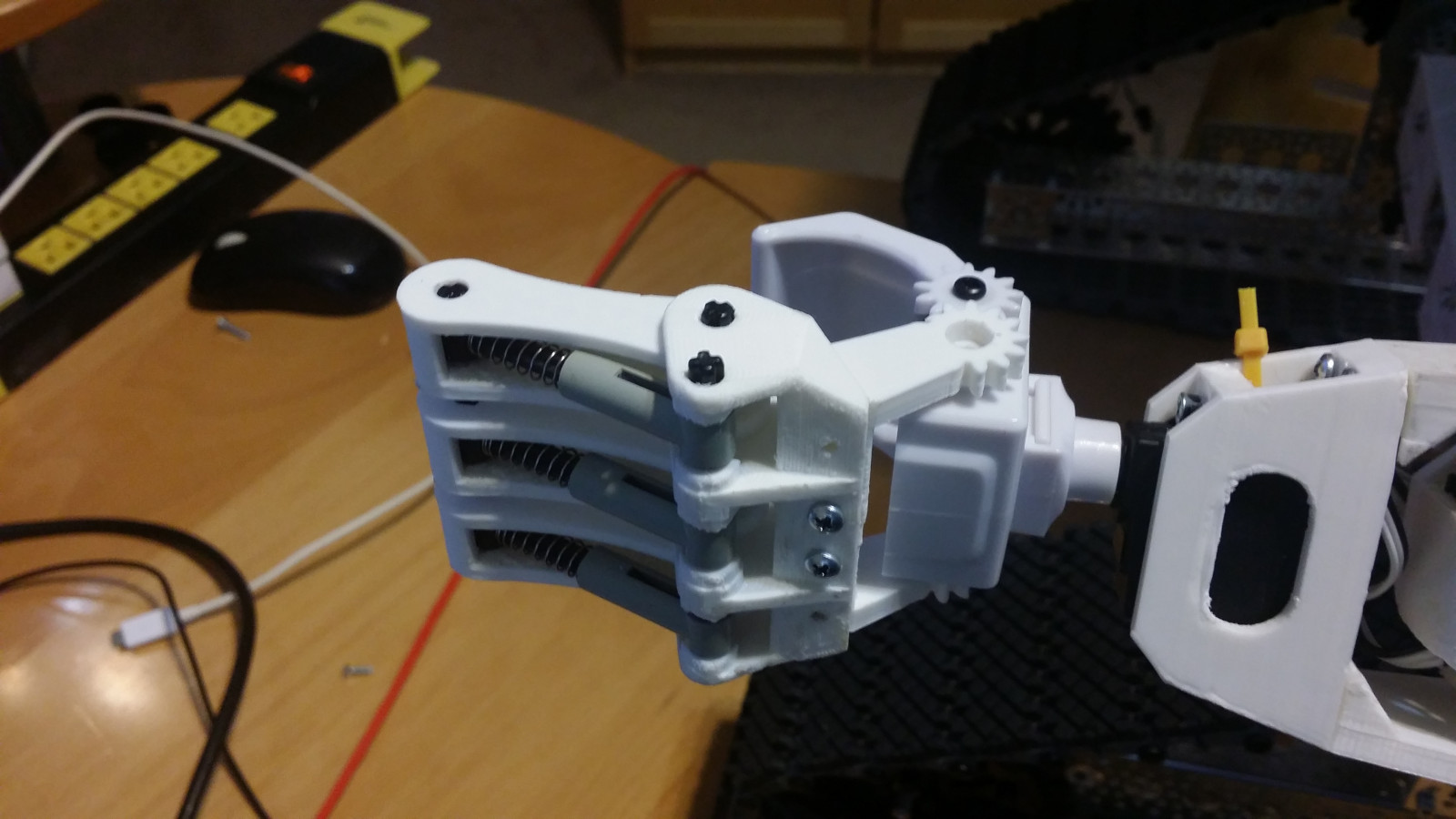

Arms and Hands

This is an upper arm...before I installed the hands.

Here is a hand. Note the lego spring shocks in each finger.

Outer Fairings, Lighting, and Body Sensors

The front LED ring has multiple modes. I usually have it in audio spectrum anayzer mode, so it lights up in real time with music, voice, or other sounds in the room.

This pic shows the front 3 sonars installed. The other 11 are or backorder. There will be 3 front, 3 or each side, 3 in the back, and 2 in the ears. You can also get a glimpse of the driving lights in this pic, for driving, brakes, and turn signals.

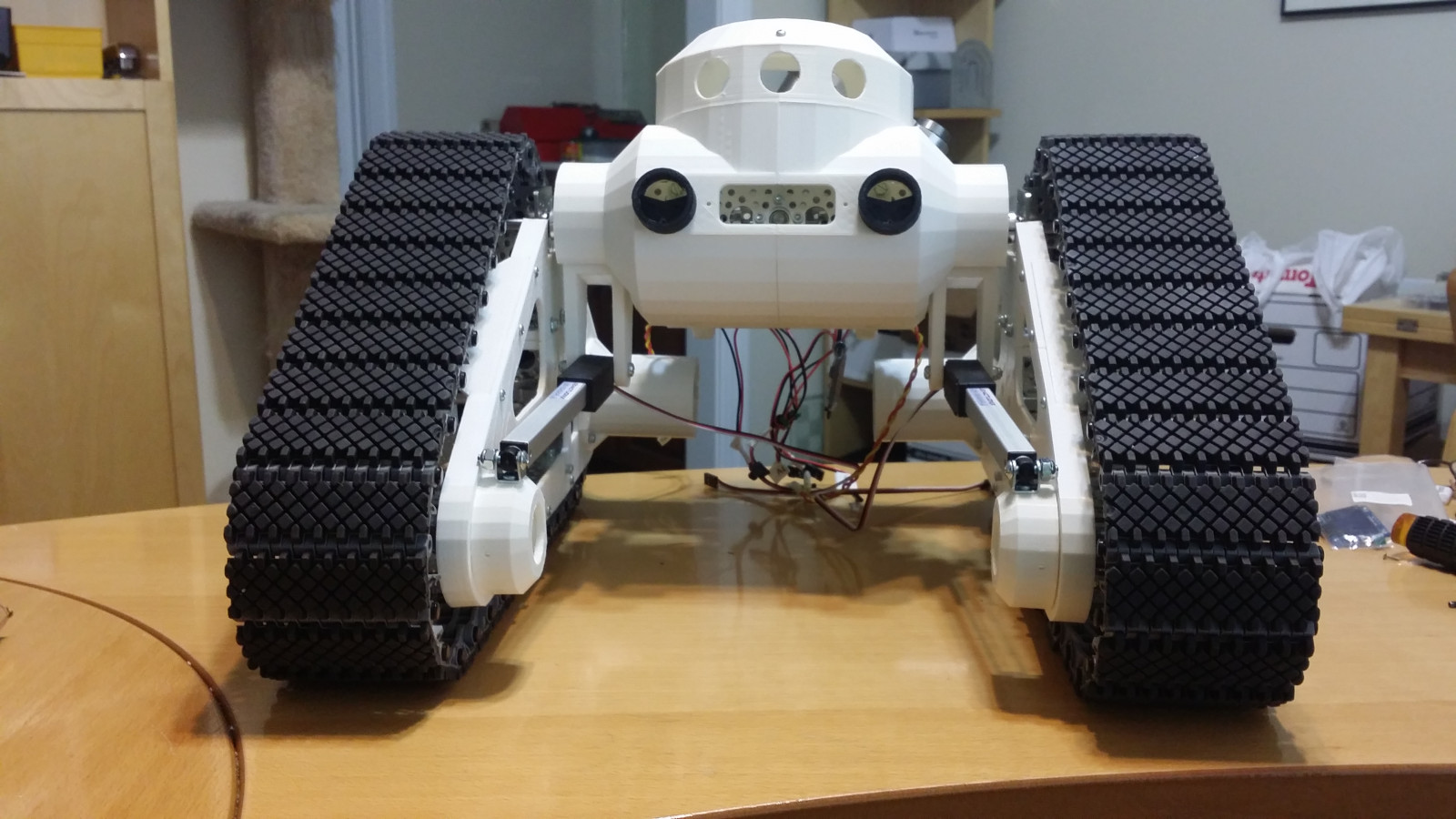

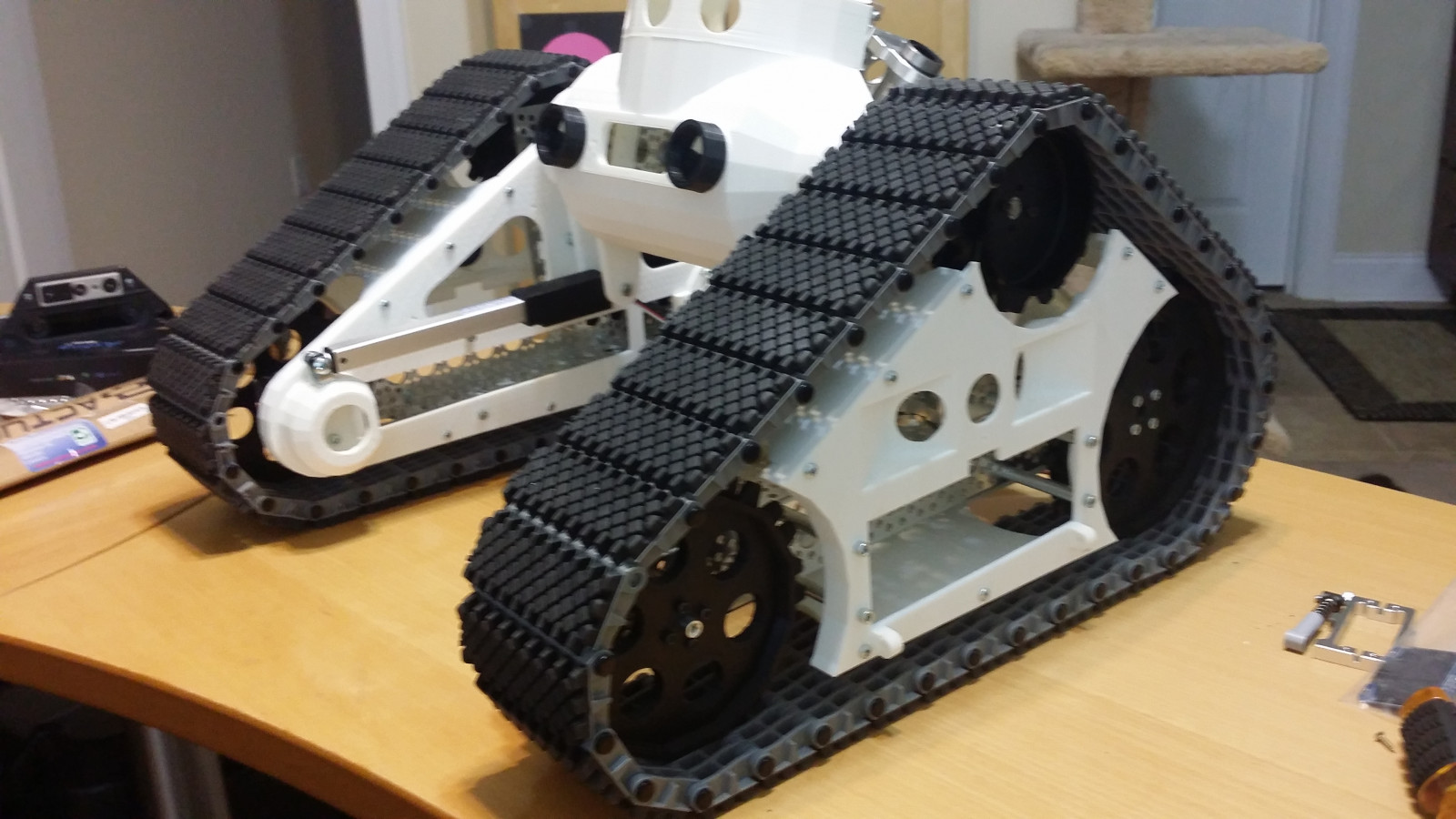

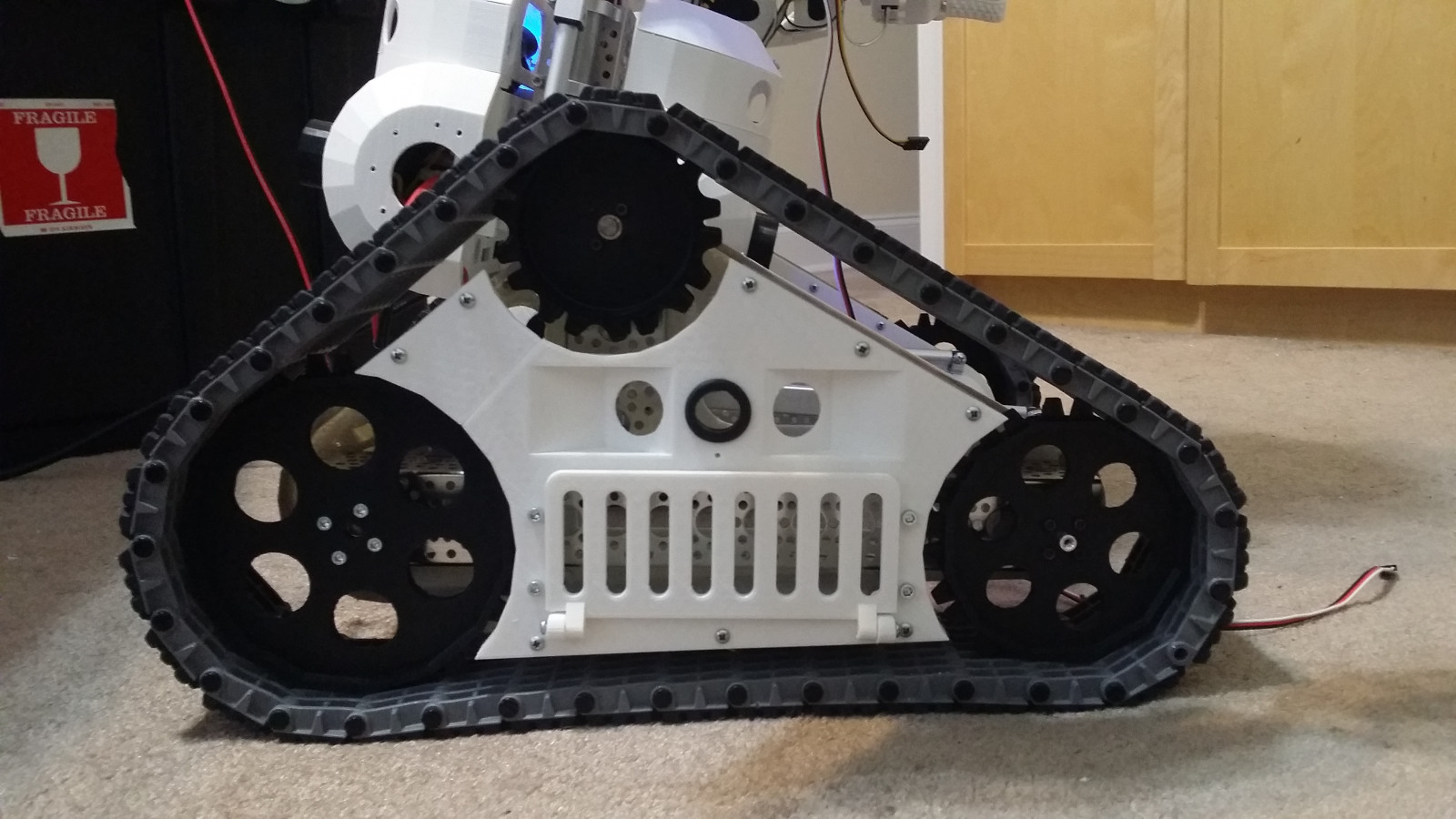

Lower Body & Tracks

Here is a picture of the lower body right after I replaced the hip servos gearboxes to use linear actuators instead.

The bot uses 3in wide Lynxmotion tracks, with custom designed and 3D printed sprockets.

This pic shows the outer battery compartment doors installed.

Processors

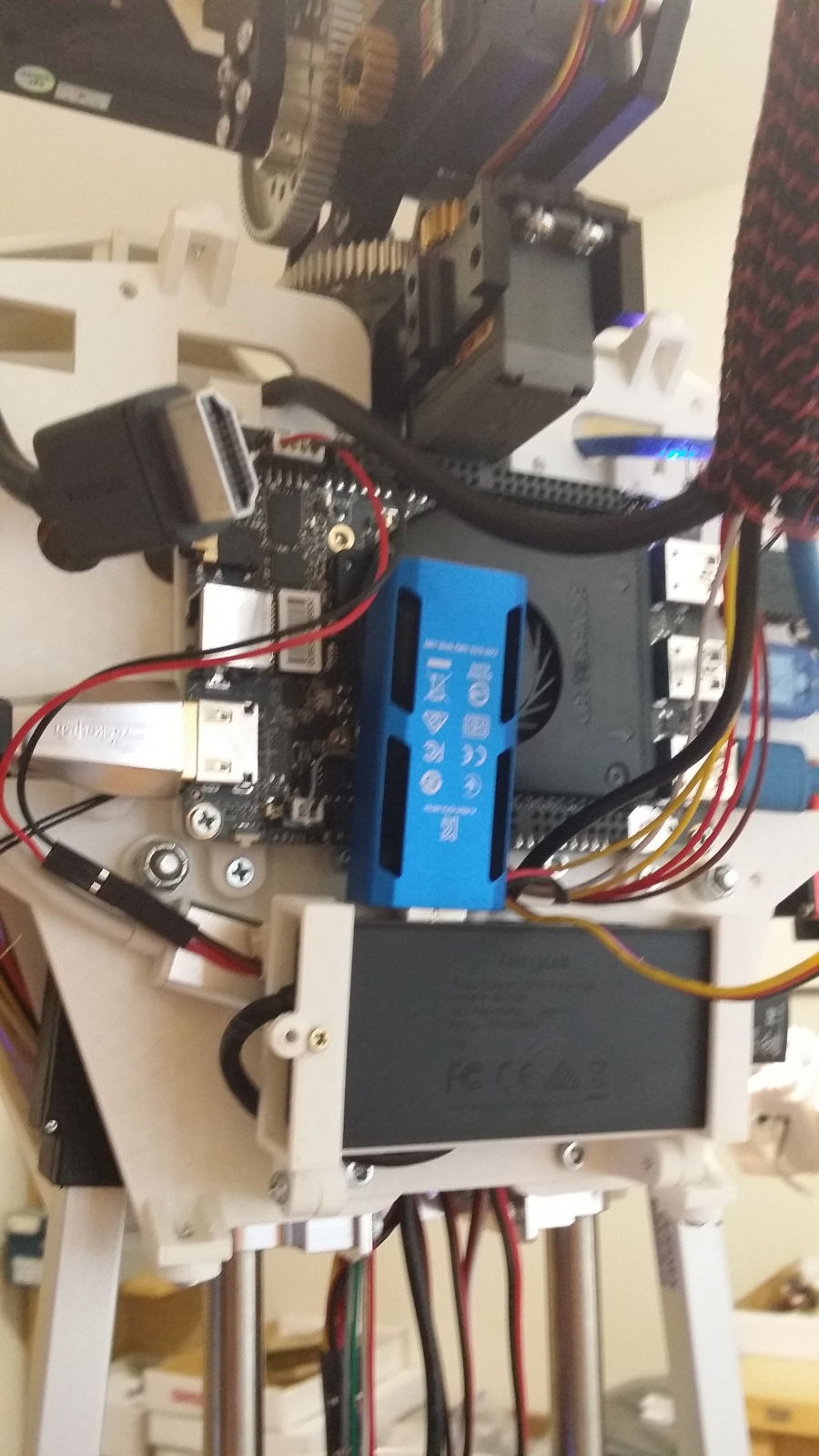

This (rotated) pic from the upper back of the bot shows the LattePanda Alpha, USB Hub, and Intel Movidius Neural Compute Stick 2 (in blue). The SSD mounted below the Panda out of sight.

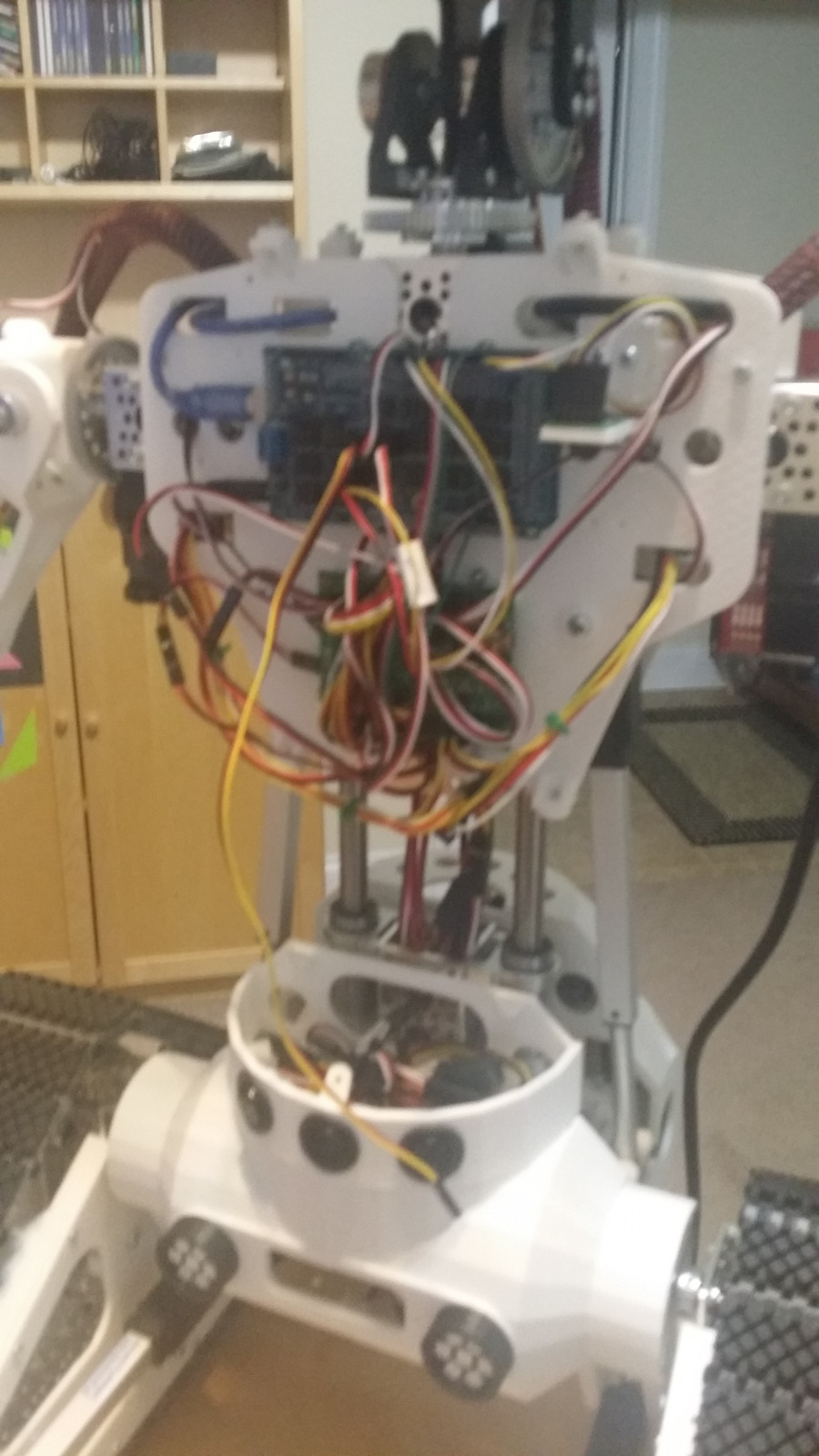

This pic from the chest area, shows the Arduino Mega ADK, with a sensor board on top, and the SSC-32u servo controller board.

The motor controller and power regulators are buried in the rear buttocks area. I'll post a pic next time I open that area up again.

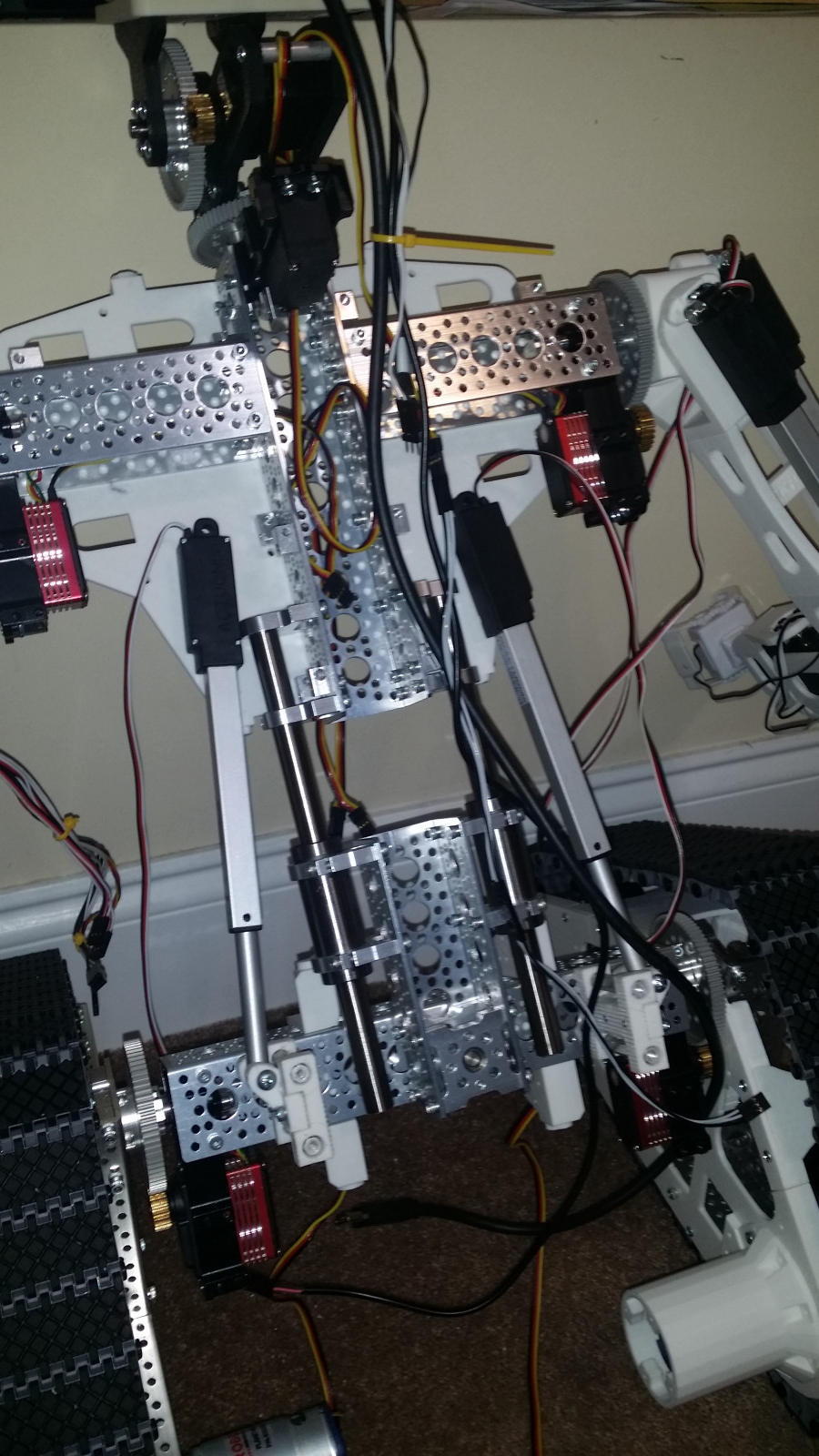

Aluminum Endo-Skeleton

This is an old shot of some of the internal aluminum skeleton (ServoCity parts mostly). The linear actuators in the pic give the core its side tilt and/or up/down movement. I ordered some custom 8in x 12mm hollow rods for the slider to save weight. In this shot, you can see gearboxes operating the hips, I have since replaced the hip actuation to use Actuonix linear actuators to be more steady and save power.

An early skeleton pic...with her 2 older sisters.

Demo of Depth and Kinematics to Detect and Touch Nearby Objects:

Demo of Ava Playing a Card Game using new and improved Object Recognition Engine that matches size, color, points, features, and templates all at the same time.

Navigation video showing SLAM and A* Pathfinding and Mapping, untethered on battery power.

Video:- First with new brain!

First Video - with old Latte Panda Alpha brain