Using V-REP, I designed a very simple simulation environment, which is essentially a large floor with a track on top of it. The track was designed in Fusion 360 and exported as STL. Generating such track shapes automatically, for example from a simple 2D drawing, would be a nice addition.

The car is based on the V-REP example car "simple Ackermann", whose steering mechanics resemble those of a real car and reduce wheel slip. (Ackermann steering ensures that the rotational force applied to both wheels results in rolling instead of forced slipping. If you imagine a car driving around a circle, the driven path really consists of two concentric circles, one for each wheel. Because the wheel on the smaller, inner circle steers slightly more than the outer wheel, both wheels roll properly.)
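The geometry described above can be written down in a few lines. The following is a minimal sketch, not the code of the V-REP model: the function name and the dimensions are made up for illustration, and the turn radius is assumed to be measured from the turn centre to the midpoint of the rear axle.

```python
import math

def ackermann_angles(wheelbase, track_width, turn_radius):
    """Return (inner, outer) front-wheel steering angles in radians.

    turn_radius is the distance from the turn centre to the midpoint of
    the rear axle and must be larger than half the track width.
    """
    if turn_radius <= track_width / 2:
        raise ValueError("turn radius must exceed half the track width")
    # The inner wheel follows the smaller circle, so it needs the larger angle.
    inner = math.atan(wheelbase / (turn_radius - track_width / 2))
    outer = math.atan(wheelbase / (turn_radius + track_width / 2))
    return inner, outer

# Hypothetical dimensions in metres, not taken from the V-REP model.
inner, outer = ackermann_angles(wheelbase=0.26, track_width=0.16, turn_radius=1.0)
print(f"inner {math.degrees(inner):.1f} deg, outer {math.degrees(outer):.1f} deg")
```

The tighter the turn, the larger the difference between the two angles; for a very large radius both angles approach the same small value.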

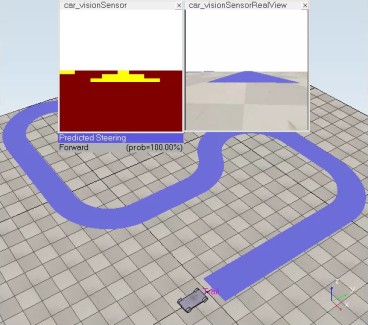

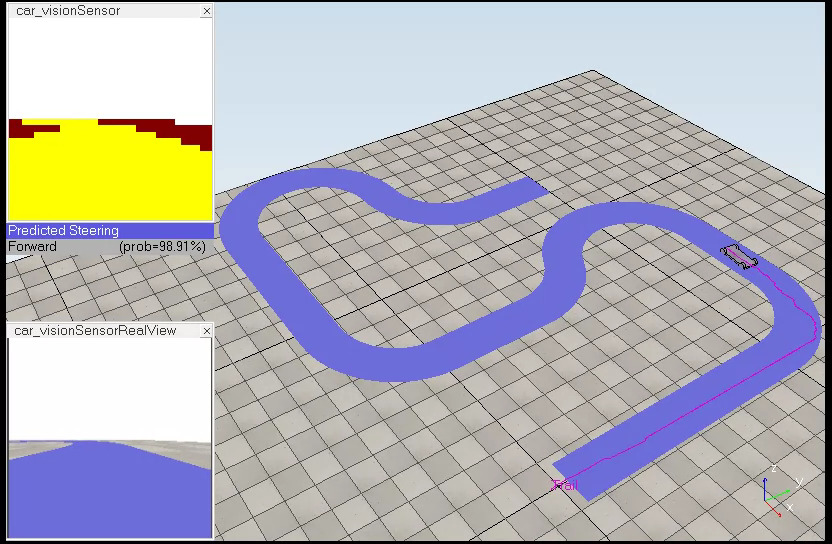

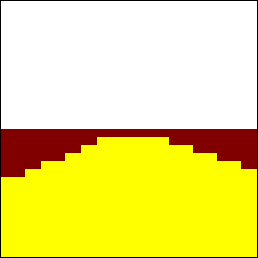

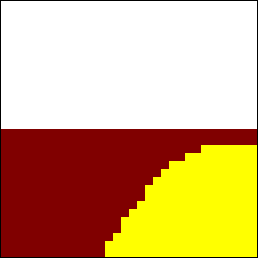

I added two vision sensors to the robot car, one that shows the original "true" world image (bottom left in picture above), and another that shows a filtered view (top left in picture above), where each object has a simple color that ignores lighting or other texture variations.

This was meant to make debugging the neural net easier later on, and to reduce noise and irrelevant variations that would complicate training. (I have yet to experiment with debug visualizations, such as those produced by innvestigate.)

I also added an embedded graph that leaves a trace of the car in the scene, so that the path the car took is more obvious. Between the two windows for the vision sensors, there is a label that displays the steering command predicted from the current frame of the car_visionSensor. The percentage is the neural net's confidence that it categorized the current frame correctly.
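How such a label could be derived from the network output is sketched below. This assumes the network returns one raw score (logit) per steering category and that the displayed confidence is the largest softmax probability; the post does not spell this out, and the function names and the order of the categories are illustrative.

```python
import math

LABELS = ("left", "forward", "right")  # the three steering categories (assumed order)

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def steering_label(logits):
    """Return the most likely category with its probability as a percentage."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return f"{LABELS[best]} ({probs[best]:.0%})"

# Made-up scores for a single frame.
print(steering_label([0.2, 3.1, -1.0]))
```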

Finally, I added a script so that the car and some simulation parameters can be remote-controlled by a program external to V-REP, which I wrote in Python.

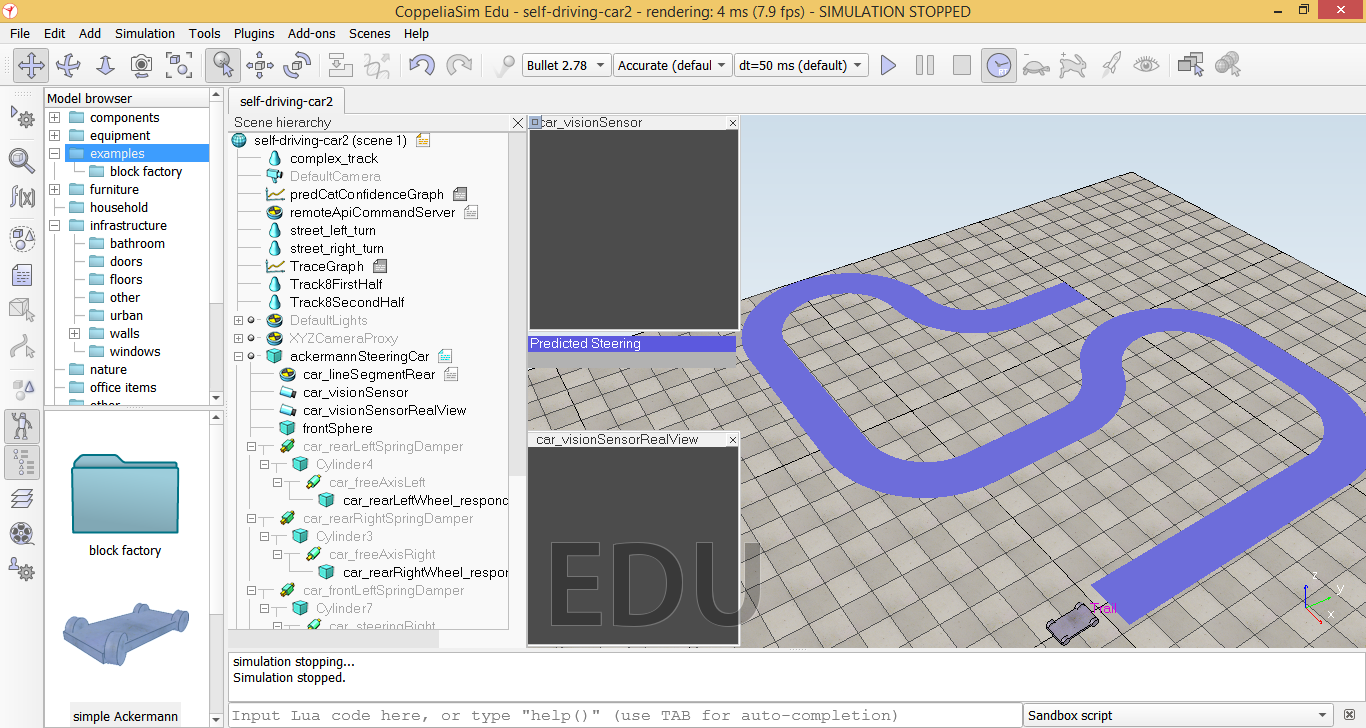

Here is a view of editing the world, from within V-REP:

The simulator made it possible to experiment with hardware parameters, such as camera FOV and positioning. It also made it possible to slow down the simulation, so that the neural network can finish predicting a steering command before the next camera frame arrives.
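The idea of letting the simulation wait for the prediction can be illustrated with a lock-step loop. The sketch below uses a stand-in `FakeSimulator` class and a hypothetical `slow_predict` function so that it runs without V-REP. The legacy V-REP remote API offers a synchronous mode for this purpose (`simxSynchronous` and `simxSynchronousTrigger`), but whether the original control program uses it is an assumption.

```python
import time

class FakeSimulator:
    """Stand-in for the real simulator; it only advances when step() is called."""

    def __init__(self):
        self.frame_index = 0

    def get_frame(self):
        return f"frame-{self.frame_index}"

    def step(self):
        self.frame_index += 1

def slow_predict(frame):
    """Hypothetical model call that takes a while to return."""
    time.sleep(0.01)
    return "forward"

def run_lockstep(sim, predict, steps):
    """Read a frame, predict, and only then let the simulation advance."""
    log = []
    for _ in range(steps):
        frame = sim.get_frame()
        command = predict(frame)      # may take arbitrarily long
        log.append((frame, command))  # the command always belongs to this frame
        sim.step()                    # simulation time advances only now
    return log

print(run_lockstep(FakeSimulator(), slow_predict, steps=3))
```

Because the simulator never advances on its own, a slow prediction only makes the run take longer in wall-clock time; it never causes a steering command to be applied to a stale frame.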

These were factors that affected my previous attempt, where I used a modified RC car that I let drive autonomously in the real world. It turns out that a large field of view, even if it distorts the camera image, is better, since it gives a larger view of the road. High latency in prediction, due to a lack of processing power and camera streaming from a Raspberry Pi to a laptop, also meant that steering commands would arrive too late. None of these factors is an issue in the simulation, which can simply be slowed down and synchronized as needed.

That way I could focus on the actual training issues.

Training data is obtained by manually driving the car in the robot simulator (V-REP) with the keyboard (arrow keys). Some convenience keys reset the car and put it in one of several standard starting positions, start/stop data collection, or engage automated driving once the model is trained. A Python program I wrote controls V-REP and reacts to keystrokes. It also stores each vision sensor frame it receives from the simulator and labels it with the direction the wheels are pointing in during that frame.
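The labeling step might look roughly like the sketch below. The dead-zone threshold, the sign convention (positive angle means left), the function names and the one-folder-per-label layout are all assumptions made for illustration; the post only states that each frame is labeled with the wheel direction.

```python
from pathlib import Path

DEAD_ZONE = 0.05  # radians; hypothetical threshold for "wheels pointing straight"

def label_for_steering(angle):
    """Map a steering angle (positive = left, an assumed convention) to a class."""
    if angle > DEAD_ZONE:
        return "left"
    if angle < -DEAD_ZONE:
        return "right"
    return "forward"

def frame_path(root, angle, index):
    """Build the file path for a frame, using the label as the folder name."""
    return Path(root) / label_for_steering(angle) / f"frame_{index:06d}.png"

# Example: the 12th frame, recorded while steering left.
print(frame_path("dataset", 0.3, 12))
```

Storing each class in its own folder is a common convention that many image-classification loaders understand directly, which is why it is used here.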

Example camera frame when driving left.

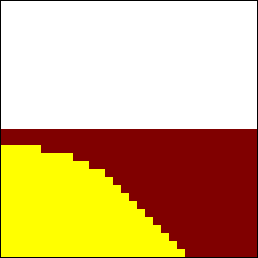

Example camera frame when driving forward.

Example camera frame when driving right.

The examples above show what typical (camera / vision sensor) frames look like for the three possible categories: driving left (and forward), driving forward, driving right (and forward). Since there is no way to tell from a single frame whether the car is driving backwards or is stopped, the car can only drive automatically in the forward direction.

Quality of training data

The major reason for this project was that neural nets proved to be sensitive to noise and were not predicting reliably, when trained in my previous RC car based project. So I tried to simplify the problem as much as possible, and make "debugging" easier.

One approach was to apply filters to the camera to make objects appear in solid colors (white, brown, yellow, as seen in the frames above), with no shading, lighting or other artifacts that might be randomly correlated with steering commands. This made it more likely that the correlations the network picks up are the actual distinctive features that should control steering. In other words, I was trying to avoid overfitting.

Another approach was to radically reduce training data: instead of training on many slight variations of the same track, or giving many slight variations of exemplary steering along those tracks, I provided only two (but perfect and clearly contrasting) examples.

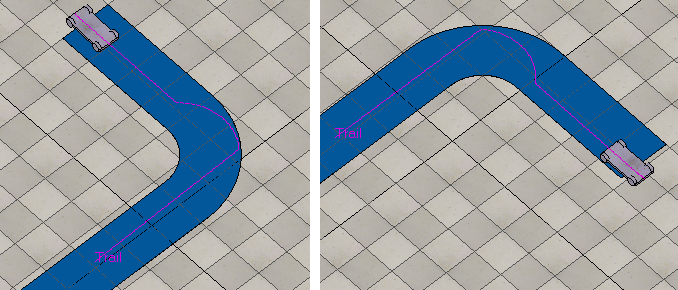

Training examples: manual driving with arrow keys to create a perfect left/right turn.

The purple “Trail” shows the driven path (geometrically clean after several tries).

After several tries, I managed to drive the car along the two tracks above, with a perfectly clean / ideal trail, with no unintentional / erroneous steering. This prevents collecting confusing information, where some camera frames are correlated with erroneous steering.

Usually, training data is full of contradictions and little errors, which is not tragic as long as they make up only a small portion of the overall data. Still, cleaner training data leads to a cleaner model. Fewer but more representative examples also make it easier to determine the key visual features.

After experimenting with various architectures, I settled on a transfer learning approach to reduce training time and the number of training examples needed. As predictions have to be made with low latency, so that the car can react in real time, the model should be relatively small.

I ended up picking resnet18, which is a convolutional neural network (CNN) with a depth of 18 layers, and a native image input size of 224×224 pixels. It provides a good balance between accuracy and speed in my experiments (and with my hardware setup).

As mentioned earlier, the collected data is a sequence of (current-camera-frame, steering-command) pairs, or in other words, labeled images. This data is uploaded to an IPython/Jupyter notebook on Google Colab for training on dedicated hardware.

The final training accuracy was 0.985337 (98.5%) with a training loss of 0.045329 and validation loss of 0.028133. The exact values vary a bit each time training is redone, but remain close in value.

Interestingly, the convolutional neural network (CNN) seems to interpolate nicely between the provided training examples, and is able to handle unknown degrees of road bends.

The learned automated driving generalizes better than expected, even managing road crossings that were not in the training data set. The car “hesitates” and wants to take a right turn, but it turns left again when it comes close to the end of the crossing and drives through successfully.

When positioned outside of the track facing it at a slight angle (not shown in videos), the car also manages to steer in the “hinted” direction and aligns properly with the track, which has not been trained either!

Overall, I am rather pleased with the results: the curvature of the bends on the testing tracks varies constantly and differs clearly from the 90° bend of the training tracks. Yet the car manages to approximate the curves by using the most appropriate steering command available, most of the time.

Smoother driving could probably be achieved with more variations in the training data. On the other hand, the reduced training variations prevent simple memorizing/overfitting and instead force generalization, as many cases were never seen during training. Providing a few clearly distinct but representative examples that "span" the space of possibilities proved to be a good approach.

In the future, I would like to explore generating training data automatically (by using other approaches to identify ideal paths, then letting the car follow such a path with traditional algorithms while collecting training data), as well as using regression instead of classification.

One major roadblock in machine learning, compared to traditional development, is that training and testing are a lot slower, even with dedicated hardware. While Google Colab helped, upload speed and training data collection limit the iteration frequency.

More details on the approach and future work are available on my blog: Self-driving car based on deep learning.