The key objective is to develop the basic capabilities that any robot needs:

- recognising its surroundings and building a 2D / 3D map from them

- navigating within the environment and verifying its position

- avoiding dynamic obstacles

All of the required code shall be written in Python (Cython) or C.

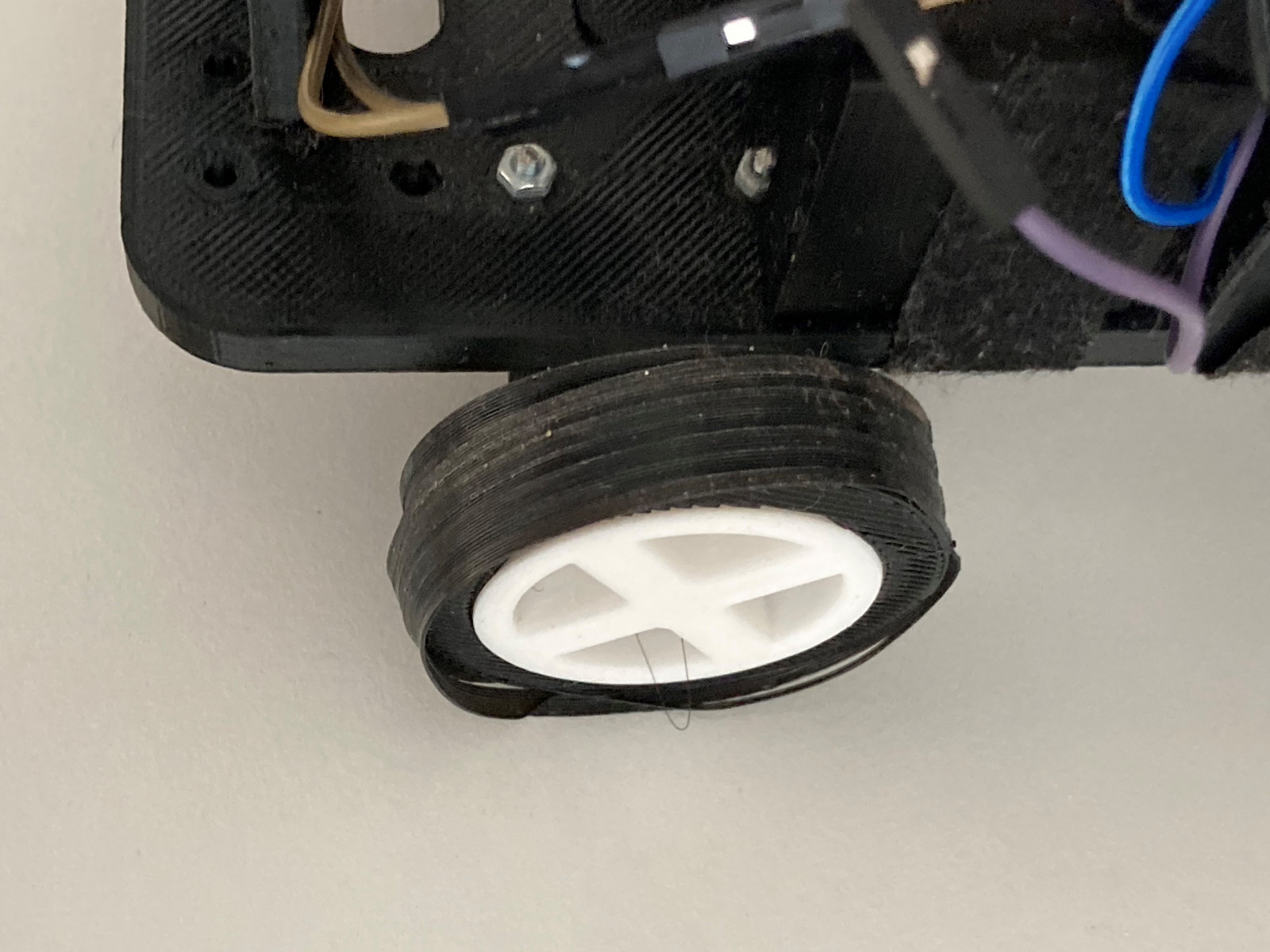

The hardware is self-designed and 3D printed; all other parts are low-cost.

The project is split into the following steps, worked on in parallel as far as possible:

- Hardware design and 3D printing

- 2D / 3D Mapping

- Navigation

- Drive Control with dynamic obstacle avoidance

- Integration

More information, videos and the latest progress of the project can be found at www.to-bots.com

All required parts were designed in FreeCAD and printed on a Flashforge Dreamer.

The result can be seen in the overview picture.

Lessons Learned:

- use a metal chassis, preferably with mecanum wheels, tracks or a steering gear

- use tyres that are not 3D printed (see delamination picture)

Not perfect, but at least completed and good enough to focus on the code part :)

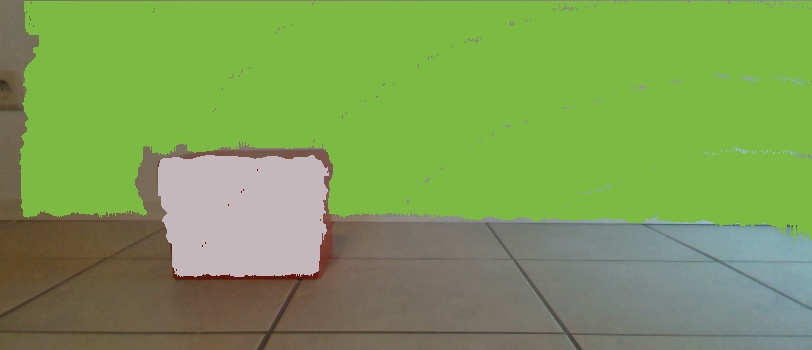

The key feature: using the depth image to identify objects and generate a 2D / 3D map.

Object identification (static / dynamic)

GREEN - Floor (driveable)

GREY - Wall

RED - Obstacle (dynamic / static)

YELLOW - inconclusive
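The project text does not state how a depth sample ends up in one of the four classes. The following is only a minimal sketch of one possible rule, assuming each image column has already been converted to heights above the floor in metres. The thresholds and the names `Label` and `classify_column` are hypothetical and are not taken from the project code.

```python
from enum import Enum


class Label(Enum):
    """Colour legend from the text above."""
    FLOOR = "green"
    WALL = "grey"
    OBSTACLE = "red"
    INCONCLUSIVE = "yellow"


# Hypothetical thresholds, chosen for illustration only.
FLOOR_MAX_HEIGHT_M = 0.03
WALL_MIN_HEIGHT_M = 1.0


def classify_column(heights_m):
    """Classify one vertical column of depth samples.

    heights_m: heights above the floor in metres, None for invalid samples.
    """
    valid = [h for h in heights_m if h is not None]
    if not valid:
        return Label.INCONCLUSIVE  # no usable depth data
    top = max(valid)
    if top <= FLOOR_MAX_HEIGHT_M:
        return Label.FLOOR         # nothing rises above the ground
    if top >= WALL_MIN_HEIGHT_M:
        return Label.WALL          # tall structure
    return Label.OBSTACLE          # something in between


if __name__ == "__main__":
    print(classify_column([0.0, 0.01]).value)
    print(classify_column([0.0, 0.4]).value)
```

A height rule like this cannot tell static from dynamic obstacles; that would need a comparison between consecutive scans.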

Initial Object Detection Video

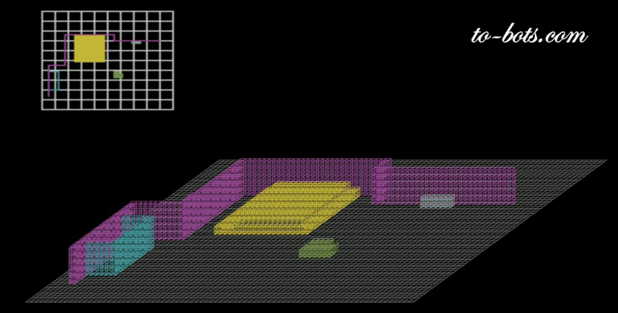

Develop 2D / 3D Map

Includes the "Map Builder", which joins multiple scans and their objects together.

Individual colors for each object

Example 2D & 3D Map

To see the "object merger" / "map builder" on a sample case, please refer to the following video:

Environment Scan to 3D Map

Currently WIP - to be continuously updated

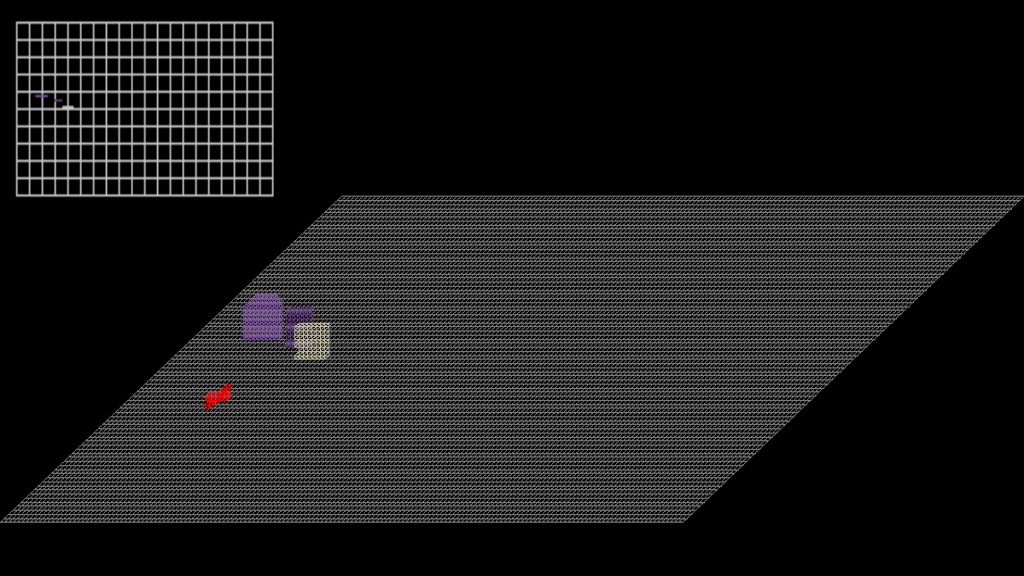

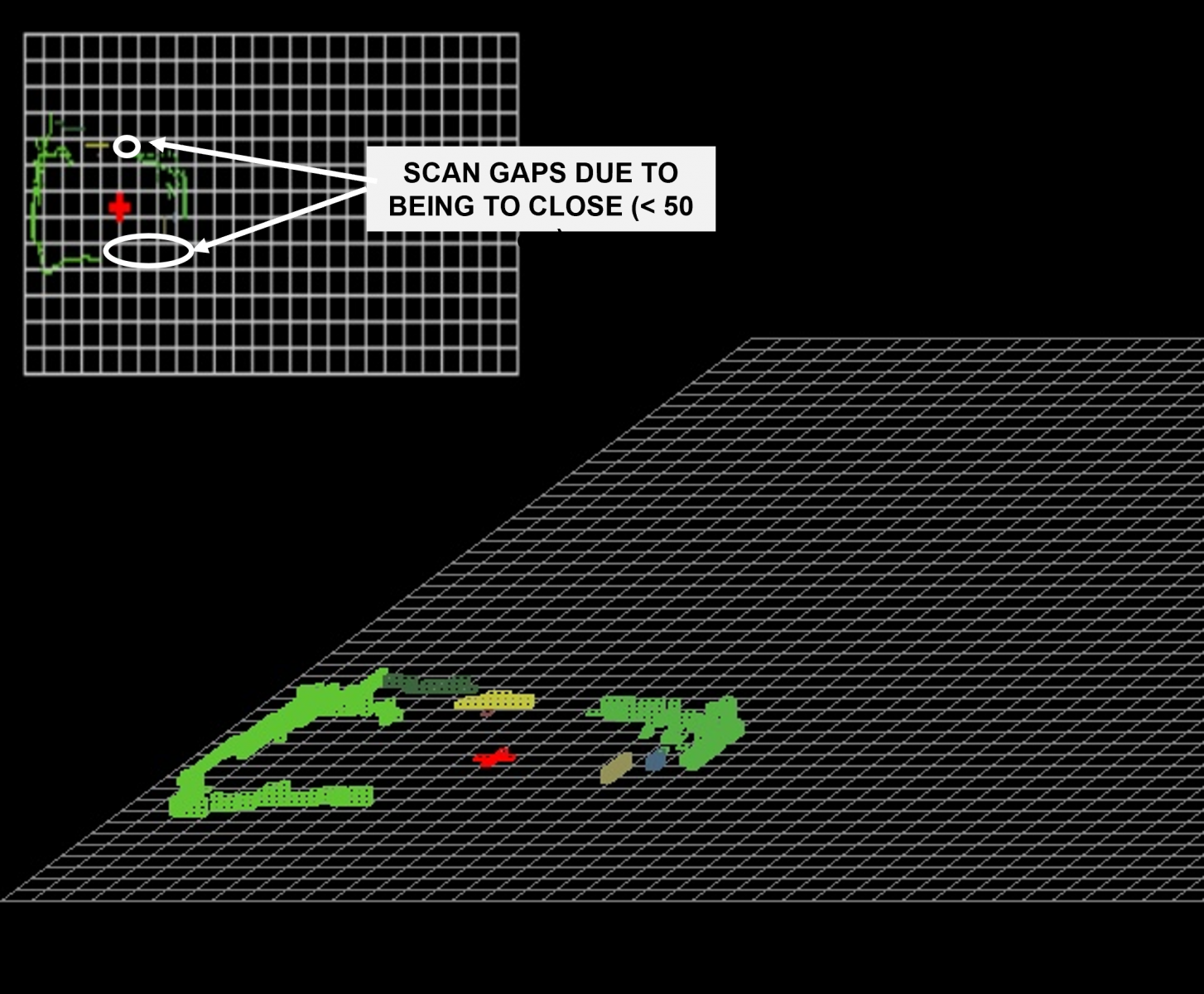

Testing Example:

Environment

Object Detection (each identified object gets individual color)

Transfer to 3D Grid (simplified objects)

The red cross marks the current robot position.

Further Example:

Note: the grid only shows a simplified version of the detected object
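The simplification mentioned in the note can be pictured as snapping every detected point to a coarse grid cell, so that many points collapse into a few cells per object. This is only a rough sketch: the 10 cm cell size, the input format and the function name `to_grid` are assumptions made for illustration.

```python
import math


def to_grid(points, cell_size_m=0.1):
    """Map labelled 3D points to grid cells.

    points: iterable of (x, y, z, object_id), coordinates in metres.
    Returns a dict {(ix, iy, iz): object_id}; if several objects fall
    into the same cell, the last one seen wins.
    """
    grid = {}
    for x, y, z, object_id in points:
        cell = (
            math.floor(x / cell_size_m),
            math.floor(y / cell_size_m),
            math.floor(z / cell_size_m),
        )
        grid[cell] = object_id
    return grid


if __name__ == "__main__":
    scan = [
        (0.21, 0.05, 0.0, 1),
        (0.29, 0.09, 0.0, 1),  # same cell as the point above
        (1.52, 0.45, 0.3, 2),
    ]
    print(to_grid(scan))
```

Using `math.floor` instead of `int()` keeps cells consistent for negative coordinates, which appear as soon as the robot's start position is the map origin.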

UPDATE 25-10-2021

Initial 360 Degree Scan - SLAM - Result

Initial environment scan of an example room, a small tiled room.

The holes, marked with a white ring in the picture, are due to the current setting: objects that are 50 cm or less away from the robot are not scanned. The robot scans, drives forward and rotates by 90 degrees three times, scanning the environment each time.

The key problem is the lack of rotation accuracy, which is the main cause of the noisy 3D map.
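To illustrate why rotation accuracy matters so much, here is a minimal 2D sketch of merging one scan into the map. It drops points at 50 cm or closer (as described above) and rotates the rest by the assumed heading. The pose format and the function names are hypothetical; the real pipeline works on 3D data and is not shown in this write-up.

```python
import math

MIN_RANGE_M = 0.5  # from the text: objects 50 cm or less away are not scanned


def scan_to_map(points_xy, pose):
    """Transform one scan from the robot frame into the map frame.

    points_xy: (x, y) points in metres, x pointing forward from the robot.
    pose: (x, y, heading_deg) of the robot in the map frame (assumed format).
    """
    px, py, heading_deg = pose
    c = math.cos(math.radians(heading_deg))
    s = math.sin(math.radians(heading_deg))
    merged = []
    for x, y in points_xy:
        if math.hypot(x, y) <= MIN_RANGE_M:
            continue  # too close: this leaves a hole in the map
        merged.append((px + c * x - s * y, py + s * x + c * y))
    return merged


def displacement_from_heading_error(range_m, error_deg):
    """Distance by which a point at range_m is misplaced in the map
    when the assumed heading is off by error_deg (chord length)."""
    return 2.0 * range_m * math.sin(math.radians(abs(error_deg)) / 2.0)


if __name__ == "__main__":
    print(scan_to_map([(1.0, 0.0), (0.3, 0.0)], (0.0, 0.0, 90.0)))
    print(displacement_from_heading_error(2.0, 5.0))
```

Because the misplacement grows linearly with range, even a heading error of a few degrees per 90 degree turn smears walls on the far side of the room, and the error accumulates over the three rotations.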

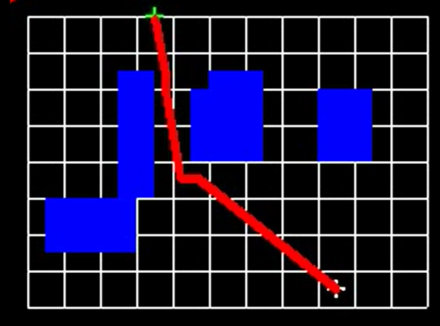

Write a simple algorithm to create a route from current position to target
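The write-up does not say which routing algorithm is used. As one example of a simple approach, the sketch below runs a breadth-first search on a 2D occupancy grid, which returns a shortest route in number of steps when only 4-connected moves are allowed. The grid encoding (0 = free, 1 = blocked) and the name `find_route` are assumptions.

```python
from collections import deque


def find_route(grid, start, goal):
    """Breadth-first search on a 2D grid.

    grid: list of rows, 0 = driveable, 1 = blocked.
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


if __name__ == "__main__":
    room = [
        [0, 0, 0],
        [1, 1, 0],
        [0, 0, 0],
    ]
    print(find_route(room, (0, 0), (2, 0)))
```

A search like this treats the robot as a point; in practice obstacles would need to be inflated by the robot's radius before planning.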

Currently WIP - to be continuously updated

Version 0.3

For the full effect, please have a look at the following slowed-down video:

Drive control - two key topics:

CAMERA BASED SELF CALIBRATION

As there are no Hall sensors in the motor, a camera-based self-calibration sequence has been developed for rotation and for drive speed.

Steps:

- find a suitable reference object

- drive for 1 second and measure the driven distance against the reference object

- check which rotation direction is best for the rotation calibration

- rotate 45 degrees and correct until the reference is achieved

- rotate back 45 degrees and correct again

- rotate 45 degrees and drive off after successful completion
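The calibration steps above can be sketched roughly as follows. The drive speed follows from the change in camera-measured distance to the reference object over the drive time, and the rotation is corrected in a loop until the measured angle is within a tolerance. The callbacks `rotate` and `measure_rotation_deg`, the tolerance and the simulated robot are all hypothetical stand-ins; the actual motor and camera interfaces are not described in this write-up.

```python
def drive_speed_m_per_s(dist_before_m, dist_after_m, duration_s=1.0):
    """Speed from the distance to the reference object before and after driving."""
    return (dist_before_m - dist_after_m) / duration_s


def rotate_with_correction(rotate, measure_rotation_deg,
                           target_deg=45.0, tolerance_deg=1.0, max_steps=10):
    """Rotate towards target_deg, re-measuring against the reference each time.

    rotate(angle_deg): commands a rotation (hypothetical motor interface).
    measure_rotation_deg(): rotation achieved so far, as seen by the camera.
    Returns (achieved_deg, commanded_total_deg).
    """
    commanded_total = 0.0
    achieved = measure_rotation_deg()
    for _ in range(max_steps):
        error = target_deg - achieved
        if abs(error) <= tolerance_deg:
            break
        rotate(error)              # command the remaining angle
        commanded_total += error
        achieved = measure_rotation_deg()
    return achieved, commanded_total


class SimulatedRobot:
    """Stand-in for the real robot: only reaches a fraction of each command."""

    def __init__(self, gain):
        self.gain = gain
        self.angle_deg = 0.0

    def rotate(self, angle_deg):
        self.angle_deg += self.gain * angle_deg

    def measure_rotation_deg(self):
        return self.angle_deg


if __name__ == "__main__":
    robot = SimulatedRobot(gain=0.8)
    achieved, commanded = rotate_with_correction(robot.rotate,
                                                 robot.measure_rotation_deg)
    # The ratio is a scale factor that could be applied to later commands.
    print(achieved, commanded, achieved / commanded)
```

The ratio of achieved to commanded rotation is one way to turn the correction loop into a reusable calibration factor; whether the project stores such a factor is not stated.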

DYNAMIC OBSTACLE AVOIDANCE

Random target definition with obstacle avoidance - static & dynamic

OPEN:

- Final build and testing

- Final drive control

The original platform has been decommissioned and an updated version created.

The Final Version

The updated version features a simple 3 DoF arm; complete robot testing started in April 2021.

Initial Capability Target:

Map the environment, identify the target object, pick it up and deliver it to a defined target.

Includes object detection with a simple map and independent object classification.

Please enjoy the Video below - Mission: ball to bowl

For more information on the project: www.to-bots.com