【The first AI LLM + embodied-intelligence robot dog】DOGZILLA-Lite is the world's first educational robot dog to combine multimodal large models with embodied intelligence. Its built-in Raspberry Pi module supports multiple AI vision functions such as face detection and object recognition. It is not only a walking robot but also an AI partner that can understand images, voice, and its environment, and make autonomous decisions.

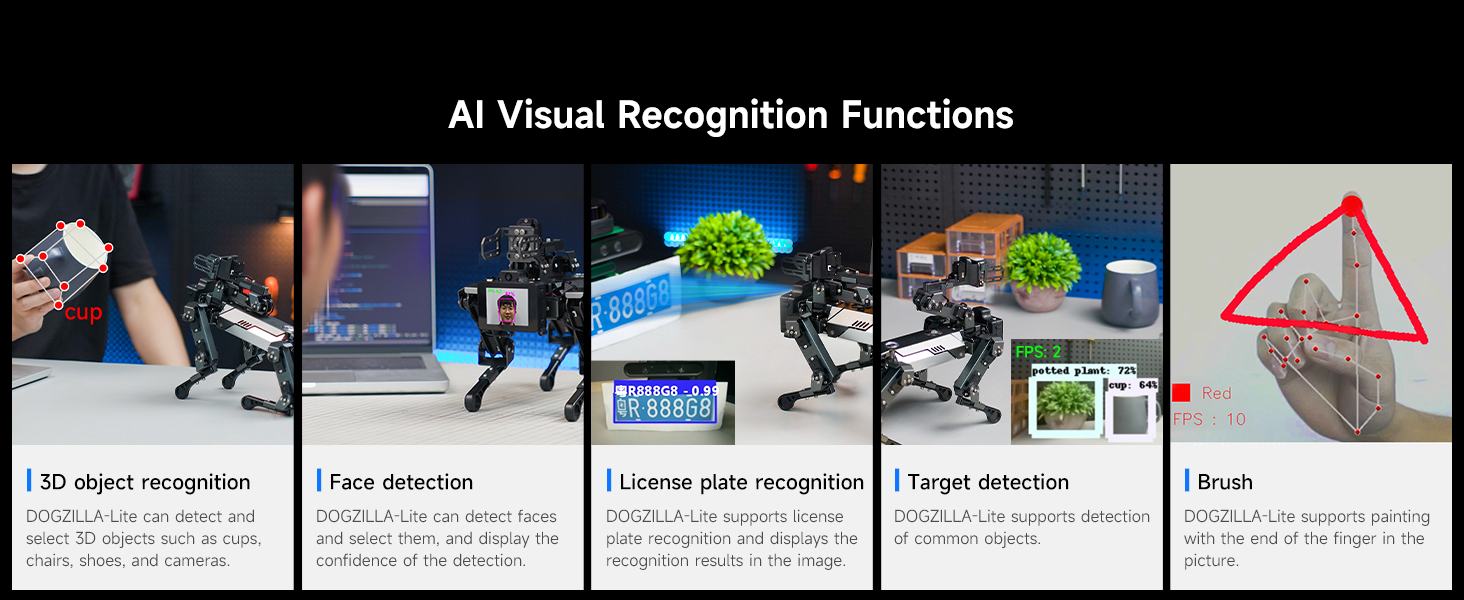

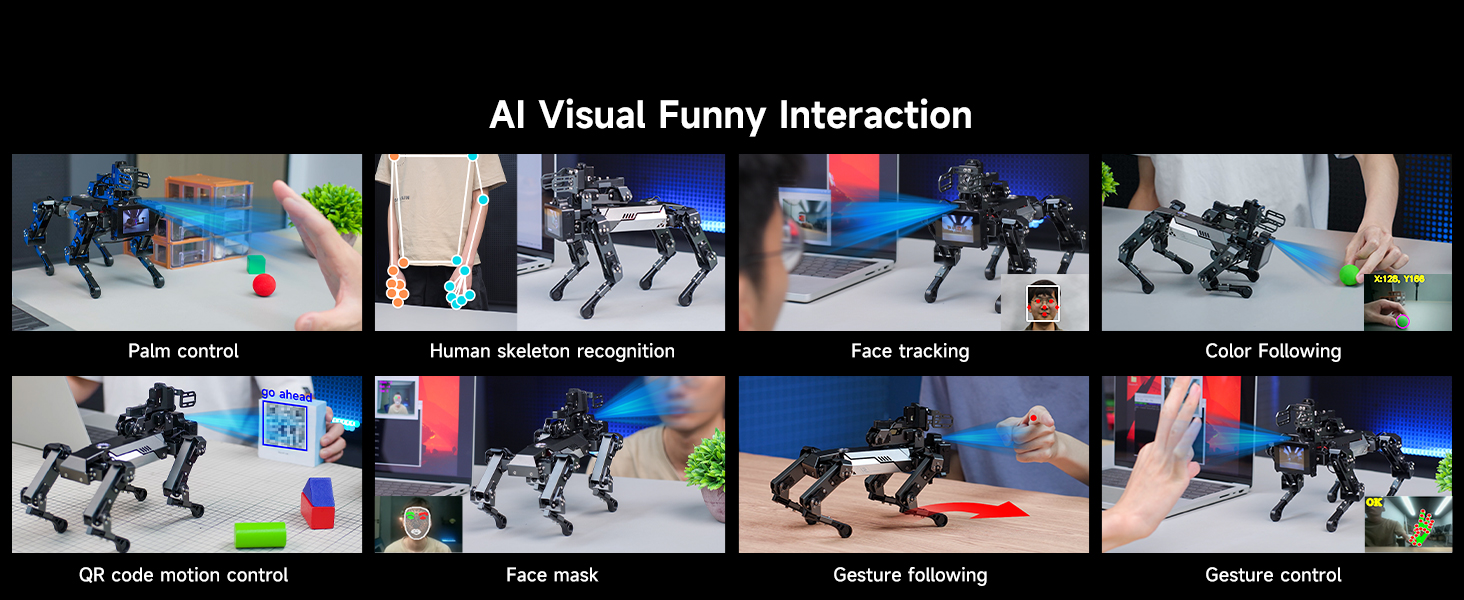

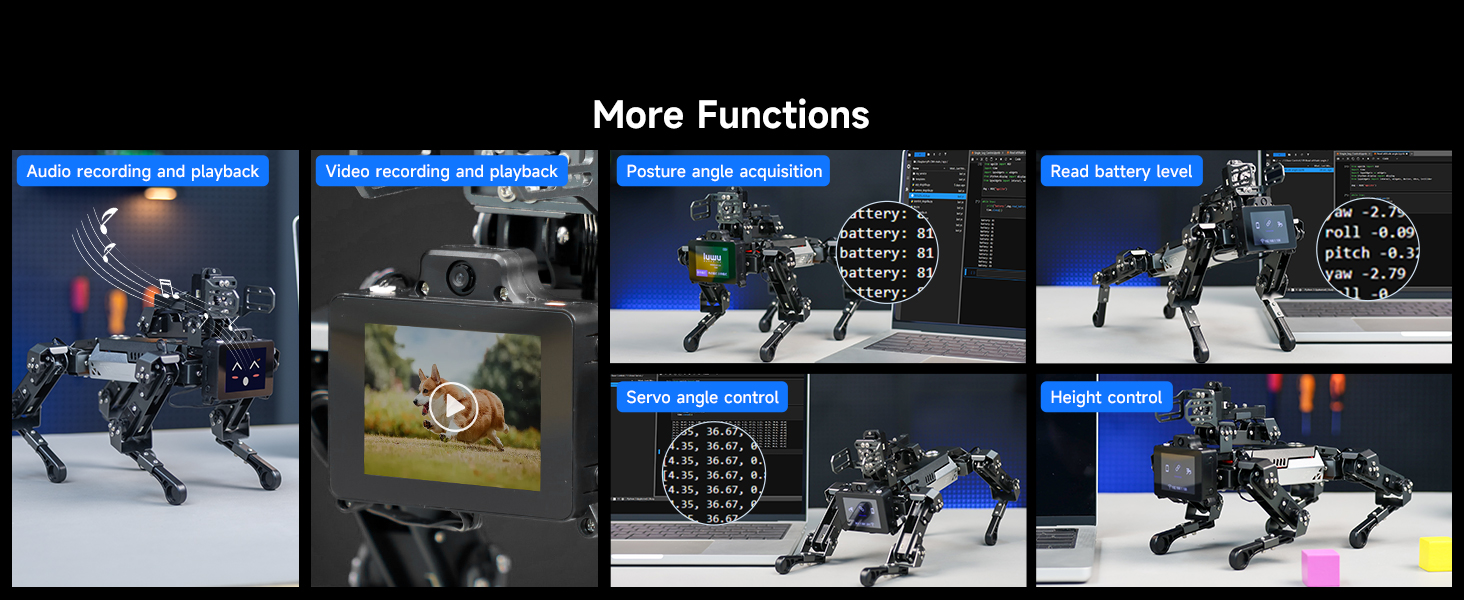

【Robot arm expansion & AI vision technology】DOGZILLA-Lite supports an add-on 3DOF robot arm for autonomous grasping and carrying of objects. The pre-installed GUI system includes more than 40 AI vision and voice programs, covering 3D object recognition, color and face recognition, AI visual interaction, and many other functions for creative projects. Note: The robot arm can only grab standard EVA blocks and balls.

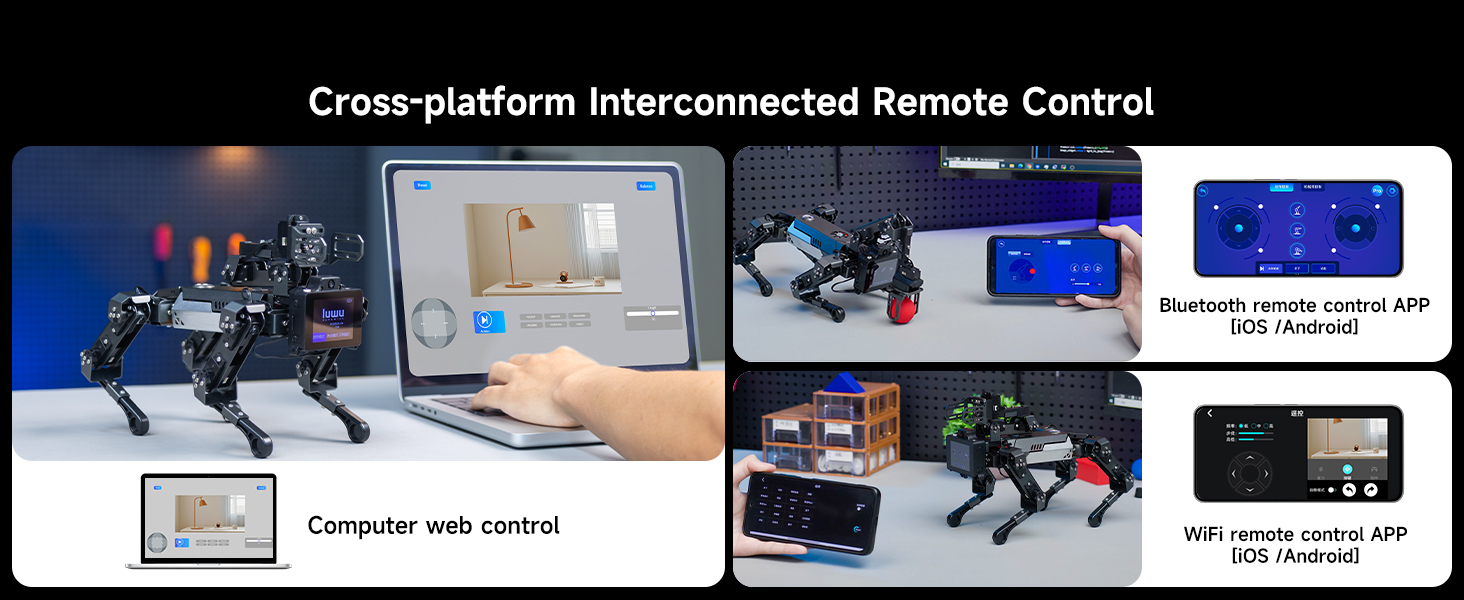

【Multiple control methods and real-time images】You can control DOGZILLA-Lite through the XGO APP on Android and iOS devices or through PC software. DOGZILLA-Lite can also transmit real-time images to the application for a first-person control experience. Yahboomrobot can control the robot dog's movement and display the video feed, but it cannot control the robot arm.

【Gait planning, free adjustment】DOGZILLA-Lite integrates inverse kinematics algorithms to accurately control the ground contact time, lift time, and lift height of each leg. You can adjust these parameters to produce different gaits. A detailed inverse kinematics analysis and the source code of the inverse kinematics functions are provided.
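As a rough illustration of how these three gait parameters can drive a leg, here is a minimal Python sketch. It is not Yahboom's source code: the planar two-link leg model, the link lengths, and the function names `leg_ik`, `leg_fk`, and `foot_target` are all assumptions made for the example. The foot slides backward during the ground contact time, swings forward along a half-sine arc during the lift time, and inverse kinematics turns each foot position into joint angles.

```python
import math

# Hypothetical link lengths in millimetres; NOT DOGZILLA-Lite's real dimensions.
UPPER_LEN = 60.0
LOWER_LEN = 60.0


def leg_ik(x, z, l1=UPPER_LEN, l2=LOWER_LEN):
    """Return (hip, knee) angles in radians for a planar two-link leg.

    The hip joint is at the origin, x points forward and z points down.
    hip is the upper link's angle from the downward vertical,
    knee is the bend between the two links (0 means fully straight).
    """
    d = math.hypot(x, z)
    if d > l1 + l2 or d < abs(l1 - l2):
        raise ValueError("foot target is out of reach")
    # The law of cosines gives the knee bend.
    cos_knee = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))
    # Direction to the foot, corrected for the offset caused by the knee bend.
    hip = math.atan2(x, z) - math.atan2(l2 * math.sin(knee),
                                        l1 + l2 * math.cos(knee))
    return hip, knee


def leg_fk(hip, knee, l1=UPPER_LEN, l2=LOWER_LEN):
    """Forward kinematics, used here only to check the IK result."""
    x = l1 * math.sin(hip) + l2 * math.sin(hip + knee)
    z = l1 * math.cos(hip) + l2 * math.cos(hip + knee)
    return x, z


def foot_target(t, contact_time, lift_time, lift_height, stride, stand_height):
    """Foot position (x, z) at time t within one gait cycle.

    Contact phase: the foot slides backward at ground level.
    Lift phase: the foot swings forward along a half-sine arc.
    """
    t = t % (contact_time + lift_time)
    if t < contact_time:
        s = t / contact_time
        return stride / 2 - stride * s, stand_height
    s = (t - contact_time) / lift_time
    # z points down, so lifting the foot reduces z.
    z = stand_height - lift_height * math.sin(math.pi * s)
    return -stride / 2 + stride * s, z


if __name__ == "__main__":
    # Sample one cycle: 0.3 s on the ground, 0.2 s in the air, 20 mm lift.
    for i in range(10):
        x, z = foot_target(i * 0.05, 0.3, 0.2, 20.0, 40.0, 90.0)
        hip, knee = leg_ik(x, z)
        print(f"x={x:6.1f} z={z:6.1f} "
              f"hip={math.degrees(hip):6.1f} knee={math.degrees(knee):6.1f}")
```

In this sketch, lengthening `contact_time` relative to `lift_time` keeps each foot on the ground for a larger share of the cycle, and raising `lift_height` gives more ground clearance. A real quadruped gait would also offset the phase of each of the four legs.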

【Why choose DOGZILLA-Lite?】It is not just a toy but a ticket to the future. Students use it to understand AI principles, geeks use it to develop autonomous driving algorithms, and families use it as an interactive technology partner. Yahboom provides open-source materials and code for AI visual interaction, OpenCV, and AI LLM, along with technical support.

◆Large Language Model

DOGZILLA-Lite connects to the large model in real time, so it can not only understand text instructions but also respond flexibly.

◆Voice Large Model

DOGZILLA-Lite is equipped with a highly sensitive microphone and a speaker, and supports real-time conversion between voice and text. Once connected to the large model, it delivers an intelligent interactive experience.

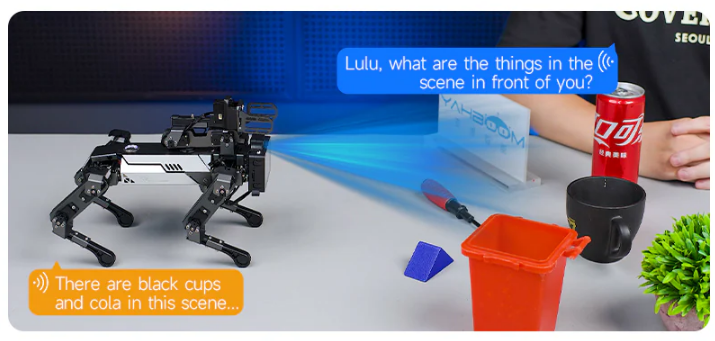

◆Visual Large Model

Equipped with a 5MP camera, DOGZILLA-Lite can understand and analyze image content, identify objects, and give text and voice feedback.

◆Scene understanding

Through the visual large model, DOGZILLA-Lite can understand the scene in its field of view and give text or voice feedback.

◆Voice control

DOGZILLA-Lite supports voice dialogue: it recognizes spoken input through the large model and responds.

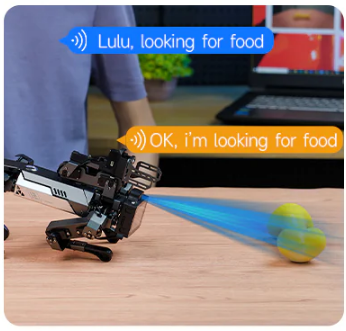

◆Identify & Select

Through the semantic understanding of the large language model, DOGZILLA-Lite can lock onto the target named in the command, adjust its body posture in real time, and accurately complete the football-playing action.

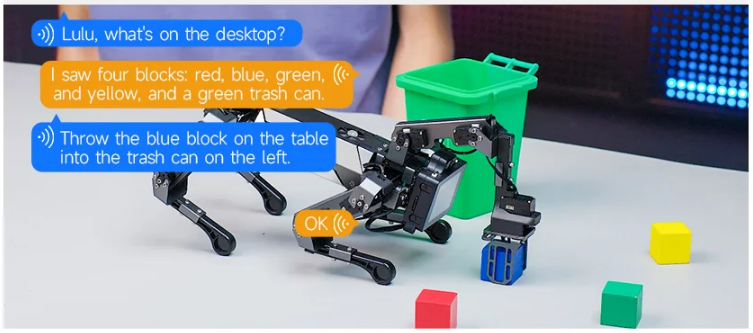

◆Embodied intelligent handling control

With the large language model, users can give voice commands that direct DOGZILLA-Lite to carry the specified objects to a designated location.

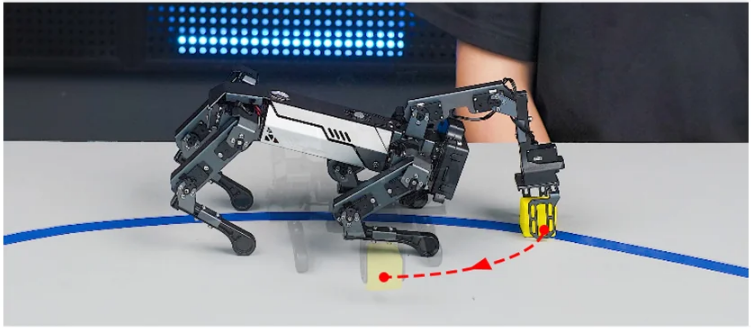

◆Line tracking and obstacle removal

Through AI vision, DOGZILLA-Lite can identify obstacles on the path during line tracking and use the robot arm to remove them.

DOGZILLA-Lite is not only a flexible robot dog. It can also complete a variety of visual recognition tasks, offering a new interactive experience and unlocking more interesting ways to play.

The pre-installed GUI program, developed by Yahboom, contains more than 40 functions and integrates most core features, so users can quickly try out the functional examples.

WiFi remote control APP

Bluetooth remote control APP

Tutorial Link: http://www.yahboom.net/study/DOGZILLA-Lite